Tom's Hardware Verdict

Gigabyte’s RX 5500 XT Gaming OC 8G proves 8GB of VRAM is needed when gaming with ‘ultra’ settings at 1080p. It also uses a quiet cooler and improves efficiency immensely over Polaris. But at its current $220 price, the GTX 1660 is a better value.

Pros

- 8GB VRAM
- Faster and more efficient than Sapphire Pulse 4GB
- Capable 1080p ‘ultra’ performance
- Robust Windforce 3 cooling

Cons

- 11-inch card won’t fit in all cases


With the recent release of AMD’s Radeon RX 5500 series graphics cards, the company’s 7nm Navi silicon finally made its way into the mainstream. The RX 5500 XT comes in 4GB and 8GB variants, with the 8GB version notably faster in some titles due to VRAM limits. In our launch day coverage of the 4GB model, where the card wasn’t held back by memory limitations, it performed between an Nvidia GeForce GTX 1650 Super and GTX 1660 in most titles and proved to be a capable 1080p gaming card.

Today, we have an 8GB variant on our test bench, the Gigabyte RX 5500 XT Gaming OC 8G. Compared to the Sapphire Pulse we tested previously, this card sports a larger heatsink with three fans and, of course, 8GB of GDDR6. Where the 4GB card struggled in some titles, the 8GB card shows the full potential of the Navi 14 XTX GPU powering it. We saw significant performance improvements in Forza Horizon 4, Battlefield V, Far Cry 5, and Shadow of the Tomb Raider, where frames per second (fps) results on the 4GB Sapphire model were abnormally low and this 8GB model delivers decidedly better performance.

At launch, AMD’s suggested prices for these cards were $169 and $199 for the 4GB and 8GB variants, respectively. At these price points, the cards compete with the GTX 1650 Super ($159.99-plus) and the GTX 1660 ($199.99-plus). Unless you plan on playing older titles or are OK with lowering in-game settings to work around the VRAM limitation in the 4GB card, the 8GB is the way to go. Pricing on current 8GB cards ranges from the SRP up to $239.99 for Sapphire’s Nitro version. The Gigabyte RX 5500 XT Gaming OC 8G we’re testing here is priced at $219.99.

Features

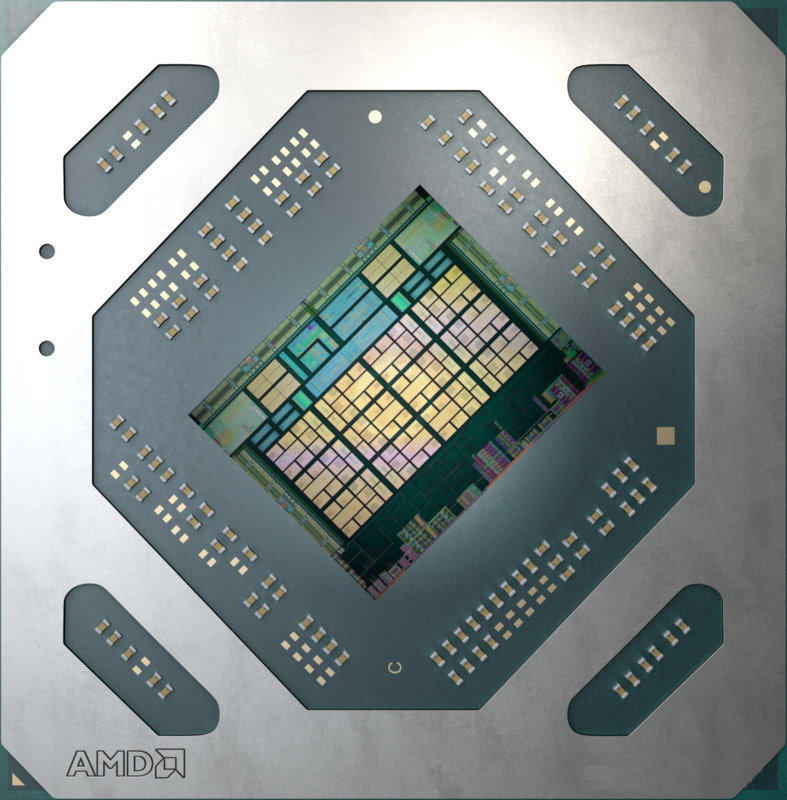

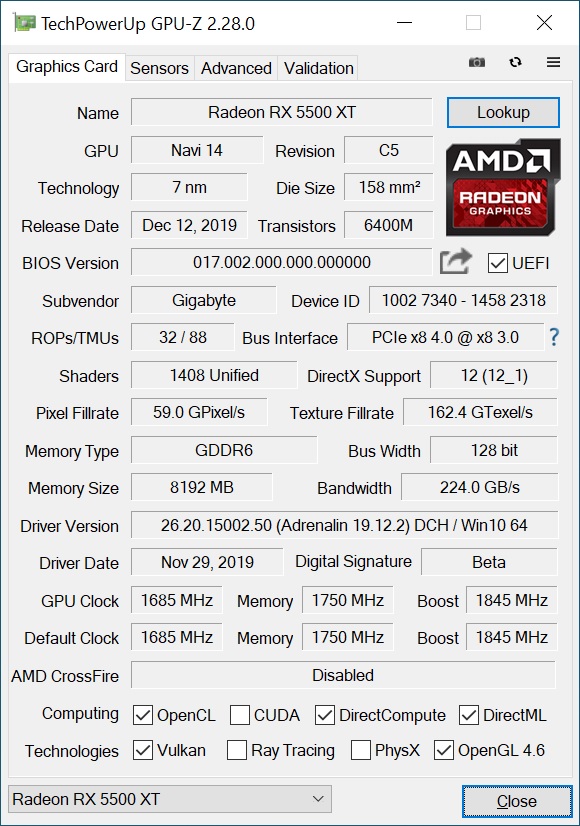

The RX 5500 XT uses the Navi 14 XTX GPU variant. The die is manufactured on TSMC’s 7nm FinFET process, with 6.4 billion transistors packed into a 158 mm² die. Under the hood, the Navi 14 XTX features 22 Compute Units (CUs) for a total of 1,408 Stream Processors. Each RDNA CU has four texture units, making for a sum of 88 TMUs, along with 32 ROPs.
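The unit counts quoted for Navi 14 XTX can be checked with a few lines of arithmetic. The sketch below is illustrative only and rests on two assumptions not stated in this review: 64 Stream Processors per CU (consistent with 22 CUs yielding 1,408) and two FP32 operations per Stream Processor per clock (one fused multiply-add). It uses the card's rated 1,845 MHz Boost clock for the peak compute figure.

```python
# Back-of-the-envelope check of the Navi 14 XTX unit counts.
# Assumptions (not stated in the review): 64 Stream Processors per CU,
# and 2 FP32 operations per Stream Processor per clock (one fused multiply-add).

COMPUTE_UNITS = 22
SP_PER_CU = 64               # assumed; consistent with 22 CUs -> 1,408 SPs
TMU_PER_CU = 4               # from the review: four texture units per CU
FLOPS_PER_SP_PER_CLOCK = 2   # assumed FMA rate
BOOST_CLOCK_MHZ = 1845       # rated Boost clock


def stream_processors(cus: int) -> int:
    """Total Stream Processors for a given CU count."""
    return cus * SP_PER_CU


def texture_units(cus: int) -> int:
    """Total texture units (TMUs) for a given CU count."""
    return cus * TMU_PER_CU


def peak_fp32_tflops(sps: int, clock_mhz: float) -> float:
    """Peak FP32 rate: SPs * ops per clock * clocks per second, in TFLOPS."""
    return sps * FLOPS_PER_SP_PER_CLOCK * clock_mhz * 1e6 / 1e12


if __name__ == "__main__":
    sps = stream_processors(COMPUTE_UNITS)
    print(sps)                                               # 1408
    print(texture_units(COMPUTE_UNITS))                      # 88
    print(round(peak_fp32_tflops(sps, BOOST_CLOCK_MHZ), 1))  # 5.2
```

Because sustained clocks on Navi tend to sit below the rated Boost, the result is best read as an upper bound rather than a measured figure.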

Reference clock speeds are listed as 1,607 MHz Base, 1,717 MHz Game clock, and 1,845 MHz Boost clock. AMD will not release a reference card, so clock speeds will vary with each board partner card. The Gigabyte RX 5500 XT Gaming OC 8G we have for review has a 1,685 MHz Base clock (+78), a 1,737 MHz Game clock (+20), and a Boost clock listed as 1,845 MHz (+0). Actual core clock speeds will be much closer to the Game clock than the Boost clock, as has been the trend with Navi.

The 4GB variant we tested previously sits on a 128-bit bus with a reference memory speed of 1,750 MHz (14 Gbps effective GDDR6). The 8GB card retains the same specifications, but with a higher capacity. Later on, we’ll see that the 4GB card is notably slower in some games at ultra settings.
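For readers who want to see how bus width and data rate translate into bandwidth, here is a minimal sketch. The function name is ours and purely illustrative. It multiplies the bus width in bits by the per-pin data rate in Gbps, then divides by eight to convert bits to bytes.

```python
def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width * per-pin data rate / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


if __name__ == "__main__":
    # RX 5500 XT: 128-bit bus, 14 Gbps GDDR6
    print(memory_bandwidth_gbs(128, 14))  # 224.0
    # A 256-bit bus at the same 14 Gbps data rate doubles the figure
    print(memory_bandwidth_gbs(256, 14))  # 448.0
```

Capacity does not enter the calculation, which is why the 4GB and 8GB cards share the same peak bandwidth.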

Power consumption on the 4GB part comes in at 130W in reference form. The 8GB card will have increased power targets for the additional memory, so power use will likely be a bit higher on this card compared to our 4GB sample. Feeding power to the Gigabyte card is a single required 8-pin connector.


For comparison, the reference GTX 1660 and GTX 1660 Ti are rated at 120W and the GTX 1650 Super at 100W. While we have seen improvements in performance per watt, Navi is still a bit behind the competition on that front. That said, the difference isn’t something you are likely to notice on your electric bill or in managing the heat in your case.

The reference specification for the Radeon RX 5500 XT calls for at least one HDMI, one DisplayPort, and one DVI port. The Gigabyte card changes that up and is equipped with three DisplayPorts and one HDMI port.

Below is a detailed specifications table covering the new GPUs:

| | Gigabyte RX 5500 XT Gaming OC 8G | Sapphire Radeon RX 5500 XT Pulse 4GB | Radeon RX 5700 |
|---|---|---|---|
| Architecture (GPU) | RDNA (Navi 14 XTX) | RDNA (Navi 14 XTX) | RDNA (Navi 10) |
| ALUs/Stream Processors | 1408 | 1408 | 2304 |
| Peak FP32 Compute (Based on Typical Boost) | 5.2 TFLOPS | 5.2 TFLOPS | 7.5 TFLOPS |
| Tensor Cores | N/A | N/A | N/A |
| RT Cores | N/A | N/A | N/A |
| Texture Units | 88 | 88 | 144 |
| ROPs | 32 | 32 | 64 |
| Base Clock Rate | 1685 MHz | 1607 MHz | 1465 MHz |
| Nvidia Boost/AMD Game Rate | 1737 MHz | 1717 MHz | 1625 MHz |
| AMD Boost Rate | 1845 MHz | 1845 MHz | 1725 MHz |
| Memory Capacity | 8GB GDDR6 | 4/8GB GDDR6 | 8GB GDDR6 |
| Memory Bus | 128-bit | 128-bit | 256-bit |
| Memory Bandwidth | 224 GB/s | 224 GB/s | 448 GB/s |
| L2 Cache | 2MB | 2MB | 4MB |
| TDP | 130W | 130W | 177W (measured) |
| Transistor Count | 6.4 billion | 6.4 billion | 10.3 billion |
| Die Size | 158 mm² | 158 mm² | 251 mm² |

Design

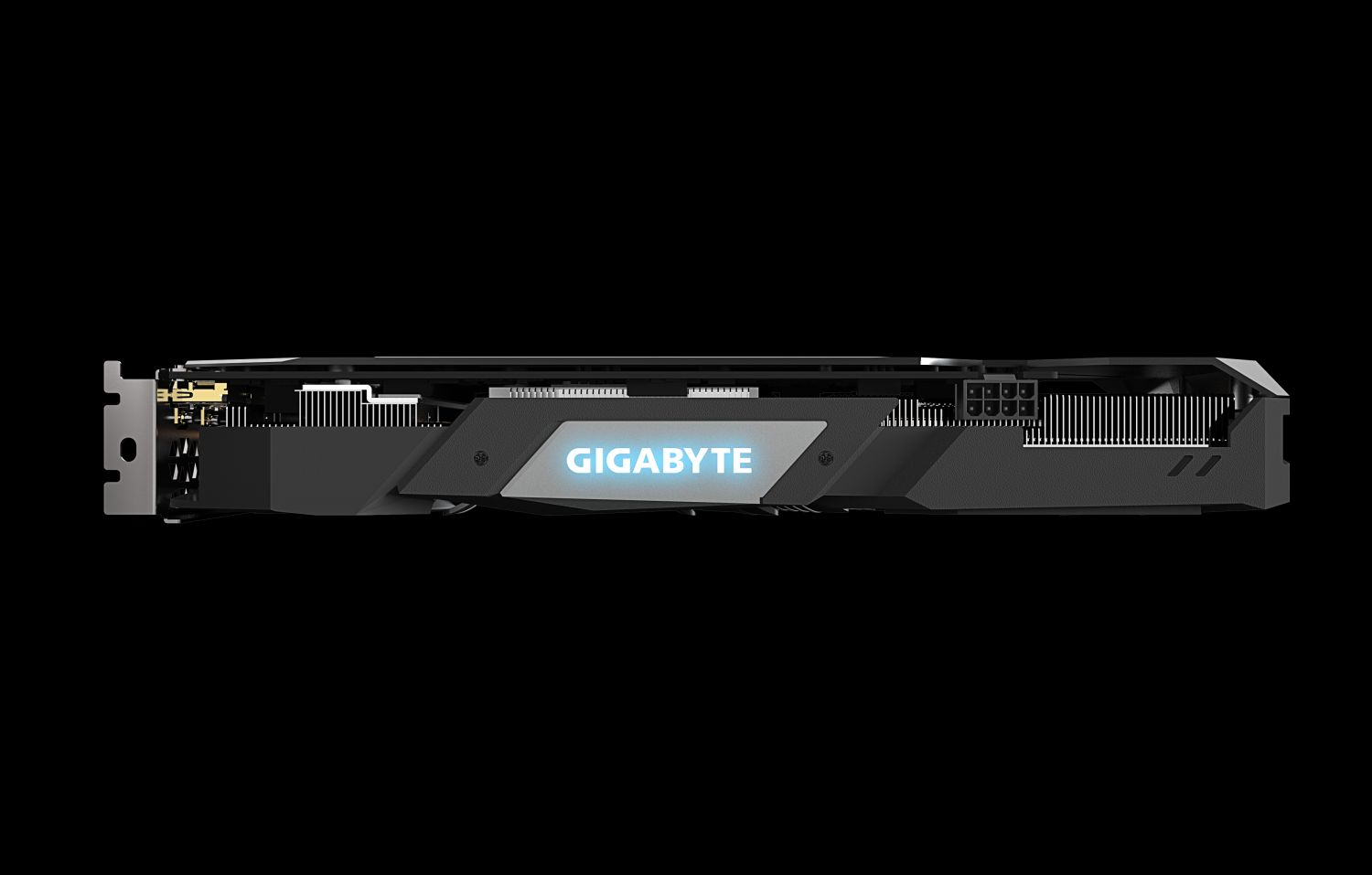

The Gaming OC 8G card we have measures 11 x 4.75 x 1.57 inches (281 x 115 x 40mm), making this a true dual-slot card (meaning you can fit a card of some sort in the second slot below it, albeit with only millimeters of space). The card’s length is typical of a full-size video card and hangs a bit over the edge of a standard ATX motherboard. In other words, this isn’t a card designed for small form factor systems. While it should fit in most mid-tower or larger cases, be sure to confirm your case clearances before buying.

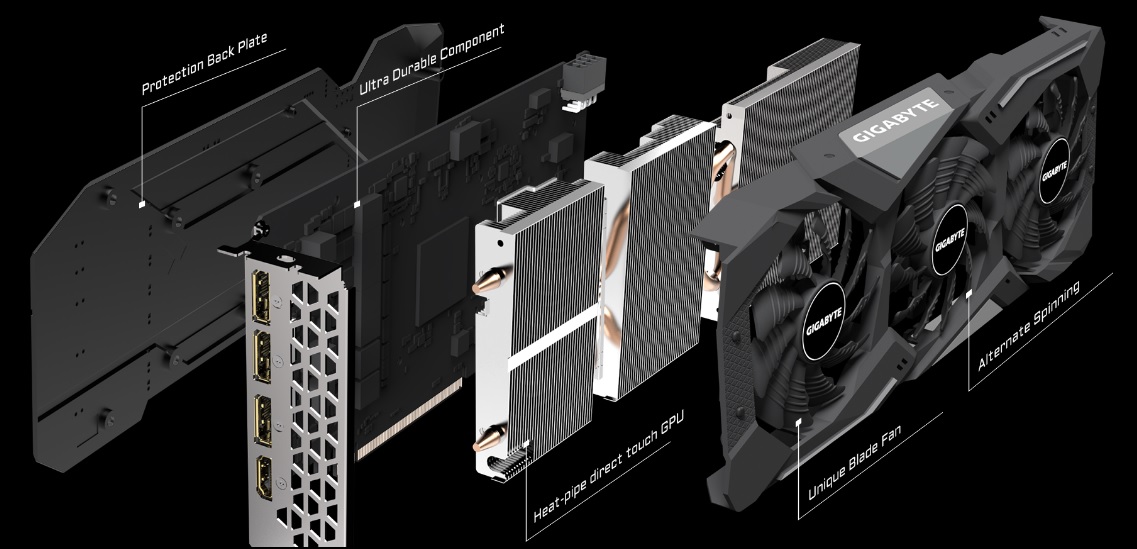

As far as looks go, the card features the Windforce 3x cooling system using three fans and a mostly black shroud. There are two grey accent pieces on the top and bottom edge, along with diamond-patterned accents on the ends. The card also comes with a matching black backplate designed to protect the PCB and increase rigidity. Overall, the card looks good and should fit in with most build themes.

The Windforce 3 cooling system consists of three 80mm fans, with the center fan spinning in the opposite direction of the two outside fans. Gigabyte says this cuts down on turbulence by causing the airflow between the fans to move in the same direction, increasing heat dissipation efficiency and enhancing airflow pressure. Each fan has a unique 3D stripe curve on its surface, said to enhance airflow as well.

The heatsink underneath uses two composite copper heat pipes to spread the load through the fin array. The heat pipes are flattened where they come into contact with the GPU die for increased contact area. A large aluminum plate makes contact with the MOSFETs and RAM, with thermal pads in between.

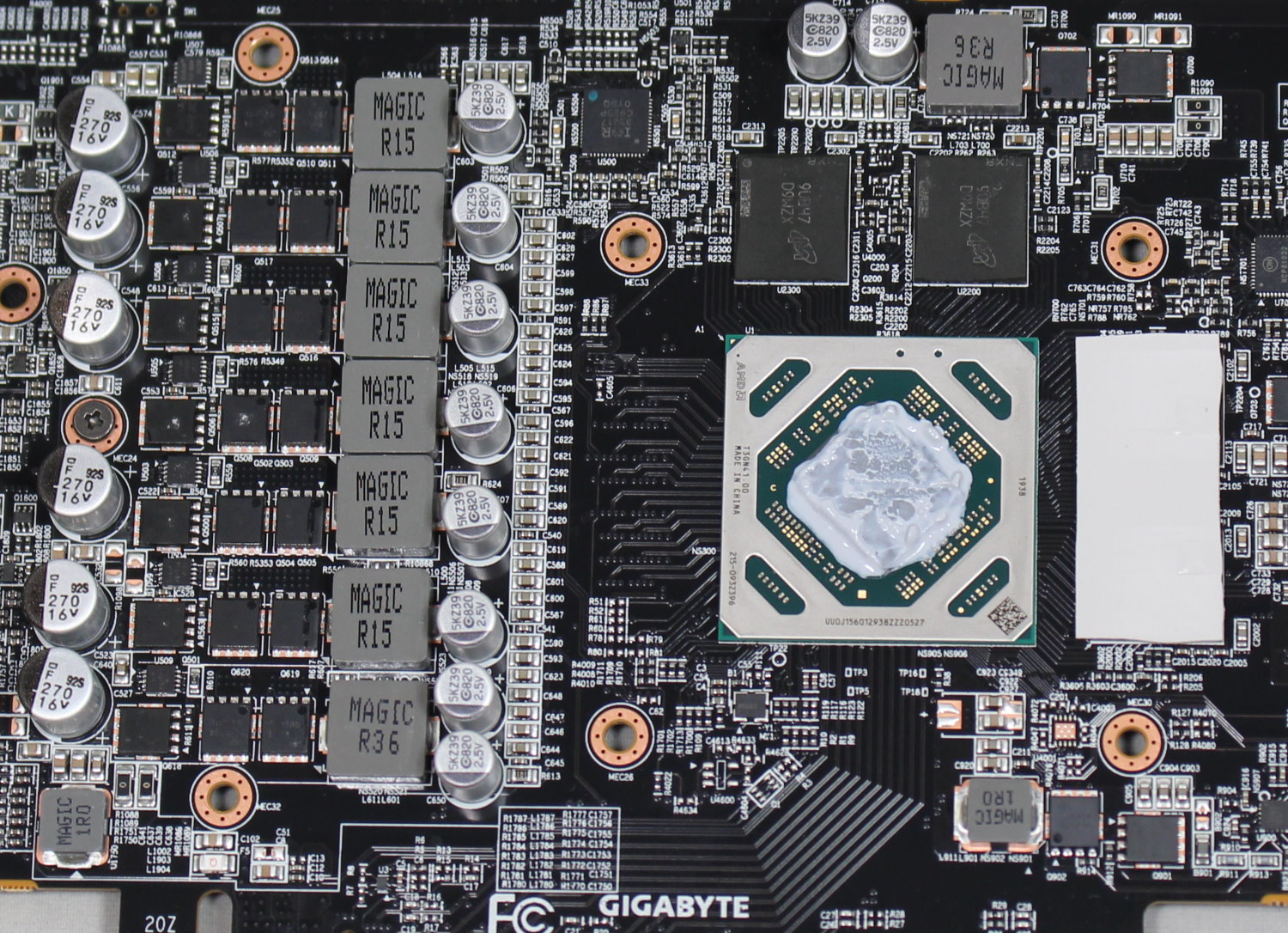

The Gigabyte card we have uses a 6+1 phase VRM to power the GPU and memory components. The VRM is managed by a high-quality International Rectifier IR35217 controller. Feeding power to these bits is a single 8-pin PCIe connector. Between that and the PCIe slot, the card can draw up to 225W of in-specification power. For a 130W card on paper, this is more than enough for overclocking.
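The 225W figure is the sum of the standard PCIe power limits: 75W from the x16 slot plus 150W from an 8-pin connector. A small sketch of that budget follows. The helper name and connector table are ours for illustration; the 130W value is the reference rating quoted in this review, not a measured draw for this card.

```python
# In-specification power budget for a graphics card.
# Limits: 75W from the PCIe x16 slot, 75W per 6-pin, 150W per 8-pin connector.
PCIE_SLOT_W = 75
CONNECTOR_W = {"6-pin": 75, "8-pin": 150}


def in_spec_power_w(connectors: list[str]) -> int:
    """Slot power plus the rated power of each auxiliary connector."""
    return PCIE_SLOT_W + sum(CONNECTOR_W[c] for c in connectors)


if __name__ == "__main__":
    budget = in_spec_power_w(["8-pin"])
    reference_board_power = 130  # reference rating quoted in the review
    print(budget)                          # 225
    print(budget - reference_board_power)  # 95
```

The 95W difference is headroom on paper only. How much of it is usable depends on the card's power limit settings and VRM.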

The Gaming OC 8G also sports Gigabyte’s Ultra Durable VGA technology, which consists of 2 ounces of copper used in the PCB, solid capacitors and metal chokes along with lower RDS(on) MOSFETs. The company says this helps the MOSFETs to operate at lower temperatures and promotes a long system life.

As for video outputs, this card includes three DisplayPort 1.4 ports, along with a single HDMI 2.0b port. The IO plate features dozens of trapezoid-shaped cutouts to let some air exhaust out the back of the case. But this isn’t a blower-style cooler, so most of the heat will end up inside the case.

How We Tested Gigabyte RX 5500 XT Gaming OC 8G

Recently, we’ve updated the test system to a new platform. We swapped from an i7-8086K to the Core i9-9900K. The eight-core i9-9900K sits in an MSI Z390 MEG Ace Motherboard along with 2x16GB Corsair DDR4 3200 MHz CL16 RAM (CMK32GX4M2B3200C16). Keeping the CPU cool is a Corsair H150i Pro RGB AIO, along with a 120mm Sharkoon fan for general airflow across the test system. Storing our OS and gaming suite is a single 2TB Kingston KC2000 NVMe PCIe 3.0 x4 drive.

The motherboard was updated to the latest (at this time) BIOS, version 7B12v16, from August 2019. Optimized defaults were used to set up the system. We then enabled the memory’s XMP profile to get the memory running at the rated 3200 MHz CL16 specification. No other changes or performance enhancements were enabled. The latest version of Windows 10 (1909) is used and is fully updated as of December 2019.

We include GPUs that compete with and are close in performance to the card being reviewed. In this case, we have two Nvidia cards from Zotac, the GTX 1650 Super and the GTX 1660. On the AMD side, we’ve used the Polaris-based XFX RX 590 Fat Boy as well as a reference RX 5700 and any previous RX 5500 XT cards.

Our list of test games is currently Tom Clancy’s The Division 2, Ghost Recon: Breakpoint, Borderlands 3, Gears of War 5, Strange Brigade, Shadow of The Tomb Raider, Far Cry 5, Metro: Exodus, Final Fantasy XIV: Shadowbringers, Forza Horizon 4 and Battlefield V. These titles represent a broad spectrum of genres and APIs, which gives us a good idea of the relative performance difference between the cards. We’re using driver build 441.20 for the Nvidia cards and Adrenalin 2020 Edition 19.12.2 for AMD.

We capture our frames per second (fps) and frame time information by running OCAT during our benchmarks. To capture clock and fan speed, temperature, and power, we use GPU-Z's logging capabilities. Soon we’ll resume using the Powenetics-based system used in previous reviews.
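To illustrate how per-frame timing data can be reduced to the numbers a review reports, here is a minimal sketch. It is not the exact pipeline used for this review. It assumes you already have a list of frame times in milliseconds, one entry per rendered frame, and the sample values are made up for demonstration. The percentile uses the simple nearest-rank method, and other tools may interpolate differently.

```python
import math


def average_fps(frame_times_ms: list[float]) -> float:
    """Average fps = frames rendered / total elapsed time (not the mean of per-frame fps)."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100.0 * len(ordered)))
    return ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical capture: nine smooth 10 ms frames and one 30 ms stutter.
    sample_ms = [10.0] * 9 + [30.0]

    avg = average_fps(sample_ms)
    p99_ms = percentile_nearest_rank(sample_ms, 99)

    print(f"average: {avg:.1f} fps")
    print(f"99th percentile frame time: {p99_ms:.1f} ms ({1000.0 / p99_ms:.1f} fps)")
```

Reporting a high-percentile frame time alongside the average is useful because a single long frame barely moves the average but is exactly what a player perceives as stutter.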


Joe Shields is a staff writer at Tom’s Hardware. He reviews motherboards and PC components.

larkspur:

"The Windforce 3 cooler has little issue keeping up with the stock power limit of this card."

Yes, obviously. That's why I'm wondering why you didn't crank up the power limit and try some overclocking. This card is begging for it.

hannibal:

This card is already near the upper limit it can get, so it is better to keep it cool and quiet than try to get some extra speed.

larkspur:

hannibal said: "This card is already near the upper limit it can get, so it is better to keep it cool and quiet than try to get some extra speed."

No not really... This particular card has an excellent huge cooler and plenty of extra thermal headroom. Look at the temp chart and power usage. The thing isn't even close to maxed-out. Overclocking is exactly why someone would spend extra on this particular card to get this little chip with such a massive oversized cooler... No reason not to at least give it a shot using AMD's own OCing tool...

"Furthermore, Igor believes that every Radeon RX 5500 XT should have no problem reaching the 2 GHz mark."

That's from: https://www.tomshardware.com/news/overclock-your-radeon-rx-5500-xt-to-21-ghz-on-air-with-this-tool

alextheblue:

larkspur said: "Overclocking is exactly why someone would spend extra on this particular card to get this little chip with such a massive oversized cooler..."

Yeah if you're not planning on overclocking, get a cheaper one.

hannibal:

As we can see from 5700xt. Those huge Sapphire Nitro, Asus Strix and so on cards are not faster than much cheaper variants. They just run cooler and quieter that those expensive monster cooled versions.

Same here...

askeptic:

Am I the only one who read the entire article and walked away believing that Intel has the edge still?

I don't agree that the AMD would need a custom water loop, but if it did that would throw the price to an absurd amount for that chip.

Intel still wins in the areas where it matters. The only time I would consider the AMD chip would be if my job was to constantly encode videos or unzip files. IE, if I were a creator. Most people are not creators. They may think they are, but they are not.

Software still favors Intel's platform as well. Maybe in 3 more years AMD would be a viable option for most at the high end, but I am just not seeing it. The size of process nodes play no role in my decision as I would never have a reason to care, I just want what is fastest for most applications, and this article leads me to believe that is Intel hands down.

For those videos I use Sony Vegas for twice a year, I guess I will have to wait 120 more seconds than normal.