
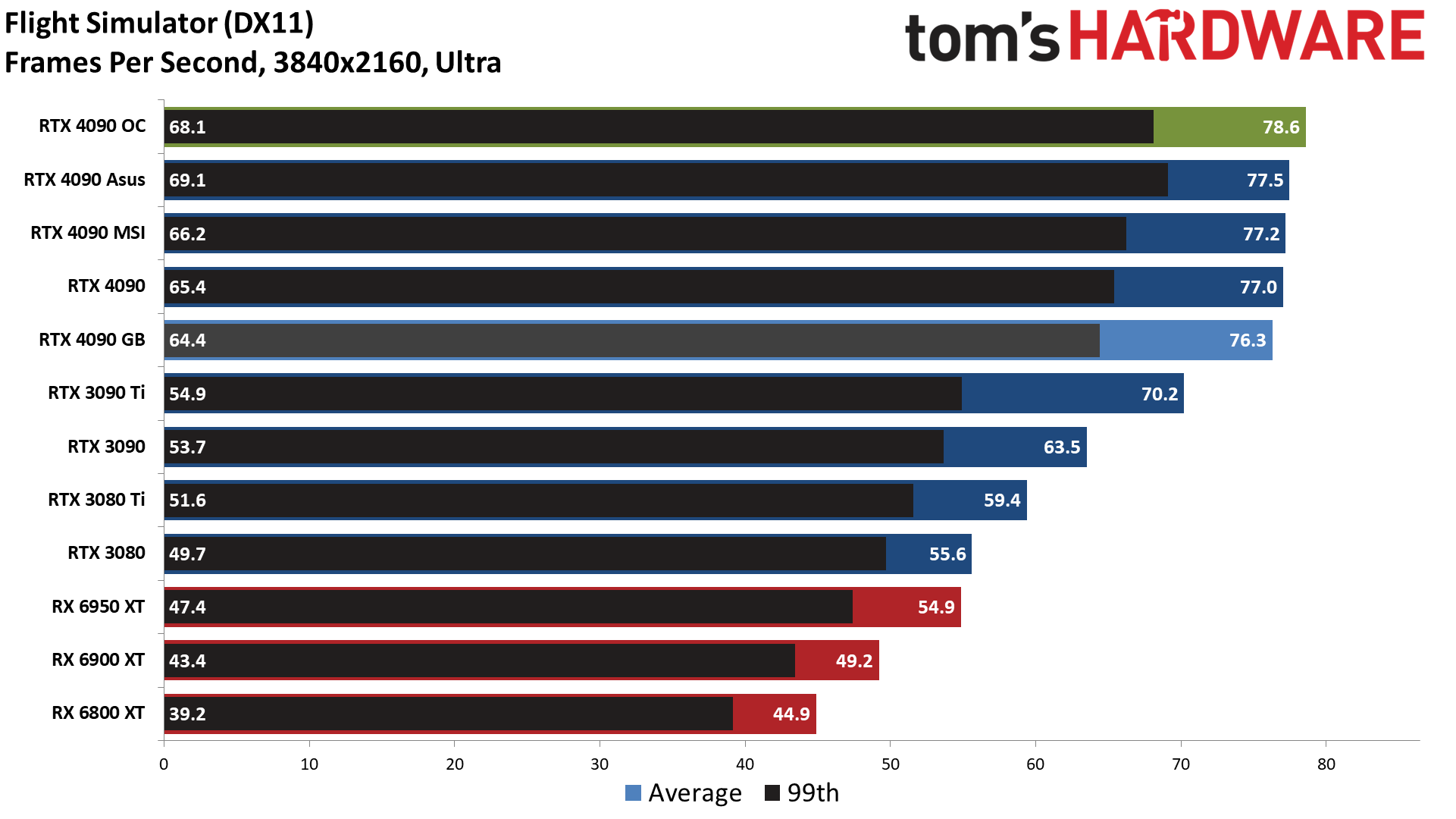

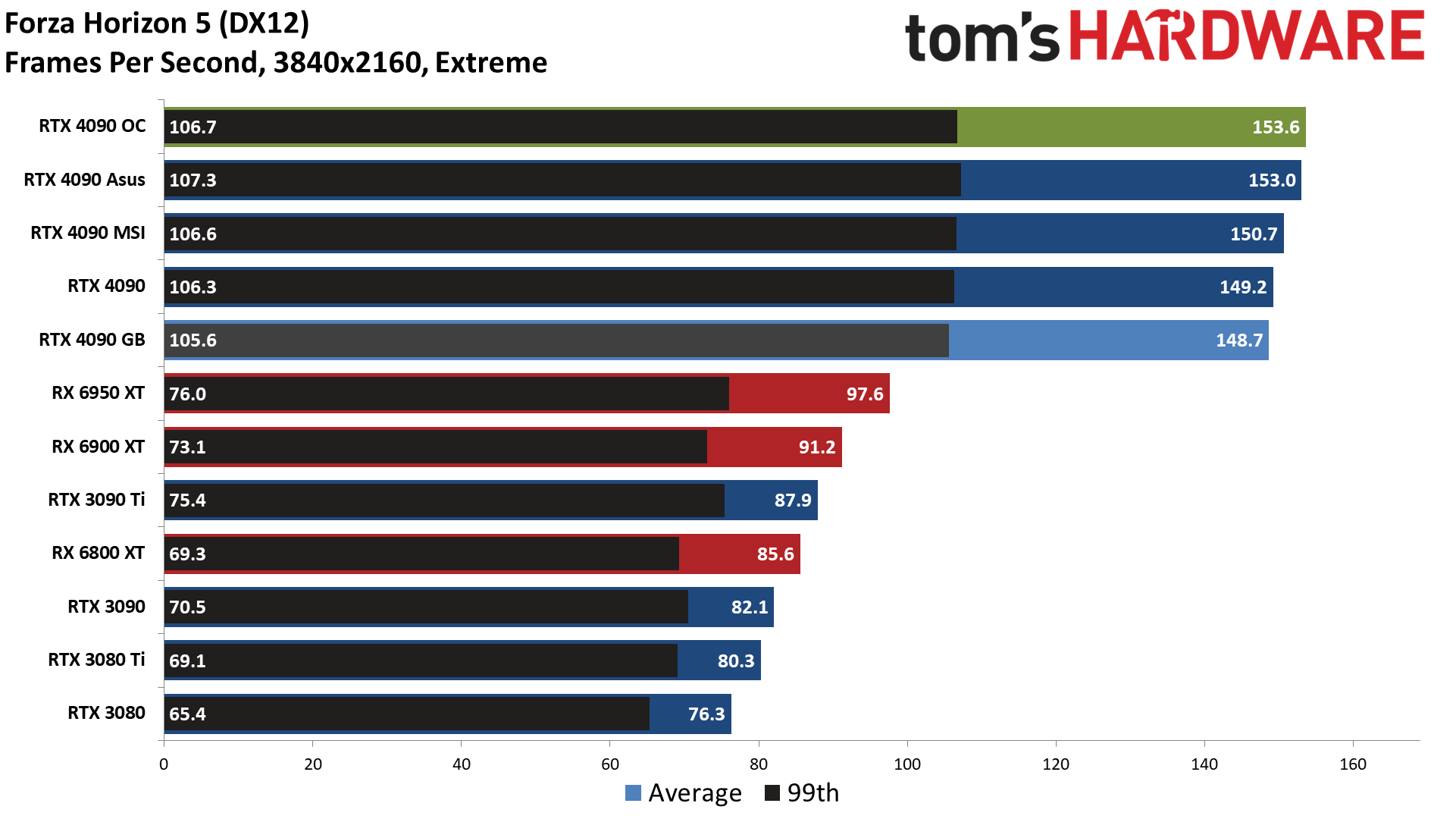

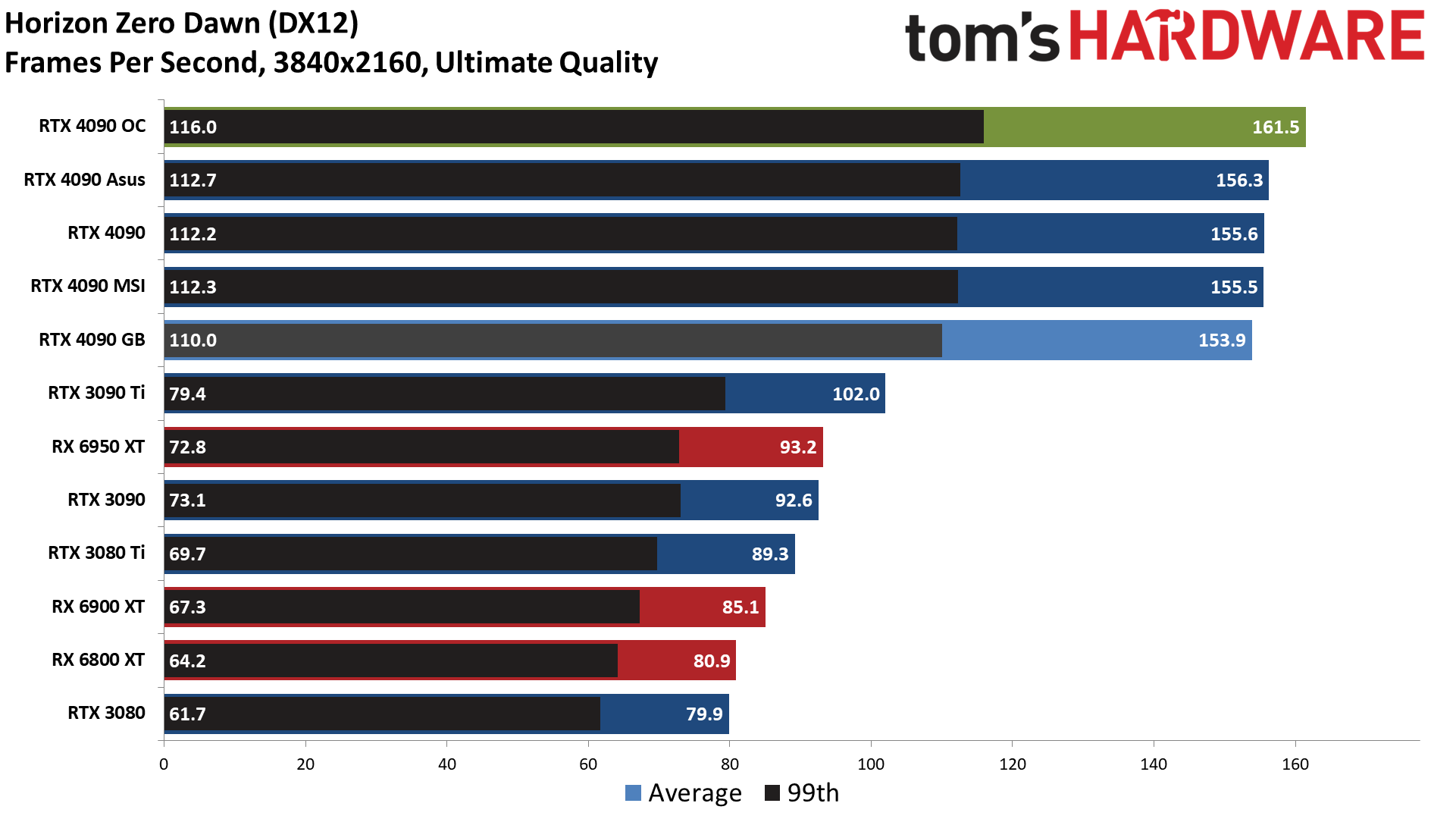

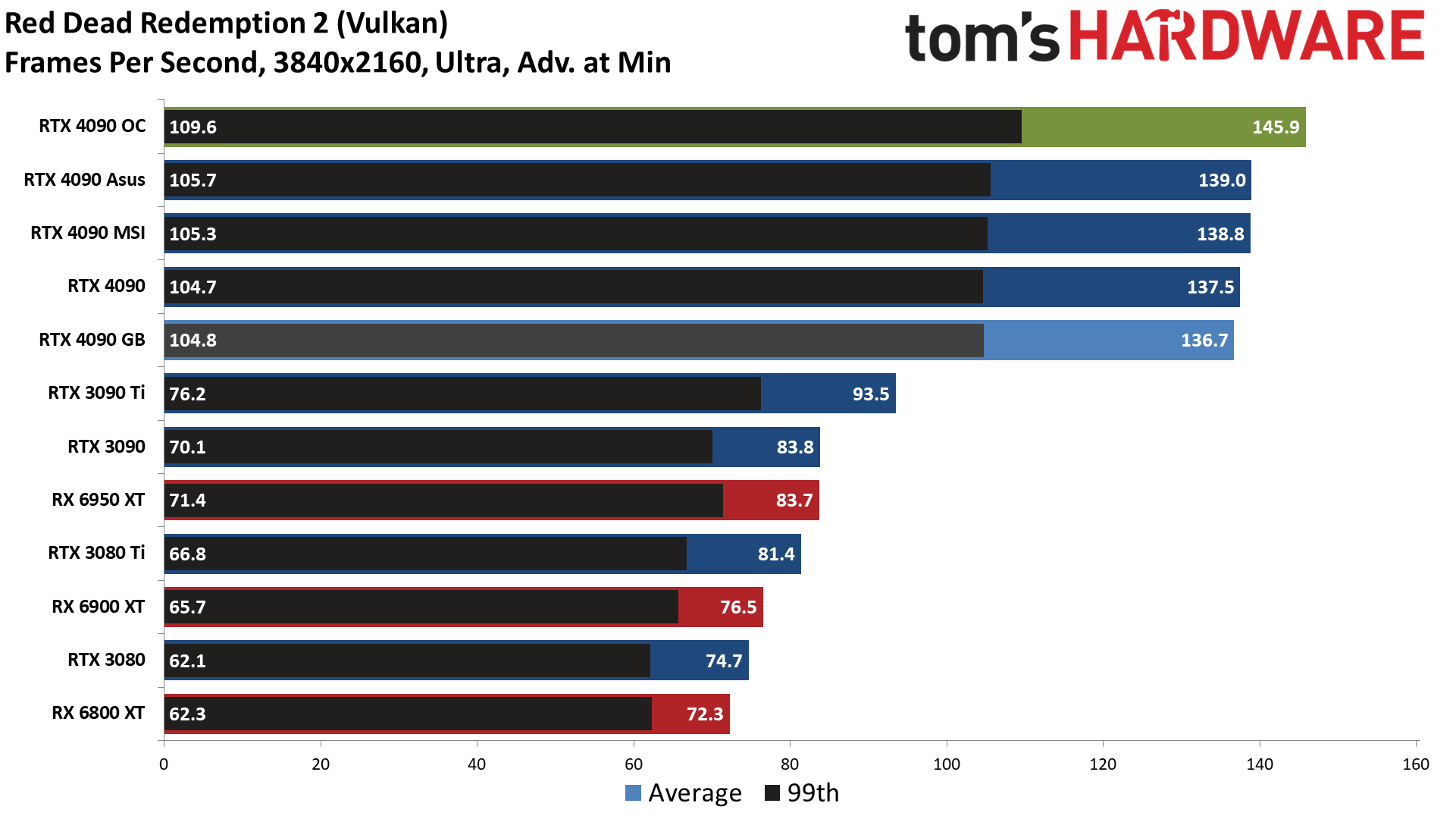

4K Gaming Performance

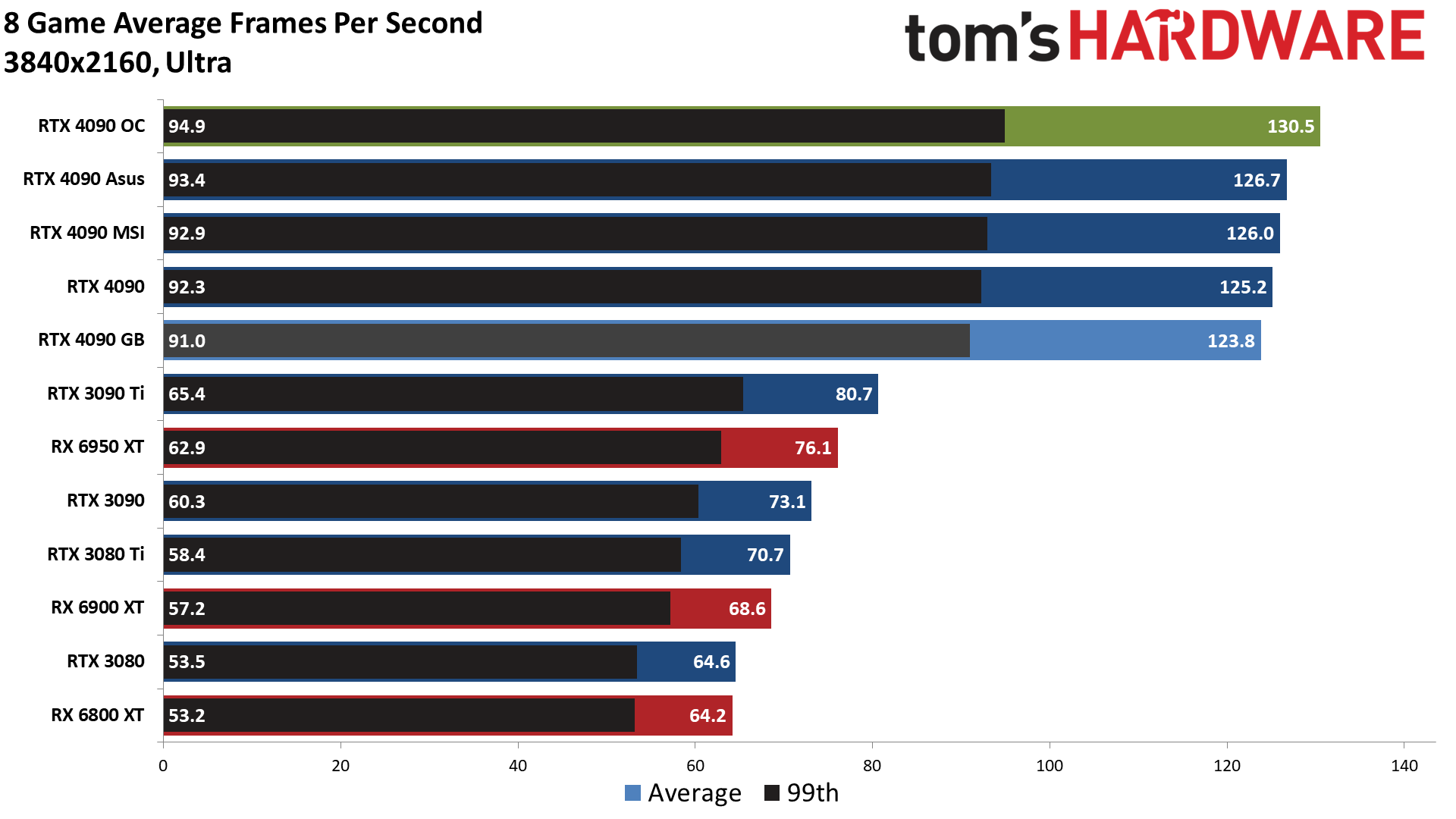

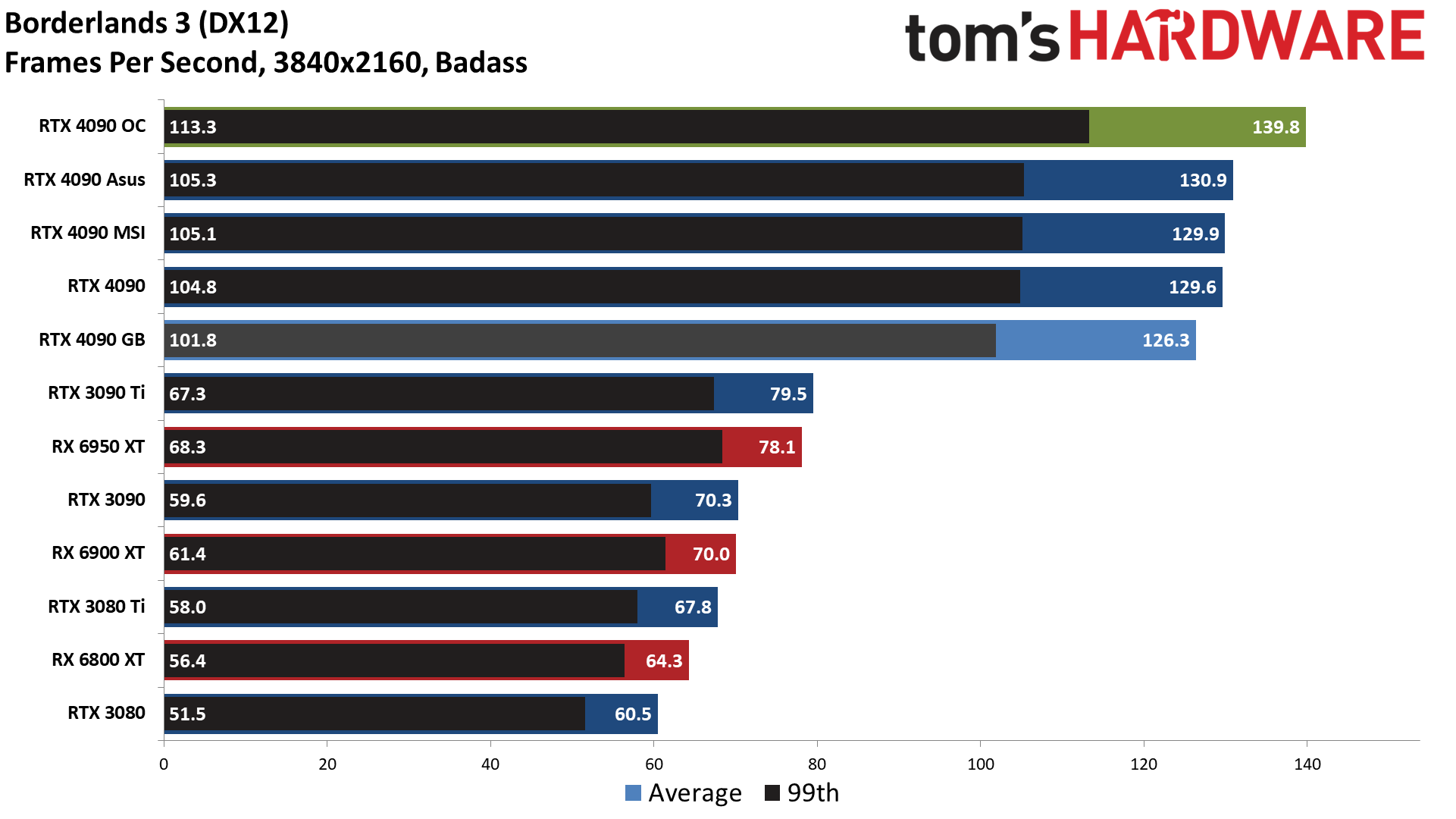

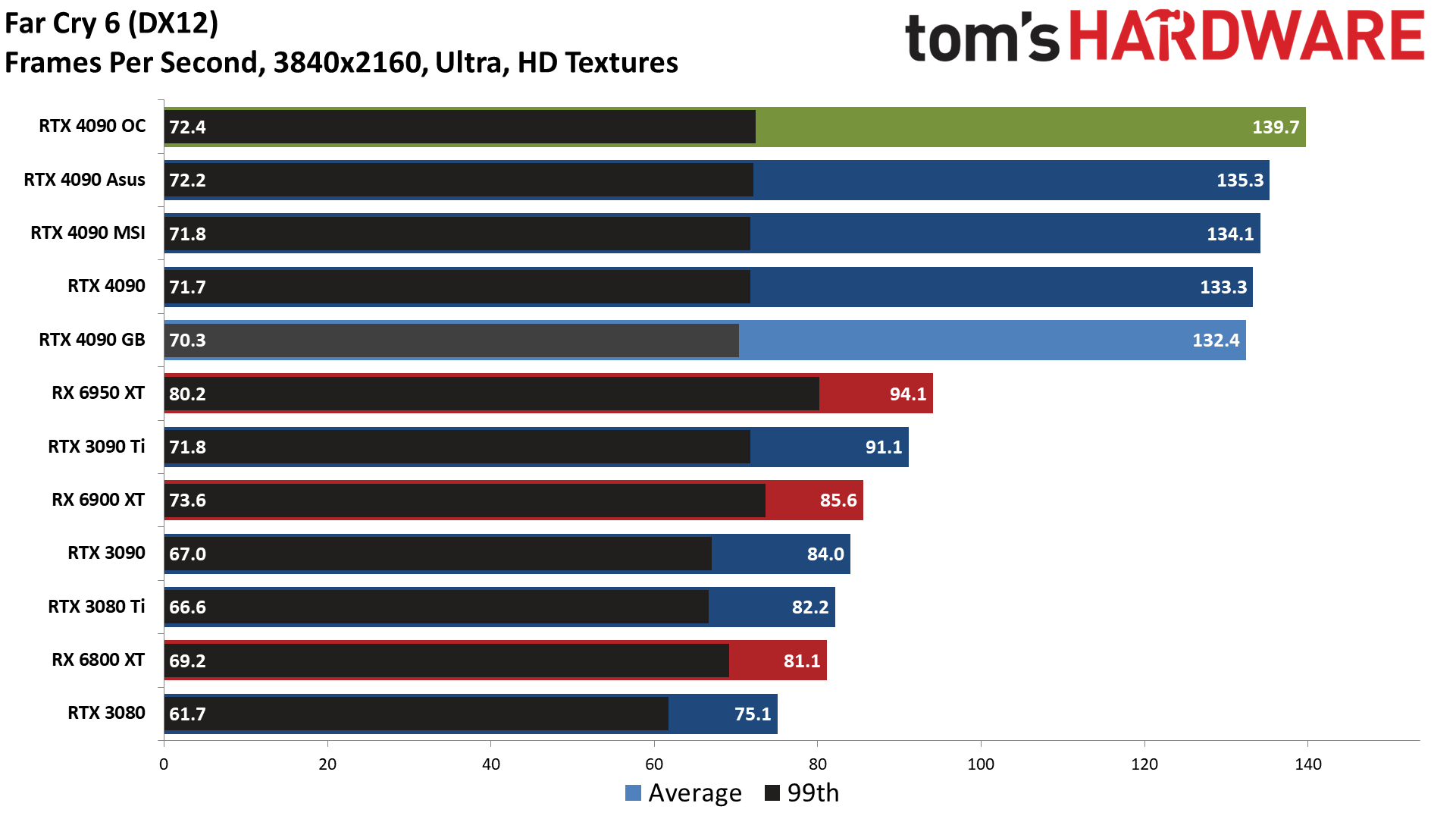

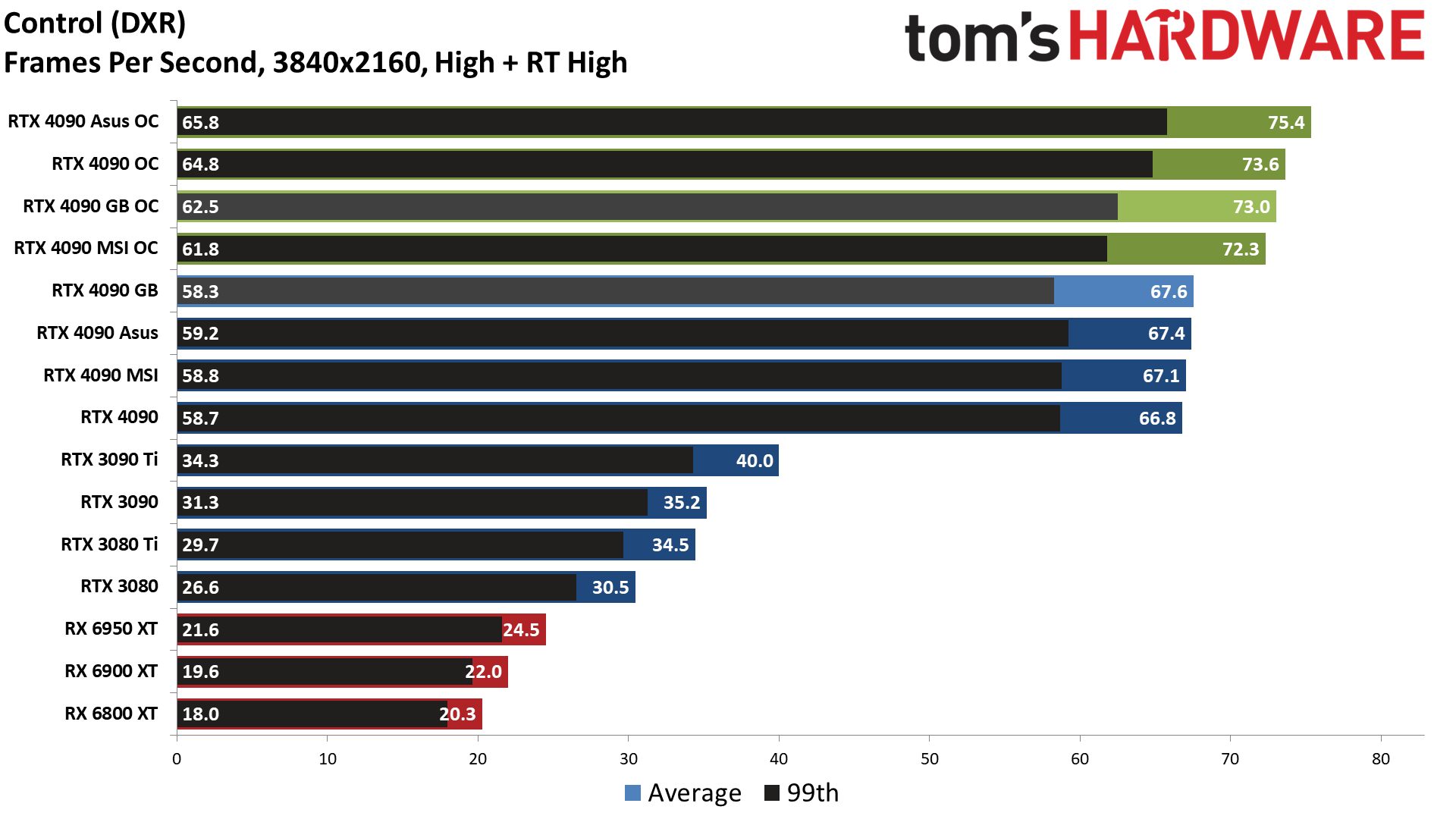

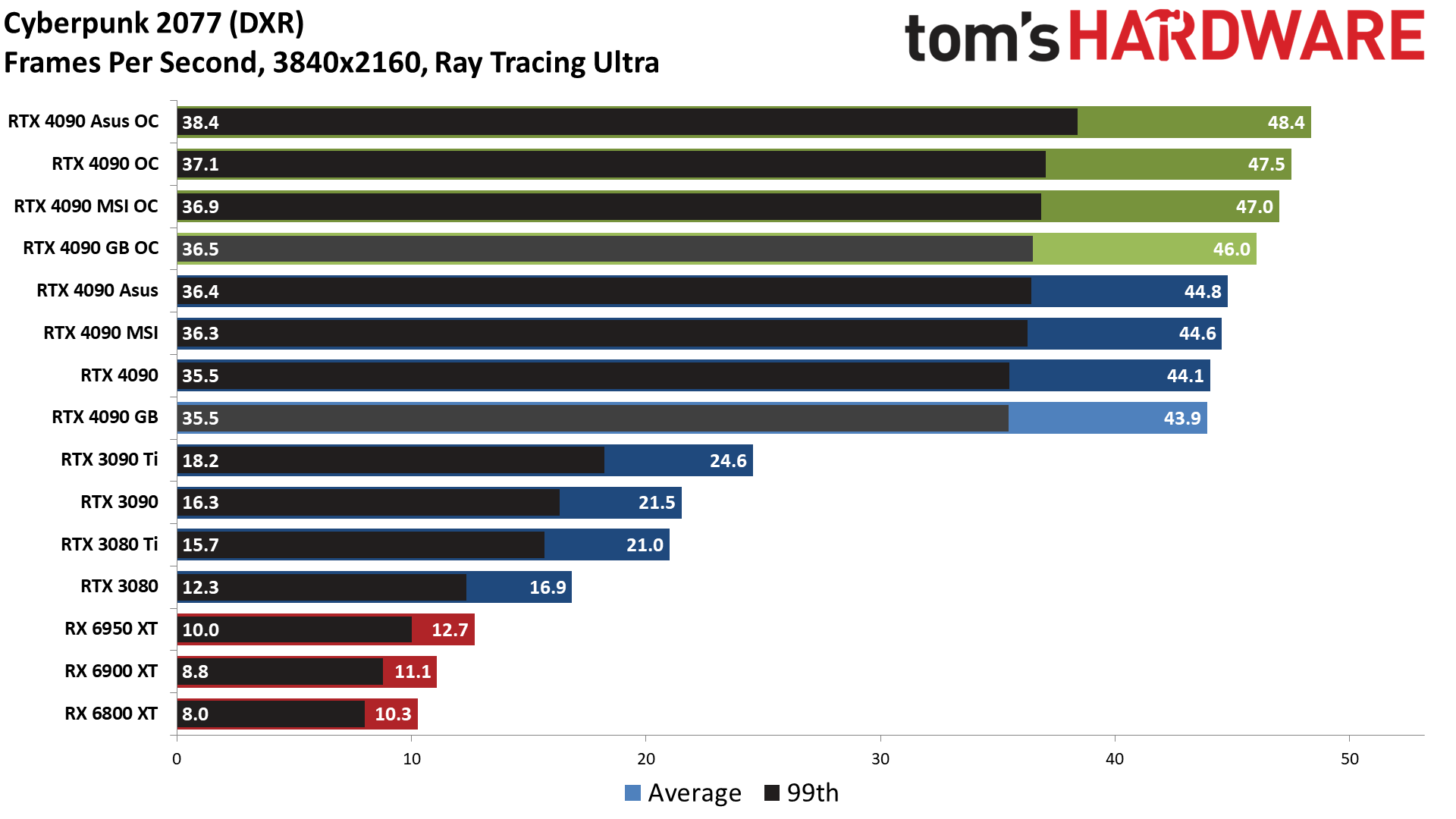

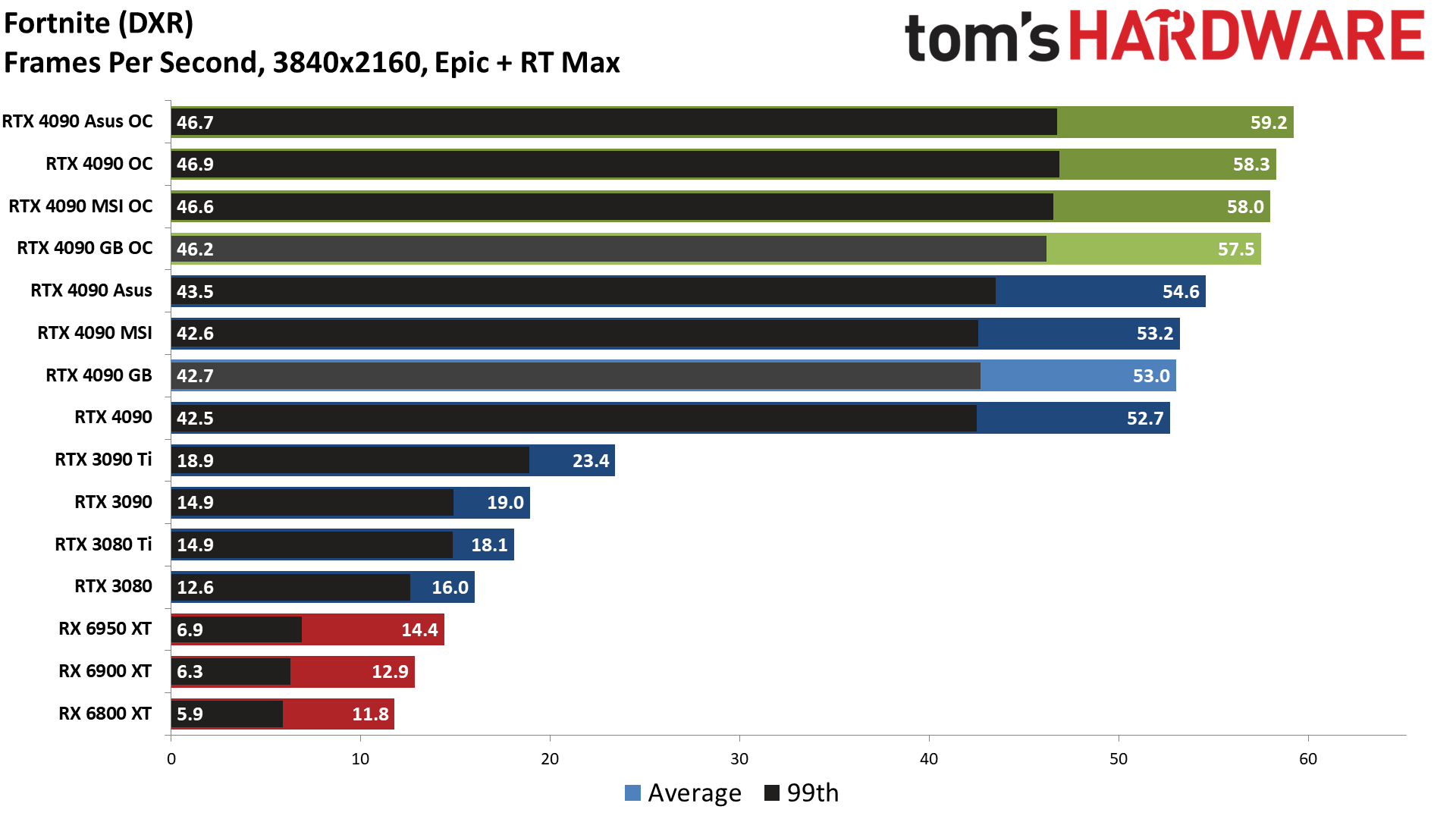

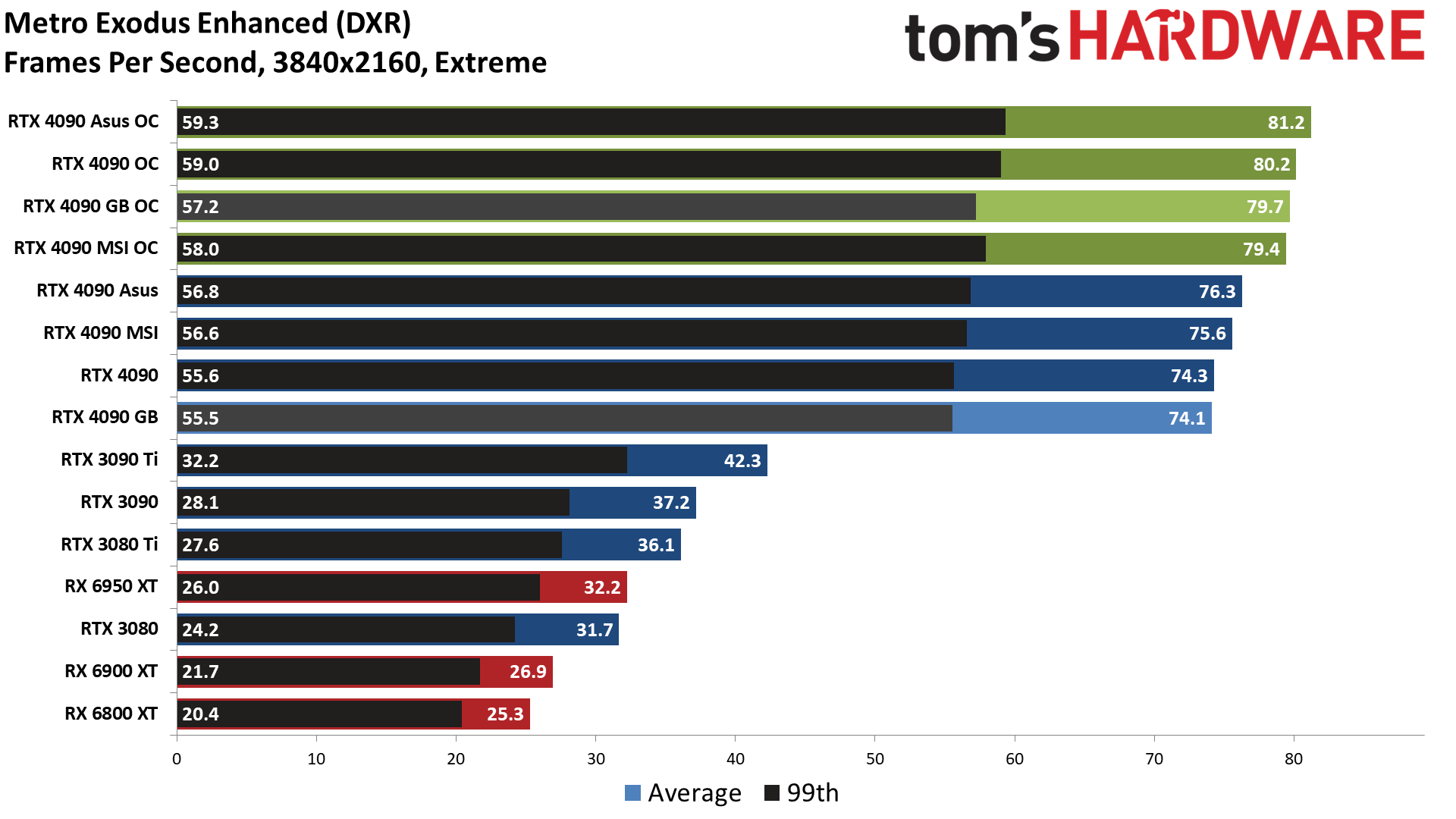

Our gaming tests consist of a "standard" suite of eight games without ray tracing enabled (even if the game supports it), and a separate "ray tracing" suite of six games that all use multiple RT effects. We've already tested the RTX 4090 Founders Edition at 1080p, so for the AIB (add-in board) cards we're focusing on 4K and 1440p performance.

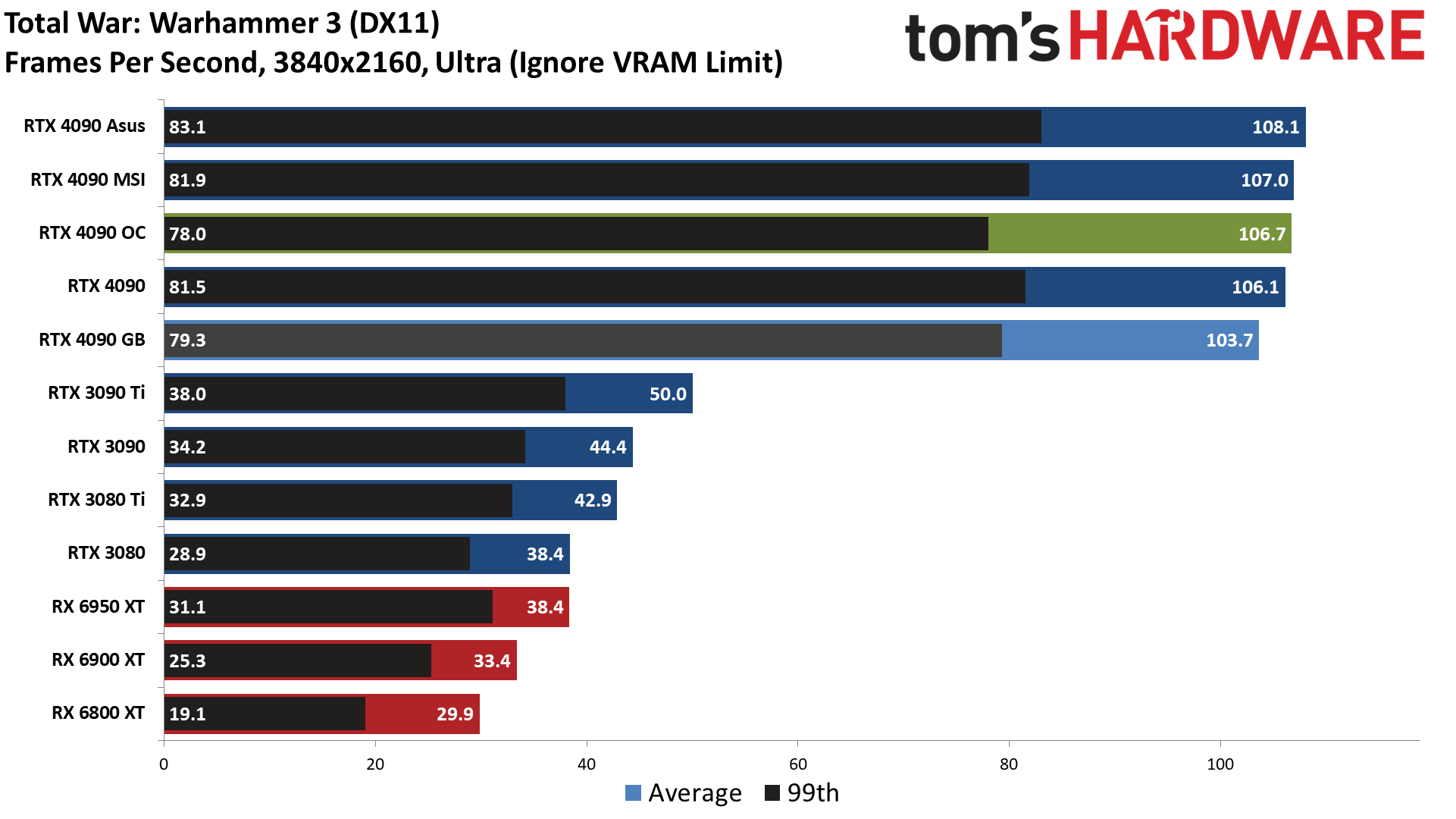

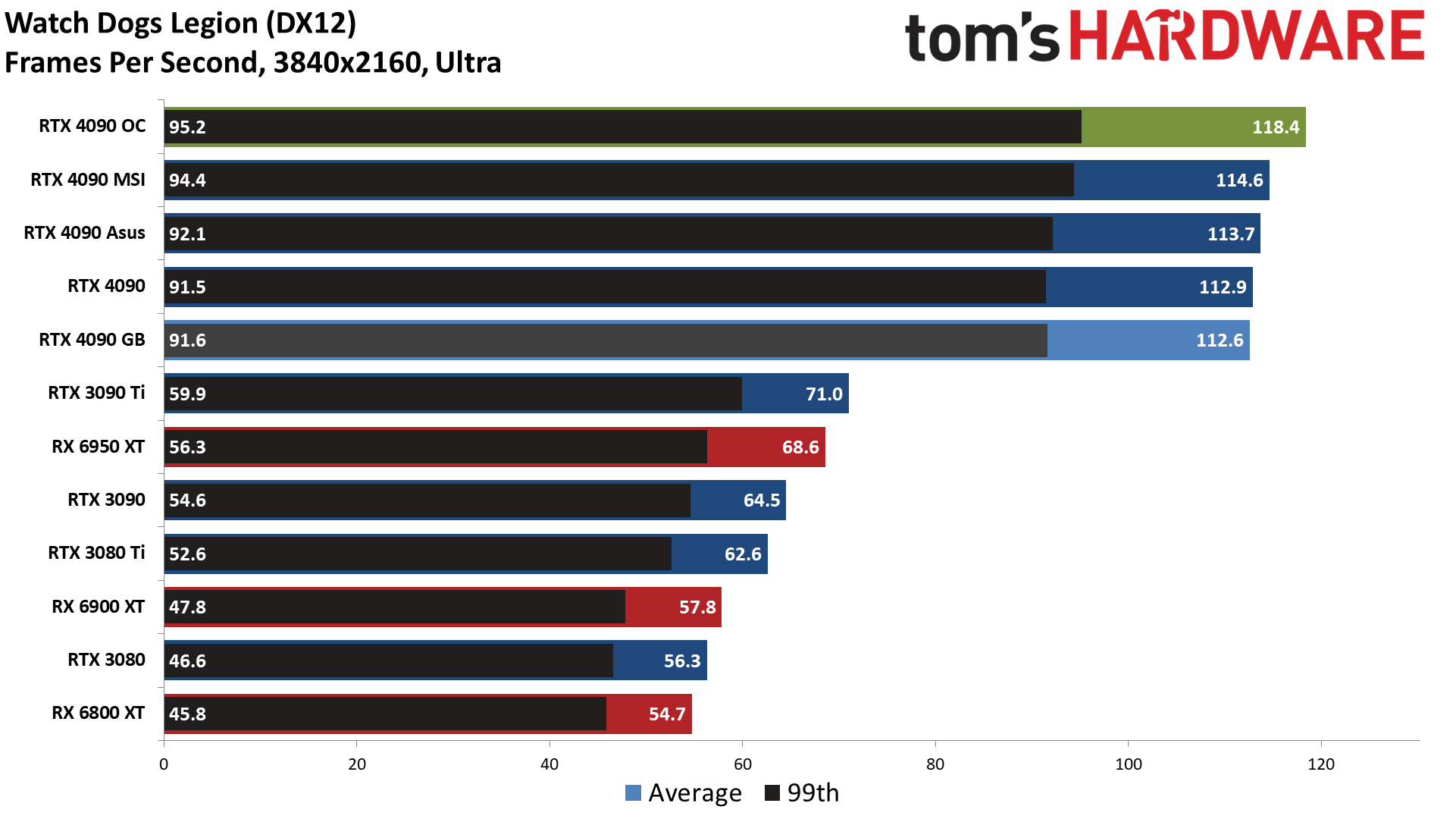

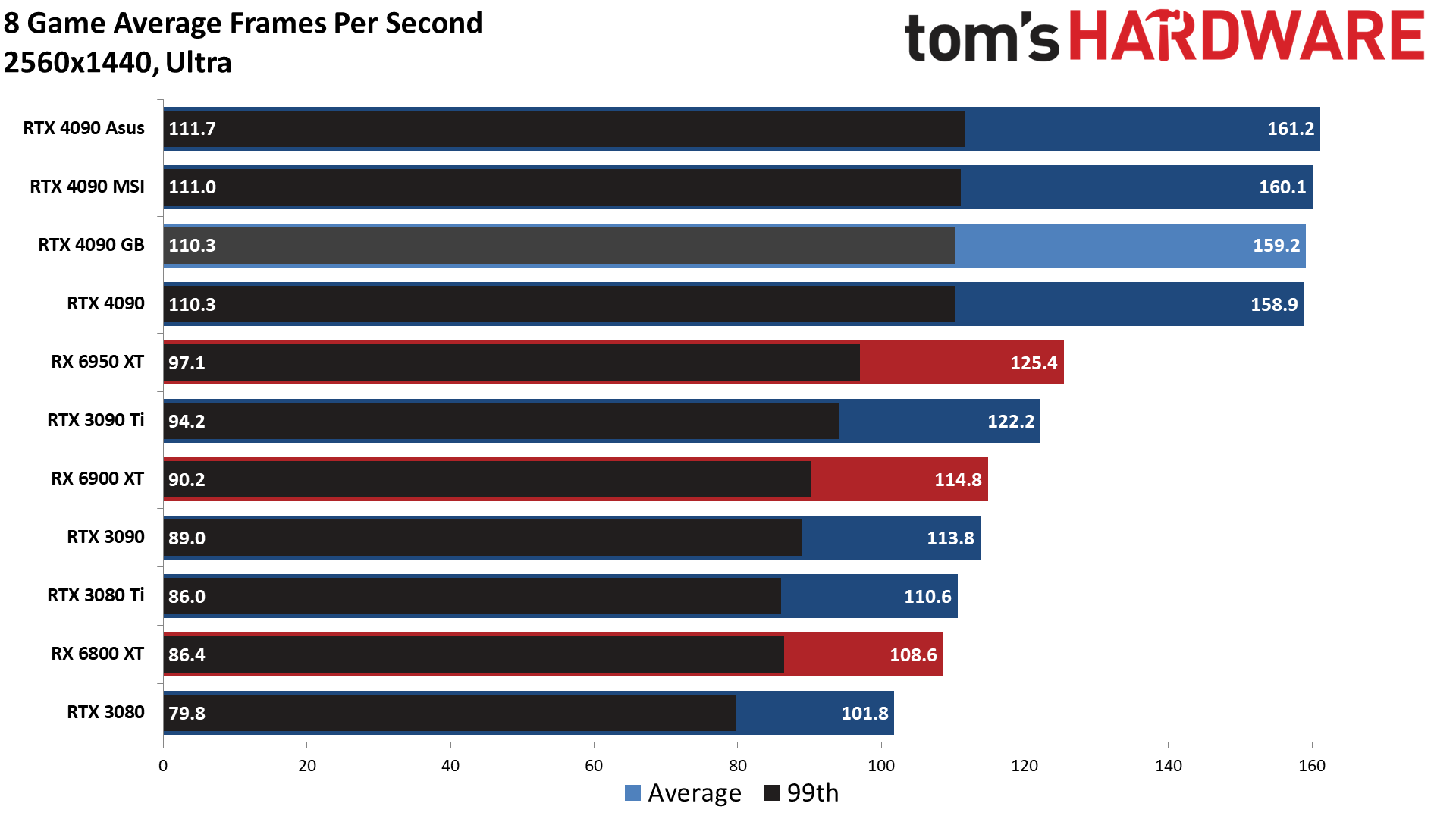

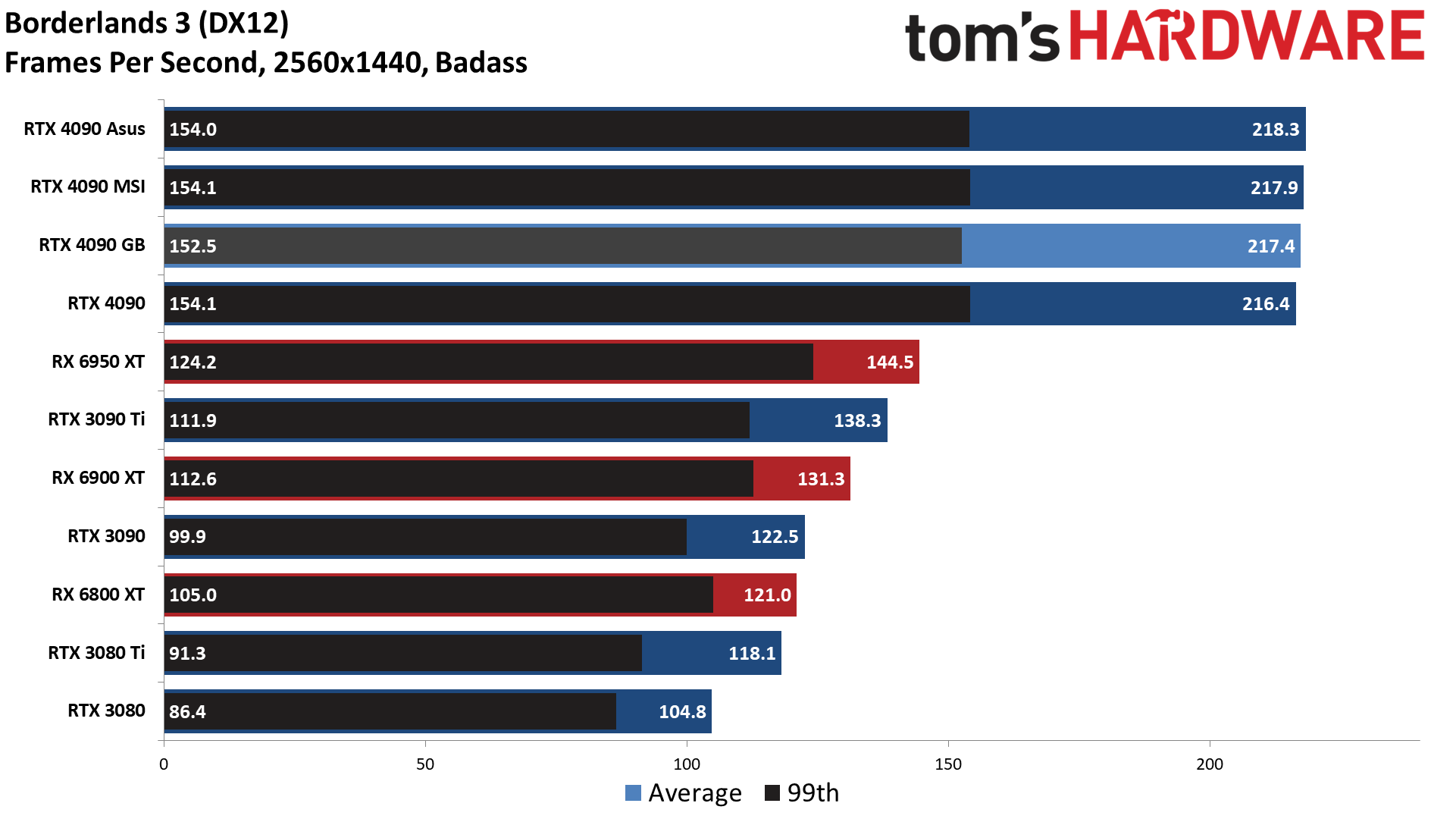

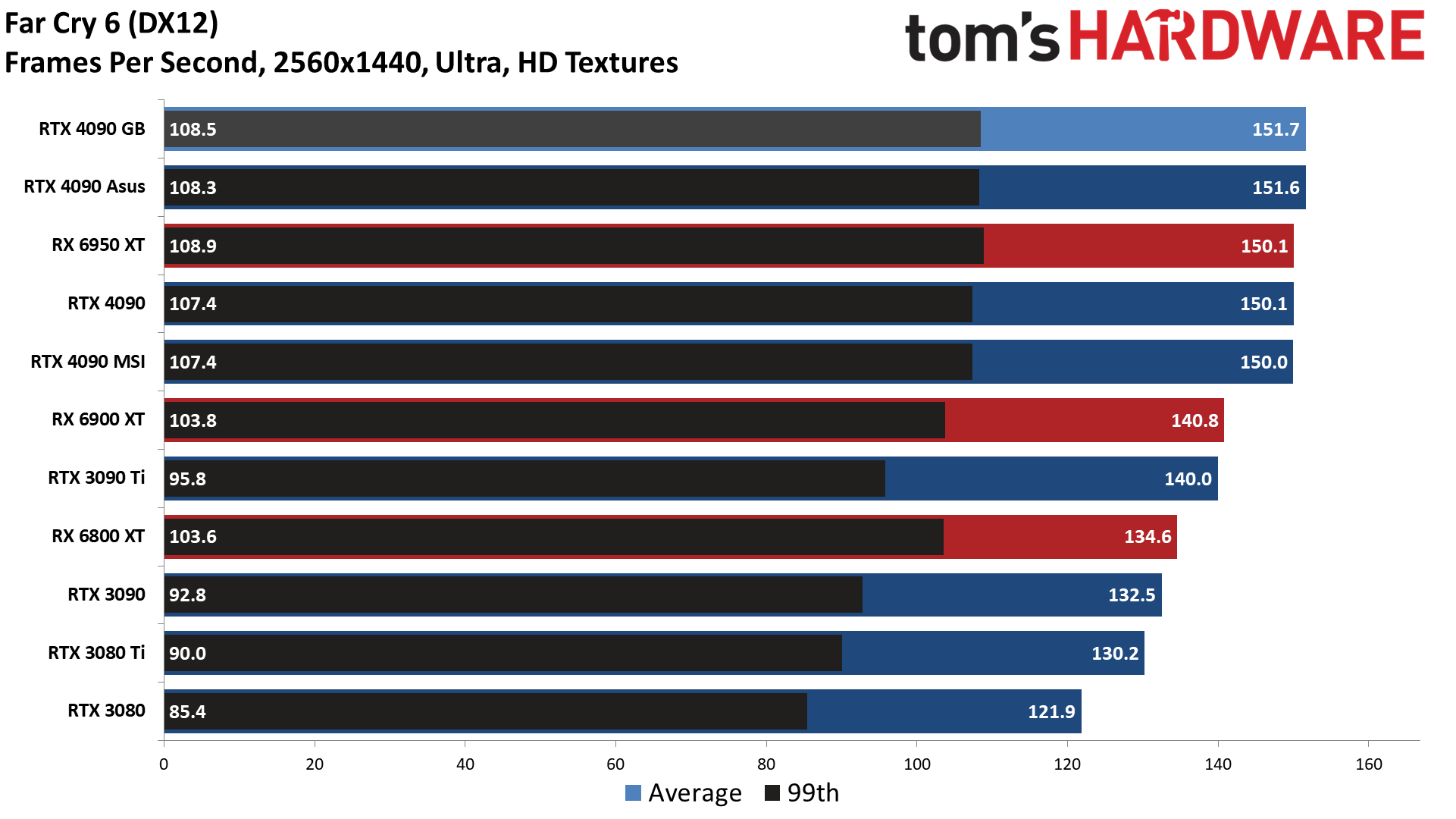

The Gigabyte RTX 4090 Gaming OC ends up as the slowest 4090 card we've tested in our standard gaming suite, averaging 123.8 fps across the eight games compared to 125.2 fps with the Founders Edition. The fastest 4090 we've tested averaged 126.7 fps (Asus ROG Strix), so there's about a 2.3% spread between the four cards. Overclocking on the Founders Edition boosted performance by an additional 4.2%, and you should see similar results from any of the other cards.
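The percentages above come from simple ratios of the average frame rates. As a minimal sketch, here is that arithmetic in Python using the 4K standard-suite averages quoted in the previous paragraph; the helper name `pct_diff` is our own illustrative choice, and it just measures one average relative to a baseline.

```python
def pct_diff(value: float, baseline: float) -> float:
    """Percent difference of value relative to baseline (negative means slower)."""
    return (value / baseline - 1.0) * 100.0

# Average fps across the eight-game standard suite at 4K ultra, from the text
gigabyte_fps = 123.8  # Gigabyte RTX 4090 Gaming OC (slowest tested)
founders_fps = 125.2  # RTX 4090 Founders Edition
strix_fps = 126.7     # Asus ROG Strix (fastest tested)

# Spread from the slowest to the fastest card, measured from the slowest
spread = pct_diff(strix_fps, gigabyte_fps)
# Gigabyte card relative to the Founders Edition
gap = pct_diff(gigabyte_fps, founders_fps)

print(f"Spread, slowest to fastest: {spread:.1f}%")
print(f"Gigabyte vs. Founders Edition: {gap:.1f}%")
```

Running it reproduces the roughly 2.3% spread and the 1.1% deficit versus the Founders Edition.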

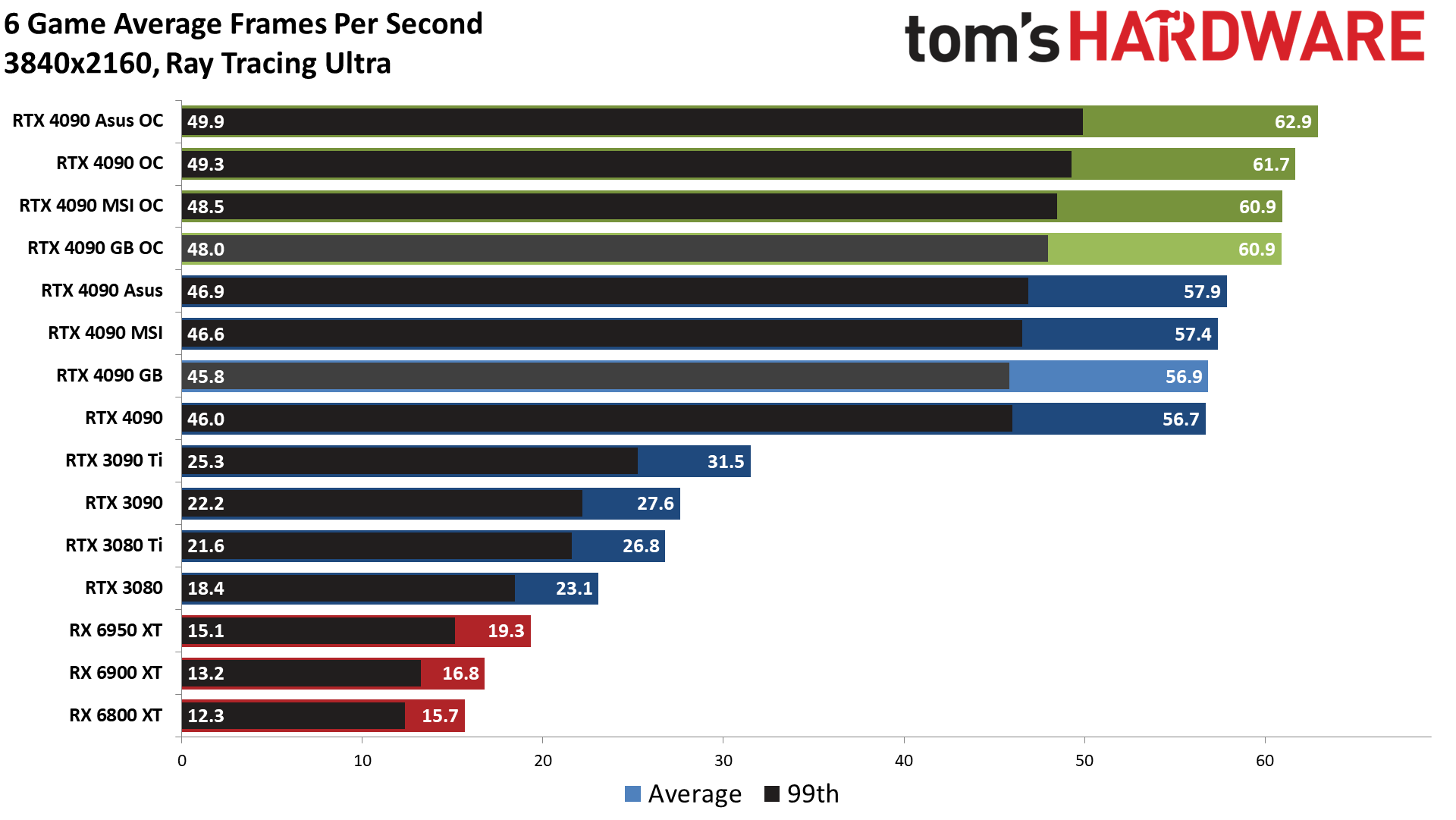

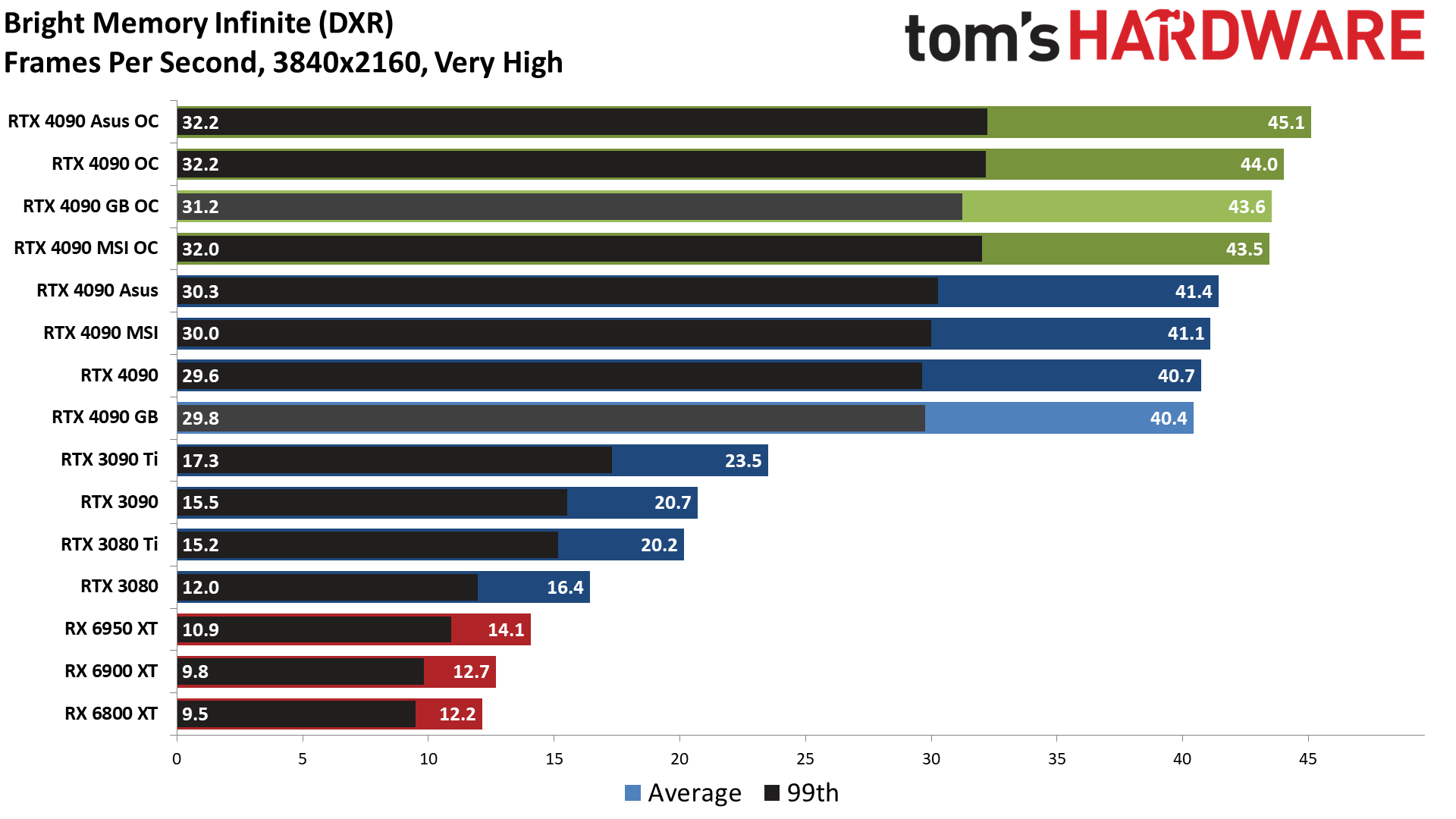

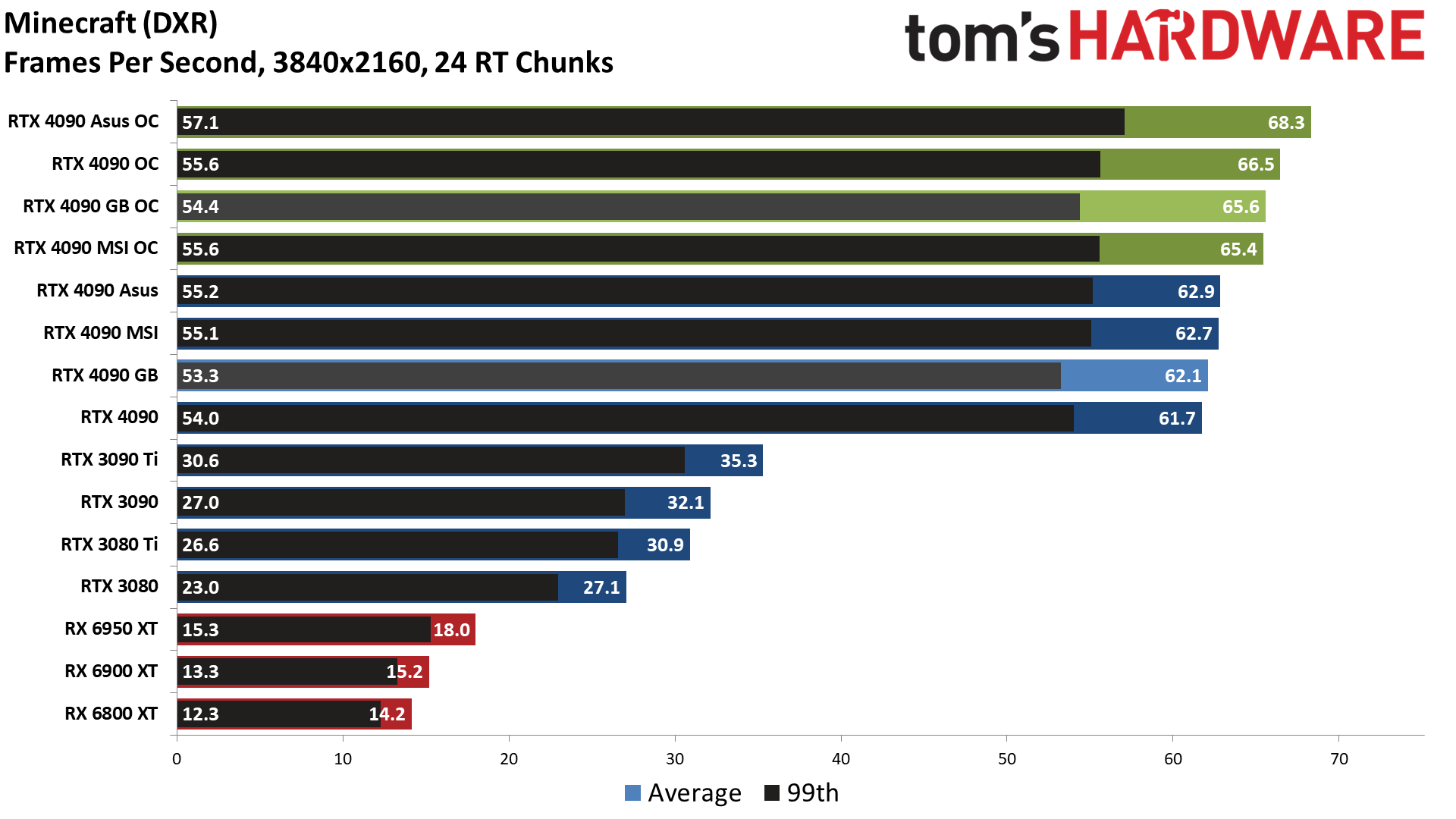

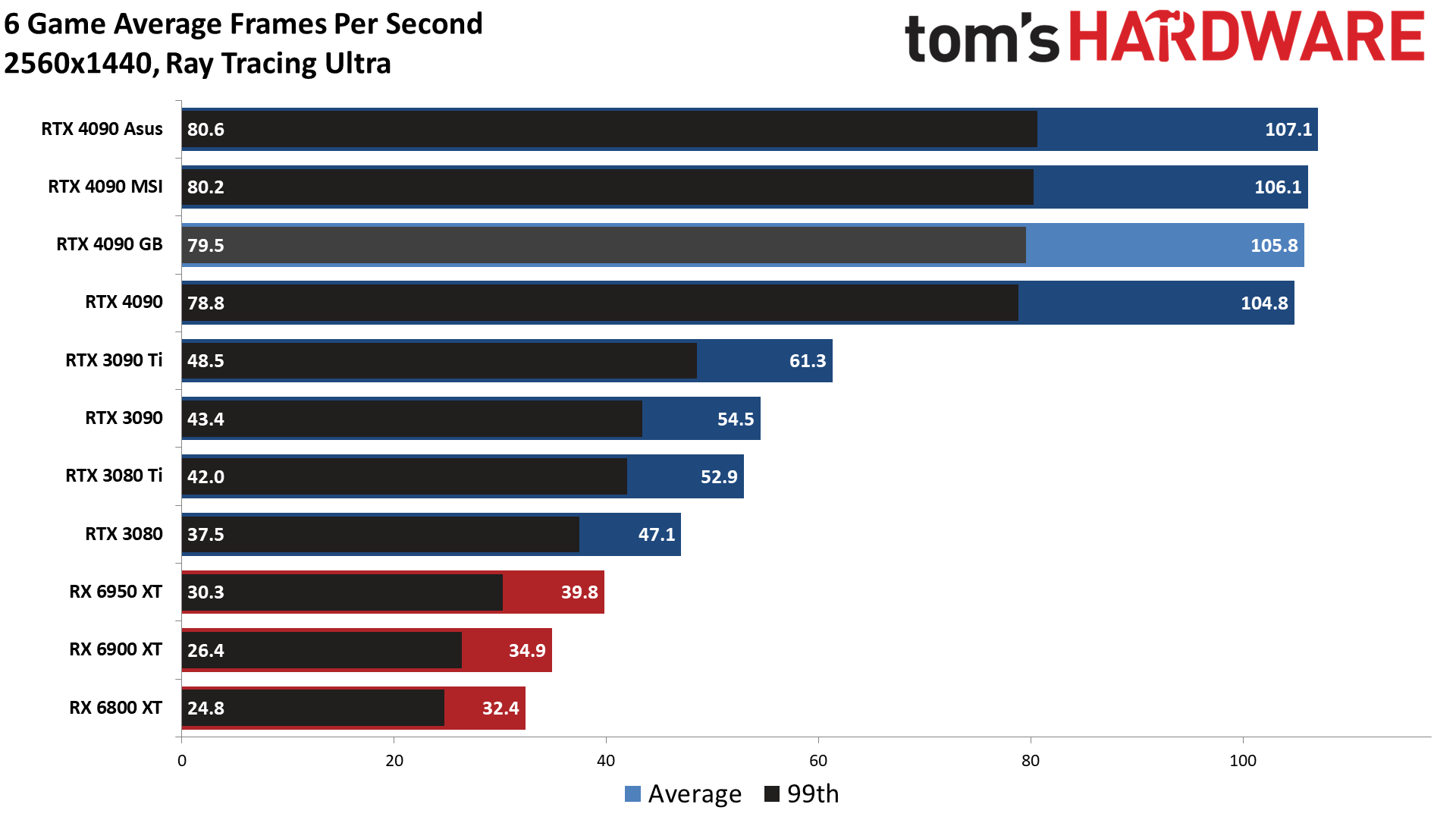

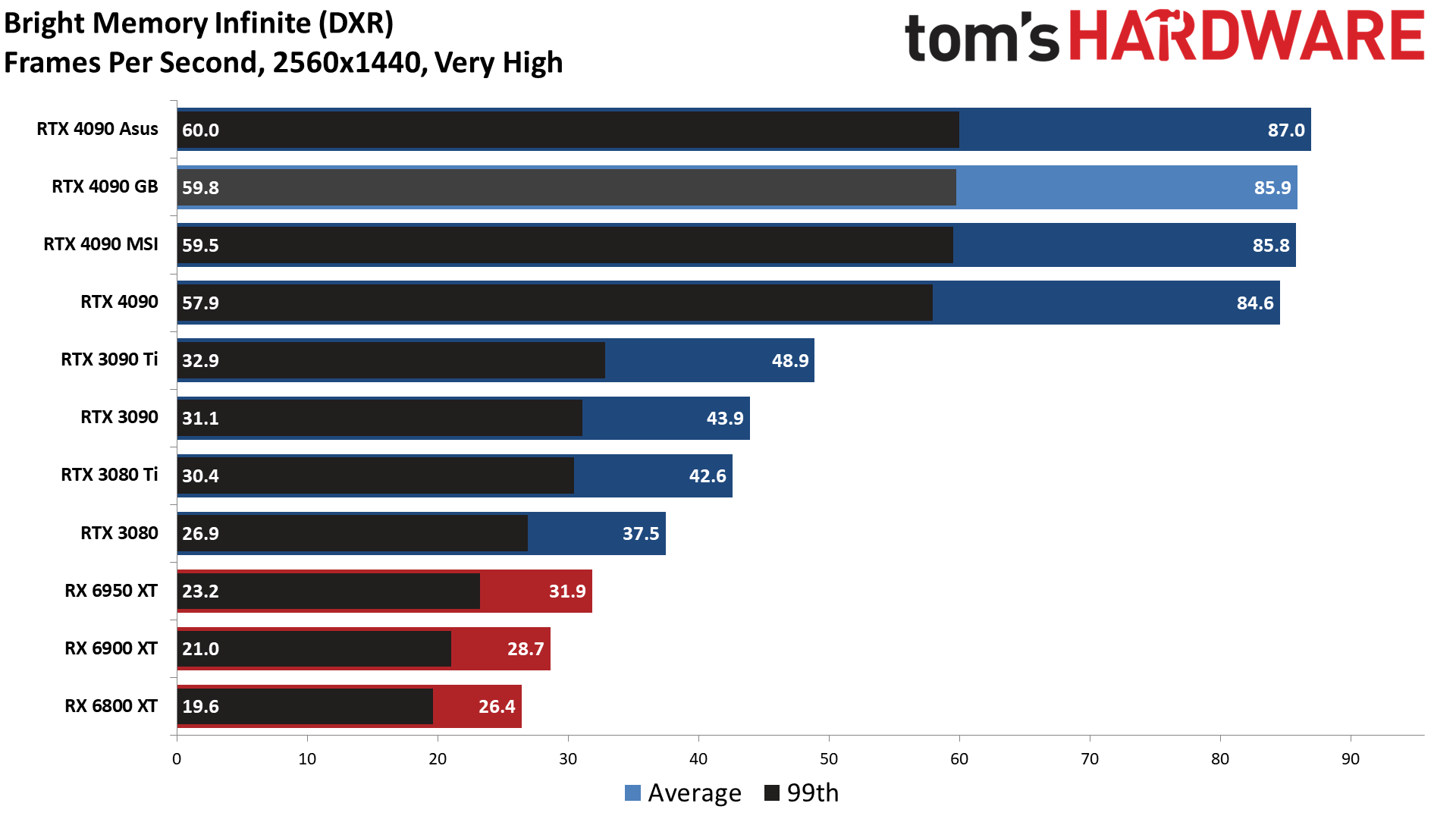

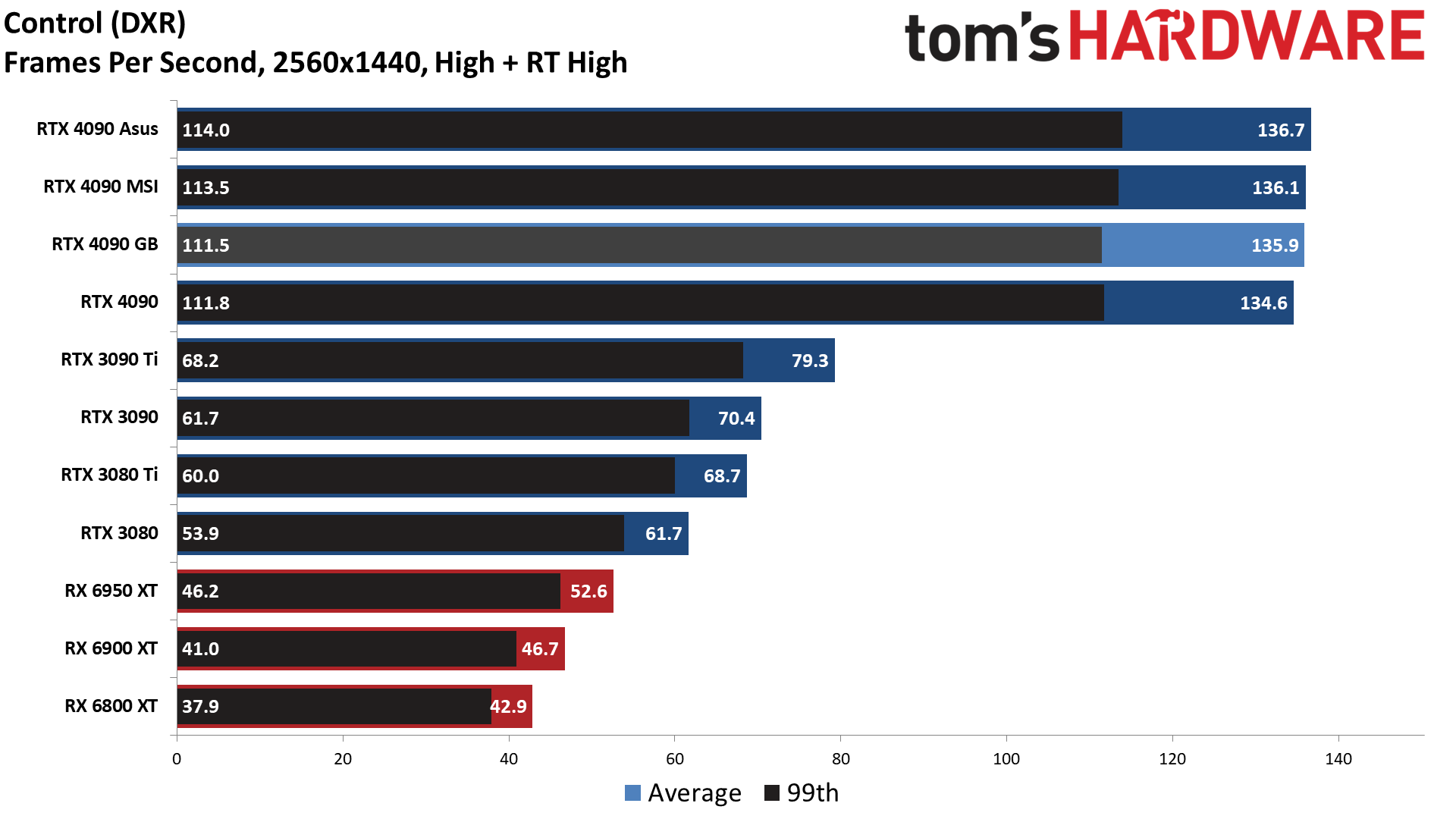

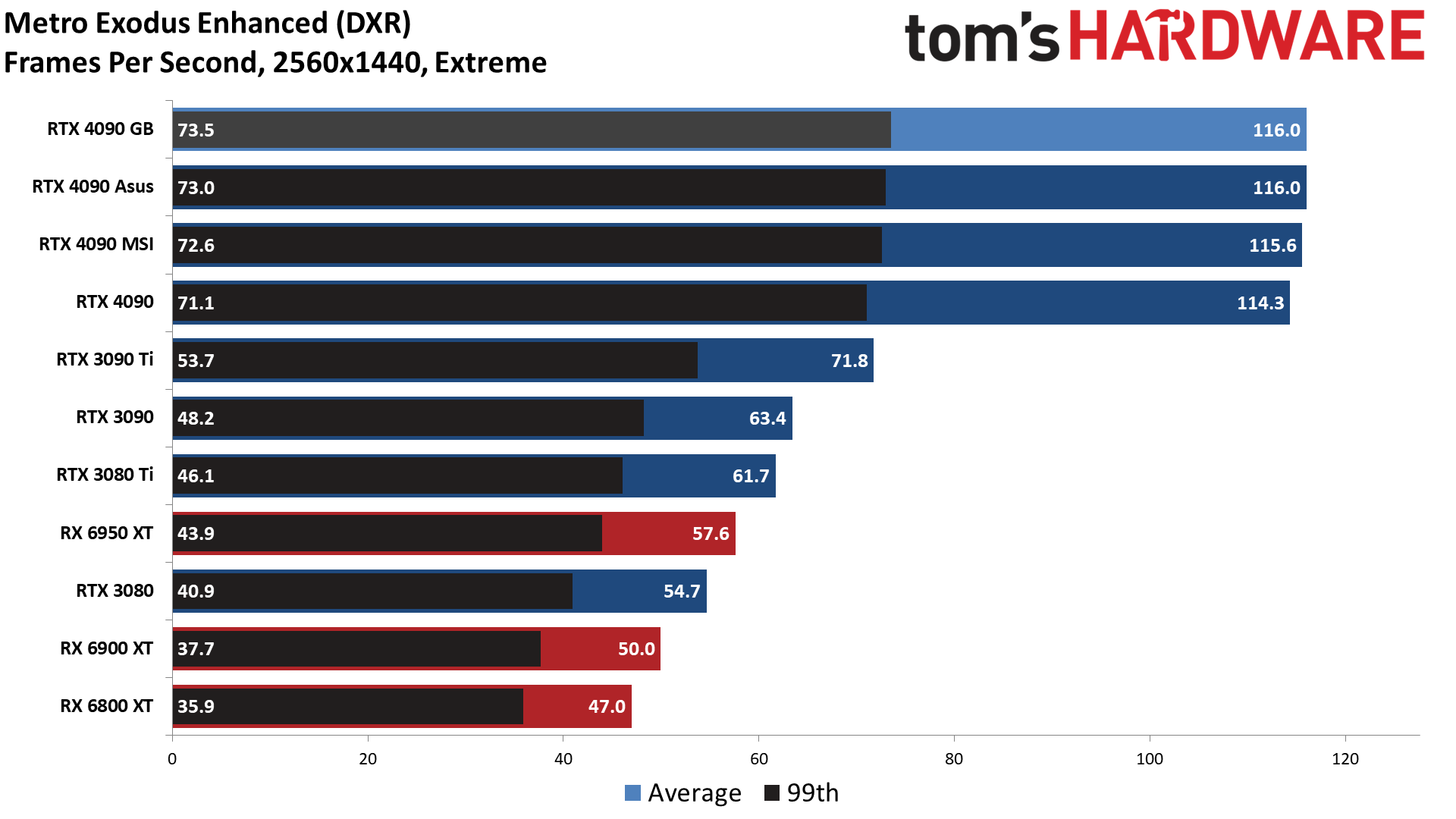

Interestingly, while the Gigabyte was slightly slower (1.1%) than the reference model in our standard suite, it ended up being a hair faster (0.4%) in our ray tracing suite. There's about a 1% margin of error on our tests, so we wouldn't read too much into this. In general, performance should effectively tie the Founders Edition.

Overclocking shows similar results, despite slight differences in the actual values. The Gigabyte card ties the MSI liquid-cooled card across our test suite, while the Founders Edition ends up slightly faster and the Asus card takes the top spot. That could just be the usual silicon lottery effect, and the spread among the overclocked cards is only 3.3% overall, regardless.

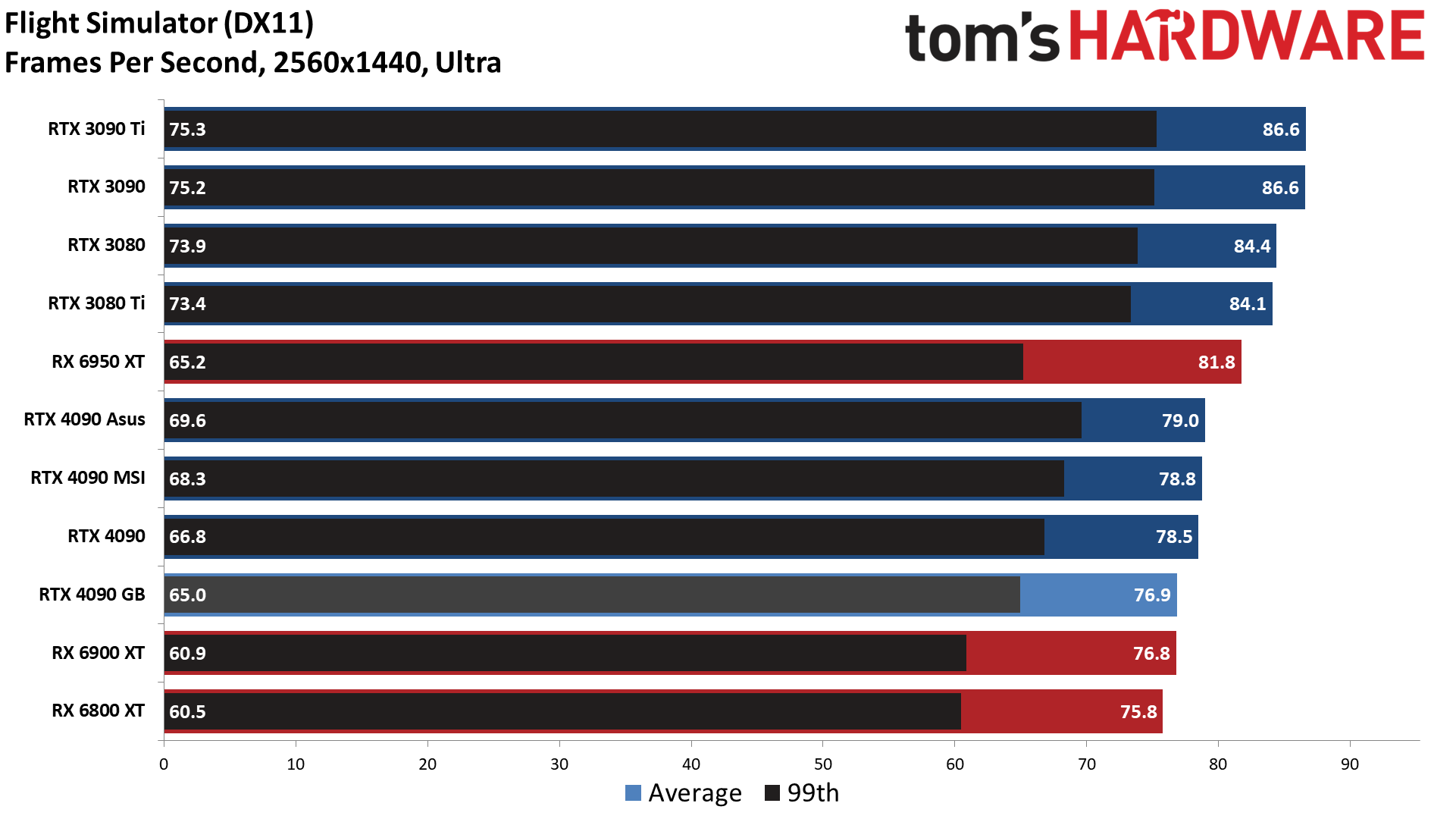

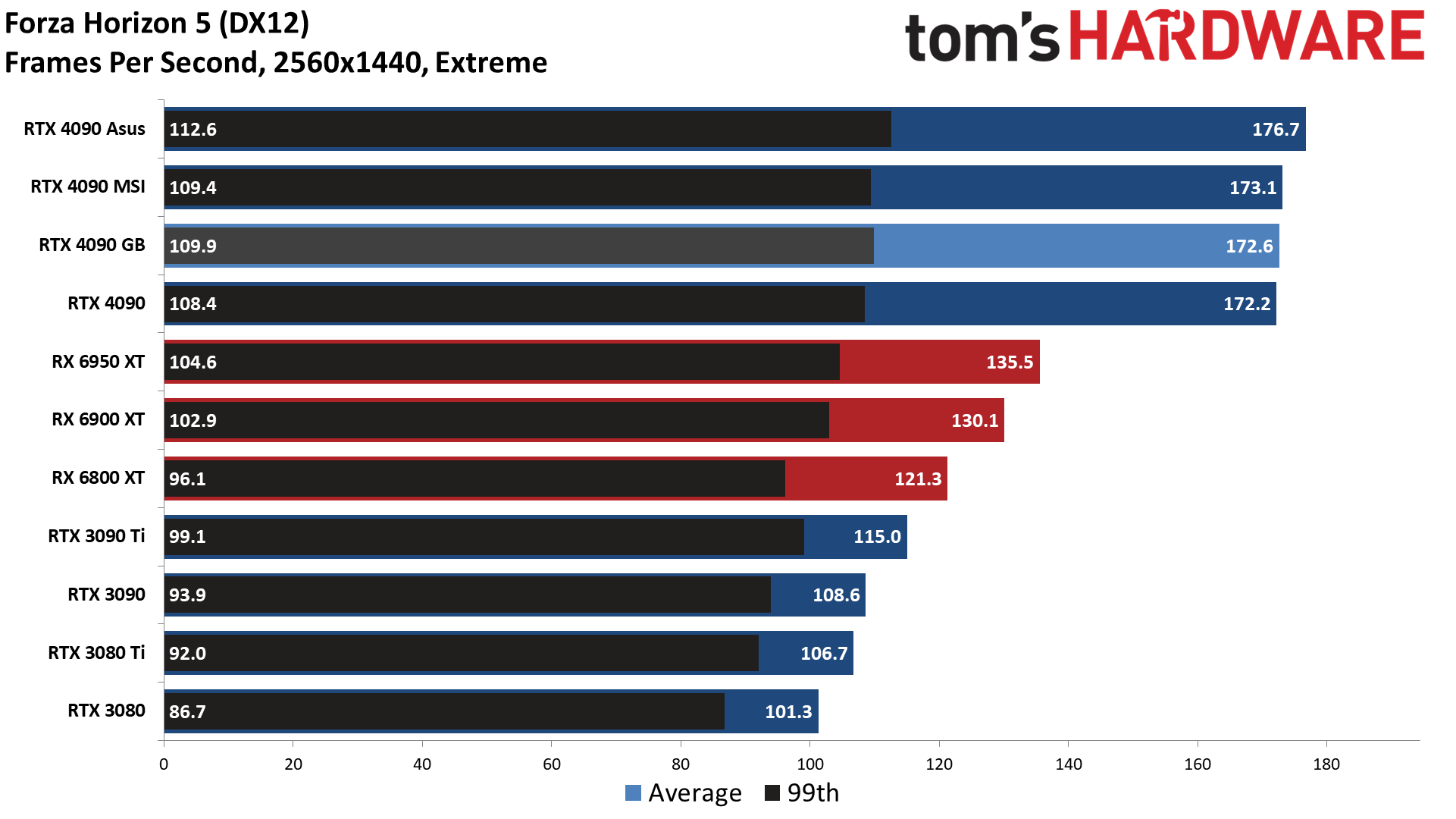

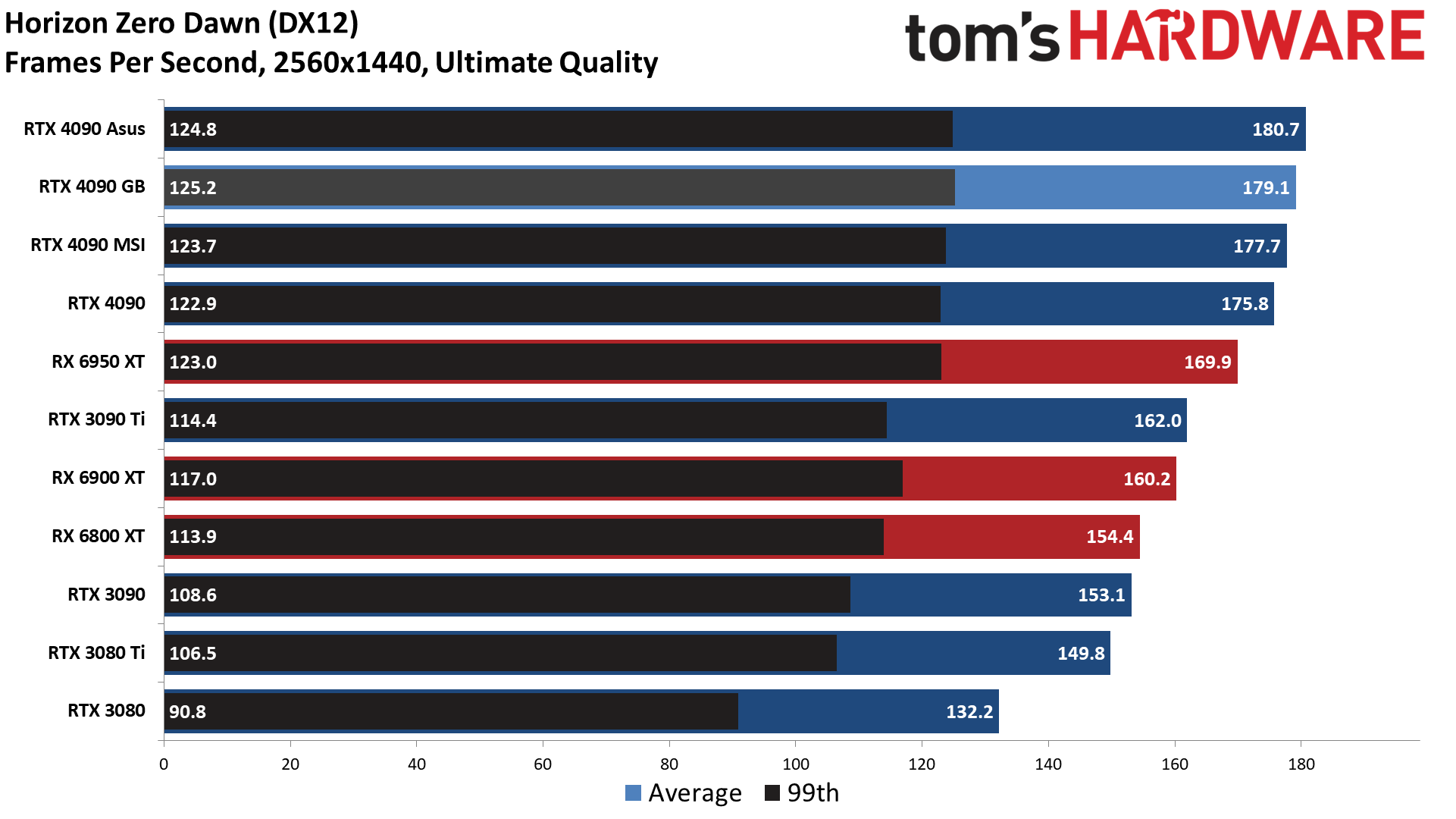

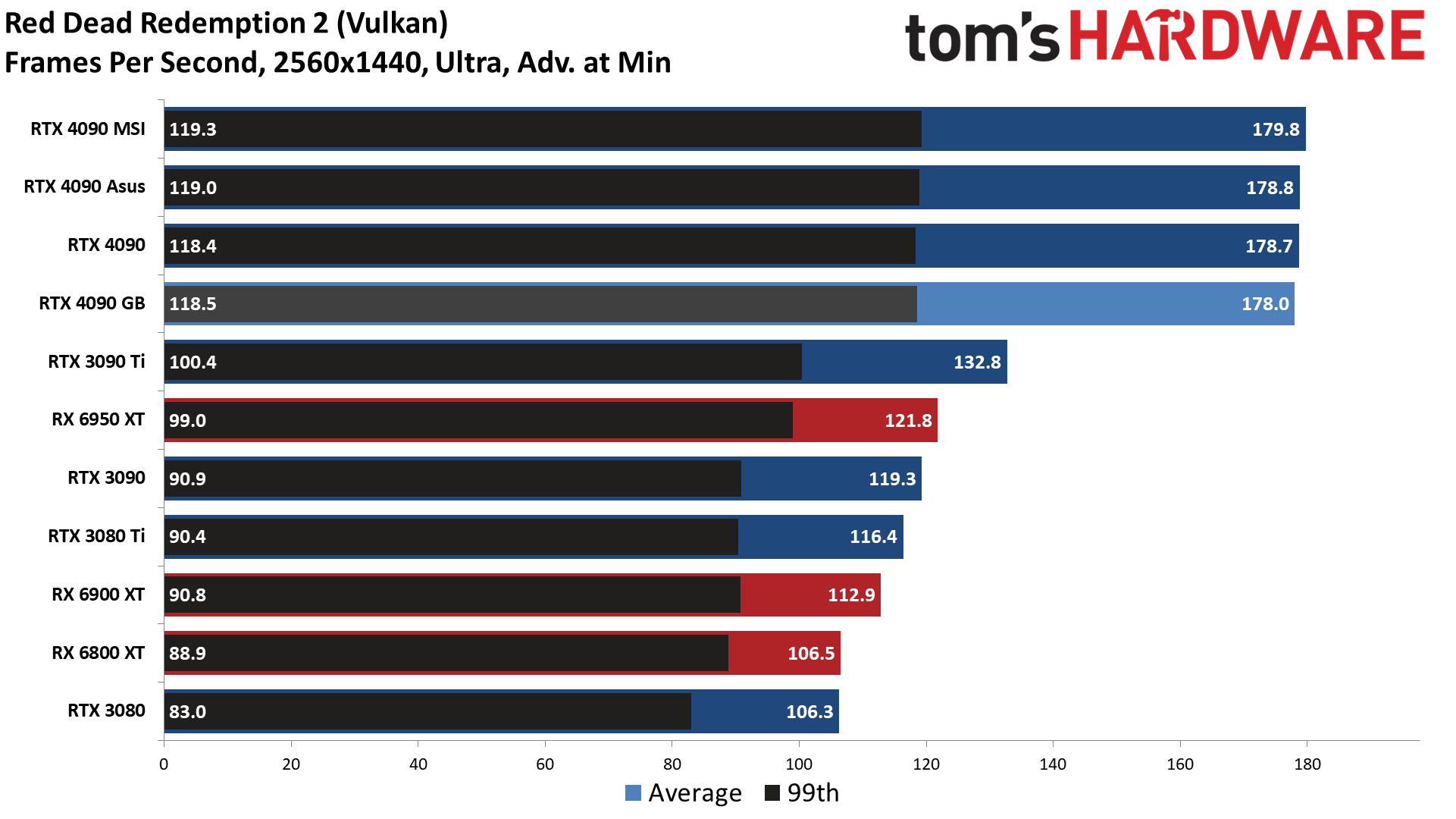

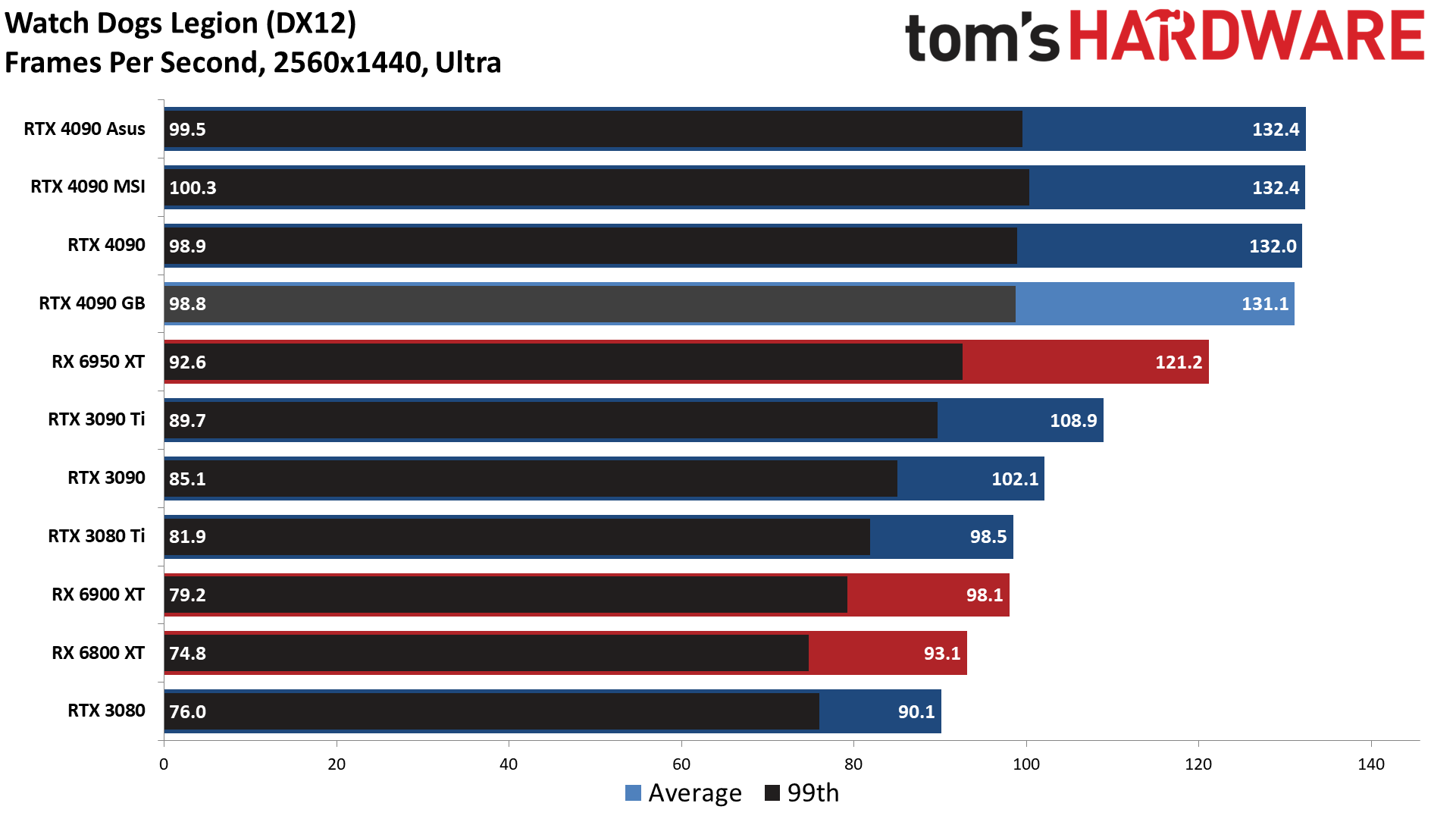

1440p Gaming Performance

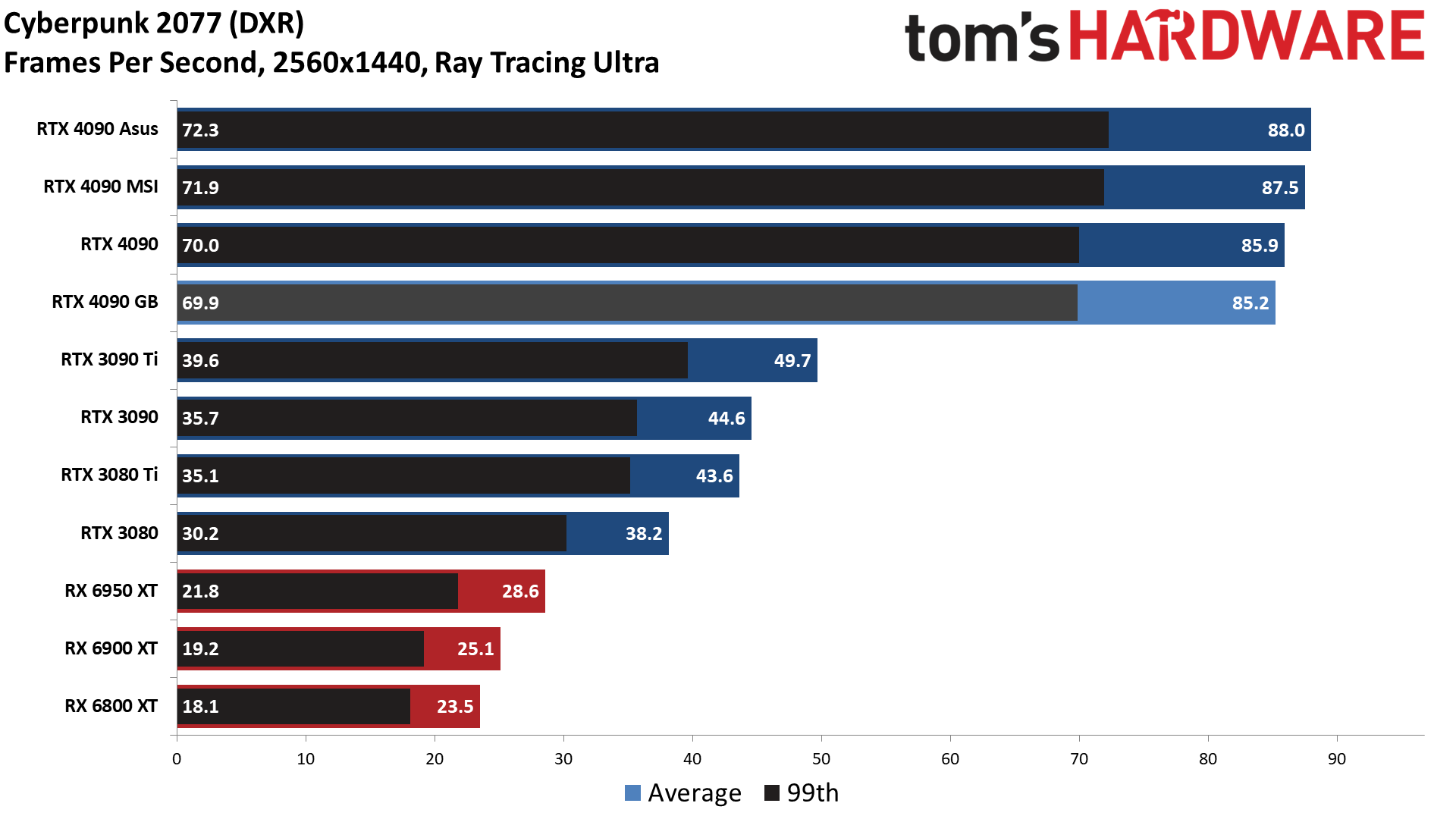

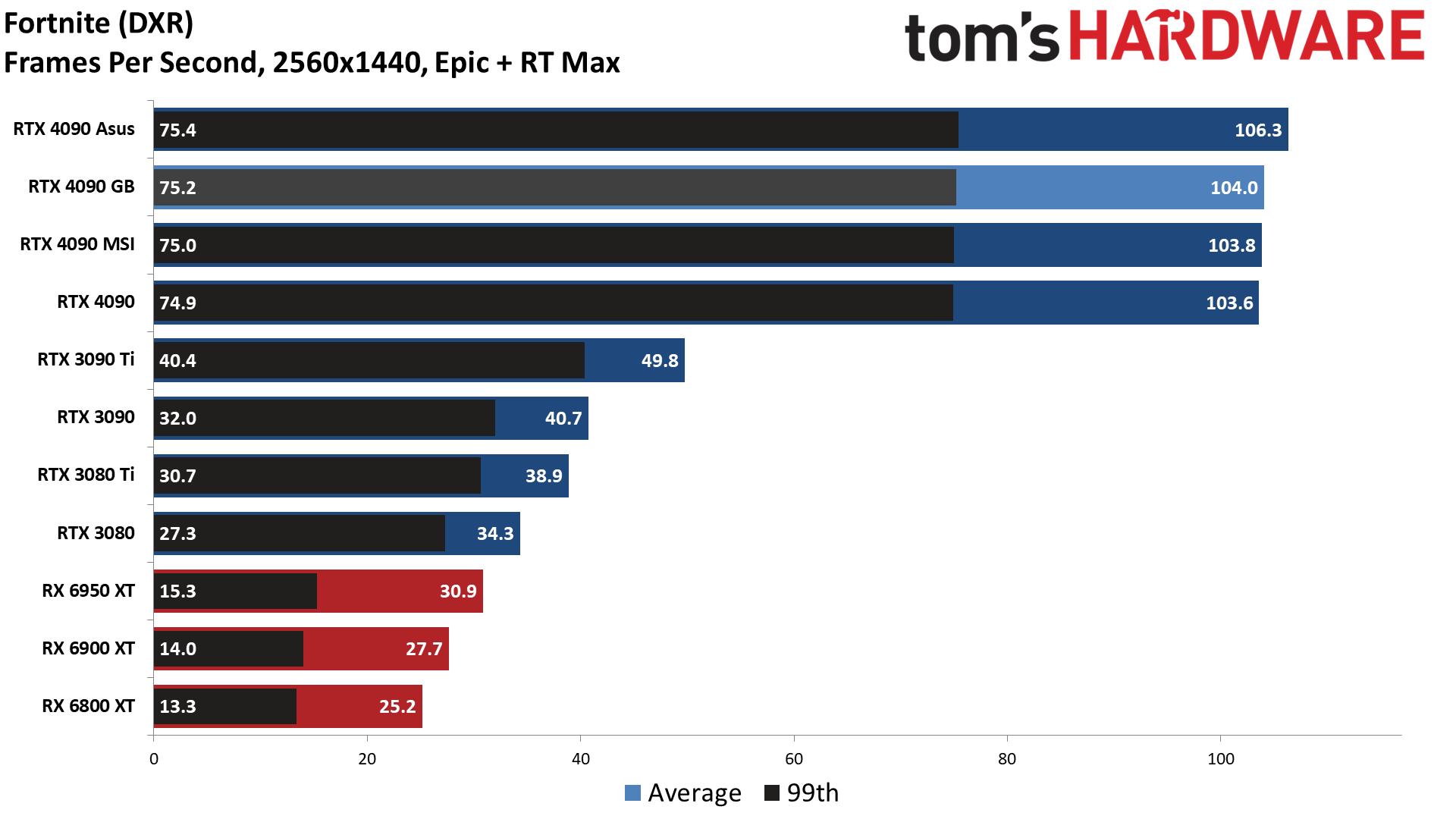

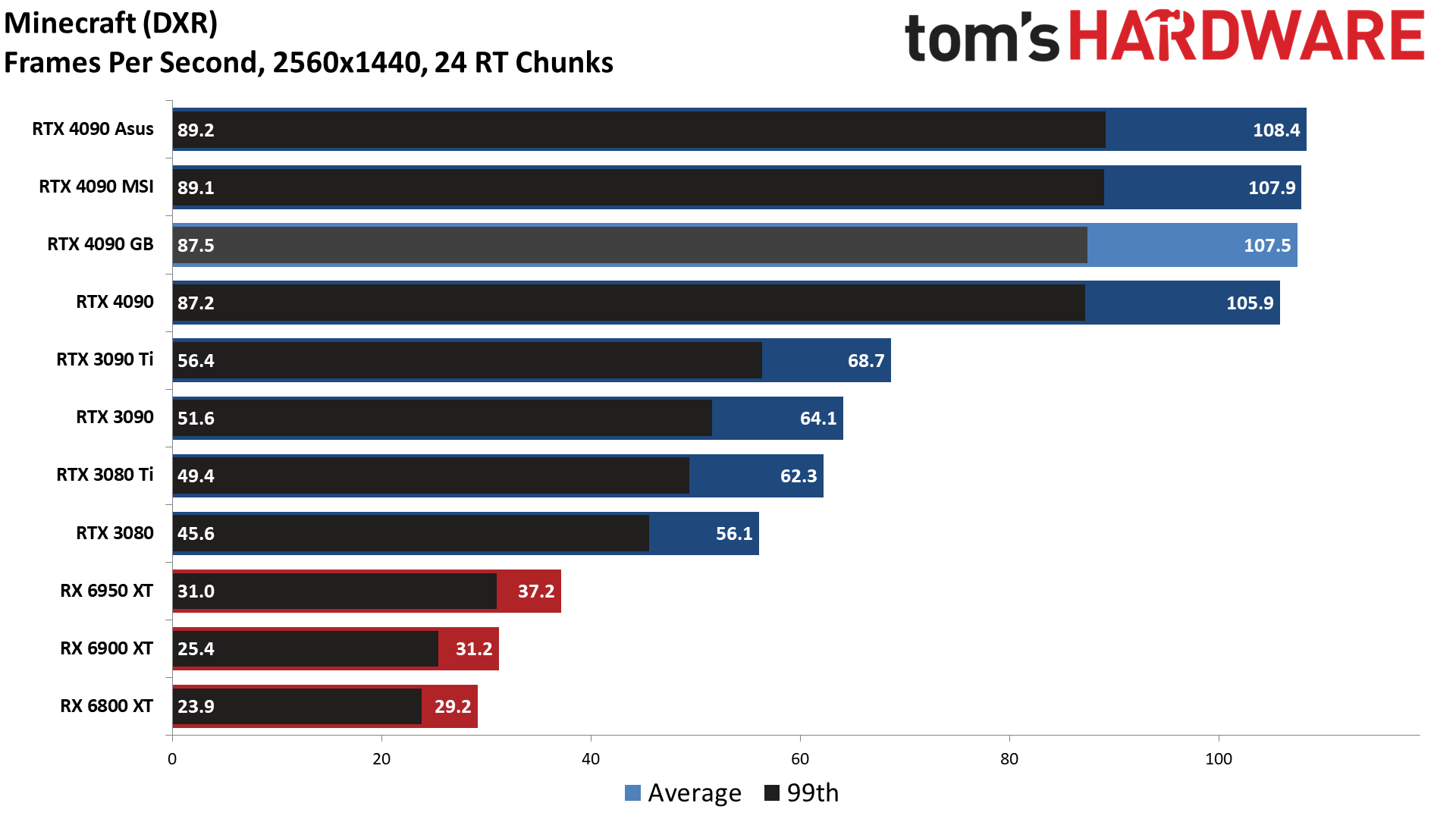

Moving on to our 1440p testing, frame rates improve by 29% compared to 4K ultra in our standard test suite, and by 86% in our DXR suite. That tells us even 4K ultra isn't enough to make our standard suite fully GPU limited, which matches the fact that overclocking did less to boost performance there than in the DXR suite.

Also note that with the RTX 3090 Ti, 1440p was 51% faster than 4K in our standard suite, while DXR performance improved by 95%. A faster CPU like the Core i9-13900K might reduce the CPU bottleneck a bit, so keep that in mind if you want to be sure you're squeezing every possible frame out of your expensive GPU. CPU performance becomes less of a factor on slower GPUs, but with extreme options like the 4090, you'll want the fastest CPU you can get.

The 1440p tests also hint that perhaps something else is to blame for the slightly lower-than-reference 4K standard results. At 1440p ultra, Gigabyte does come out just a touch ahead of the Founders Edition in both test suites — not by any meaningful amount, but a tie with a minuscule lead does align with the 15 MHz factory overclock.

Test Setup for Gigabyte RTX 4090 Gaming OC

We updated our GPU test PC and gaming suite in early 2022, and we'll continue to use the same hardware for a while longer. AMD's Ryzen 9 7950X and Intel's Core i9-13900K are a bit faster than our current test CPU, and either would definitely make more of a difference at 1080p. Still, we're using XMP for a modest boost to performance, and the GPU generally becomes the limiting factor at 4K.

Our CPU sits in an MSI Pro Z690-A DDR4 WiFi motherboard, with DDR4-3600 memory — a nod to sensibility rather than outright maximum performance. We also upgraded to Windows 11 and are now running the latest 22H2 version (with VBS and HVCI disabled) to ensure we get the most out of Alder Lake. You can see the rest of the hardware in the boxout.



Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


cknobman: Between pricing, power, things melting, I want nothing to do with this gen of Nvidia cards.

I'll wait to see what AMD has, and if they suck too then I will just wait for next gen or pricing to drop like a rock.

Roland Of Gilead:

> cknobman said: Between pricing, power, things melting, I want nothing to do with this gen of Nvidia cards.
>
> I'll wait to see what AMD has, and if they suck too then I will just wait for next gen or pricing to drop like a rock.

Have to agree with you! This chase for max performance is not something I'm interested in. Same with current gen CPUs. Too much power draw.

It's almost cyclical. A good few years ago, power requirements went up dramatically for both GPUs and CPUs, and then dialled back a little with more efficient designs. Seems like we're here again. Hopefully the next gen or two will dial back power consumption to more reasonable levels, while maintaining a decent leap in performance over the previous gen. Especially where we are right now in a global sense: cost of living, utilities, and an ever-worsening climate situation. Electricity/gas costs a bomb here in Europe. These GPUs just don't make sense for most people.

RodroX: @JarredWaltonGPU with the melting adapter and native cable issues, you Sir are a very brave man to go ahead and OC one of these beasts!

mrv_co: I guess I'm glad these cards exist and continue to push the performance envelope, but I just never made the mental leap to need or want a 'flagship' GPU (much less an even larger case and even more power hungry power supply)... especially since, having had full-tower and mid-tower cases over the years, I transitioned to a SFF case that is cool, quiet and fits neatly under my desk, while realizing that I'm perfectly happy with my ultra wide QHD display.

JarredWaltonGPU:

> RodroX said: @JarredWaltonGPU with the melting adapter and native cable issues, you Sir are a very brave man to go ahead and OC one of these beasts!

What's the worst that can happen? It's not like I leave the PC running and walk away for hours. If it melts, that would be sort of awesome and I could do an article on how I caused a 4090 to melt! LOL

I still suspect there's a better than reasonable chance Jonny Guru is right and that a few people didn't properly insert the connector. There's a lot of current running through there. Manufacturing defects are the only other real possibility. Given we never heard of people melting RTX 3090 or 3090 Ti 12-pin connections, and that the extra four sense pins make it a bit more difficult to fully insert the 16-pin connector, that seems the most likely option.

But until we have some official statement of cause and cure, it really muddies the RTX 40-series waters!

junglist724:

> mrv_co said: I guess I'm glad these cards exist and continue to push the performance envelope, but I just never made the mental leap to need or want a 'flagship' GPU (much less an even larger case and even more power hungry power supply)... especially since, having had full-tower and mid-tower cases over the years, I transitioned to a SFF case that is cool, quiet and fits neatly under my desk, while realizing that I'm perfectly happy with my ultra wide QHD display.

I've been sticking flagship CPUs and GPUs into SFF cases for a couple generations now. The 4090FE still fits into some SFF cases and should work well in ones that take good advantage of flow-through coolers. The C4-SFX (if it ever actually releases) looks like it would work well even in an air-cooled build with a 7950X/13900K + 4090FE. Use an SX1000 PSU plus a 90 or 180 degree 12VHPWR adapter and powering it should be fine.

Neilbob: Trying hard ... not ... to be ... grumpy old ... misery guts ... ack ...

Subjective: Quantity and/or quality of RGB a matter of total irrelevance to some people.

Pained gasping for breath

(Has no intention of ever letting one of these near the electricity bills. Not sure why the need was felt to comment at all).