Tom's Hardware Verdict

Titan RTX is the right card for the right customer. It's a no-brainer if you're working with large geometry models, training neural networks with large batch sizes, or inferencing trained networks using frameworks like TensorRT with support for the hardware's features. Gaming on Titan RTX doesn't make as much sense when you could have two GeForce RTX 2080 Tis for a similar price.

Pros

- 24GB of memory is ideal for large professional and deep learning workloads
- Improved Tensor cores benefit inferencing performance specifically
- Excellent 4K gaming frame rates
- NVLink support (which Titan V lacks)
- Attractive design

Cons

- $2,500 price limits appeal to professionals with deep pockets
- Axial fan design exhausts (a lot of) heat into your case
- Poor FP64 capabilities compared to Titan V


Nvidia Titan RTX Review

The Titan RTX launch was decidedly unceremonious. Members of the tech press knew that the card was coming but didn’t receive one to test. Nvidia undoubtedly knew its message would be obscured by comparisons drawn between Titan RTX and the other TU102-based card, GeForce RTX 2080 Ti, in games. Based on a complete TU102 processor, Titan RTX was bound to be faster than the GeForce in all benchmarks, regardless of discipline. However, its eye-watering $2,500 price tag would be difficult to justify for entertainment alone.

But even as gamers ponder the effect of an extra four Streaming Multiprocessors on their frame rates, we all know that Titan RTX wasn’t intended for those folks. Of course, we’re still going to run it through our suite of game benchmarks. However, Nvidia says this card was designed for “AI researchers, deep learning developers, data scientists, content creators, and artists.”

Participants in those segments with especially deep pockets often spring for Tesla-based platforms or Quadro cards sporting certified drivers. Those aren’t always necessary, though. As a result, “cost-sensitive” professionals working in smaller shops find themselves somewhere in the middle, needing more than a GeForce but unable to spend $6,300 on an equivalent Quadro RTX 6000.

Out of necessity, our test suite is expanding to include professional visualization and deep learning metrics, in addition to the power consumption analysis we like to perform. Get ready for a three-way face-off between Titan RTX, Titan V, and Titan Xp (with a bit of GeForce RTX 2080 Ti sprinkled in).

Meet Titan RTX: It Starts With A Complete TU102

The Tom’s Hardware audience should be well-acquainted with Nvidia’s TU102 GPU by now: it’s the engine at the heart of GeForce RTX 2080 Ti, composed of 18.6 billion transistors, and measuring 754 square millimeters.

As it appears in the 2080 Ti, though, TU102 features 68 active Streaming Multiprocessors. Four of the chip’s 72 are turned off. One of its 32-bit memory controllers is also disabled, taking eight ROPs and 512KB of L2 cache with it.

Titan RTX is based on the same processor, but with every block active. That means the card boasts a GPU with 72 SMs, 4,608 CUDA cores, 576 Tensor cores, 72 RT cores, 288 texture units, and 36 PolyMorph engines.
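The totals above follow from multiplying the SM count by the resources inside each SM. The short sketch below shows that arithmetic. The per-SM constants are assumptions taken from Nvidia's published Turing figures, not from this review, and `tu102_resources` is a hypothetical helper name.

```python
# Per-SM resource counts for Turing (assumed from Nvidia's published
# architecture figures; they are not stated in the review itself).
CUDA_CORES_PER_SM = 64
TENSOR_CORES_PER_SM = 8
RT_CORES_PER_SM = 1
TEXTURE_UNITS_PER_SM = 4
SMS_PER_POLYMORPH_ENGINE = 2  # assumed: one PolyMorph engine per pair of SMs


def tu102_resources(active_sms: int) -> dict:
    """Scale the per-SM counts up to a whole GPU with `active_sms` SMs."""
    return {
        "cuda_cores": active_sms * CUDA_CORES_PER_SM,
        "tensor_cores": active_sms * TENSOR_CORES_PER_SM,
        "rt_cores": active_sms * RT_CORES_PER_SM,
        "texture_units": active_sms * TEXTURE_UNITS_PER_SM,
        "polymorph_engines": active_sms // SMS_PER_POLYMORPH_ENGINE,
    }


titan_rtx = tu102_resources(72)    # fully enabled TU102
rtx_2080_ti = tu102_resources(68)  # four SMs disabled

for name, count in titan_rtx.items():
    print(f"{name}: {count} (2080 Ti: {rtx_2080_ti[name]})")
```

Under these assumptions, 72 SMs reproduce the Titan RTX figures quoted above, and 68 SMs reproduce the GeForce RTX 2080 Ti's 4,352 CUDA cores.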

Not only does Titan RTX sport more CUDA cores than GeForce RTX 2080 Ti, it also offers a higher GPU Boost clock rating (1,770 MHz vs. 1,635 MHz). As such, its peak single-precision rate increases to 16.3 TFLOPS.
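As a rough check on that number, peak single-precision throughput is usually estimated as CUDA cores times two floating-point operations per clock (one fused multiply-add) times the boost clock. The sketch below applies that rule of thumb. The function name is hypothetical, and the two-FLOPs-per-clock factor is the conventional assumption rather than something the review states.

```python
def peak_fp32_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Estimate peak FP32 rate, assuming one FMA (2 FLOPs) per core per clock."""
    flops_per_second = cuda_cores * 2 * boost_mhz * 1e6
    return flops_per_second / 1e12


titan_rtx_fp32 = peak_fp32_tflops(4608, 1770)
rtx_2080_ti_fp32 = peak_fp32_tflops(4352, 1635)

print(f"Titan RTX:           {titan_rtx_fp32:.1f} TFLOPS")
print(f"GeForce RTX 2080 Ti: {rtx_2080_ti_fp32:.1f} TFLOPS")
```

Both results assume the GPU sustains its rated GPU Boost frequency, so they are ceilings rather than measured rates.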

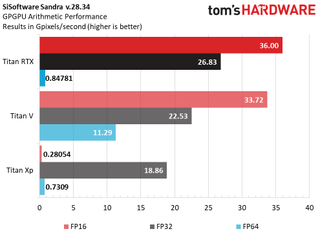

Each SM does contain a pair of FP64-capable CUDA cores as well, yielding a double-precision rate that’s 1/32 of TU102’s FP32 performance, or 0.51 TFLOPS. This is one area where Titan RTX loses big to its predecessor. Titan V’s GV100 processor is better in the HPC space thanks to 6.9 TFLOPS peak FP64 performance (half of its single-precision rate). A quick run through SiSoftware’s Sandra GPGPU Arithmetic benchmark confirms Titan V’s strength, along with the mixed-precision support inherent to Turing and Volta, which Pascal lacks.
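The 1/32 ratio can be sketched from the per-SM core counts. The example below assumes 64 FP32 cores per SM (Nvidia's published Turing figure, not stated in this review), two FP64 cores per SM as described above, and one fused multiply-add per core per clock. The names are hypothetical.

```python
from fractions import Fraction

FP32_CORES_PER_SM = 64  # assumed from Nvidia's Turing documentation
FP64_CORES_PER_SM = 2   # "a pair of FP64-capable CUDA cores" per SM


def peak_fp64_tflops(active_sms: int, boost_mhz: float) -> float:
    """Estimate peak FP64 rate, assuming one FMA (2 FLOPs) per core per clock."""
    return active_sms * FP64_CORES_PER_SM * 2 * boost_mhz * 1e6 / 1e12


fp64_to_fp32_ratio = Fraction(FP64_CORES_PER_SM, FP32_CORES_PER_SM)
titan_rtx_fp64 = peak_fp64_tflops(72, 1770)

print(f"FP64:FP32 ratio = {fp64_to_fp32_ratio}")
print(f"Titan RTX peak FP64 = {titan_rtx_fp64:.2f} TFLOPS")
```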

The GPU’s GPCs are fed by 12 32-bit GDDR6 memory controllers, each attached to an eight-ROP cluster and 512KB of L2 cache, yielding an aggregate 384-bit memory bus, 96 ROPs, and a 6MB L2 cache. At the same 14 Gb/s data rate, the one extra memory controller buys Titan RTX about 9% more memory bandwidth than GeForce RTX 2080 Ti.
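The aggregate figures in the paragraph above can be reproduced from the per-controller numbers. This is a minimal sketch with a hypothetical `memory_subsystem` helper. It assumes peak bandwidth equals bus width times the per-pin data rate, divided by eight bits per byte.

```python
def memory_subsystem(controllers: int, data_rate_gbps: float = 14.0) -> dict:
    """Aggregate bus width, ROPs, L2, and bandwidth from active 32-bit controllers."""
    bus_width_bits = controllers * 32
    return {
        "bus_width_bits": bus_width_bits,
        "rops": controllers * 8,                # eight ROPs per controller
        "l2_cache_mb": controllers * 512 / 1024,  # 512KB of L2 per controller
        "bandwidth_gb_s": bus_width_bits * data_rate_gbps / 8,
    }


titan_rtx_mem = memory_subsystem(12)    # all controllers active
rtx_2080_ti_mem = memory_subsystem(11)  # one controller disabled

advantage = titan_rtx_mem["bandwidth_gb_s"] / rtx_2080_ti_mem["bandwidth_gb_s"] - 1
print(titan_rtx_mem)
print(rtx_2080_ti_mem)
print(f"Titan RTX bandwidth advantage: {advantage:.1%}")
```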

|  | Titan RTX | GeForce RTX 2080 Ti FE | Titan V | Titan Xp |
| --- | --- | --- | --- | --- |
| Architecture (GPU) | Turing (TU102) | Turing (TU102) | Volta (GV100) | Pascal (GP102) |
| CUDA Cores | 4608 | 4352 | 5120 | 3840 |
| Peak FP32 Compute | 16.3 TFLOPS | 14.2 TFLOPS | 14.9 TFLOPS | 12.1 TFLOPS |
| Tensor Cores | 576 | 544 | 640 | N/A |
| RT Cores | 72 | 68 | N/A | N/A |
| Texture Units | 288 | 272 | 320 | 240 |
| Base Clock Rate | 1,350 MHz | 1,350 MHz | 1,200 MHz | 1,404 MHz |
| GPU Boost Rate | 1,770 MHz | 1,635 MHz | 1,455 MHz | 1,582 MHz |
| Memory Capacity | 24GB GDDR6 | 11GB GDDR6 | 12GB HBM2 | 12GB GDDR5X |
| Memory Bus | 384-bit | 352-bit | 3,072-bit | 384-bit |
| Memory Bandwidth | 672 GB/s | 616 GB/s | 653 GB/s | 547.7 GB/s |
| ROPs | 96 | 88 | 96 | 96 |
| L2 Cache | 6MB | 5.5MB | 4.5MB | 3MB |
| TDP | 280W | 260W | 250W | 250W |
| Transistor Count | 18.6 billion | 18.6 billion | 21.1 billion | 12 billion |
| Die Size | 754 mm² | 754 mm² | 815 mm² | 471 mm² |
| SLI Support | Yes (x8 NVLink, x2) | Yes (x8 NVLink, x2) | No | Yes (MIO) |

Whereas GeForce RTX 2080 Ti Founders Edition utilizes Micron’s MT61K256M32JE-14:A modules, the company doesn’t have any 16Gb ICs in its parts catalog. Samsung, on the other hand, does offer a higher-density K4ZAF325BM-HC14 module with a 14 Gb/s data rate. Twelve of them give Titan RTX its 24GB capacity and 672 GB/s peak throughput.
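The capacity figure is a matter of chip count times chip density. The sketch below works through it, assuming one memory IC per 32-bit controller and an 8Gb density for the Micron parts on GeForce RTX 2080 Ti (inferred from the text's contrast with 16Gb ICs, not stated outright). The function name is hypothetical.

```python
def capacity_gb(chip_count: int, density_gbit: int) -> float:
    """Total capacity in gigabytes from chip count and per-chip density in gigabits."""
    return chip_count * density_gbit / 8


titan_rtx_capacity = capacity_gb(12, 16)   # twelve 16Gb Samsung ICs
rtx_2080_ti_capacity = capacity_gb(11, 8)  # eleven 8Gb Micron ICs (assumed density)

print(f"Titan RTX:           {titan_rtx_capacity:.0f}GB")
print(f"GeForce RTX 2080 Ti: {rtx_2080_ti_capacity:.0f}GB")
```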

Lots of extra memory, a GPU with more active resources, and faster clock rates necessitate a higher thermal design power rating. Whereas GeForce RTX 2080 Ti Founders Edition is specified at 260W, Titan RTX is a 280W card. That 20W increase is no problem at all for the pair of eight-pin auxiliary power connectors found along the top edge, nor is it a challenge for Nvidia’s power supply and thermal solution, both of which appear identical to those of its GeForce RTX 2080 Ti.
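To see why the extra 20W is comfortably covered, it helps to add up the power the card's inputs are rated to deliver. The sketch below assumes the standard PCI Express limits of 150W per eight-pin connector and 75W from the x16 slot. Those limits come from the PCIe specification rather than from this review, and the variable names are illustrative.

```python
# Rated power limits (assumed from the PCI Express electromechanical spec).
EIGHT_PIN_CONNECTOR_W = 150
PCIE_X16_SLOT_W = 75

TITAN_RTX_TDP_W = 280
RTX_2080_TI_FE_TDP_W = 260

available_w = 2 * EIGHT_PIN_CONNECTOR_W + PCIE_X16_SLOT_W
headroom_w = available_w - TITAN_RTX_TDP_W
tdp_increase_w = TITAN_RTX_TDP_W - RTX_2080_TI_FE_TDP_W

print(f"Rated input power: {available_w}W")
print(f"Headroom over Titan RTX's TDP: {headroom_w}W")
print(f"TDP increase over 2080 Ti FE: {tdp_increase_w}W")
```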

Like the 2080 Ti Founders Edition, we count three phases for Titan RTX’s GDDR6 memory and a corresponding PWM controller up front. A total of 13 phases remain. Five phases are fed by the eight-pin connectors and doubled. With two control loops per phase, that makes 5*2=10 voltage regulation circuits. The remaining three phases to the left of the GPU are fed by the motherboard's PCIe slot and not doubled. That gives us Nvidia's lucky number 13 (along with a smart load distribution scheme). Of course, implementing all of this well requires the right components...
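The phase count described above can be tallied in a few lines. This is only a bookkeeping sketch of the numbers given in the text, and the variable names are illustrative.

```python
# GPU power phases as described in the text.
doubled_phases = 5          # fed by the eight-pin connectors
control_loops_per_phase = 2
slot_fed_phases = 3         # fed by the PCIe slot, not doubled

gpu_circuits = doubled_phases * control_loops_per_phase + slot_fed_phases

# The GDDR6 memory gets its own three phases from a separate controller.
memory_phases = 3
total_circuits = gpu_circuits + memory_phases

print(f"GPU voltage regulation circuits: {gpu_circuits}")
print(f"Including memory phases: {total_circuits}")
```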

Front and center in this design is uPI's uP9512 eight-phase buck controller specifically designed to support next-gen GPUs. Per uPI, "the uP9512 provides programmable output voltage and active voltage positioning functions to adjust the output voltage as a function of the load current, so it is optimally positioned for a load current transient."

The uP9512 supports Nvidia's Open Voltage Regulator Type 4i+ technology with PWMVID. This input is buffered and filtered to produce a very accurate reference voltage. The output voltage is then precisely controlled to the reference input. An integrated SMBus interface offers enough flexibility to optimize performance and efficiency, while also facilitating communication with the appropriate software. All 13 voltage regulation circuits are equipped with an ON Semiconductor FDMF3170 Smart Power Stage module with integrated PowerTrench MOSFETs and driver ICs.

Samsung’s K4ZAF325BM-HC14 memory ICs are powered by three phases coming from a second uP9512. The same FDMF3170 Smart Power Stage modules crop up yet again. The 470mH coils offer greater inductance than the ones found on the GPU power phases, but they're completely identical in terms of physical dimensions.

Under the hood, Titan RTX’s thermal solution is also the same as what we found on GeForce RTX 2080 Ti. A full-length vapor chamber covers the PCB and is topped with an aluminum fin stack. A shroud over the heat sink houses two 8.5cm axial fans with 13 blades each. These fans blow through the fins and exhaust waste heat out the card’s top and bottom edges. Although we don’t necessarily like that Nvidia recirculates hot air with its Turing-based reference coolers, their performance is admittedly superior to older blower-style configurations.

Titan RTX’s backplate isn't just for looks. Nvidia incorporates the plate into its cooling concept by sandwiching thermal pads between the metal and PCB, behind where you'd find memory modules up front. The company probably could have done without the pad under TU102, though: our measurements showed no difference when we removed it.

It should come as no surprise that the same PCB, same components, same cooler, and same dimensions yield a card weighing the same as GeForce RTX 2080 Ti, too. Our Titan RTX registers 2lb. 14.6 oz. (1.322kg) on a scale. Even the sticker on the back of Titan RTX says GeForce RTX 2080 Ti. Nvidia differentiates the card aesthetically with a matte gold (rather than silver) finish, with polished gold accents around the fans and shroud. A shiny gold Titan logo on the top edge glows white under power.

How We Tested Titan RTX

Benchmarking Titan RTX is a bit more complex than benchmarking a typical gaming graphics card. Because we ran professional visualization, deep learning, and gaming workloads, we ended up utilizing multiple machines to satisfy the requirements of those disparate applications.

To facilitate deep learning testing, we set up a Core i7-8086K (6C/12T) CPU on a Gigabyte Z370 Aorus Ultra Gaming motherboard. All four of its memory slots were populated with 16GB Corsair Vengeance LPX modules at DDR4-2400, giving us 64GB total. Then, we loaded Ubuntu 18.04 LTS onto a 1.6TB Intel SSD DC P3700. Titan RTX is compared to Titan V, Titan Xp, and GeForce RTX 2080 Ti in these benchmarks.

For professional visualization, we set up a Core i7-8700K (6C/12T) CPU on an MSI Z370 Gaming Pro Carbon AC motherboard. Again, all four of its memory slots were populated with 16GB Corsair Vengeance LPX modules at DDR4-2400, giving us 64GB total. Then, we loaded Windows 10 Professional onto a 1.2TB Intel SSD 750-series drive. Incidentally, this is the same system running our Powenetics software for power consumption measurement. Titan RTX is compared to Titan V, Titan Xp, and GeForce RTX 2080 Ti in these benchmarks.

Finally, we collect gaming results on the same Core i7-7700K used in previous reviews. It populates an MSI Z170 Gaming M7 motherboard, which also hosts G.Skill’s F4-3000C15Q-16GRR memory kit. Crucial’s MX200 SSD remains, joined by a 1.6TB Intel DC P3700 loaded down with games. Titan RTX is compared to Gigabyte’s Aorus GeForce RTX 2080 Ti Xtreme 11G, the GeForce RTX 2080 Ti Founders Edition, Titan V, GeForce RTX 2080, GeForce RTX 2070, GeForce GTX 1080 Ti, Titan X, GeForce GTX 1070 Ti, GeForce GTX 1070, Radeon RX Vega 64, and Radeon RX Vega 56 in these benchmarks.

All of those cards are tested at 2560x1440 and 3840x2160 in Ashes of the Singularity: Escalation, Battlefield V, Destiny 2, Far Cry 5, Forza Motorsport 7, Grand Theft Auto V, Metro: Last Light Redux, Rise of the Tomb Raider, Tom Clancy’s The Division, Tom Clancy’s Ghost Recon Wildlands, The Witcher 3, and Wolfenstein II: The New Colossus.

We invited AMD to submit a more compute-oriented product for our deep learning and workstation testing. However, company representatives didn’t respond after initially looking into the request. Fortunately, we should have Radeon VII to test very soon.

The testing methodology we're using comes from PresentMon: Performance In DirectX, OpenGL and Vulkan. In short, these games are evaluated using a combination of OCAT and our own in-house GUI for PresentMon, with logging via GPU-Z.
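Frame-time logs of this kind are typically reduced to a handful of summary numbers. The sketch below shows one common way to do that, given a list of per-frame times in milliseconds. It is an illustration only, not our in-house tooling: the function names, the nearest-rank percentile method, and the sample data are all assumptions.

```python
import math


def average_fps(frame_times_ms: list) -> float:
    """Average frame rate: frames rendered divided by total elapsed time."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def percentile_frame_time(frame_times_ms: list, percentile: float) -> float:
    """Nearest-rank percentile of frame time (higher values mean slower frames)."""
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(percentile / 100.0 * len(ordered)))
    return ordered[rank - 1]


# Hypothetical sample: three quick frames and one long stutter.
sample_ms = [10.0, 10.0, 20.0, 40.0]

print(f"Average FPS: {average_fps(sample_ms):.1f}")
print(f"99th percentile frame time: {percentile_frame_time(sample_ms, 99):.1f} ms")
```

Reporting a high-percentile frame time alongside the average exposes stutter that an average frame rate alone would hide.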

As we generate new data, we’re using the latest drivers. For Linux, that meant using 415.18 for Titan RTX and Titan V, and then 410.93 for Titan Xp. Under Windows 10, we went with Nvidia’s 417.26 press driver for Titan RTX, 417.22 for Gigabyte’s card, 416.33 for GeForce RTX 2070, and 411.51 for 2080/2080 Ti FE. Older Pascal-based boards were tested with build 398.82. Titan V’s results were spot-checked with 411.51 to ensure performance didn’t change. AMD’s cards utilize Crimson Adrenalin Edition 18.8.1 (except for the Battlefield V and Wolfenstein tests, which are tested with Adrenalin Edition 18.11.2).

Special thanks to Noctua for sending over a batch of NH-D15S heat sinks with fans. These top all three systems, giving us consistency in effective, quiet cooling.


AgentLozen: This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.

Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

bloodroses:

> 21719532 said: This is a horrible video card for gaming at this price point but when you paint this card in the light of workstation graphics, the price starts to become more understandable.
>
> Nvidia should have given this a Quadro designation so that there is no confusion what this thing is meant for.

True, but the 'Titan' designation was more so designated for super computing, not gaming. They just happen to game well. Quadro is designed for CAD uses, with ECC VRAM and driver support being the big difference over a Titan. There is quite a bit of crossover that does happen each generation though, to where you can sometimes 'hack' a Quadro driver onto a Titan

https://www.reddit.com/r/nvidia/comments/a2vxb9/differences_between_the_titan_rtx_and_quadro_rtx/

madks: Is it possible to put more training benchmarks? Especially for Recurrent Neural Networks (RNN). There are many forecasting models for weather, stock market etc. And they usually fit in less than 4GB of vram.

Inference is less important, because a model could be deployed on a machine without a GPU or even an embedded device.

mdd1963:

> 21720515 said: Just buy it!!!

Would not buy it at half of it's cost either, so...

:)

The Tom's summary sounds like Nviidia payed for their trip to Bangkok and gave them 4 cards to review....plus gave $4k 'expense money' :)

alextheblue: So the Titan RTX has roughly half the FP64 performance of the Vega VII. The same Vega VII that Tom's had a news article (that was NEVER CORRECTED) that bashed it for "shipping without double precision" and then later erroneously listed the FP64 rate at half the actual rate? Nice to know.

https://www.tomshardware.com/news/amd-radeon-vii-double-precision-disabled,38437.html

There's a link to the bad news article, for posterity.