Samsung to launch AI chips to challenge Nvidia, others: 'Mach-1' chips to launch in early 2025

Samsung will enter the AI processor game next year.

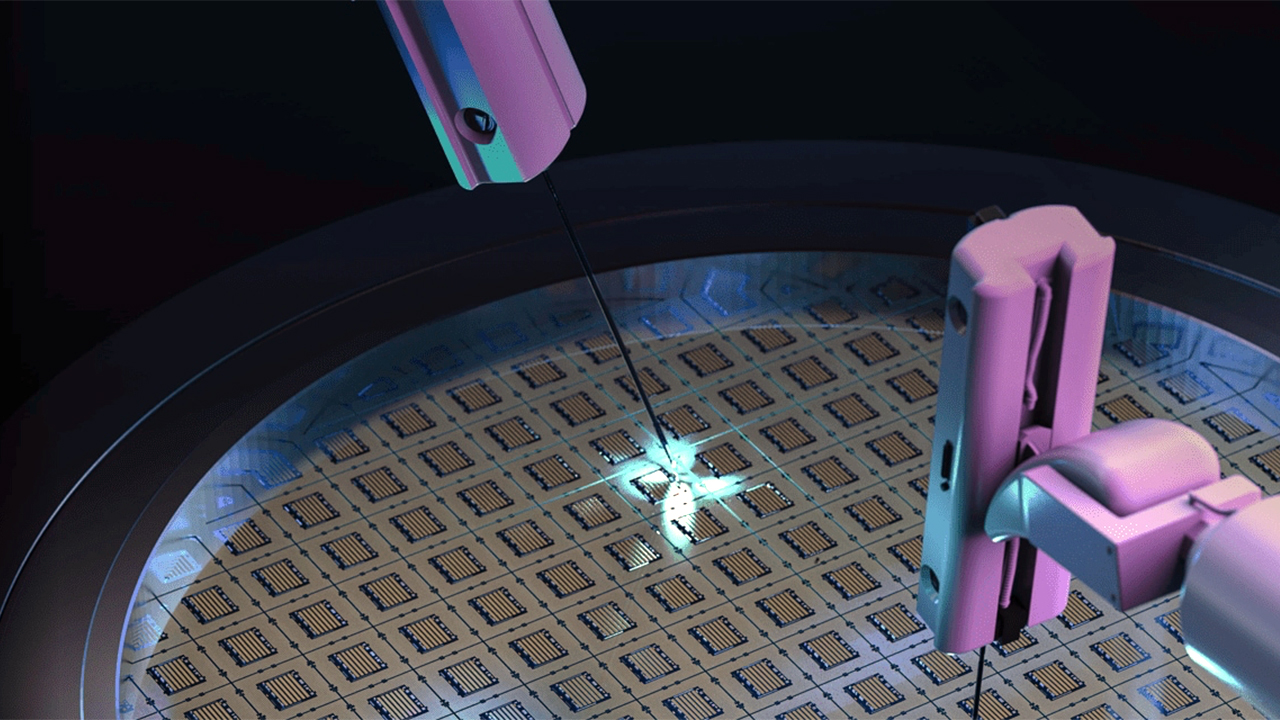

Samsung Electronics is gearing up to launch its own AI accelerator chip, the Mach-1, in early 2025, the company announced at its 55th annual shareholders' meeting, according to Sedaily. The move will put Samsung in competition with companies like Nvidia, but the South Korean company has no plans (at least for now) to rival Nvidia's ultra-high-end AI solutions, such as the H100, B100, or B200.

Samsung's Mach-1 is an AI inference accelerator based on an application-specific integrated circuit (ASIC) and equipped with LPDDR memory, which makes it particularly suitable for edge computing applications. The report claims that the Mach-1 has a unique feature that will dramatically reduce the memory bandwidth required for inference to about 0.125x (one-eighth) of what existing designs need. However, the report does not explain how this will be achieved, so take the claim with a grain of salt.

Kye Hyun Kyung, head of Samsung Electronics' Device Solutions Division, which oversees the global operations of the Memory, System LSI, and Foundry business units, said that the chip design had passed technological validation on field-programmable gate arrays (FPGAs) and that physical finalization of the system-on-chip (SoC) is currently underway. Kyung said the chip would be ready by the end of the year, allowing an AI system powered by the Mach-1 to launch early next year.

Samsung's Mach-1 is not designed to compete against high-performance AI processors such as AWS Trainium or the Nvidia H100, but it will certainly compete against other inference-oriented solutions, including AWS Inferentia. Samsung will most likely position the Mach-1 primarily for edge applications that require low power consumption, small dimensions, and low cost, which is where it can probably excel.

According to Kye Hyun Kyung, Samsung is not only developing the Mach-1 chip but is also actively involved in broader AI semiconductor development efforts. The company has established a lab in Silicon Valley dedicated to artificial general intelligence (AGI) research, signaling its commitment to becoming a key player in the future of AI technology. The lab's mission is to create new types of processors and memory that meet the processing requirements of future AGI systems.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Pierce2623: Well, it’s become abundantly clear that anyone can slap some matrix cores together, but I’d imagine software compatibility is what will sell these things.