
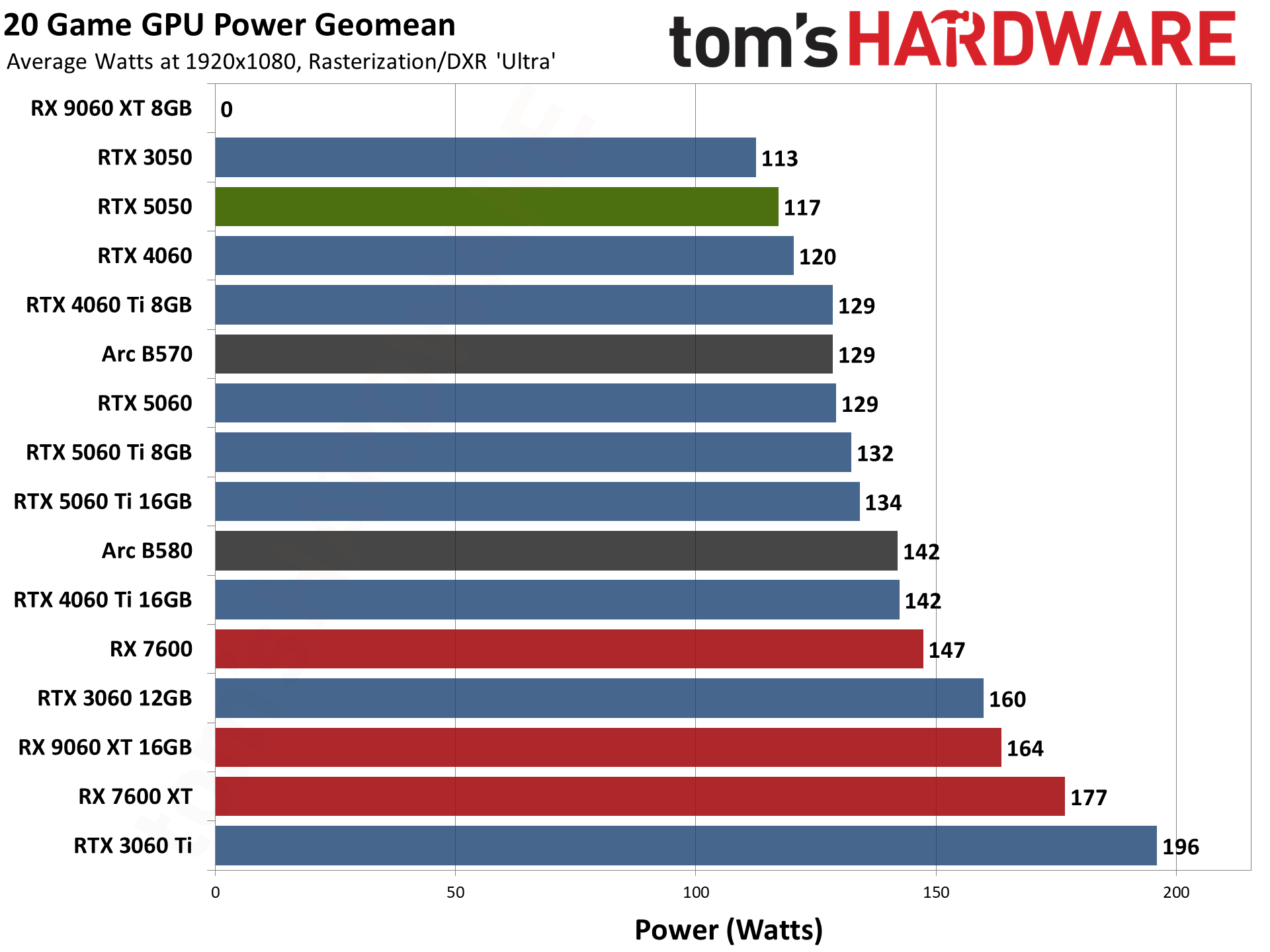

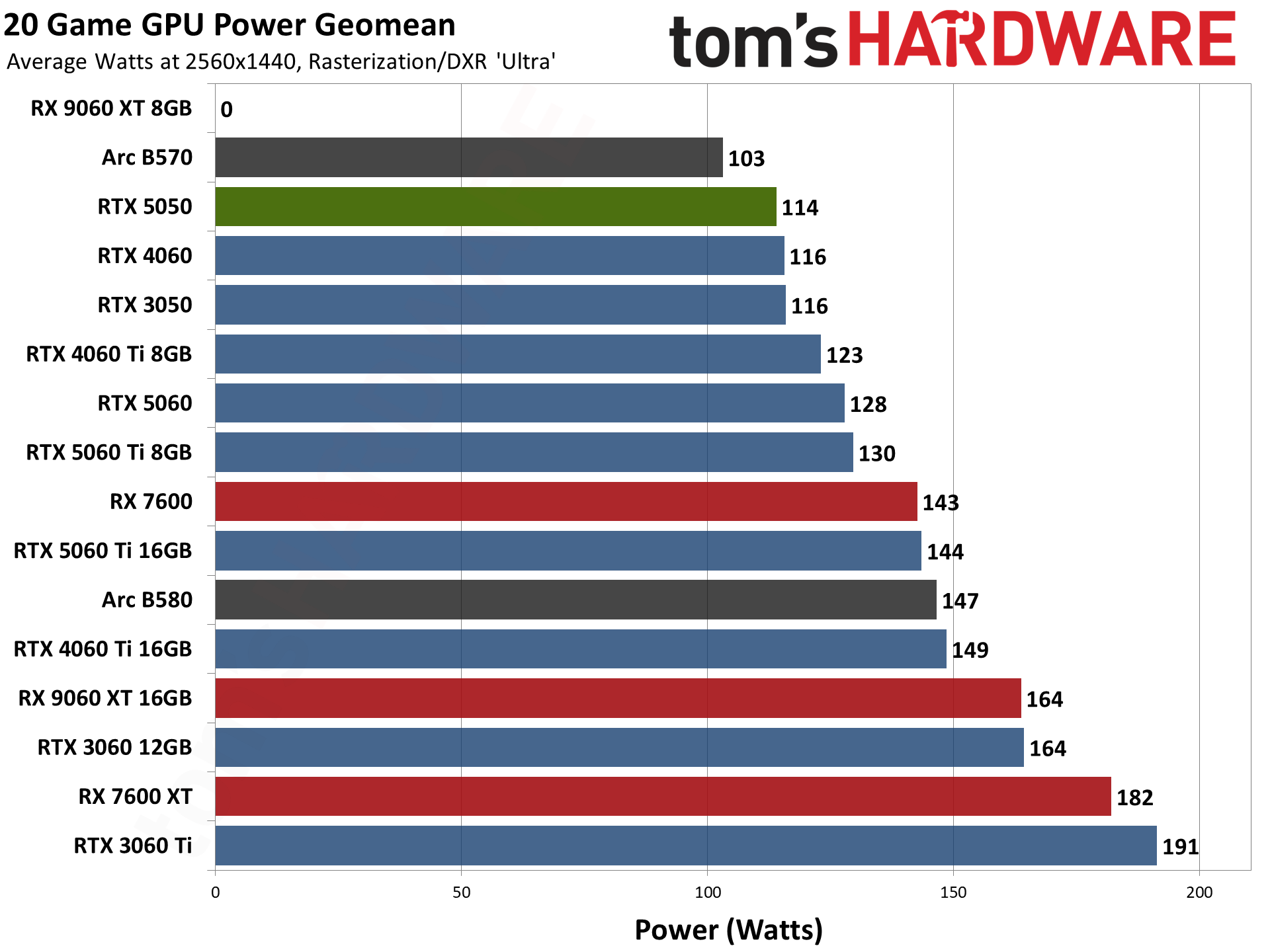

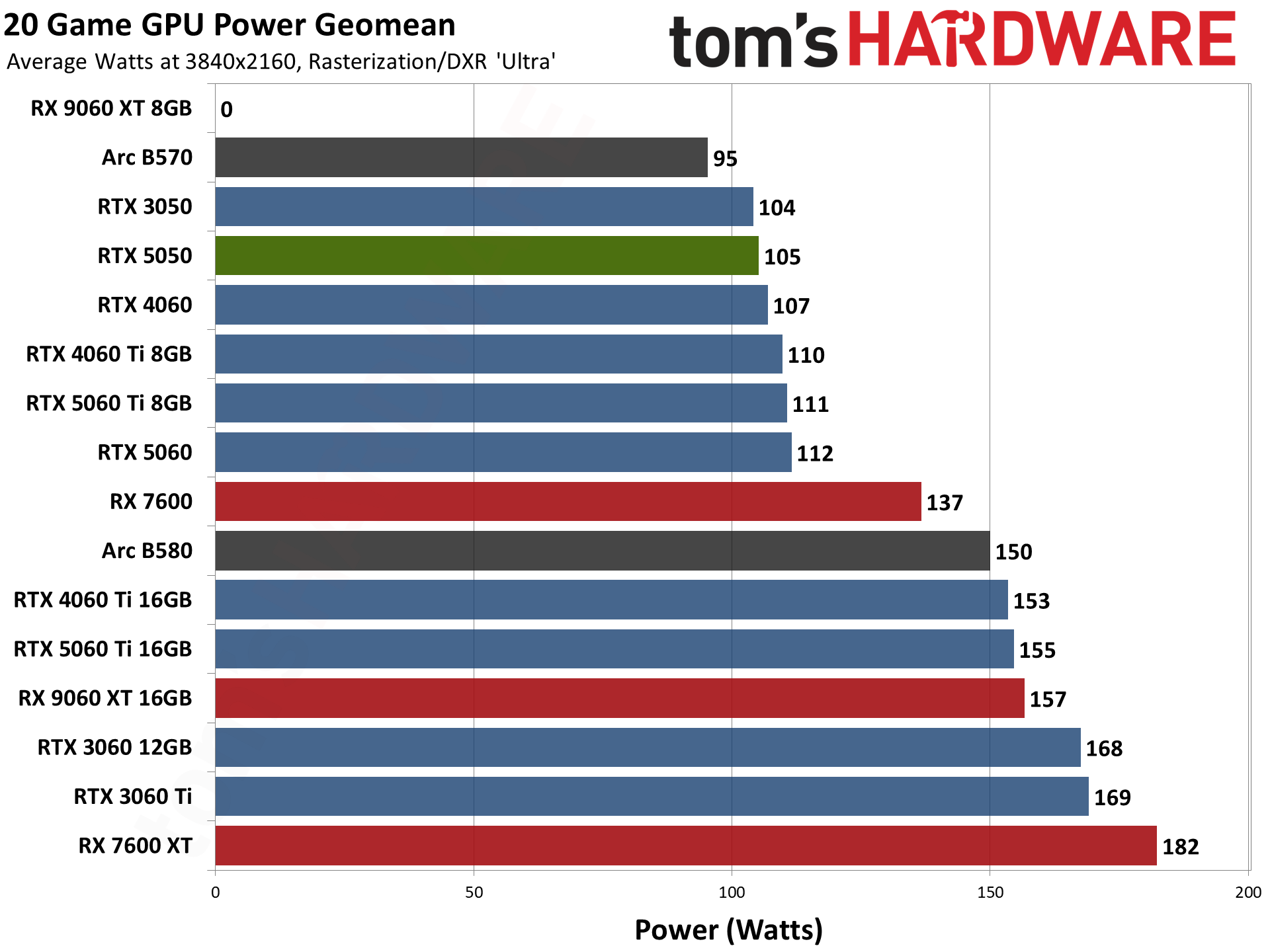

Used alongside the FrameView software that we use to capture performance results, Nvidia’s PCAT hardware allows us to capture live power consumption data with every frame, and we can use that data to communicate real-world power usage figures that are more precise than a worst-case total board power rating.

Power consumption taken in isolation doesn't mean much, though. A card can be highly efficient yet still draw a lot of power to deliver high overall performance, and a card that draws only a modest amount of power can still deliver relatively low performance per watt.

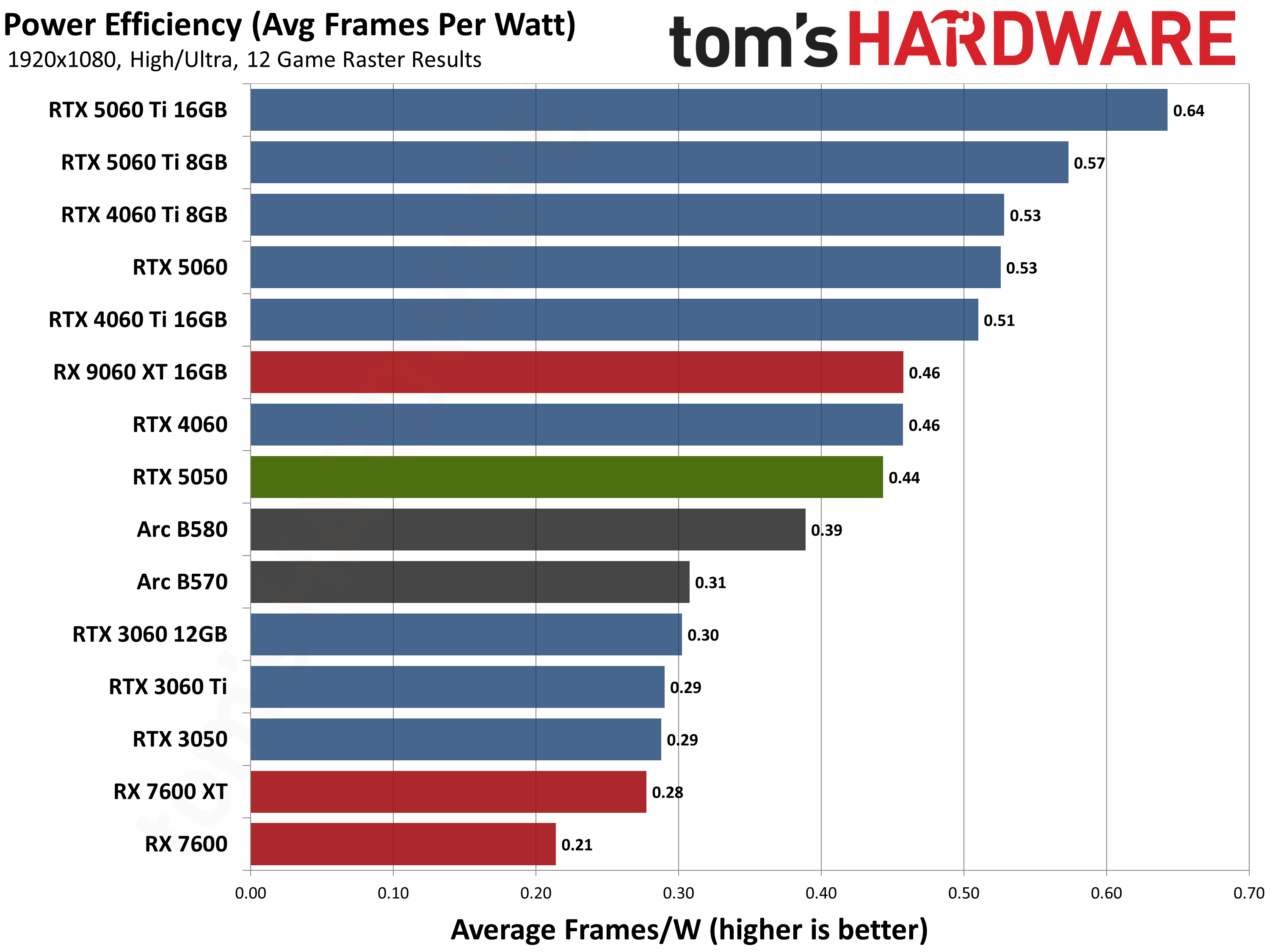

To express power efficiency, we simply divide a card's average frames per second by its average power consumption across all of our tests. We stuck with our 1080p results for this analysis, as these cards' relatively low performance at 1440p and 4K would make efficiency discussions with those results more academic than anything.
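As a rough sketch of the calculation described above, the snippet below divides the mean frame rate across tests by the mean power draw across the same tests. The function name and the numbers are hypothetical, chosen only to make the arithmetic easy to follow; they are not measurements from this review, and the sketch assumes a simple ratio of averages.

```python
from statistics import mean


def frames_per_watt(fps_per_test, watts_per_test):
    """Average FPS across tests divided by average power (W) across tests."""
    if len(fps_per_test) != len(watts_per_test) or not fps_per_test:
        raise ValueError("need one FPS and one power figure per test")
    return mean(fps_per_test) / mean(watts_per_test)


# Hypothetical per-game averages, for illustration only (not review data).
card_a = frames_per_watt([50.0, 70.0, 60.0], [110.0, 130.0, 120.0])
card_b = frames_per_watt([62.0, 82.0, 72.0], [110.0, 130.0, 120.0])

# Relative efficiency advantage of card B over card A, in percent.
advantage_pct = (card_b / card_a - 1.0) * 100.0
print(f"A: {card_a:.2f} FPS/W, B: {card_b:.2f} FPS/W, B is {advantage_pct:.0f}% higher")
```

Averaging first and dividing second keeps a single unusually light or heavy game from dominating the result, which is one reason a ratio of averages is a reasonable reading of the method.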

By this measure, the RTX 5050 is much more efficient than the Ampere-powered RTX 3050, but it’s disappointingly inefficient for a Blackwell card. The RTX 5060 turns in 20% higher frames per watt at 1080p, and the RTX 5060 Ti duo does better still. Any generational efficiency gains Nvidia made in the GB207 GPU on the RTX 5050 appear to be offset entirely by sticking with fast GDDR6 memory at the board level. On net, we end up with efficiency even slightly worse than that of the RTX 4060.

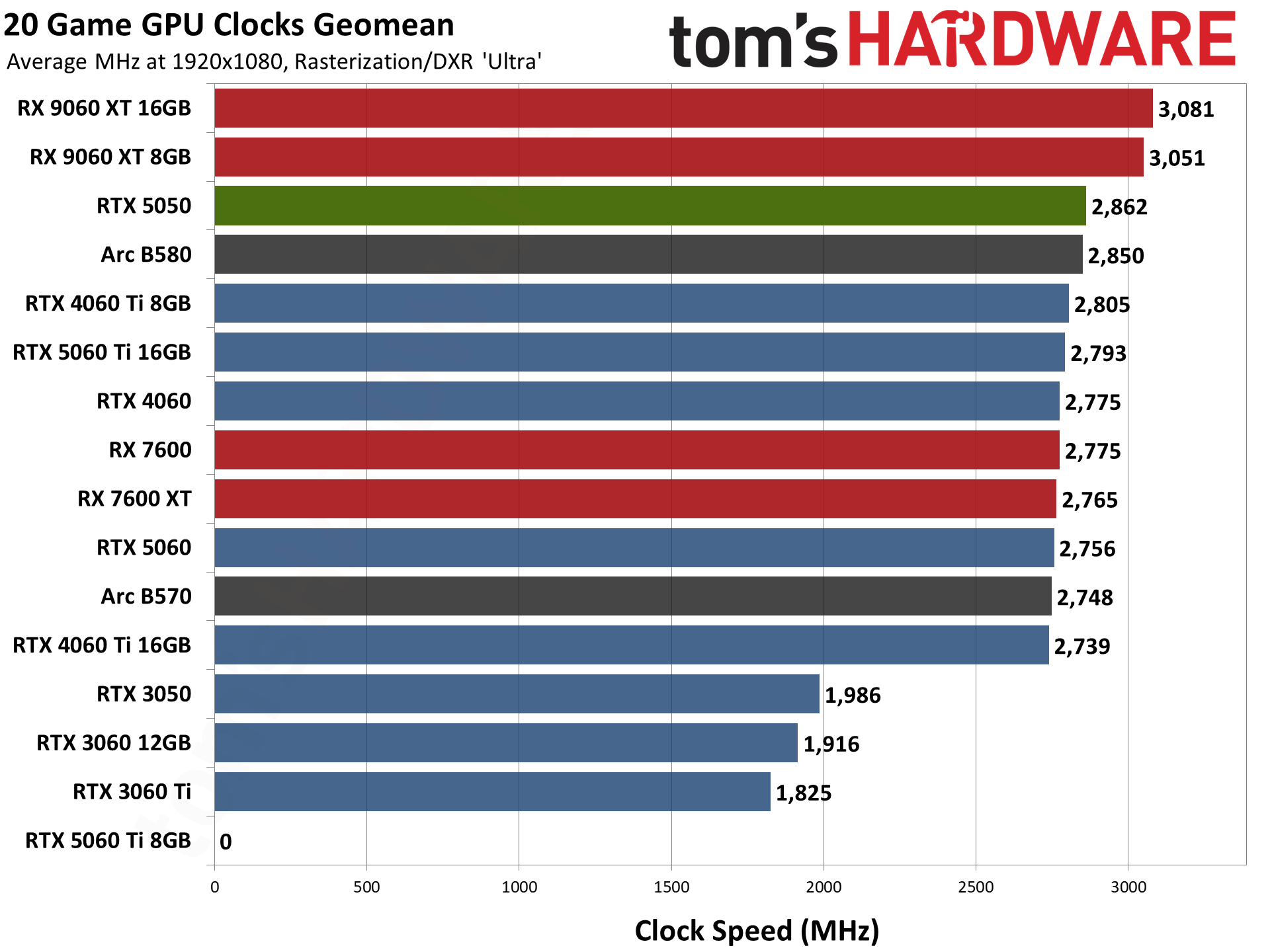

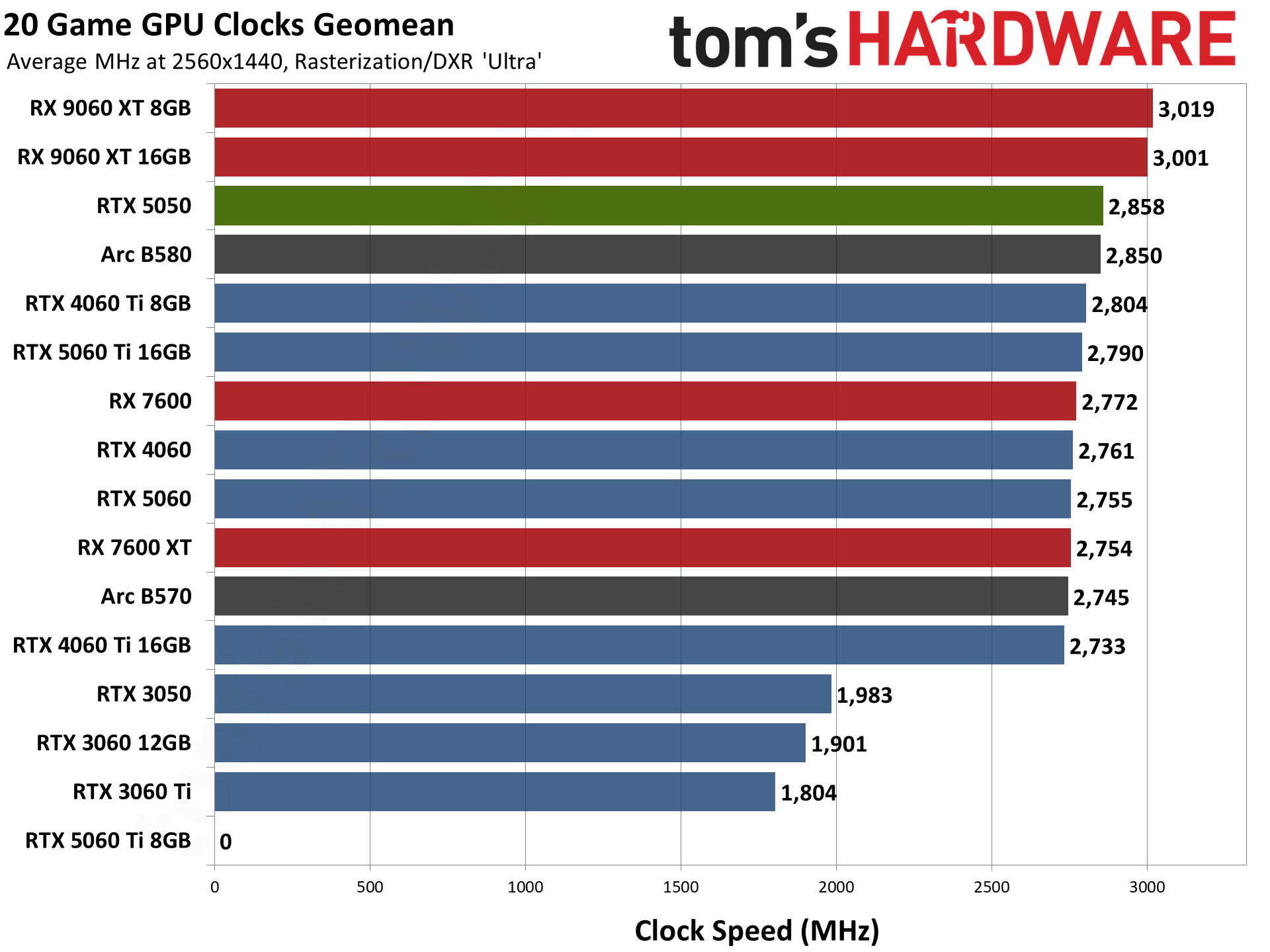

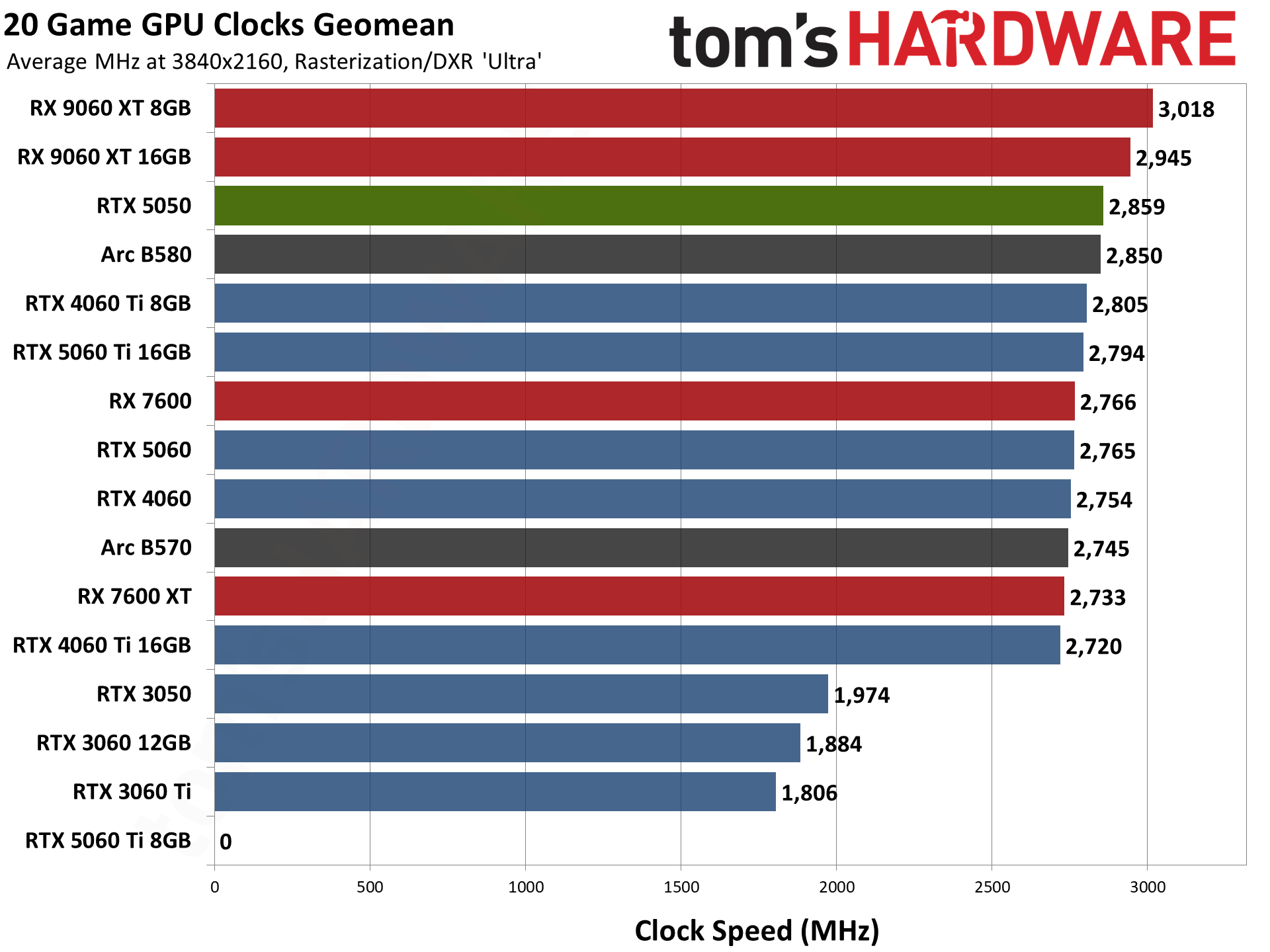

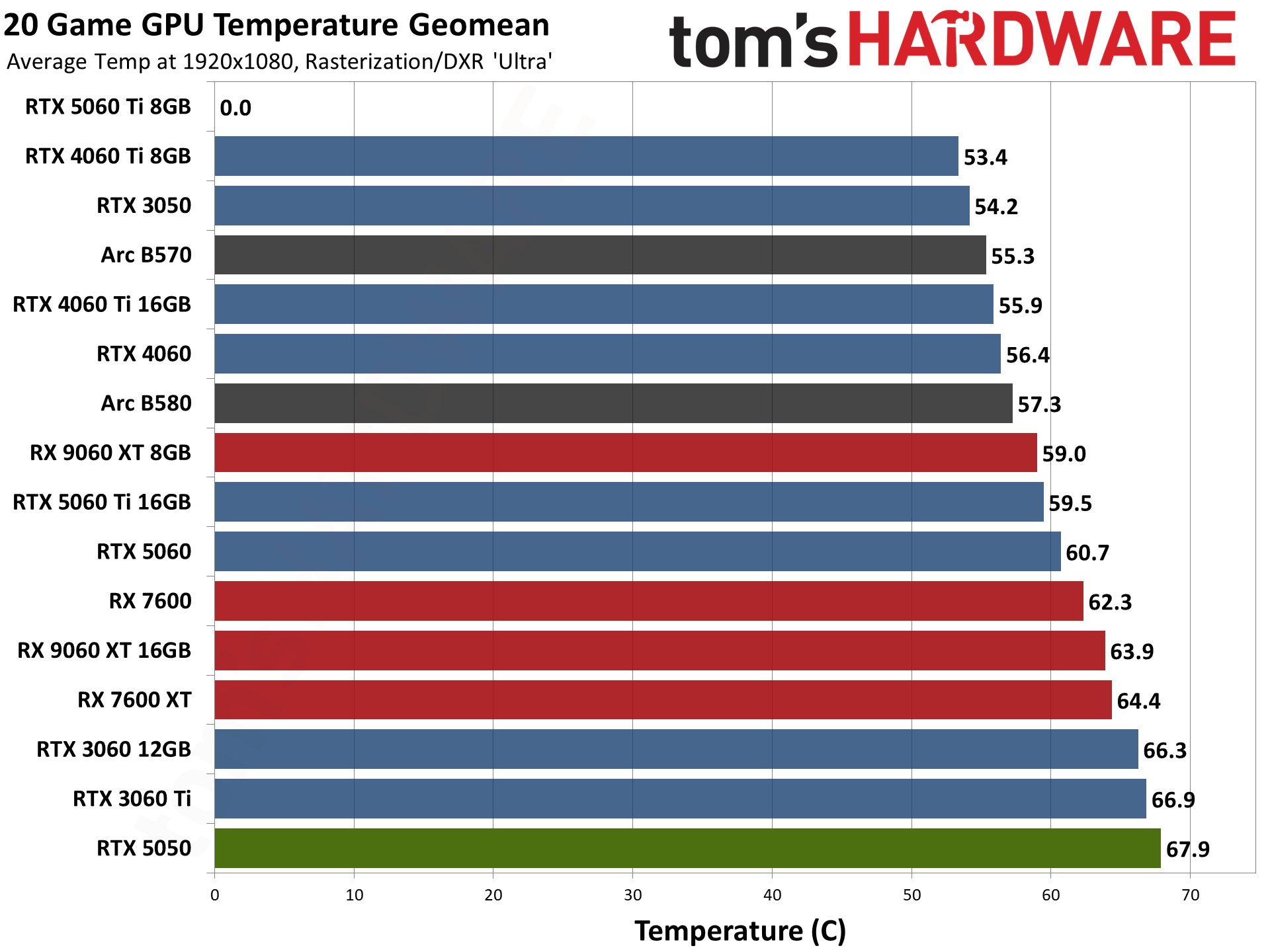

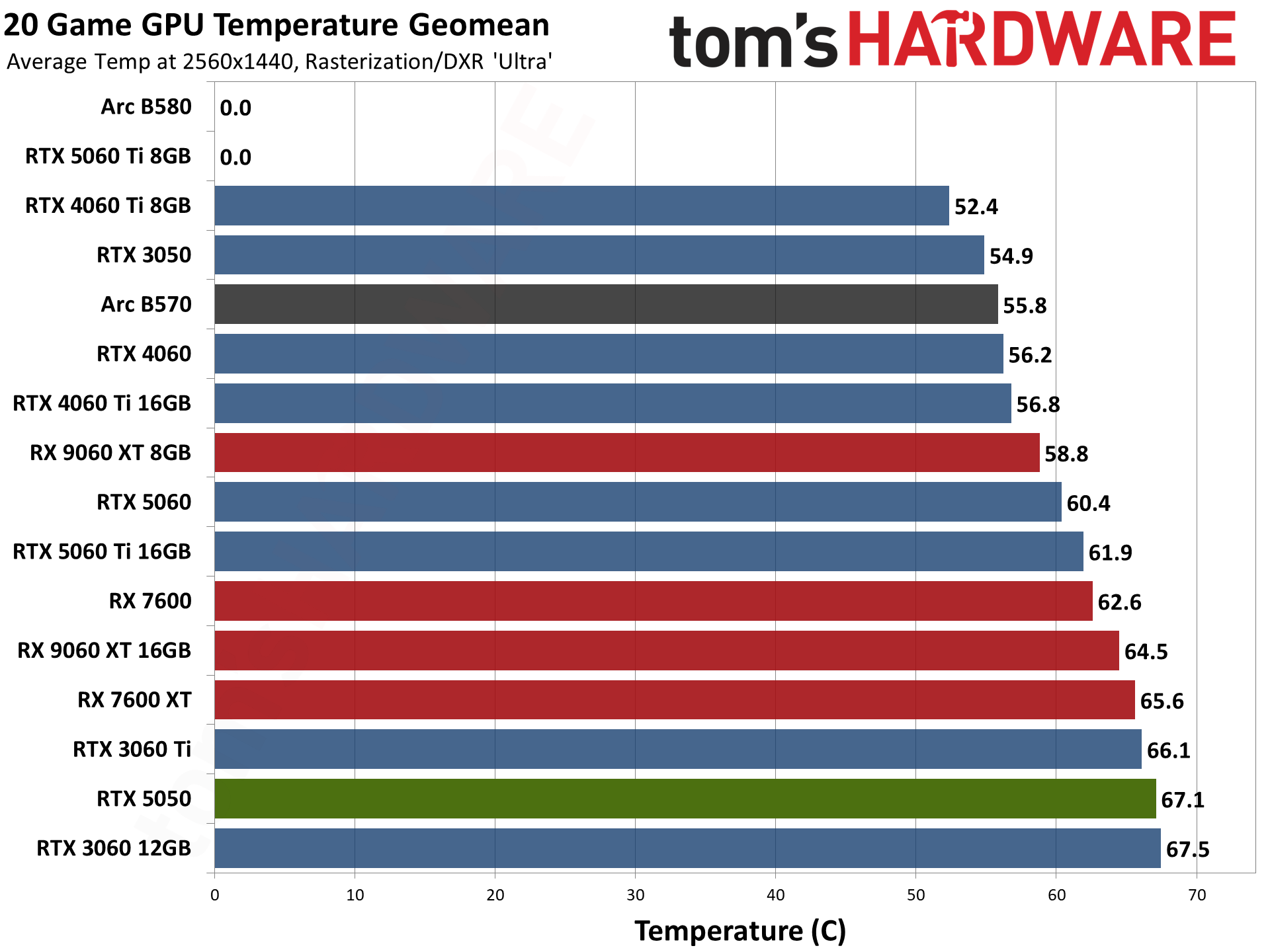

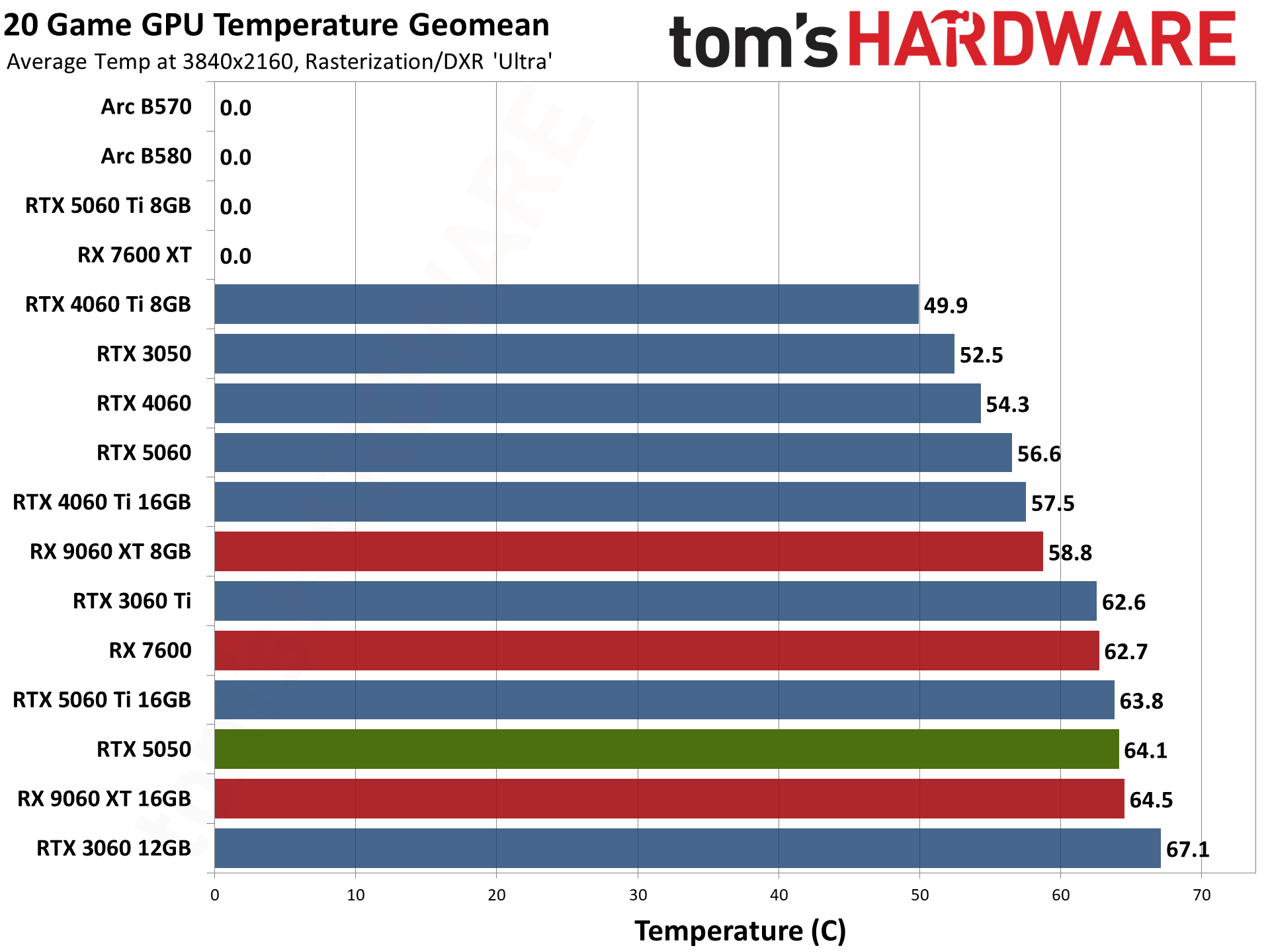

For reference, we've also included the geomean of clock speeds and temperatures across all our cards at the tested resolutions. Some data is missing due to driver and/or software hiccups, which we'll correct in future testing.
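For readers unfamiliar with the term, a geomean (geometric mean) is the nth root of the product of n values. The sketch below uses Python's standard-library `statistics.geometric_mean`; the clock readings are hypothetical placeholders, not figures from our charts.

```python
from statistics import geometric_mean

# Hypothetical average GPU clocks (MHz) from three games, for illustration only.
clock_mhz_per_game = [2700.0, 2750.0, 2800.0]

# The geometric mean is less sensitive to a single outlier than the
# arithmetic mean, which is why it is common for cross-test summaries.
geomean_clock = geometric_mean(clock_mhz_per_game)
print(f"Geomean clock: {geomean_clock:.0f} MHz")
```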

We don't think there are any surprises in these results. The RTX 5050 clocks much higher than its rated boost speed, a testament to Nvidia's GPU Boost logic and the thermal and electrical headroom that even this modest card apparently boasts.

The Gigabyte card is the hottest-running of this bunch, but it's also got one of the smallest coolers among the cards we tested, and it's still well below any level that would represent cause for concern.


Current page: Nvidia RTX 5050 Power, temps, clocks, and efficiency


As the Senior Analyst, Graphics at Tom's Hardware, Jeff Kampman covers everything to do with GPUs, gaming performance, and more. From integrated graphics processors to discrete graphics cards to the hyperscale installations powering our AI future, if it's got a GPU in it, Jeff is on it.


Neilbob Reply

Notton said: Geforce Give-me-my-money RTX 5050

$150 performance levels at best.

Yes, indeed.

For the tiny number and type of games I play these days, 8GB and this performance level is perfectly sufficient, but NOT at this stupid price.

I wonder if we will ever again see a properly priced budget GPU segment (not what should be mid-range). I'm kind of hoping AMD will churn out something, but I think we all know that isn't going to happen. And I'm still not quite prepared to hold my breath on Intel.

My current system is pushing 6. It is starting to make me nervous...

usertests Reply

RTX 5050... a necessary update

It's basically a 4060 or a little slower, with worse efficiency. This review is actually more positive than some of the launch reviews I saw, which had it losing even more performance and efficiency against the 4060. Perhaps the Blackwell drivers have improved between launch and this late review.

That along with the 5060 shockingly having greater performance per dollar, as well as the unloved 9060 XT 8 GB annihilating it at similar pricing, shows that it's unnecessary.

The 5050 is so close to the 4060 that I bet a silently introduced GDDR7 desktop variant could raise the efficiency and maybe even the performance to above the 4060, even if the effect was as low as 0-5%. I believe efficiency is the reason why the laptop 5050s are getting GDDR7 instead of the GDDR6 in this one. The desktop 5050 card is truly a second class citizen.

bourgeoisdude Reply

"The Dells, HPs, and Lenovos of the world that need to build cheap gaming PCs for buyers at Wal-Mart and Best Buy now have access to a product that says RTX 50 rather than RTX 30 on the shelf sticker..."

^Well said; this is exactly what this card is for. At least this means fewer RTX 3050s in 'new' gaming PCs. But yeah, the RTX 5050 is not really meant for the Tom's Hardware audience.

8086 Reply

Notton said: Geforce Give-me-my-money RTX 5050

$150 performance levels at best.

No.

This was a $79 card before the pandemic hit.

LordVile Reply

8086 said: No.

This was a $79 card before the pandemic hit.

No it’s not, stop pretending it’s 15 years ago. Things are more expensive in general and even a 1030 was $79 at launch. And that thing lost to iGPUs. The 5050 has increased by the same percentage as every other product.

atomicWAR Reply

LordVile said: No it’s not, stop pretending it’s 15 years ago.

Fair point, but prices have increased well past inflation as well. Ngreedia has to milk and all. Every SKU has also been moved down by at least one tier. 90 replaced 80 (by die size and performance metrics), 80 replaced 70 Ti, 70 Ti replaced 70, 70 replaced 60 Ti, 60 Ti replaced 60, 60 replaced 50 and 50 replaced 30. It is well documented that shrinkflation hit Nvidia GPUs. Either way, we have been getting screwed as consumers by Ngreedia (AMD as well).

https://m.youtube.com/watch?v=2tJpe3Dk7Ko&t=2s

LordVile Reply

atomicWAR said: Fair point, but prices have increased well past inflation as well. Ngreedia has to milk and all. Every SKU has also been moved down by at least one tier. 90 replaced 80 (by die size and performance metrics), 80 replaced 70 Ti, 70 Ti replaced 70, 70 replaced 60 Ti, 60 Ti replaced 60, 60 replaced 50 and 50 replaced 30. It is well documented that shrinkflation hit Nvidia GPUs. Either way, we have been getting screwed as consumers by Ngreedia (AMD as well).

https://m.youtube.com/watch?v=2tJpe3Dk7Ko&t=2s

90 replaced the Titan, not the 80, so your entire point is moot.

atomicWAR Reply

LordVile said: 90 replaced the Titan not the 80 so your entire point is moot

Watch the video. I am talking silicon used, performance upticks, etc. So no, the 90 didn't replace the Titan... it replaced the 80 class. Try again with a good source like I did, please. If you can prove me wrong without just saying I am wrong, I am happy to listen and learn. Because if you want to go the Titan route, it was the start of replacing the 80 Ti class. Way back when, the top 110 dies (now 102 dies) fully unlocked was the 80 class card. It really started to show up with the GTX 680, when we got a GK104 instead of a 102, and kicked into high gear after the GTX 700s/Kepler, which is the gen Titan launched with. Point being, Nvidia set the stage with the lesser GTX 680/GK104 and executed the move with the 700 series. And things have snowballed from there. When you use mm^2, we are getting less GPU per class than ever now.