Tom's Hardware Verdict

The Aorus RX 5700 XT 8G is a great performer at 1440p with ultra settings. And with some tweaking, it’s 4K UHD/60 capable. But it's pricier than similarly performing cards.

Pros

- Very capable 1440p card
- Much cheaper than RTX 2070 Super
- Six display outputs

Cons

- Expensive compared to other 5700 XTs
- Bulky cooler makes for a tight fit in many cases


In late 2019, Gigabyte announced the Aorus RX 5700 XT 8G, a flagship card that sits atop two lesser models, the Gaming OC and a reference design. Helped by a factory overclock and a large cooler, this is one of the fastest Navi-based cards we've tested.

The 5700 XT’s sweet spot is 2560 x 1440 (1440p) at 60 fps or more with the highest settings. In short, this card is great for high-fps gaming at 1080p (and in some titles at 1440p), 60-plus fps at 1440p, and medium settings at 4K UHD. Ten of the 11 games we tested run at 60 fps or better at 1440p; Metro: Exodus is the only outlier. We also tested at 4K UHD (3840 x 2160) with the same settings and found that four of our 11 titles run at or very close to 60 fps. Lowering image quality is a must for a smooth gaming experience at that resolution. But if smooth 4K gaming is your aim, you should probably buy a faster (and more expensive) Nvidia card like the RTX 2080 Super.

At the time of writing, the Aorus RX 5700 XT 8G cost $449.99 at Newegg, which puts it at the high end of 5700 XT options (which start at $380) and $10 above the $439.99 ASRock RX 5700 XT Taichi. By comparison, the Gigabyte RTX 2070 Super Gaming OC used in our testing costs $520. The Gigabyte RTX 2060 Super Gaming OC is slower but cheaper, starting at $399.99.
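To put the pricing in perspective, here is a small Python sketch of the price gaps, using only the prices quoted in this review at the time of writing. The dictionary keys are illustrative labels, and street prices change often, so treat the numbers as a snapshot rather than current pricing.

```python
# Prices (USD) quoted in this review at the time of writing; labels are illustrative.
prices = {
    "Aorus RX 5700 XT 8G": 449.99,
    "ASRock RX 5700 XT Taichi": 439.99,
    "Cheapest RX 5700 XT": 380.00,
    "Gigabyte RTX 2060 Super Gaming OC": 399.99,
    "Gigabyte RTX 2070 Super Gaming OC": 520.00,
}


def price_gap(card: str, baseline: str) -> float:
    """Return how much more (positive) or less (negative) `card` costs than `baseline`."""
    return round(prices[card] - prices[baseline], 2)


aorus = "Aorus RX 5700 XT 8G"
for other in prices:
    if other != aorus:
        print(f"{aorus} vs {other}: {price_gap(aorus, other):+.2f} USD")
```

Running it shows the Aorus card's roughly $70 premium over the cheapest 5700 XTs and its roughly $70 saving versus the RTX 2070 Super Gaming OC.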

We’ll take a more in-depth look at how the Aorus RX 5700 XT 8G card performed below, as well as how well the Windforce 3 cooler kept this card cool and quiet.

Features

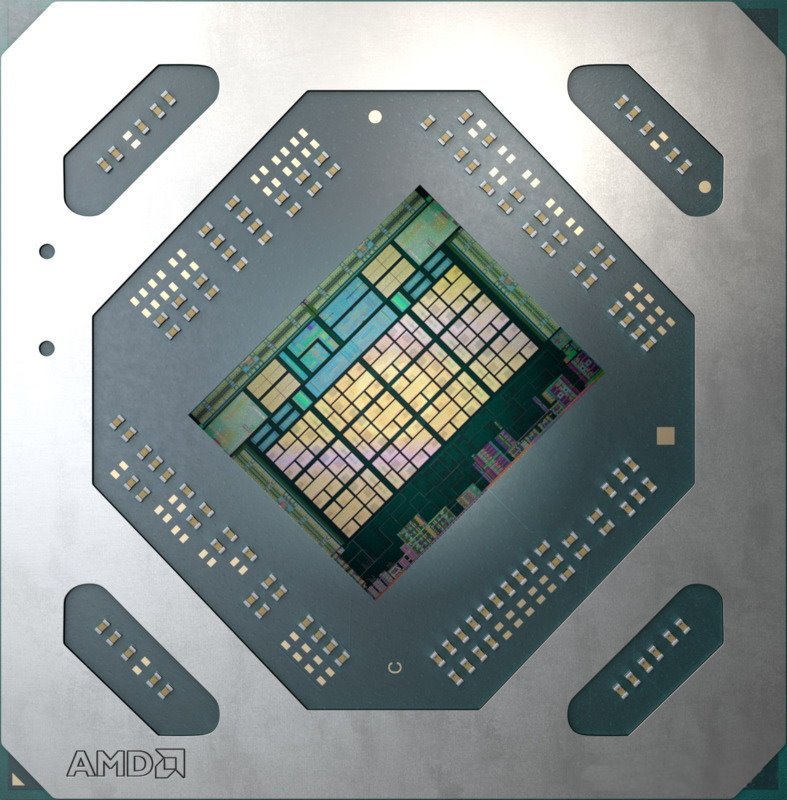

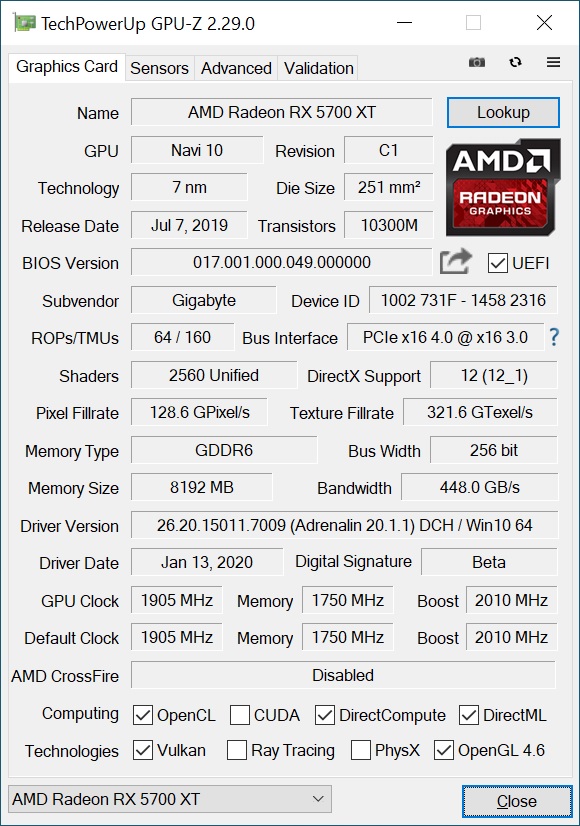

The AMD RX 5700 XT uses a full Navi 10 GPU manufactured on TSMC’s 7nm FinFET process. This process node and architecture fit 10.3 billion transistors onto a 251 mm² die. Internally, Navi 10 consists of 40 Compute Units (CUs) with a total of 2,560 stream processors. Each RDNA-based CU has four texture units, yielding 160 TMUs, alongside 64 ROPs.
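The shader and texture unit totals follow directly from the CU count. The sketch below assumes 64 stream processors per CU (2,560 divided by 40) and the four texture units per CU described above; the 36-CU case corresponds to the cut-down Navi 10 used in the RX 5700 and RX 5600 XT in the specifications table.

```python
# Per-CU resources for an RDNA (Navi 10) Compute Unit:
# 64 stream processors (2,560 / 40) and 4 texture units per CU.
STREAM_PROCESSORS_PER_CU = 64
TEXTURE_UNITS_PER_CU = 4


def navi10_units(compute_units: int) -> dict:
    """Derive shader and texture unit totals from the number of enabled CUs."""
    return {
        "stream_processors": compute_units * STREAM_PROCESSORS_PER_CU,
        "texture_units": compute_units * TEXTURE_UNITS_PER_CU,
    }


print(navi10_units(40))  # {'stream_processors': 2560, 'texture_units': 160}
print(navi10_units(36))  # {'stream_processors': 2304, 'texture_units': 144}
```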

Reference clock speeds for the Radeon RX 5700 XT are a 1,605 MHz Base Clock, a 1,755 MHz Game Clock and a 1,905 MHz Boost Clock. The Aorus RX 5700 XT 8G ratchets those up to a 1,905 MHz Game Clock (+150 MHz) and a 2,010 MHz Boost Clock (+105 MHz), a significant gain over reference-clocked cards.
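To put a number on that gain, this sketch computes the factory overclock as a percentage of the reference clocks quoted above. It is plain arithmetic on the published clock specifications, not a measurement of the clocks the card actually sustains in games.

```python
# Published clock specs in MHz.
REFERENCE = {"game": 1755, "boost": 1905}  # reference RX 5700 XT
AORUS = {"game": 1905, "boost": 2010}      # Aorus RX 5700 XT 8G factory overclock


def uplift(factory: int, reference: int) -> tuple[int, float]:
    """Return the clock increase in MHz and as a percentage of the reference clock."""
    delta = factory - reference
    return delta, 100.0 * delta / reference


for clock in ("game", "boost"):
    delta, pct = uplift(AORUS[clock], REFERENCE[clock])
    print(f"{clock} clock: +{delta} MHz ({pct:.1f}%)")
# game clock: +150 MHz (8.5%)
# boost clock: +105 MHz (5.5%)
```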

The 5700 XT has 8GB of GDDR6 on a 256-bit bus. The default memory speed is 1,750 MHz (14,000 MHz GDDR6 effective), which gives 448 GBps of bandwidth. 8GB capacity is enough for the overwhelming majority of today’s games, even at 2560 x 1440.
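Peak bandwidth is the effective per-pin data rate multiplied by the bus width. A minimal sketch, assuming the 14,000 MT/s effective rate quoted above (eight times the 1,750 MHz memory clock) and treating 1 GB/s as 10^9 bytes per second:

```python
def peak_bandwidth_gb_s(effective_rate_mtps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    effective_rate_mtps: effective data rate per pin in MT/s (14,000 for 14 Gbps GDDR6).
    bus_width_bits: memory interface width in bits.
    """
    bits_per_second = effective_rate_mtps * 1e6 * bus_width_bits
    return bits_per_second / 8 / 1e9  # bits -> bytes -> GB


print(peak_bandwidth_gb_s(14_000, 256))  # 448.0, RX 5700 XT (256-bit)
print(peak_bandwidth_gb_s(14_000, 192))  # 336.0, RX 5600 XT Pulse OC (192-bit)
```

Both results match the Memory Bandwidth row of the specifications table.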


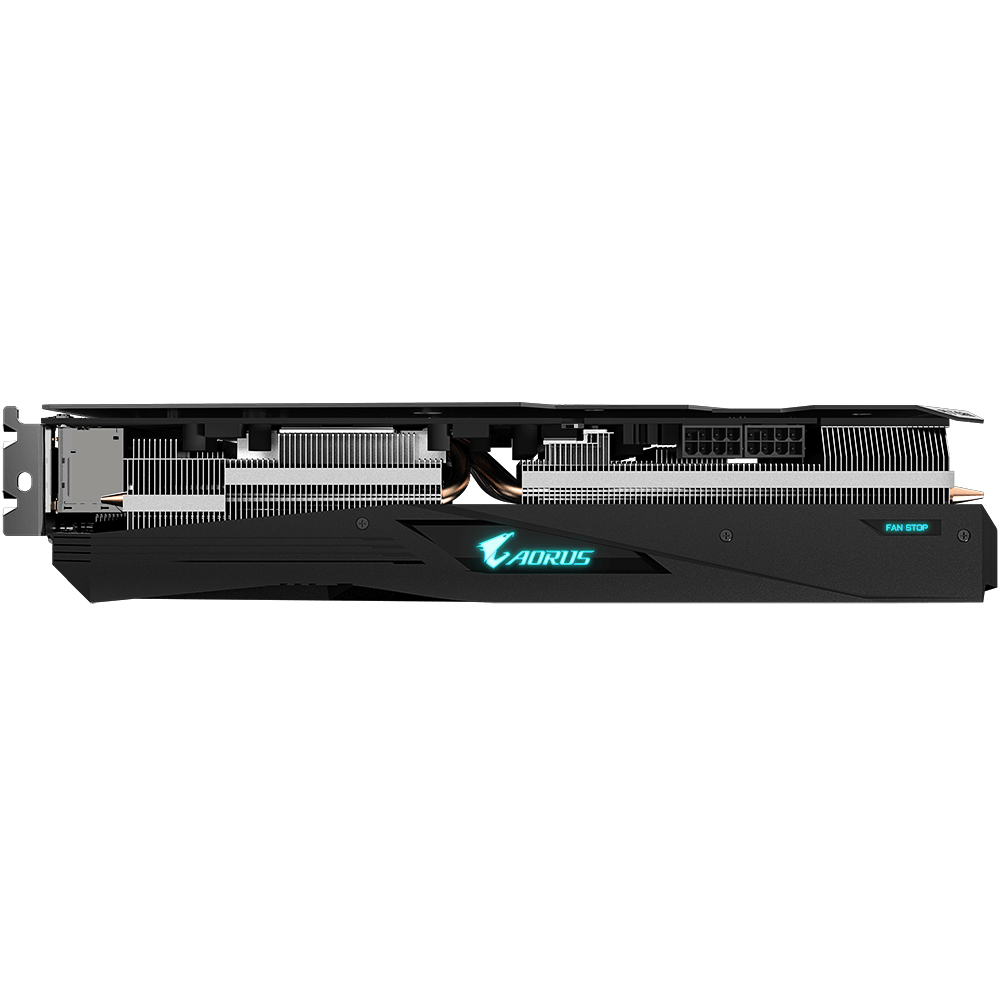

Power consumption for the reference specification is listed at 225W TDP. Gigabyte does not list a TDP/TBP, but the power supply recommendation on its website is 600W, 50W more than for reference-clocked cards. Given that, along with the increased clocks, we can expect power draw north of 225W.

Below is a more detailed specifications table covering recent AMD GPUs:

| Specification | Sapphire Radeon RX 5600 XT Pulse OC | Radeon RX 5700 | Radeon RX 5700 XT | Aorus RX 5700 XT |
|---|---|---|---|---|
| Architecture (GPU) | RDNA (Navi 10) | RDNA (Navi 10) | RDNA (Navi 10) | RDNA (Navi 10) |
| ALUs/Stream Processors | 2304 | 2304 | 2560 | 2560 |
| Peak FP32 Compute (Based on Typical Boost) | 7.2 TFLOPS | 7.2 TFLOPS | 9 TFLOPS | 9 TFLOPS |
| Tensor Cores | N/A | N/A | N/A | N/A |
| RT Cores | N/A | N/A | N/A | N/A |
| Texture Units | 144 | 144 | 160 | 160 |
| ROPs | 64 | 64 | 64 | 64 |
| Base Clock | N/A | 1465 MHz | 1605 MHz | 1605 MHz |
| Game Clock | 1615 MHz | 1625 MHz | 1755 MHz | 1905 MHz |
| Boost Clock | 1750 MHz | 1725 MHz | 1905 MHz | 2010 MHz |
| Memory Capacity | 6GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 | 8GB GDDR6 |
| Memory Bus | 192-bit | 256-bit | 256-bit | 256-bit |
| Memory Bandwidth | 336 GB/s | 448 GB/s | 448 GB/s | 448 GB/s |
| L2 Cache | 4MB | 4MB | 4MB | 4MB |
| TDP | 150W | 177W (measured) | 218W (measured) | 225W |
| Transistor Count | 10.3 billion | 10.3 billion | 10.3 billion | 10.3 billion |
| Die Size | 251 mm² | 251 mm² | 251 mm² | 251 mm² |
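The peak FP32 figures for the RX 5700 XT can be reproduced with the usual rule of thumb that each stream processor retires one fused multiply-add (two floating-point operations) per clock. This sketch applies it to the 5700 XT: at the reference 1,755 MHz Game Clock it gives about 8.99 TFLOPS, which rounds to the 9 TFLOPS listed, while the Aorus card's 1,905 MHz Game Clock works out to about 9.75 TFLOPS on paper. These are theoretical peaks that real games rarely reach.

```python
def fp32_tflops(stream_processors: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each stream processor completes one fused multiply-add
    (2 floating-point operations) per clock cycle.
    """
    flops = stream_processors * 2 * clock_mhz * 1e6
    return flops / 1e12


print(round(fp32_tflops(2560, 1755), 2))  # 8.99, reference Game Clock
print(round(fp32_tflops(2560, 1905), 2))  # 9.75, Aorus Game Clock
```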

Design

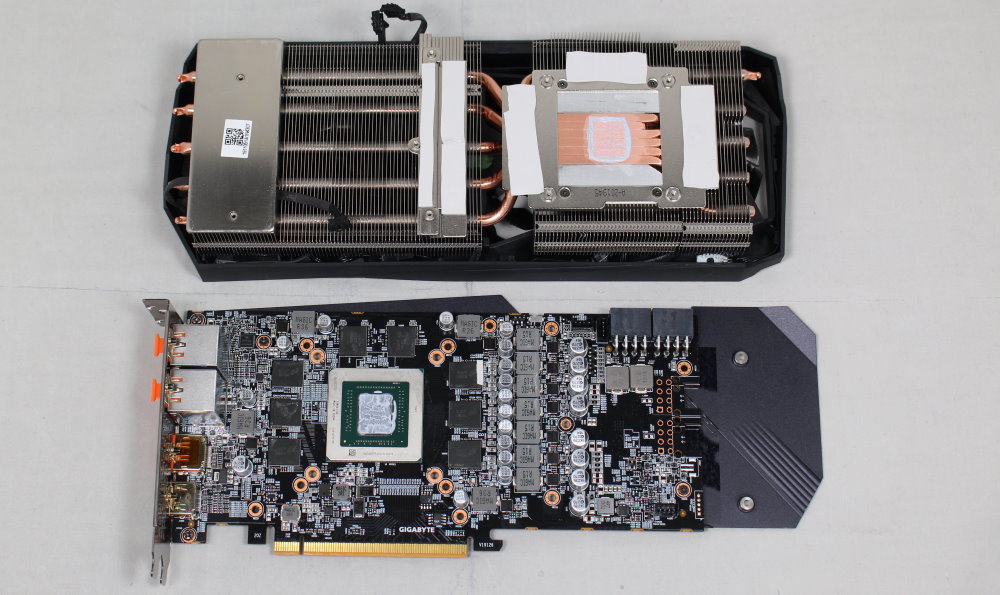

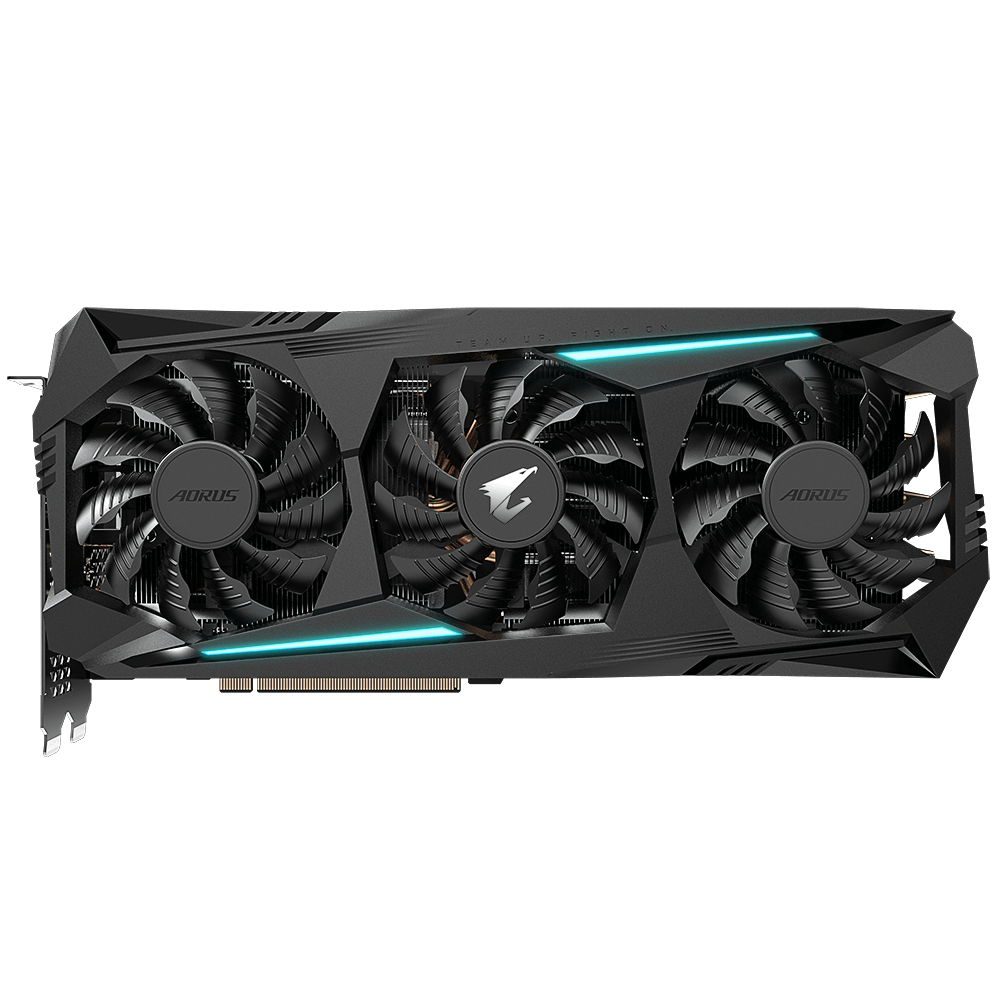

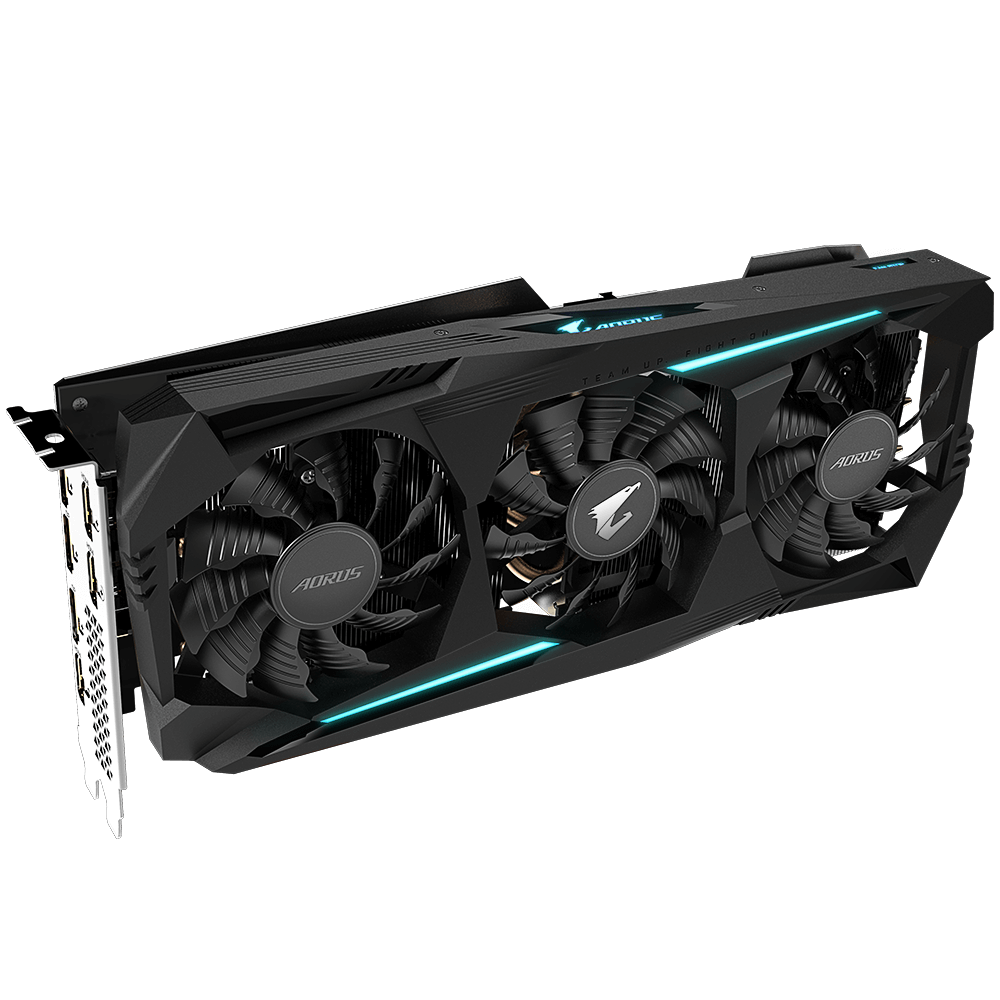

The flagship Aorus card and its large heatsink take up three expansion slots, and the card measures 11.4 x 4.8 x 2.3 inches (290 x 123 x 58mm). Its length means it overhangs the edge of ATX-size boards, and it won’t fit in most SFF systems. Most mid-towers and larger cases shouldn’t have an issue, but as always, verify how much space your chassis has for a video card before buying.
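Checking clearance comes down to comparing the card's dimensions with your case's published limits. A rough sketch, assuming you know the case's maximum supported GPU length and how many expansion slots are free; the 10 mm margin is a hypothetical allowance for front fans, radiators or cables, not a Gigabyte or case-maker figure.

```python
MM_PER_INCH = 25.4

# Aorus RX 5700 XT 8G dimensions from this review.
CARD_LENGTH_MM = 290
CARD_SLOTS = 3


def fits(case_max_gpu_length_mm: float, case_free_slots: int,
         margin_mm: float = 10.0) -> bool:
    """Rough fit check against a case's published GPU length limit.

    margin_mm is a hypothetical safety allowance; adjust it for your build.
    """
    return (case_max_gpu_length_mm >= CARD_LENGTH_MM + margin_mm
            and case_free_slots >= CARD_SLOTS)


print(round(CARD_LENGTH_MM / MM_PER_INCH, 1))  # 11.4 (inches)
print(fits(330, 3))  # True: case rated for 330 mm cards
print(fits(280, 3))  # False: too short
```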

Gigabyte says the Windforce 3 heatsink is an all-around cooling solution for the graphics card's key components (the GPU itself, the VRAM and the MOSFETs) that helps with stable overclocking and longer life. Dominating the exterior are three 82mm fans spread across the face of the card. Flanking the fans on the top and bottom are two RGB lighting elements protruding from the center fan. Two more RGB zones sit on top of the card: the first displays the Aorus branding, while the other lights up a “No Fan” indicator when the fans are intentionally stopped at the desktop or under light loads.

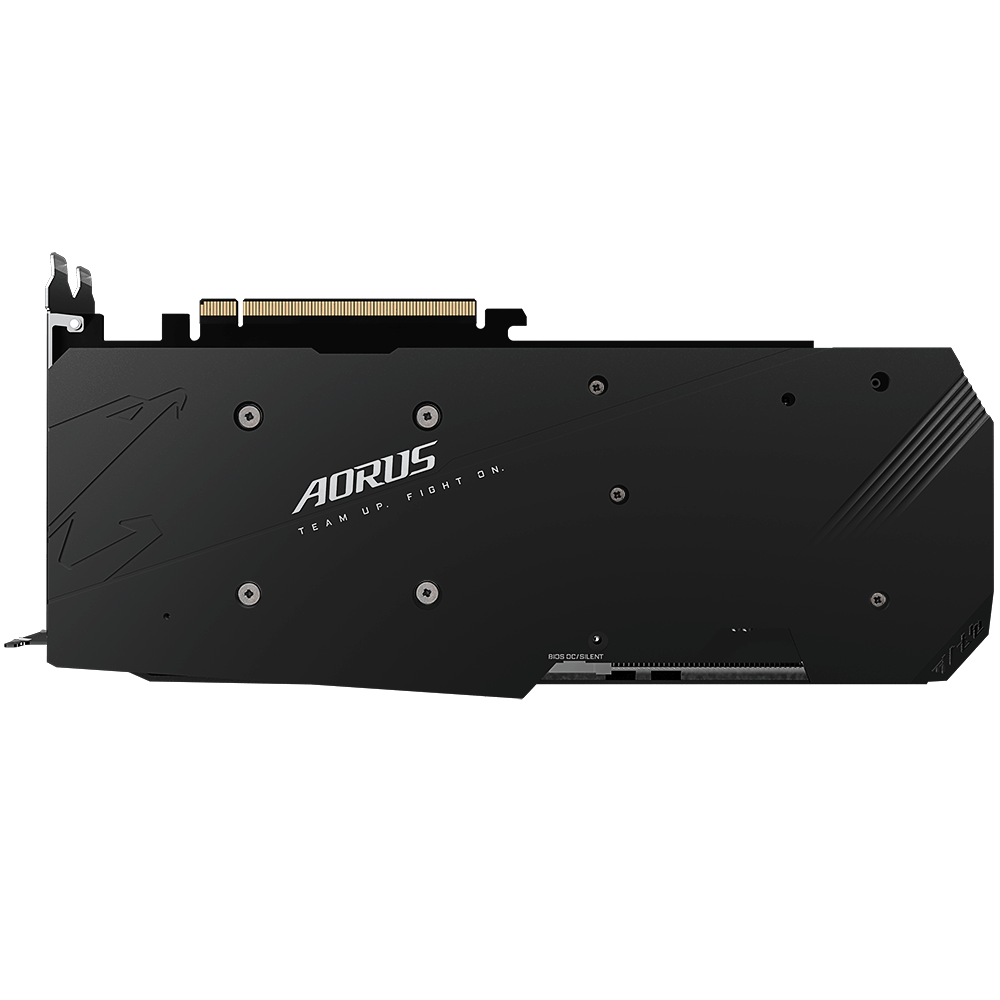

On the back of the card is a black metal backplate designed to protect the PCB and increase structural integrity. Gigabyte places thermal pads in strategic locations (behind the VRM and GPU core) between the backplate and the rear of the PCB, which helps cool the components on the back side. Overall, with its black plastic shroud, the card will fit in with most build themes; we don’t see any polarizing design choices or obnoxiously bright lighting.

The heatsink and its three fans utilize “alternate spinning” to reduce turbulent airflow and enhance air pressure. Specifically, the two outside fans spin counter-clockwise while the middle fan turns clockwise.

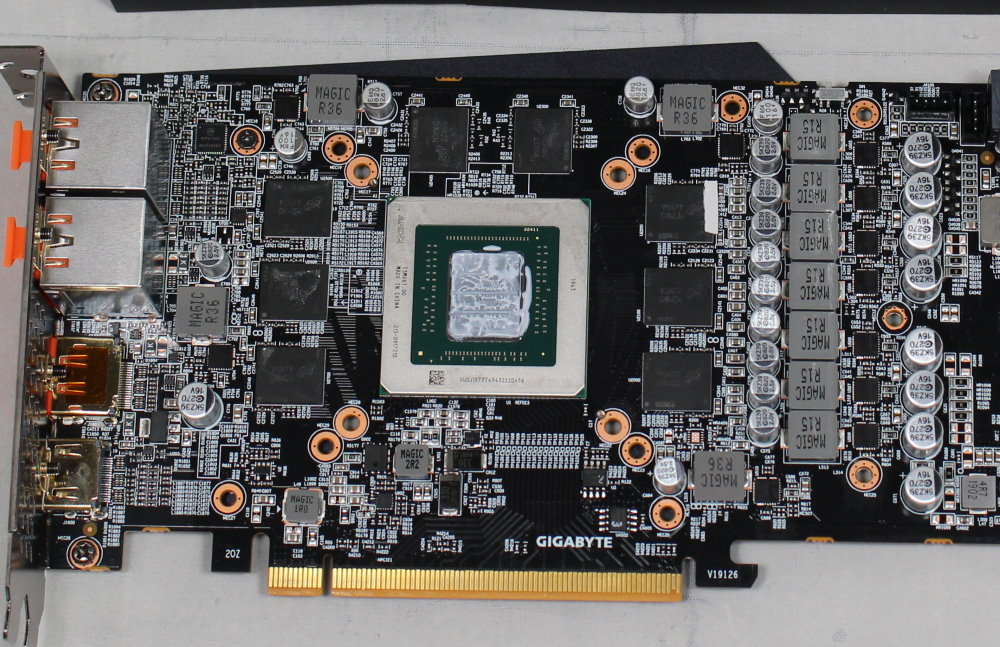

The heatsink in the Windforce 3 cooler uses two fin arrays connected by six copper heat pipes that make direct contact with the GPU die for the best cooling results. The RAM and MOSFETs attach to the fin array through metal plates and thermal pads. All the critical areas appear to make good contact with the heatsink and are adequately cooled.

Power is delivered to the GPU and other components through a 7-phase VRM, managed by an International Rectifier IR35217 controller capable of supporting up to eight phases. In this case, we’re looking at a 6+1 configuration. The setup is more than capable of supporting this card even while overclocked.

One of the more significant differences between this card and other RX 5700 XTs is the display outputs. Typically, cards have three or four in total, but the Aorus model gives you six, all of which can be used at the same time: three DisplayPort 1.4 outputs, one HDMI 2.0b port and two HDMI 1.4b ports. That’s plenty of connectivity for most users.

How We Tested the Aorus RX 5700 XT 8G

We recently moved our test system to a new platform, swapping the Core i7-8086K for a Core i9-9900K. The eight-core i9-9900K sits in an MSI MEG Z390 Ace motherboard along with 2x16GB of Corsair DDR4 3200 MHz CL16 RAM (CMK32GX4M2B3200C16). A Corsair H150i Pro RGB AIO keeps the CPU cool, and a 120mm Sharkoon fan provides general airflow across the test system. Our OS and gaming suite are stored on a single 2TB Kingston KC2000 NVMe PCIe 3.0 x4 drive.

The motherboard was updated to the latest BIOS available at the time, version 7B12v16 from August 2019. We set up the system with optimized defaults, then enabled the memory’s XMP profile to run it at its rated 3200 MHz CL16 specification. No other changes or performance enhancements were enabled. We used the latest version of Windows 10 (1909), fully updated as of December 2019.
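For context on the memory settings, the XMP profile's CL16 timing translates into an absolute latency once you account for the data rate. A common back-of-the-envelope conversion, sketched below, treats DDR4 at 3200 MT/s as a 1,600 MHz I/O clock (two transfers per clock), so each cycle lasts 0.625 ns. This covers first-word CAS latency only, not total memory latency.

```python
def first_word_latency_ns(cas_latency: int, data_rate_mtps: int) -> float:
    """CAS latency in nanoseconds.

    DDR memory transfers twice per I/O clock, so the clock period in ns is
    2000 / data_rate (MT/s); CAS latency is counted in those clock cycles.
    """
    return cas_latency * 2000 / data_rate_mtps


print(first_word_latency_ns(16, 3200))  # 10.0 ns for the test system's CL16 kit
```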

As time goes on, we will rebuild our database of results on this test system. For now, we include GPUs that compete with, and are close in performance to, the card being reviewed. On the AMD side, we compared this card to the Gigabyte RX 5700 XT Gaming OC and the ASRock RX 5700 XT Taichi. On the Nvidia side, we used the Gigabyte RTX 2070 Super Gaming OC and RTX 2060 Super Gaming OC.

Our list of test games is currently Tom Clancy’s The Division 2, Ghost Recon: Breakpoint, Borderlands 3, Gears of War 5, Strange Brigade, Shadow of the Tomb Raider, Far Cry 5, Metro: Exodus, Final Fantasy XIV: Shadowbringers, Forza Horizon 4 and Battlefield V. These titles represent a broad spectrum of genres and APIs, which gives us a good idea of the relative performance difference between the cards. We’re using driver build 441.20 for the Nvidia cards and Adrenalin 2020 Edition 20.1.1 for the AMD cards.

We capture frames per second (fps) and frame time information by running OCAT during our benchmarks. To capture clock and fan speeds, temperature and power, we use GPU-Z's logging capabilities. Soon we’ll resume using the Powenetics-based system from previous reviews.
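OCAT logs the time between presented frames, and fps figures are derived from those intervals. The sketch below shows one common way to summarize such a log: average fps computed as total frames over total time, plus a 99th-percentile frame time. It assumes the frame times (in milliseconds) have already been read from the capture file; in PresentMon-based tools such as OCAT that column is typically named MsBetweenPresents. This is an illustration, not necessarily the exact method behind the charts in this review.

```python
import statistics


def summarize_frame_times(frame_times_ms: list[float]) -> dict:
    """Summarize per-frame intervals (ms) from a frame-time capture.

    Average fps is total frames divided by total elapsed time, which weights
    long frames correctly (unlike averaging per-frame fps values).
    """
    total_ms = sum(frame_times_ms)
    avg_fps = 1000.0 * len(frame_times_ms) / total_ms
    # 99th-percentile frame time: 99% of frames finished at least this fast.
    # quantiles(n=100) returns 99 cut points; index 98 is the 99th percentile.
    p99_ms = statistics.quantiles(frame_times_ms, n=100)[98]
    return {
        "avg_fps": avg_fps,
        "p99_frame_time_ms": p99_ms,
        "p99_fps": 1000.0 / p99_ms,
    }


# Mostly ~60 fps frames with a single hitch.
frame_times = [16.7] * 99 + [33.3]
stats = summarize_frame_times(frame_times)
print(f"{stats['avg_fps']:.1f} avg fps, {stats['p99_frame_time_ms']:.1f} ms 99th percentile")
```

A single long frame barely moves the average but shows up clearly in the 99th-percentile frame time, which is why frame-time metrics are reported alongside average fps.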


Joe Shields is a staff writer at Tom’s Hardware. He reviews motherboards and PC components.


g-unit1111: Love to see that AMD is coming very close to matching NVIDIA's best cards while at the same time costing a lot less than the comparable NVIDIA product. Some competition in the graphics department is very badly needed these days.

pahbi: I was all set to build a new all-AMD PC, but seeing all the problems folks are having with 5700 XT GPUs pushed me back to an Intel/Nvidia build.

Seems like crashes or underwhelming drivers should have been one of the cons for this card.

mitch074, replying to pahbi: Why would unstable GPU drivers make you buy an Intel CPU? Well, enjoy your CPU security vulnerabilities...

waltc3, replying to pahbi: But you see, the problem is that with my 50th Anniversary Edition 5700 XT, I'm not having crashes or underwhelming drivers, so it's indeed hard to sympathize...;)

JayGau: Kind of disappointing to see $500-range cards doing as well as my EVGA RTX 2080 XC Ultra, which I paid $750 for five months ago. And even more disappointing to see that the Super version of this model is now cheaper than that ($730 on Amazon). Maybe when the next-gen cards arrive I should buy the Ti version right away. It costs an arm and a leg, but at least you know your card won't be beaten by cheaper ones a few months later.

waltc3, replying to JayGau: Don't be so hard on yourself....;) Just be delighted you weren't one of those poor suckers who spent $1400 or more for a 3D card. The same thing will happen to those GPUs as well; it may just take a bit longer, is all. Progress is inevitable and inexorable. No matter what you buy, it will be eclipsed by something better; it's only a matter of time. That's why it's best to spend as little as you can to get performance and quality that are acceptable to you. It leaves room for the "next" GPU, and so on.

JayGau, replying to waltc3: Thank you for the comforting, I appreciate it :)

I actually still like my 2080 very much. It can run any game at 2K max details above 70 fps. The only game I cannot max out without dropping below 50 fps is Control, but according to benchmarks and reviews even the Ti version struggles to run that game (thanks to the full-scale implementation of ray tracing... and it's beautiful!).

I know there will always be progress, but my frustration is more about price matching. I have been buying graphics cards for almost 20 years and prices have never been so inconsistent. Having paid $750 for a 2080 five months ago only to see a $500 2070 deliver the same performance is disappointing at best.

But I also understand that AMD's return to competition after years of NVIDIA domination can lead to some strangeness like that. At least the RTX 2080 doesn't seem to be available anymore (aside from third-party sellers at ridiculously high prices), and only the Super versions can be purchased, at a price similar to what the non-Super models cost before. So it looks like consistency is back, but too bad it's five months too late for me!

waltc3, replying to JayGau: It's amusing to think about things... Years ago, before 3dfx and the V1 and then the V2, everything was 2D; "3D" hadn't yet come to market in a viable, playable form. The card to have in those days was the Matrox Millennium. It cost $475-$575, probably ~$800 today with inflation, depending on where you bought it. I had one and it was a great card, but I can't recall whether it had 4MB or 8MB of VRAM. Anyway, for the same or a bit more money, look at what we can get now! GBs of onboard VRAM... processors maybe 1000X faster than in those days and infinitely faster at processing polygons! You're running 2K; I'm running 4K, but at either res it's still amazing to think how much things have improved! You seem very happy with your choice, and that's what it's all about, isn't it?....;) Of course, at 4K with max eye candy I don't get 70 fps most of the time, but in some games I get a tad more, and when the fps drops below 60, as it often does, it's still stutter-free and smooth, so I'm happy, too! Anyway, "new and improved" is always just around the bend...;) No such thing as "future proof"; learned that long ago! Happy gaming!