
Nvidia RTX 4070 Founders Edition Overclocking

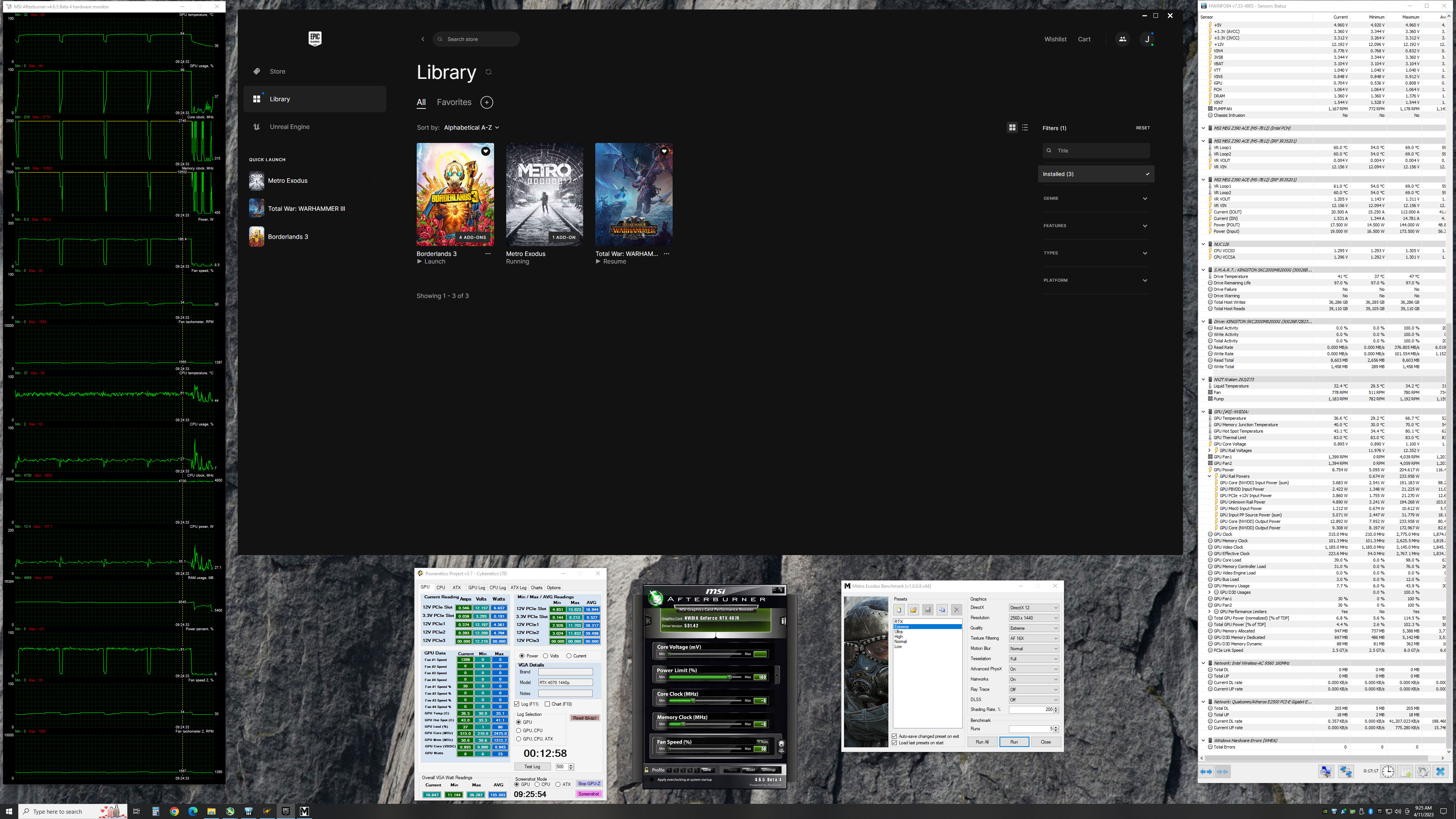

In the above images, you can see the results of our power testing that shows clocks, power, and temperatures. The big takeaway is that the card ran quite cool on both the GPU and VRAM, and you can see the clocks were hitting well over 2.7GHz in the gaming test. As for overclocking, there's trial and error as usual, and our results may not be representative of other cards — each card behaves slightly differently. We attempt to dial in stable settings while running some stress tests, but what at first appears to work just fine may crash once we start running through our gaming suite.

We start by maxing out the power limit, which in this case was 110% — and again, that can vary by card manufacturer and model. Most of the RTX 40-series cards we've tested can't do more than about +150 MHz on the cores, but we were able to hit up to +250 MHz on the RTX 4070. We backed off slightly to a +225 MHz overclock on the GPU to keep things fully stable.

The GDDR6X memory was able to reach +1450 MHz before showing some graphical corruption at +1500 MHz. Because Nvidia has error detection and retry, a memory overclock that's slightly too aggressive can lose performance to retried transfers before you see any artifacts, so you generally don't want to fully max out the memory speed. We backed off to +1350 MHz. With both the GPU and VRAM overclocks and a fan curve set to ramp from 30% at 30C up to 100% at 80C, we were able to run our full suite of gaming tests at 1080p and 1440p ultra without any issues.
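For readers who want to see what that fan curve works out to at a given temperature, here is a minimal sketch in Python. It assumes the ramp is a straight line between the two stated points and that the fan holds at 30% below 30C and at 100% above 80C; the text only says "ramp," so both the linear shape and the clamping are assumptions, and the function name `fan_speed_percent` is purely illustrative.

```python
def fan_speed_percent(temp_c: float) -> float:
    """Fan speed (%) for a GPU temperature (C) on an assumed linear curve.

    Endpoints come from the article: 30% at 30C and 100% at 80C.
    The straight-line ramp and the clamping outside that range are assumptions.
    """
    low_temp, low_speed = 30.0, 30.0
    high_temp, high_speed = 80.0, 100.0

    # Hold the endpoint speeds outside the ramp range.
    if temp_c <= low_temp:
        return low_speed
    if temp_c >= high_temp:
        return high_speed

    # Linear interpolation between the two endpoints.
    slope = (high_speed - low_speed) / (high_temp - low_temp)
    return low_speed + slope * (temp_c - low_temp)


if __name__ == "__main__":
    for temp in (25, 30, 55, 70, 80, 90):
        print(f"{temp}C -> {fan_speed_percent(temp):.1f}%")
```

Under these assumptions the fan gains 1.4 percentage points per degree, so a card sitting at 55C would run its fan at roughly 65%.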

As with other RTX 40-series cards, there's no way to increase the GPU voltage short of doing a voltage mod (not something we wanted to do), and that seems to be a limiting factor. GPU clocks did break the 3 GHz mark at times, and we'll have the overclocking results in our charts for reference.

Nvidia RTX 4070 Test Setup

We updated our GPU test PC at the end of last year with a Core i9-13900K, though we continue to also test reference GPUs on our 2022 system that includes a Core i9-12900K for our GPU benchmarks hierarchy. (We'll be updating that later today, once the embargo has passed.) For the RTX 4070, our review will focus on the 13900K performance, which ensures (as much as possible) that we're not CPU limited.

TOM'S HARDWARE INTEL 13TH GEN PC

Intel Core i9-13900K

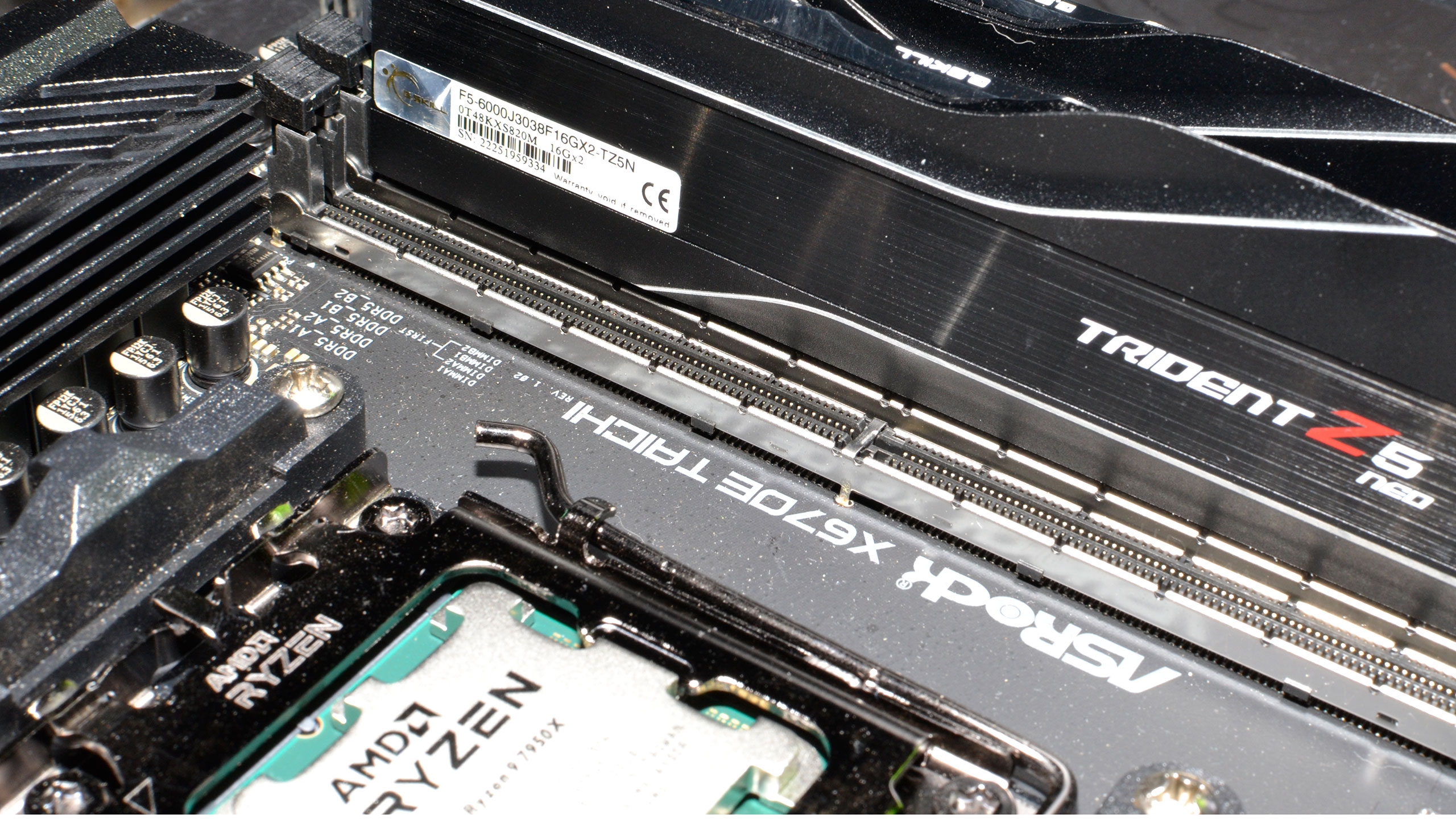

MSI MEG Z790 Ace DDR5

G.Skill Trident Z5 2x16GB DDR5-6600 CL34

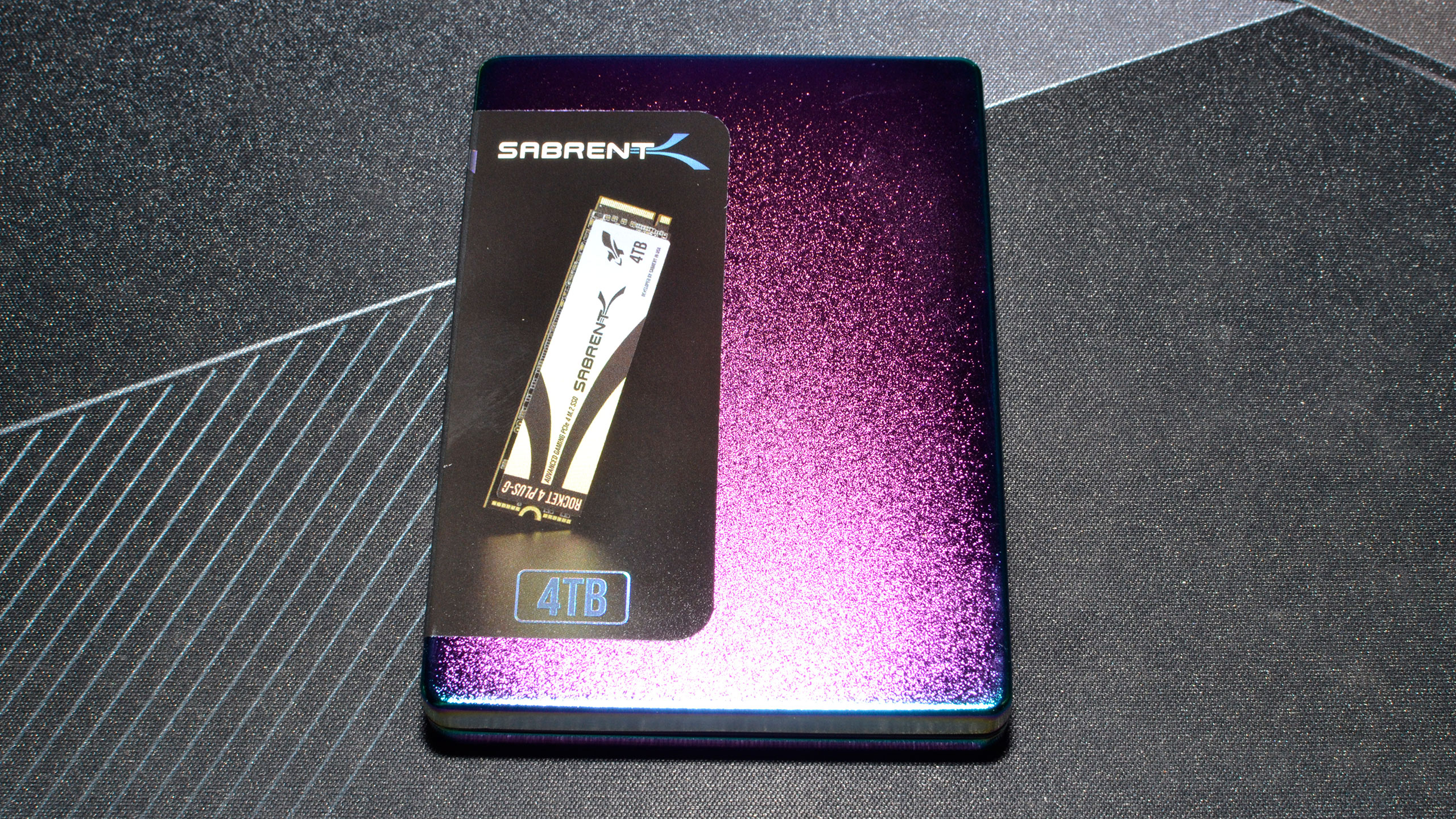

Sabrent Rocket 4 Plus-G 4TB

be quiet! 1500W Dark Power Pro 12

Cooler Master PL360 Flux

Windows 11 Pro 64-bit

TOM'S HARDWARE 2022 PC

Intel Core i9-12900K

MSI Pro Z690-A WiFi DDR4

Corsair 2x16GB DDR4-3600 CL16

Crucial P5 Plus 2TB

Cooler Master MWE 1250 V2 Gold

Corsair H150i Elite Capellix

Cooler Master HAF500

Windows 11 Pro 64-bit

OTHER GRAPHICS CARDS

AMD RX 7900 XT

AMD RX 6950 XT

AMD RX 6900 XT

AMD RX 6800 XT

AMD RX 6800

Nvidia RTX 4080

Nvidia RTX 4070 Ti

Nvidia RTX 3080 Ti

Nvidia RTX 3080 (10GB)

Nvidia RTX 3070 Ti

Nvidia RTX 3070

Multiple games have been updated over the past few months, so we retested all of the cards for this review (though some of the tests were done with the previous Nvidia drivers, as we've only had the review drivers for about a week). We're running Nvidia 531.41 and 531.42 drivers, and AMD 23.3.2. Our professional and AI workloads were also tested on the 12900K PC, since using a second system let us run more of our testing regimen in parallel.

Our current test suite consists of 15 games. Of these, nine support DirectX Raytracing (DXR), but we only enable the DXR features in six of the games. At the time of testing, 12 of the games support DLSS 2, five support DLSS 3, and five support FSR 2. We'll cover performance with the various upscaling modes enabled in a separate gaming section.

We tested all of the GPUs at 4K, 1440p, and 1080p using "ultra" settings, which basically means the highest supported preset if there is one, and in some cases maxing out all the other settings for good measure (except for MSAA or super sampling). We also tested at 1080p "medium" to show the higher frame rates games can hit at less demanding settings. Our PC is hooked up to a Samsung Odyssey Neo G8 32, one of the best gaming monitors around, just so we could fully experience some of the higher frame rates that might be available. G-Sync and FreeSync were enabled, as appropriate.
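To give a sense of how much testing that implies per card, the sketch below enumerates the combinations in Python. It assumes every one of the 15 games is run at every one of the four resolution and preset combinations, which the text does not state outright, and it ignores the separate DXR and upscaling runs. The game names are placeholders, and `build_test_matrix` is a hypothetical helper, not part of any real benchmarking tool.

```python
from itertools import product

GAME_COUNT = 15  # size of the test suite, as stated in the article

# Resolution and preset combinations described in the text.
TEST_MODES = [
    ("4K", "ultra"),
    ("1440p", "ultra"),
    ("1080p", "ultra"),
    ("1080p", "medium"),
]


def build_test_matrix(games):
    """Return every (game, resolution, preset) combination.

    Assumes each game is run in each mode, which is an upper bound
    rather than a confirmed description of the test procedure.
    """
    return [(game, res, preset) for game, (res, preset) in product(games, TEST_MODES)]


# Placeholder names; the article does not list the games in this section.
games = [f"game_{i:02d}" for i in range(1, GAME_COUNT + 1)]
matrix = build_test_matrix(games)

if __name__ == "__main__":
    print(f"{len(matrix)} benchmark runs per card")
    print(matrix[0])
```

With those assumptions, a single pass comes to 60 runs per card, before any repeat runs or upscaling tests.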

When we assembled the new test PC, we installed all of the then-latest Windows 11 updates. We're running Windows 11 22H2, but we've used InControl to lock our test PC to that major release for the foreseeable future (security updates still get installed on occasion).

Our new test PC includes Nvidia's PCAT v2 (Power Capture and Analysis Tool) hardware, which means we can grab real power use, GPU clocks, and more during all of our gaming benchmarks. We'll cover those results in our page on power use.

Finally, because GPUs aren't purely for gaming these days, we've run some professional application tests, and we also ran some Stable Diffusion benchmarks to see how AI workloads scale on the various GPUs.



Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Elusive Ruse: If there were no previous gen high-end GPUs available this would be a good buy but at this price you can just buy a new AIB 6950XT.

PlaneInTheSky: So it offers similar performance to the 3080 and launches at an only slightly lower MSRP.

Going by price leaks of some AIB, we will be lucky if we're not going backwards in price / performance.

In 2 years time since the 3080, performance per $ has barely increased.

The 12GB VRAM is depressing too, considering a RX 6950XT offers 16GB for the same price.

DLSS 3.0 doesn't interest me in the slightest since it's just glorified frame interpolation that does nothing to help responsiveness, it actually adds lag.

It's hard to get excited about PC Gaming when it is in such a terrible state.

PlaneInTheSky: In Europe, price/performance of Nvidia GPU generation-generation has basically flatlined now.

3080 MSRP: €699

4070 MSRP: €669

That's the state PC gaming is in now. In 2 years time, no improvement in price/performance.

It is a good example of what happens in a duopoly market without competition.

peachpuff: PlaneInTheSky said: "In 2 years time since the 3080, performance per $ has barely increased."

That's really sad, how many gold plated leather jackets does jensen really need?

DSzymborski: The 4070 is releasing at a significantly lower price than the 3080. $699 in September 2020 is $810 in March 2023 dollars. $200 over the 4070 MSRP of $599 is a significant amount. Now, few 4070s will be available at $599, but then few 3080s were actually available at $699 (which, again, is $810 in current dollars).

The 3070 was released at an MSRP of $499, which is $578 in March 2023 dollars.

Exphius_Mortum: Title of the article needs a little adjustment, it should read as follows:

Nvidia GeForce RTX 4070 Review: Mainstream Ada Arrives at Enthusiast Pricing

Elusive Ruse: @JarredWaltonGPU thanks for the review, I saw that you retested all the GPUs with recent drivers which must have taken a huge effort (y)

PS: Are you planning to add or replace some older titles with new games for future benchmarks?

btmedic04: Hey look, a 1.65% performance improvement per 1% more money card when compared to the 3070. It's disappointing that's where we are at with gpus these days

healthy Pro-teen: PlaneInTheSky said: "In Europe, price/performance of Nvidia GPU generation-generation has basically flatlined now. 3080 MSRP: €699. 4070 MSRP: €669. That's the state PC gaming is in now. In 2 years time, no improvement in price/performance. It is a good example of what happens in a duopoly market without competition."

A duopoly at best, it's almost a monopoly. AMD will probably undercut it by a small amount, get middling reviews and then heavily discount the GPU only when most people have already decided on the 4070. 6950XT is discounted right now and offers better value and the average gamer doesn't know it, so 4070 will sell regardless.