QNAP TS-453 Pro-8G NAS Review

Test Setup And Methodology

Test Setup

Our test system (client PC) consists of the following components:

| Test System Configuration | |
|---|---|
| Processor | Intel Pentium G3258 (3MB Cache, 3.2GHz) |
| Motherboard/Platform | Gigabyte GA-Z97X-UD3H |
| Memory | 8GB DDR3-1600 (2 x 4GB) |
| Graphics | AMD Radeon HD 7870 |
| Storage | SSD: OCZ Vertex 4 256GB; HDD: Samsung F4 2000GB |
| Networking | Intel Pro/1000 PT Dual-Port Server Adapter |
| Power Supply | Seasonic X-520 |
| Cooling | Thermalright SilverArrow SB-E Extreme |
| Case | Cooler Master HAF XB |
| Operating System | Windows 7 64-bit Service Pack 1 |
| Network Switch | TL-SG3216 16-port GbE managed switch (LACP and jumbo frames support) |
| Ethernet Cabling | CAT 6e, 2m |

As you can see, we use a capable client test system and run all tests from a fast SSD. This helps ensure there are no bottlenecks on our side: the SSD can reach up to 560 MB/s sequential read and 510 MB/s sequential write, far more than a Gigabit Ethernet link (about 125 MB/s in theory) can carry.
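The bottleneck reasoning above can be checked with a little arithmetic. The following is only an illustrative sketch: the function names are hypothetical, protocol overhead is ignored, and the two-link case assumes both ports of the client's dual-port adapter are aggregated.

```python
def link_ceiling_mb_s(link_gbit_s: float, links: int = 1) -> float:
    """Theoretical ceiling of one or more aggregated links in MB/s.

    Ignores Ethernet/TCP/SMB overhead, so real throughput is lower.
    """
    return link_gbit_s * 1000 / 8 * links


def client_is_bottleneck(ssd_read_mb_s: float, ssd_write_mb_s: float,
                         link_gbit_s: float, links: int = 1) -> bool:
    """True if the client SSD is slower than the network can deliver."""
    slowest_ssd_rate = min(ssd_read_mb_s, ssd_write_mb_s)
    return slowest_ssd_rate < link_ceiling_mb_s(link_gbit_s, links)


# SSD figures quoted in the text: 560 MB/s read, 510 MB/s write.
single_link = client_is_bottleneck(560, 510, link_gbit_s=1.0)
dual_link = client_is_bottleneck(560, 510, link_gbit_s=1.0, links=2)
print(link_ceiling_mb_s(1.0), single_link, dual_link)
```

Under these assumptions the SSD stays ahead of the network with either one or two Gigabit links.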

| NAS Configuration | |
|---|---|
| Internal Disks | 4x Seagate ST500DM005 500GB (HD502HJ, SATA 6Gb/s, 7200 RPM, 16MB) |
| External Disk | SSD OCZ Agility 2 60GB in USB 3.0 enclosure |
| Firmware | QTS 4.1.1 |

Methodology

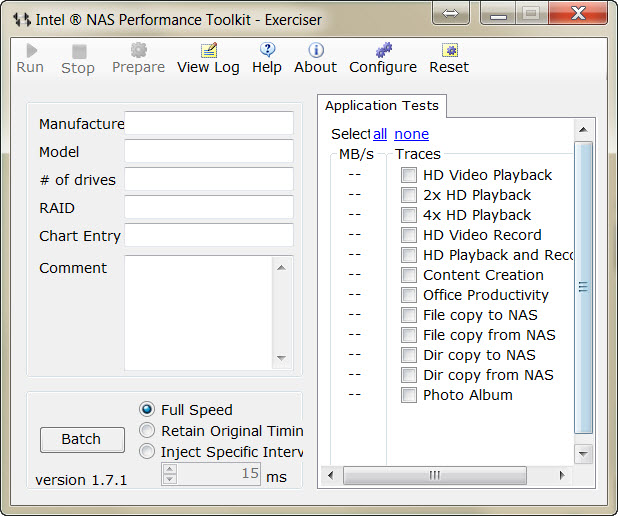

We use three different programs to evaluate NAS performance. The first is Intel's NAS Performance Toolkit. Its one drawback is that a client PC with more than 2GB of memory heavily skews the HD Video Record and File Copy to NAS tests, which end up measuring the client's RAM buffer speed rather than network performance. We therefore limit our test PC's maximum memory to 2GB via msconfig's advanced options. We also use the utility's batch run function, which repeats the selected tests five times and averages the results.

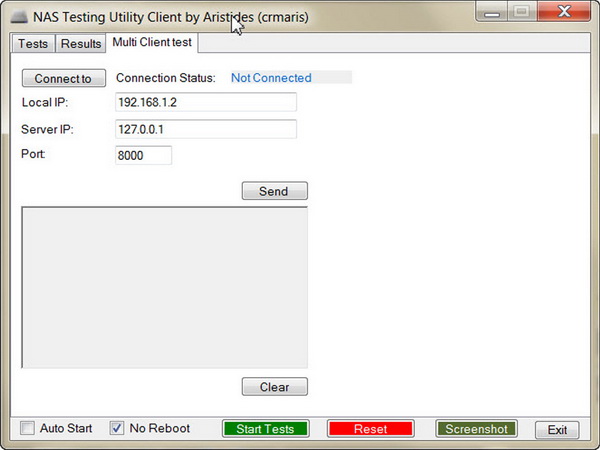

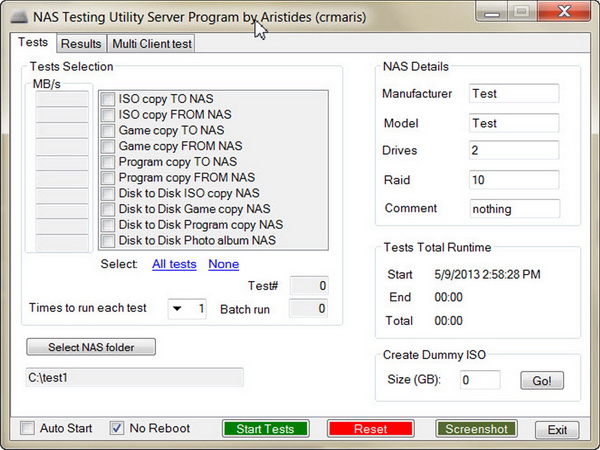

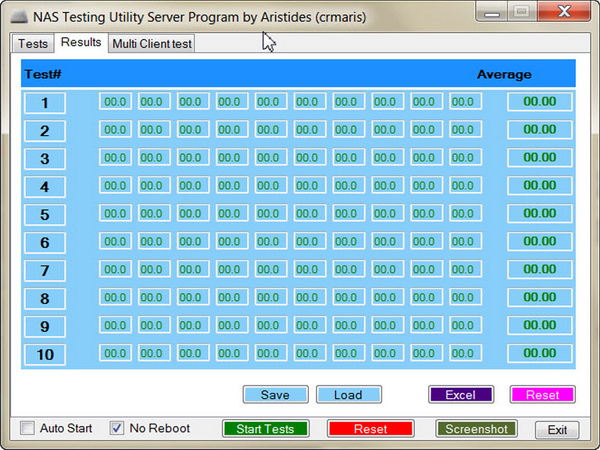

The second program is custom-made. It performs 10 basic file transfer tests and measures the average speed in MB/s for each. To make the results as accurate as possible, we run each test 10 times and report the average.
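The custom tool itself is not published, so the following is only a minimal sketch of the run-and-average idea, not the program used in this review. The function names and file layout are hypothetical; in practice `dst_dir` would point at a share on the NAS, and client-side caching can inflate the numbers, as noted for the Intel toolkit.

```python
import os
import shutil
import tempfile
import time
from statistics import mean


def copy_speed_mb_s(src: str, dst: str) -> float:
    """Copy src to dst once and return the transfer speed in MB/s."""
    size_mb = os.path.getsize(src) / 1_000_000
    start = time.perf_counter()
    shutil.copyfile(src, dst)
    elapsed = max(time.perf_counter() - start, 1e-9)  # avoid division by zero
    return size_mb / elapsed


def average_copy_speed(src: str, dst_dir: str, runs: int = 10) -> float:
    """Repeat the copy `runs` times and return the mean speed in MB/s."""
    speeds = []
    for i in range(runs):
        dst = os.path.join(dst_dir, f"run_{i}.bin")
        speeds.append(copy_speed_mb_s(src, dst))
        os.remove(dst)  # start every run from the same state
    return mean(speeds)


if __name__ == "__main__":
    # Demo against a local temp directory; swap in a NAS share to test a NAS.
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "sample.bin")
        with open(src, "wb") as f:
            f.write(os.urandom(5_000_000))
        print(f"{average_copy_speed(src, tmp, runs=10):.1f} MB/s")
```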

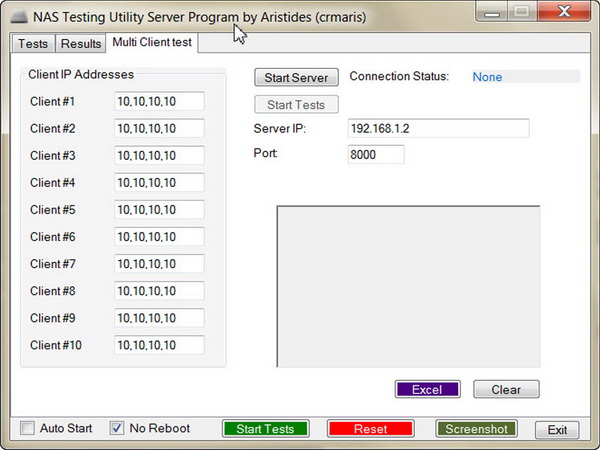

We also perform multiple client tests (up to 10 clients are supported by one server instance) through the same program. The server utility runs on the main workstation/server, while clients run the client version. All are synchronized and operate in parallel; after all of the tests are finished, the clients report their results to the server, which sums them up and transfers them to an Excel sheet to generate the corresponding graphs.
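The server-side summing step described above could look roughly like this. It is a sketch under assumptions: the report format (one dictionary of test name to MB/s per client) and the function name are hypothetical, and the export to Excel is left out.

```python
from collections import defaultdict


def aggregate_clients(reports: list[dict[str, float]]) -> dict[str, float]:
    """Sum per-client speeds (MB/s) into one total per test."""
    totals: defaultdict[str, float] = defaultdict(float)
    for report in reports:
        for test_name, speed_mb_s in report.items():
            totals[test_name] += speed_mb_s
    return dict(totals)


# Two hypothetical clients reporting the same two tests.
client_reports = [
    {"read": 50.0, "write": 40.0},
    {"read": 30.0, "write": 20.0},
]
print(aggregate_clients(client_reports))
```

Summing, rather than averaging, gives the aggregate throughput the NAS sustained while serving all clients in parallel.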

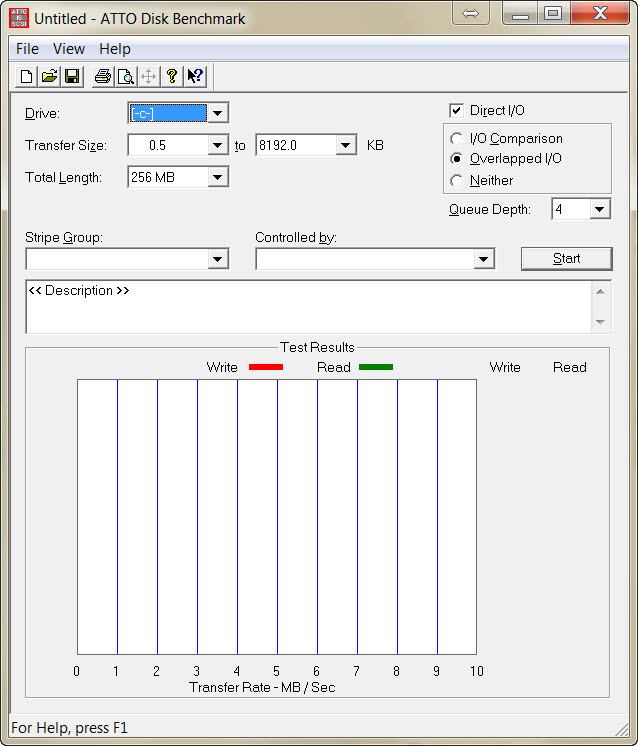

The third program we use in our test sessions is ATTO, a well-known storage benchmark. Because ATTO cannot access network devices directly, we map a shared folder on the NAS to a local drive letter before benchmarking.


Aris Mpitziopoulos is a contributing editor at Tom's Hardware, covering PSUs.

- elbutchos: I know it is not supposed to support 16GB RAM but please guys bust this myth. Thank you in advance.

- Aris_Mp: This is not the NAS mainboard's fault; the CPU cannot support more than 8 GB of RAM. Check here: http://ark.intel.com/products/78867/Intel-Celeron-Processor-J1900-2M-Cache-up-to-2_42-GHz

- milkod2001: Any chance you guys could review the Zyxel NAS540? I'd love to see how the product reviewed above stands against the €226 Zyxel NAS540.

  @blackmagnum old computers usually have an old, big, inefficient CPU (overkill for a NAS), sitting in a big, old, ugly, dusty case.

  For a NAS you want something small, efficient, cool and quiet. It's better to sell the old PC and get a ready-to-go NAS solution or build your own from scratch.

- nekromobo: Could you please test the TS-453 or TS-451 with an all-SSD array? Or just try 3x HDD + 1 SSD cache acceleration disk and add the results. I'm really thinking of buying an SSD cache disk for my QNAP but can't decide. Also, can you recommend which SSD to buy? I hear the SSD would need DZAT, not sure if Intel or Samsung supports that. Please investigate!

- Aris_Mp: In the next reviews I will do this (use a single SSD as cache). However, I don't know if any of my next NAS reviews will be posted here.

- Rookie_MIB: I have a mobo with one of the J1900 chips (ASRock Q1900M) and it's a surprisingly capable little chip. Since it has a few PCI-e slots I'm tempted to turn it into a NAS with some SATA adapters. Slap in FreeNAS or just a good Linux distro w/raid and it'd be good to go.

- Eggz: Why are these expensive NAS boxes still on 1 Gbps interfaces? That's such an old standard! Aren't there 10 Gbps solutions in a similar form factor? I am pretty certain I recall seeing some small 10 Gbps NAS solutions that would be much faster, and I think someone would be able to make one for less money than this.