Tom's Hardware Verdict

Nvidia's RTX 3050 delivers good performance for its theoretical $249 starting price. Unfortunately, that also means there's not a snowball's chance in hell that it won't sell for radically inflated prices. It lands between the previous-gen RTX 2060 and GTX 1660 Super, both of which currently sell for far more than Nvidia's asking price.

Pros

+ Theoretically good price

+ Much better than AMD RX 6500 XT

+ Comes with plenty of VRAM

+ Full PCIe 4.0 x16 connection

Cons

- Little chance it will sell for MSRP

- Slower than RTX 2060 and RX 5600 XT

- 8GB means it can mine crypto


The Nvidia GeForce RTX 3050 feels a bit like a breath of fresh air after last week's rather unimpressive launch of the AMD Radeon RX 6500 XT. On paper, there aren't any major compromises to speak of, and the launch price falls squarely into the mainstream market at $249. Unfortunately, GPU prices are still extremely inflated, and while cryptocurrency prices have plummeted, that doesn't mean we'll see reasonable prices on the best graphics cards any time soon. But setting aside real-world pricing and supply, how does the RTX 3050 stack up to the competition?

Thankfully, Nvidia hasn't put the Ampere architecture on the chopping block to create the desktop RTX 3050. While the GA107 used in mobile RTX 3050 Ti and RTX 3050 only uses an x8 PCIe interface, the desktop RTX 3050 uses the larger GA106 chip and includes full PCIe 4.0 x16 connectivity, along with full support for all the latest video codecs. It also includes 8GB of GDDR6 memory, though on a narrower 128-bit interface than the RTX 3060. Here's the full specs overview of Nvidia's RTX 3050, along with some competing GPUs.

| Graphics Card | RTX 3050 | RTX 2060 | GTX 1650 Super | RX 6500 XT |
|---|---|---|---|---|
| Architecture | GA106 | TU106 | TU116 | Navi 24 |
| Process Technology | Samsung 8N | TSMC 12FFN | TSMC 12FFN | TSMC N6 |
| Transistors (Billion) | 12 | 10.8 | 6.6 | 5.4 |
| Die size (mm^2) | 276 | 445 | 284 | 107 |
| SMs / CUs | 20 | 30 | 20 | 16 |
| GPU Cores | 2560 | 1920 | 1280 | 1024 |
| Tensor Cores | 80 | 240 | N/A | N/A |
| RT Cores | 20 | 30 | N/A | 16 |
| Boost Clock (MHz) | 1777 | 1680 | 1725 | 2815 |
| VRAM Speed (Gbps) | 14 | 14 | 12 | 18 |
| VRAM (GB) | 8 | 6 | 4 | 4 |
| VRAM Bus Width | 128 | 192 | 128 | 64 |
| ROPs | 48 | 48 | 48 | 32 |
| TMUs | 80 | 120 | 80 | 64 |
| TFLOPS FP32 (Boost) | 9.1 | 6.5 | 4.4 | 5.8 |
| TFLOPS FP16 (Tensor) | 36 (73) | 52 | N/A | N/A |
| Bandwidth (GBps) | 224 | 336 | 192 | 144 |
| Board Power (Watts) | 130 | 160 | 100 | 107 |
| Launch Date | January 2022 | January 2019 | November 2019 | January 2022 |
| Official Launch MSRP | $249 | $349 | $159 | $199 |

Along with the RTX 3050, we've included the RTX 2060, GTX 1650 Super, and RX 6500 XT as points of reference. The official launch prices are obviously a bit of a joke right now, with most of these cards costing 50–100% more than the suggested values, but that's not likely to change any time soon. A key difference between the RTX 3050 and its nominal predecessors, the GTX 1650 and GTX 1650 Super, is that the 3050 adds RT and Tensor cores to the mix. That brings Nvidia's ray tracing and DLSS support to a new tier of hardware, and like all Ampere GPUs, these are second-gen RT and third-gen Tensor cores.
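The two derived rows in the spec table (FP32 TFLOPS and memory bandwidth) can be reproduced from the other rows. The sketch below is an illustration rather than anything from Nvidia or AMD: it assumes the usual convention of counting one fused multiply-add as two FLOPs per core per clock, and the function names are made up for this example.

```python
# Specs copied from the table above: (GPU cores, boost MHz, bus width in bits, VRAM Gbps)
SPECS = {
    "RTX 3050": (2560, 1777, 128, 14),
    "RTX 2060": (1920, 1680, 192, 14),
    "GTX 1650 Super": (1280, 1725, 128, 12),
    "RX 6500 XT": (1024, 2815, 64, 18),
}


def fp32_tflops(cores: int, boost_mhz: float) -> float:
    """Peak FP32 rate, assuming 2 FLOPs (one FMA) per core per clock."""
    return cores * 2 * boost_mhz * 1e6 / 1e12


def bandwidth_gbps(bus_width_bits: int, speed_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes times per-pin data rate."""
    return bus_width_bits / 8 * speed_gbps


for name, (cores, boost, bus, speed) in SPECS.items():
    tflops = fp32_tflops(cores, boost)
    bandwidth = bandwidth_gbps(bus, speed)
    print(f"{name}: {tflops:.1f} TFLOPS FP32, {bandwidth:.0f} GB/s")
```

These are theoretical peaks at the rated boost clock. As the power and clock testing later in the review shows, real-world clocks on Nvidia cards often run higher than the official boost figure.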

Of course, that makes performance comparisons between the old and new GPUs sort of pointless in some cases. For example, Nvidia showed how the RTX 3050 with RT and DLSS performed compared to the GTX 1650, GTX 1050 Ti, and GTX 1050. Naturally, those GPUs all scored zero in RT and DLSS tests since they can't run either one. Meanwhile, the GTX 1660 series can support ray tracing (in some games) but still lacks Tensor hardware. So, in effect, every GTX GPU scores zero on RT + DLSS performance, and the same applies to all AMD GPUs. That's not a particularly helpful comparison.

One interesting item to note is the die size for the various chips. Nvidia hasn't revealed a die size for the (currently) laptop-only GA107, but GA106 ends up roughly the same size as the TU116 chip used in the GTX 16-series cards, and 150% larger than AMD's tiny Navi 24. Some of that is thanks to TSMC's N6 process, and the cost per square mm for TSMC N6 is certainly higher than Samsung 8N, but die size definitely favors AMD.

At the same time, we've already said we think AMD went too far in cutting features and specs to keep the Navi 24 chip as small as possible. Look at all the other specs and RTX 3050 should easily dominate over AMD's RX 6500 XT, and it might even give the RX 6600 some competition at times. We'll have the RX 6600 in our performance charts, along with some other current and previous-gen GPUs. One thing to keep an eye on is how the RTX 3050 compares to the RTX 2060. It has quite a bit less bandwidth but also more compute performance. RTX 2060 will be faster for mining purposes (not necessarily a good thing) as well, which means the RTX 3050 will likely end up in the hands of more gamers going forward.

Obviously, real-world pricing and availability will be the deciding factors for any current graphics card purchase. Nvidia told us it has been working to build up inventory before launching its new GPUs, and it knows the RTX 3050 will be in very high demand thanks to its lower price. It's still going to sell out, but hopefully we'll see a decent number of cards available at reasonable prices with tomorrow's launch. However, once the initial supply gets cleared out, we'll have to wait and see how things develop going forward.

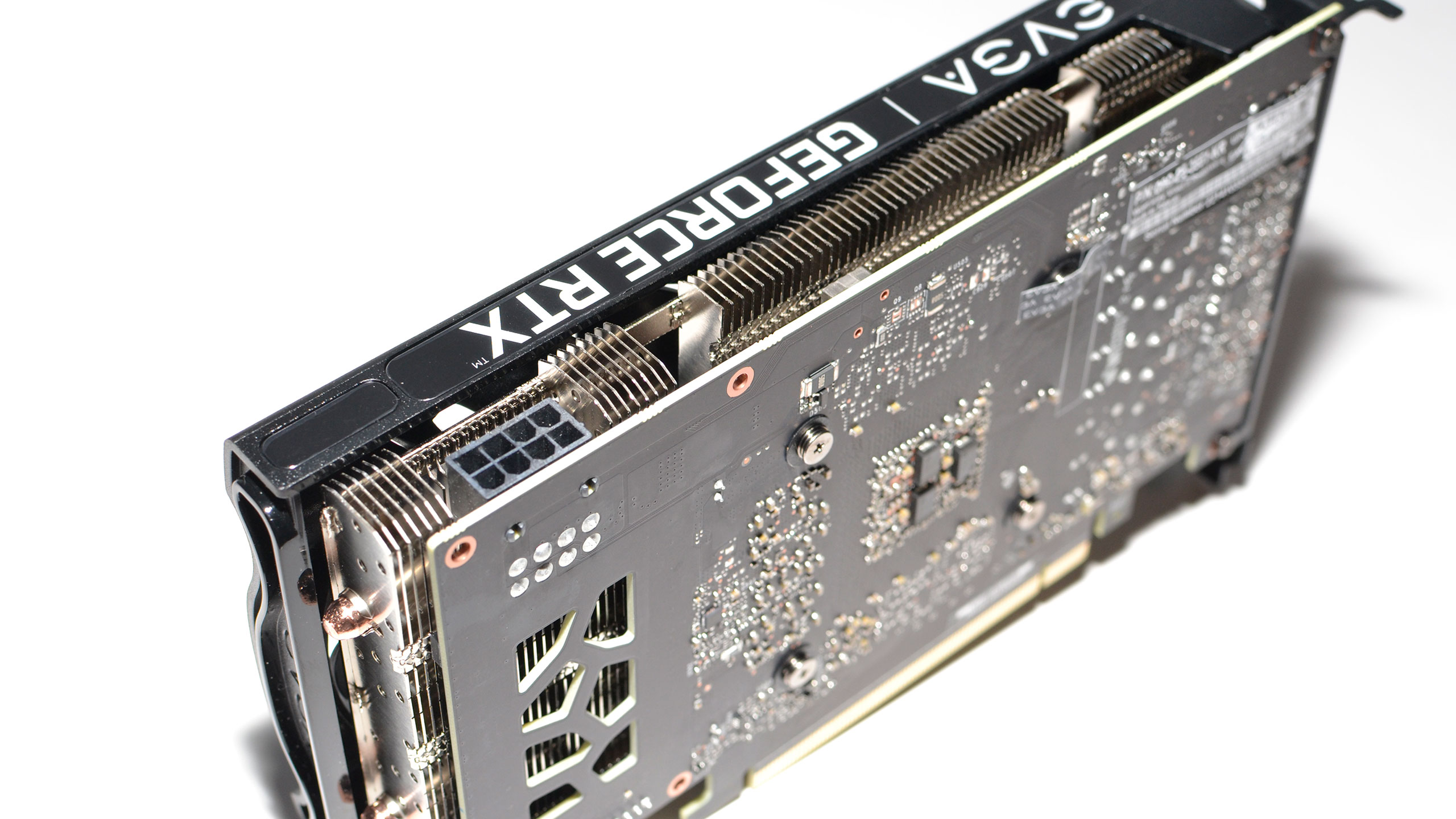

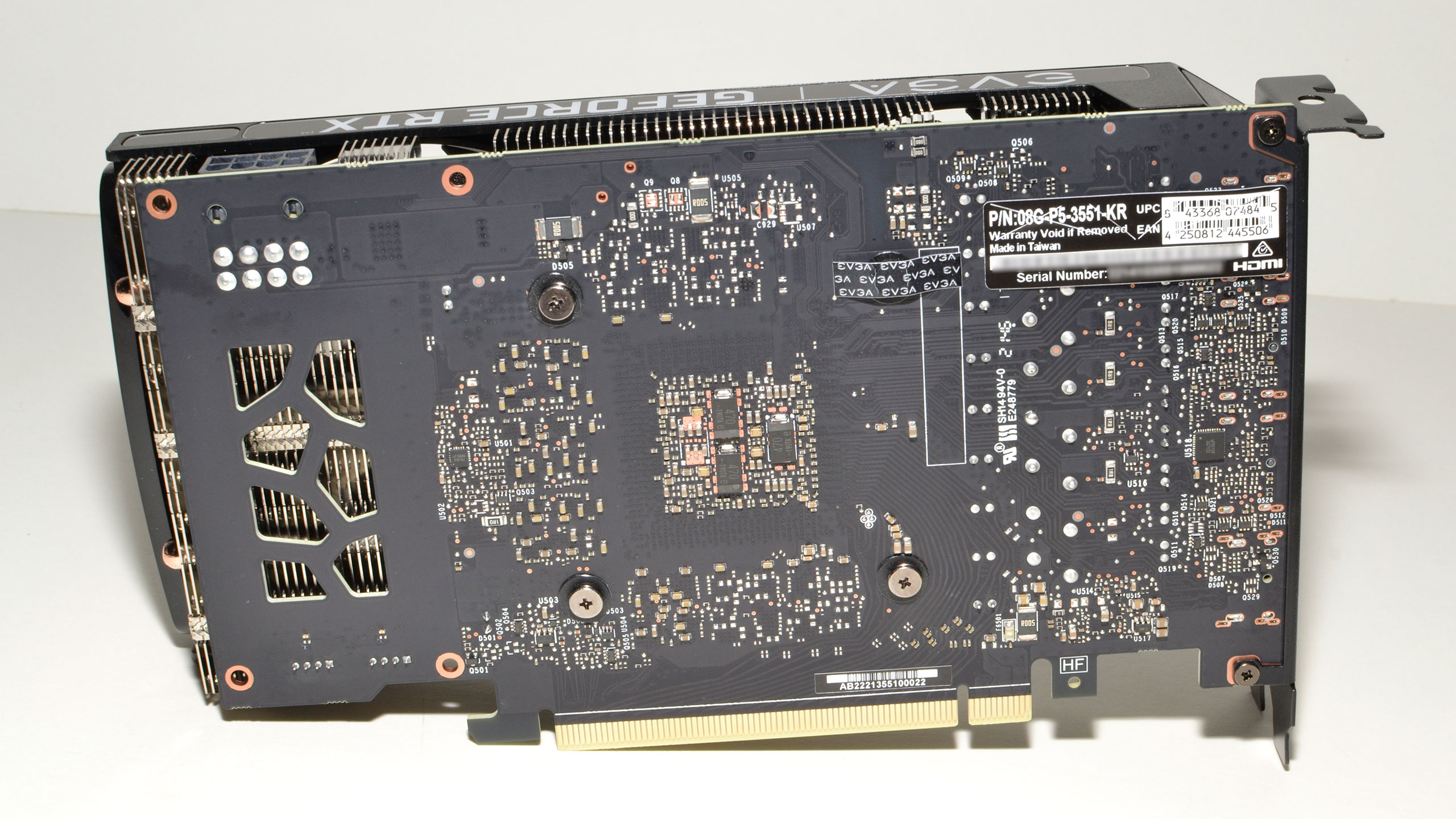

EVGA GeForce RTX 3050 XC Black

For our launch review, Nvidia provided us with an EVGA RTX 3050 XC Black… except, it sort of didn't. Officially, the XC Black runs at reference clocks, meaning a boost clock of 1777MHz. EVGA inadvertently put the non-Black 3050 XC Gaming VBIOS on the card, which gave it a modest factory overclock to 1845MHz boost clock. EVGA did send a corrected VBIOS, and we have now retested everything, but the initial data used the overclocked results.

Fundamentally, we're not particularly concerned with receiving a factory overclocked card. AMD sent us factory-overclocked RX 6600 XT, RX 6600, and RX 6500 XT cards. Nvidia has also sent some factory overclocked GPUs in the past. The performance difference between the XC Gaming and XC Black should only be about 2–3%, and not surprisingly, the XC Black ran just fine at the XC clocks. You could certainly manually overclock just about any RTX 3050 card to similar levels. If you're looking at this review and wondering if you should buy the XC Black, the only thing you really need to know is that performance is slightly lower than what we show here, and power consumption is also a tiny bit lower.

The XC Black comes with a suggested starting price of $249, meaning it will likely be available for that price in limited quantities for the launch tomorrow. After the initial launch, all bets are off. We also have a Zotac RTX 3050 Twin Edge OC (1807MHz boost clock), and we're working on testing that and will post that review in the near future. We have a pricing table in our conclusion that will certainly raise some eyebrows, if you were hoping for more cards selling at the $249 base price.

The RTX 3050 GPU doesn't require a lot in the way of power or cooling, and as a baseline design, the EVGA XC Black trims most of the extras you'll find on higher-cost models. There's no RGB lighting, and the card has two 87mm EVGA fans — it's essentially identical in appearance to the RTX 3060 EVGA card, except with less VRAM and fewer GPU cores. The card doesn't include a backplate, but there are still three DisplayPort 1.4a connections and a single HDMI 2.1 port, which is standard for just about every recent GPU introduction other than AMD's RX 6500 XT.

The card measures 202x110x38mm, only slightly longer than an x16 PCIe slot and also smaller than the XFX RX 6500 XT we reviewed. There's a single 8-pin PEG power connector that should provide more than enough power for the RTX 3050, and any power from the PCIe slot is just gravy. Interestingly, the XC Black weighs 527g, about 125g lighter than the EVGA RTX 3060. Some of that comes from the two extra memory chips on the 3060, but it also has a slightly reworked heatsink with two fewer copper heatpipes (not that the GPU really needs them).

Test Setup for GeForce RTX 3050

As discussed in our Radeon RX 6500 XT review, we've recently updated our GPU test PC and gaming suite. We're now using a Core i9-12900K processor, paired with a DDR4 motherboard (because DDR5 is nearly impossible to buy and ludicrously expensive right now). We also upgraded to Windows 11 Pro, since it's basically required to get the most out of Alder Lake. You can see the rest of the hardware in the boxout.

Besides the change in hardware, we've also updated our gaming test suite, focusing on newer and more demanding games. While we could include various esports games and lighter fare, we don't feel that's particularly useful. Just about any GPU can handle games like CS:GO, for example. Instead, we're pushing the GPU to its limits to see how the various cards fare.

We've selected seven games and test at four settings: 1080p "medium" (or thereabouts) and 1080p/1440p/4K "ultra" (basically maxed out settings except for SSAA). We'll only test at settings that make sense for the hardware, however, so for example we didn't test the RTX 3050 at 4K ultra. We intend to run every card at 1080p medium and ultra, as those will be used for determining ranking in our GPU hierarchy. We'll also include results with DLSS for the card being reviewed, in games that support the technology.

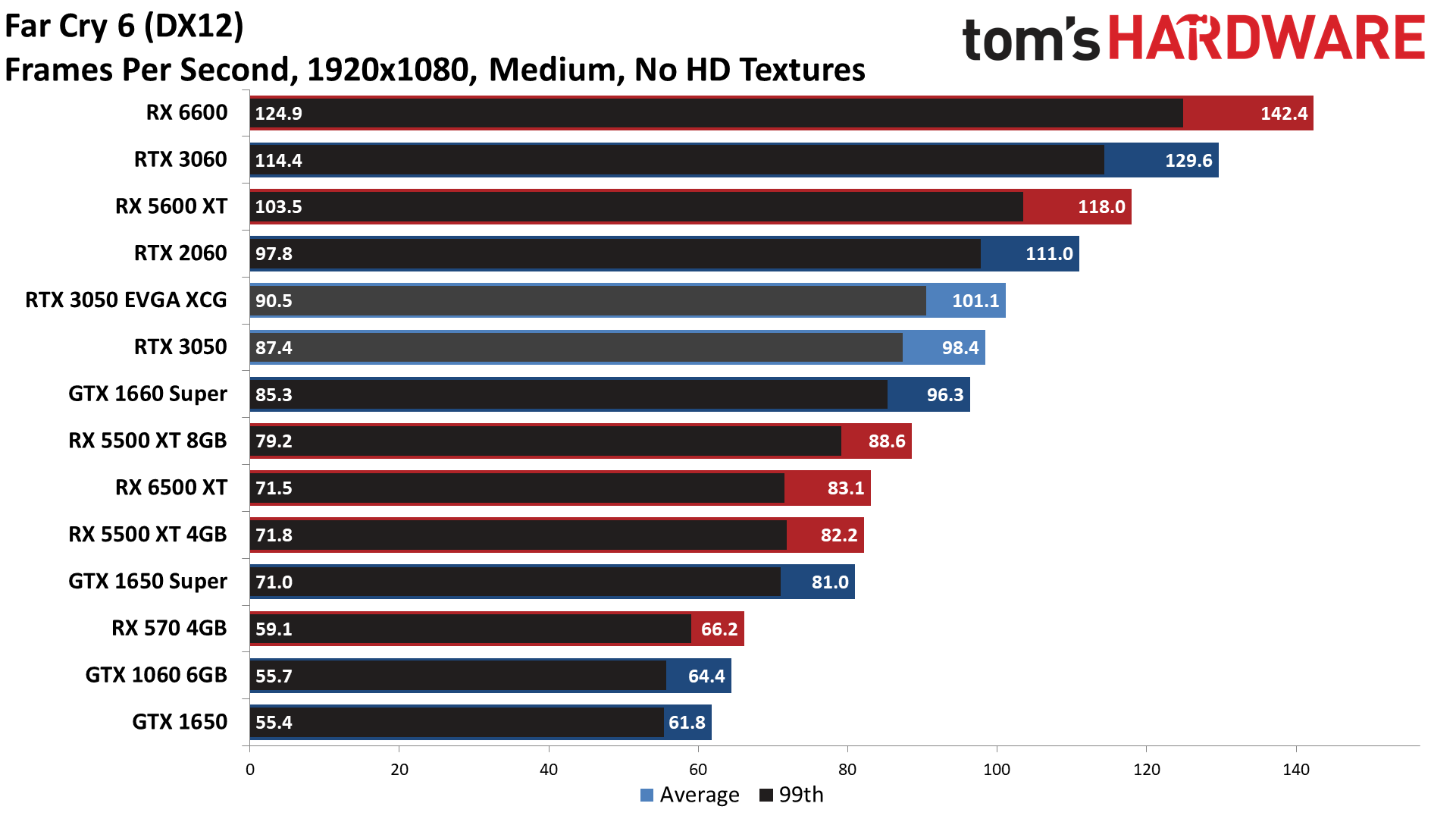

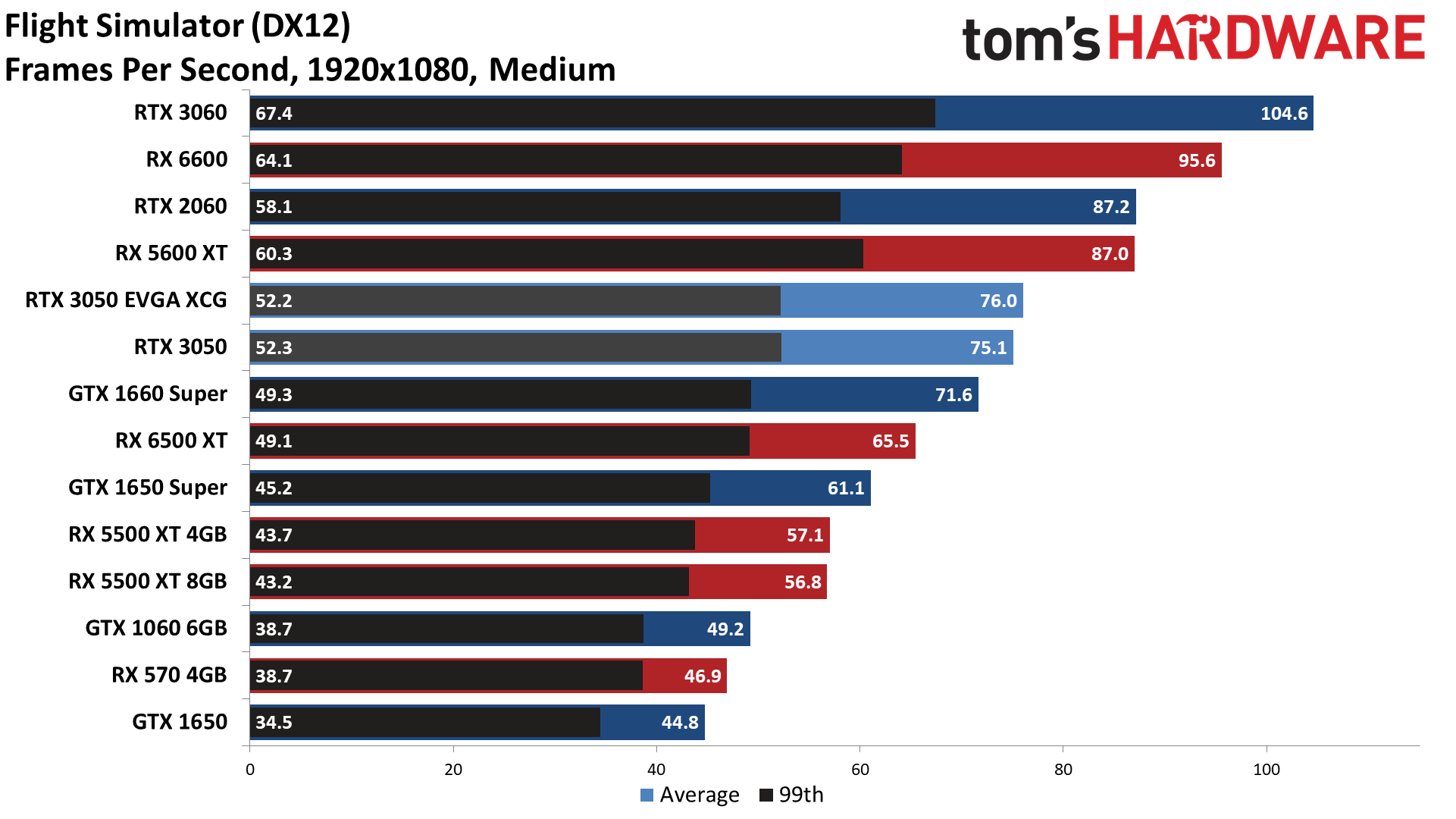

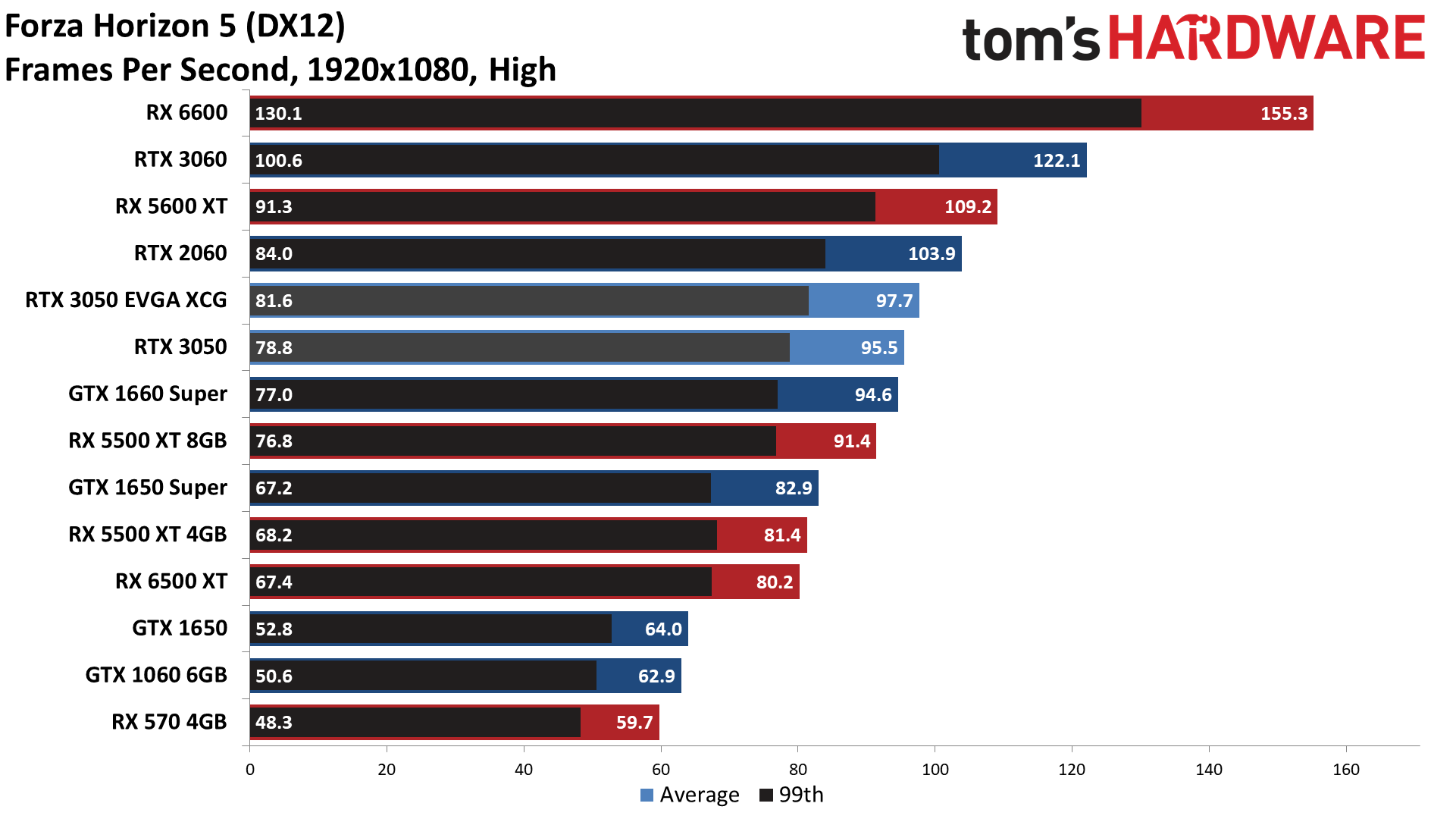

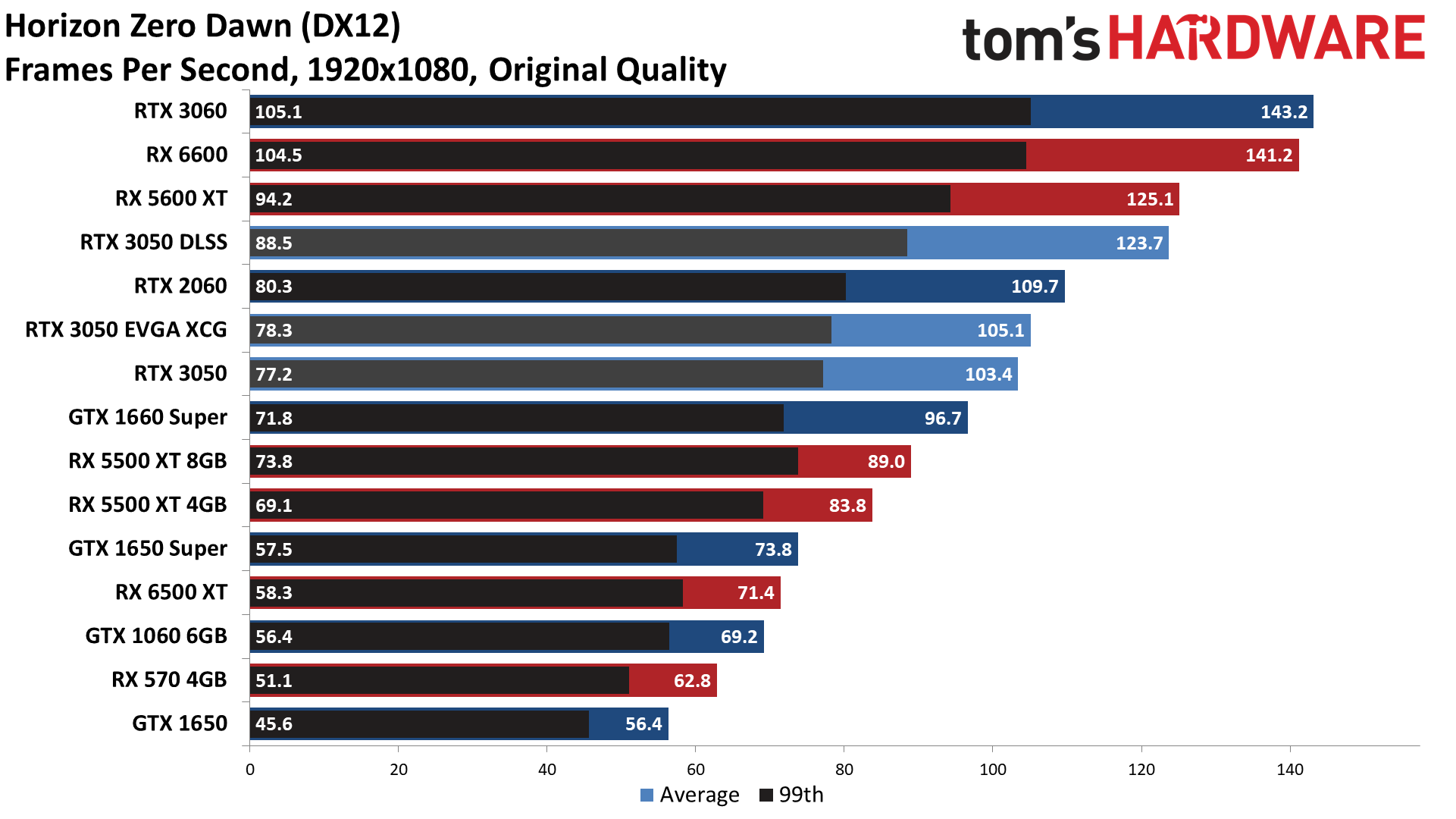

The games we're using for the coming year are Borderlands 3, Far Cry 6, Flight Simulator, Forza Horizon 5, Horizon Zero Dawn, Red Dead Redemption 2, and Watch Dogs Legion. Six of the seven games use DirectX 12 for the API, with RDR2 being the sole Vulkan representative. We didn't include any DX11 testing because, frankly, most modern games are opting for DX12 instead. We'll revisit and revamp the testing regimen over the coming year as needed, but this is what we're going with for now.

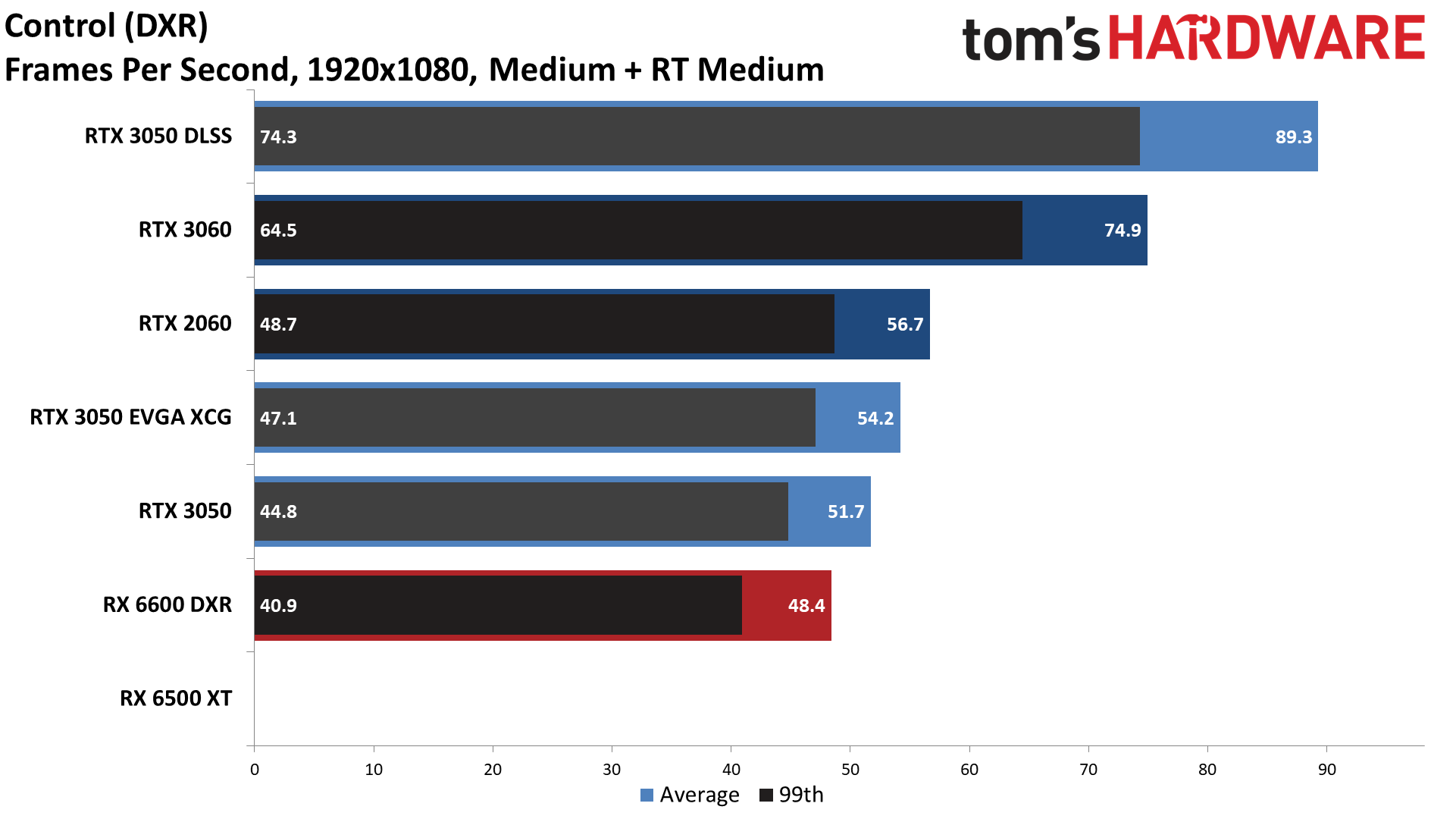

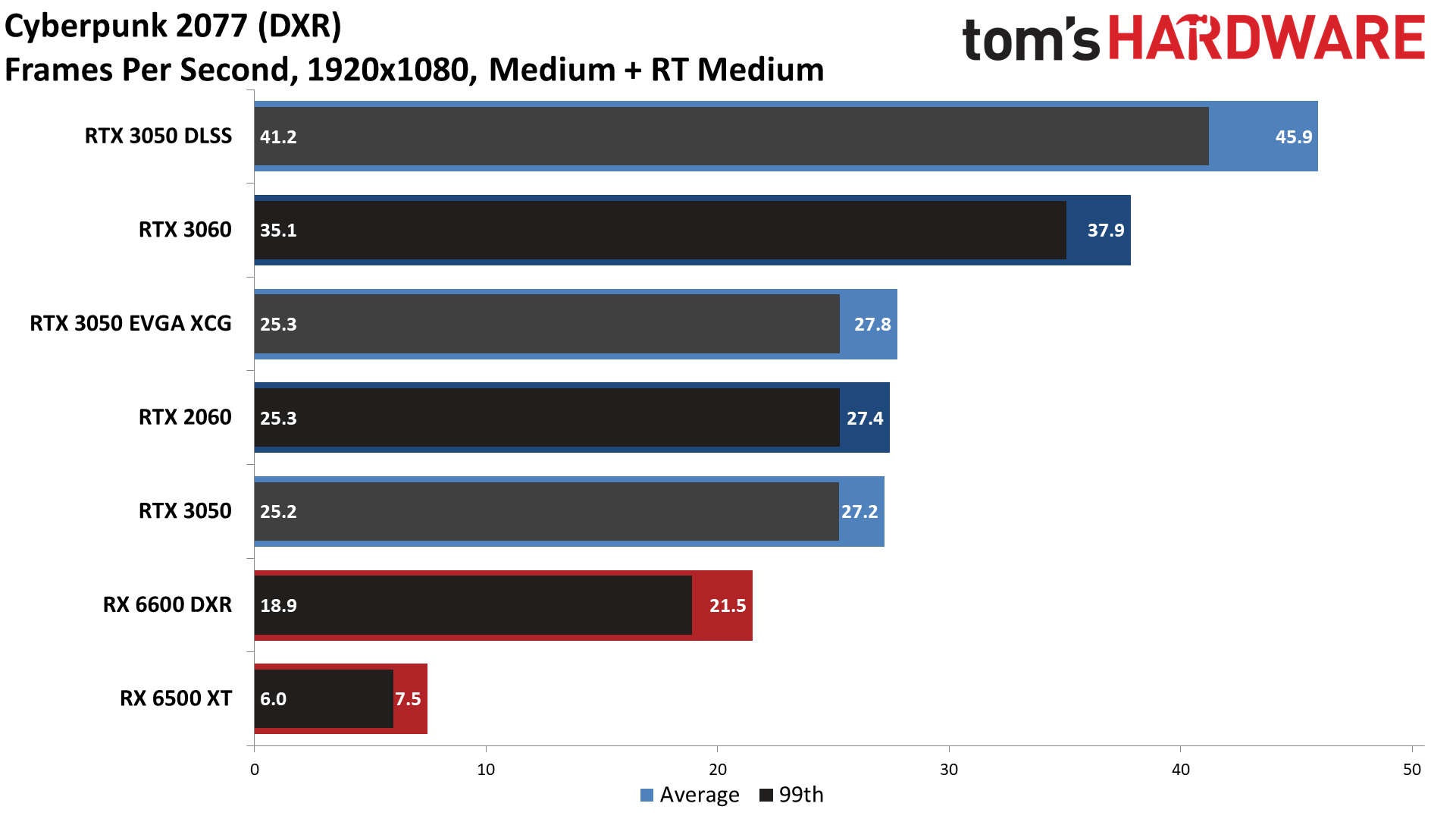

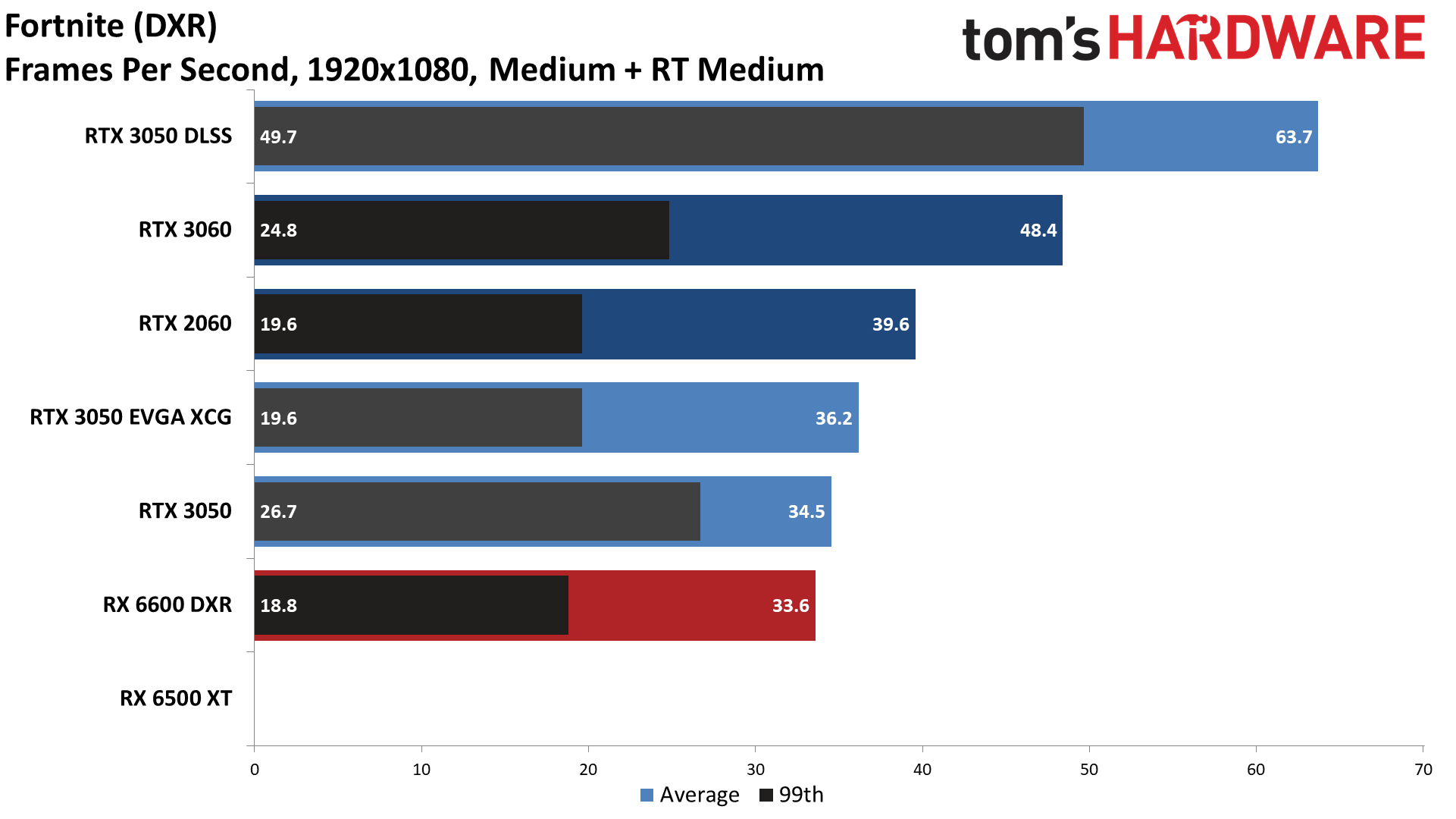

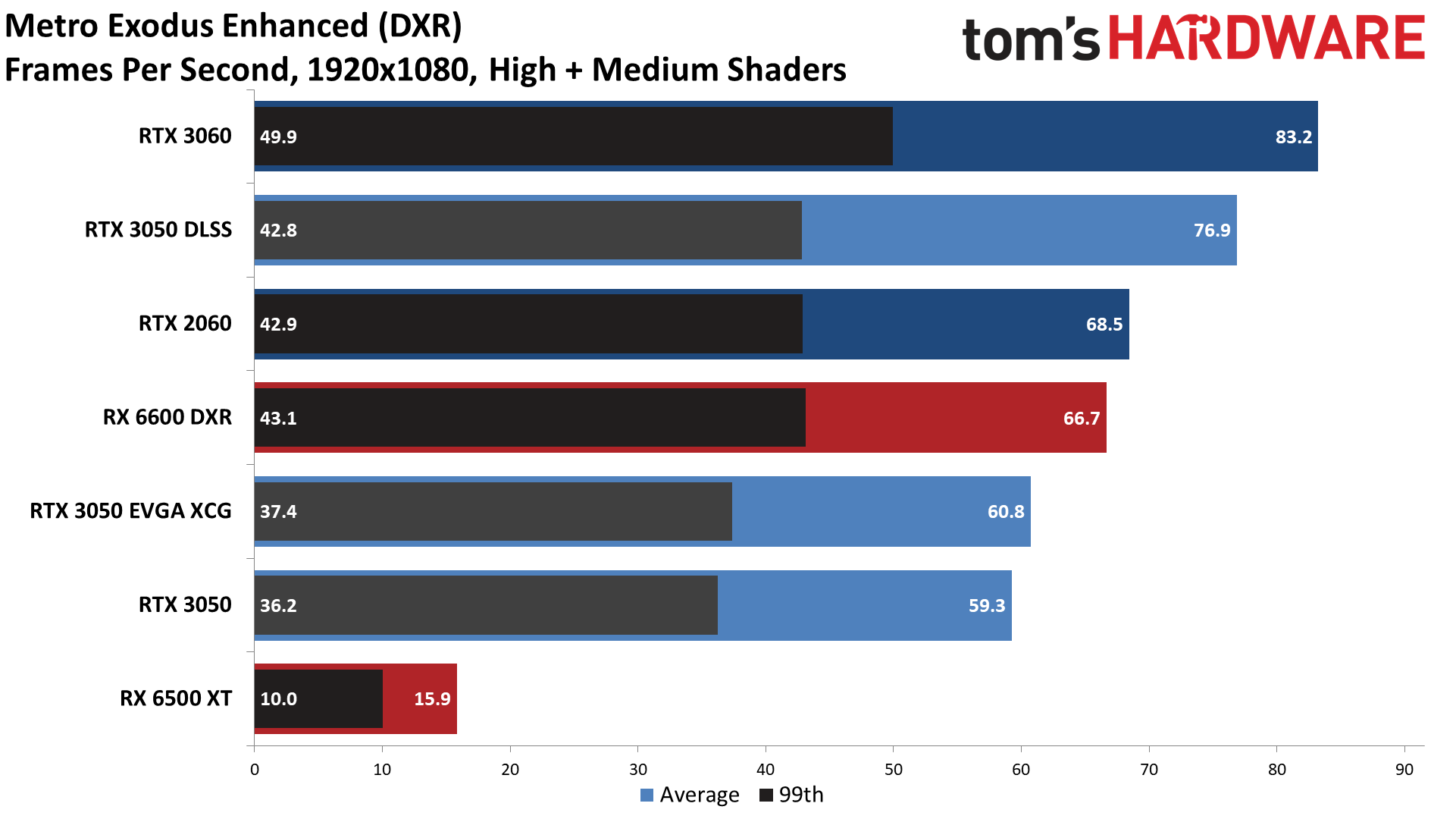

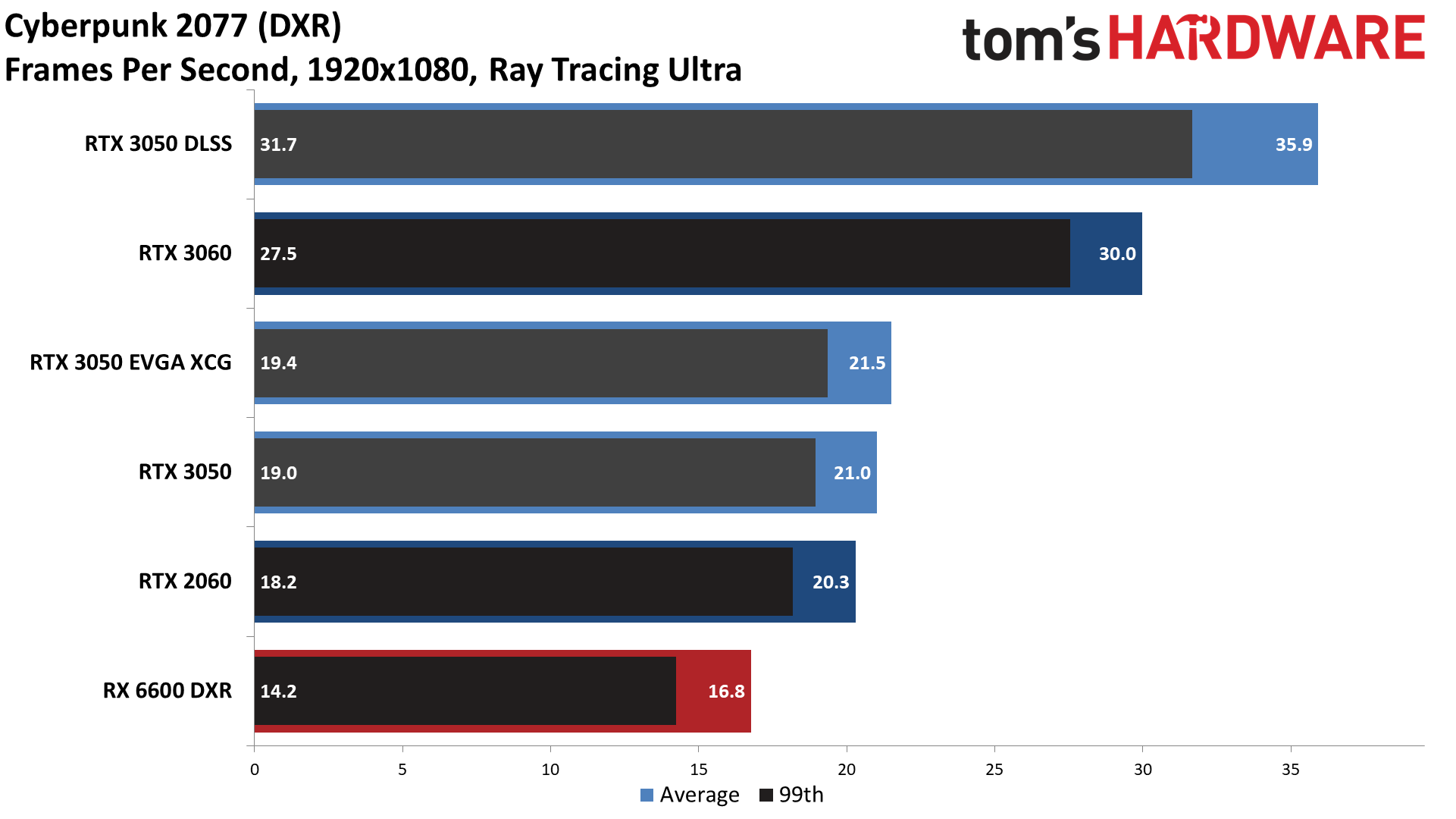

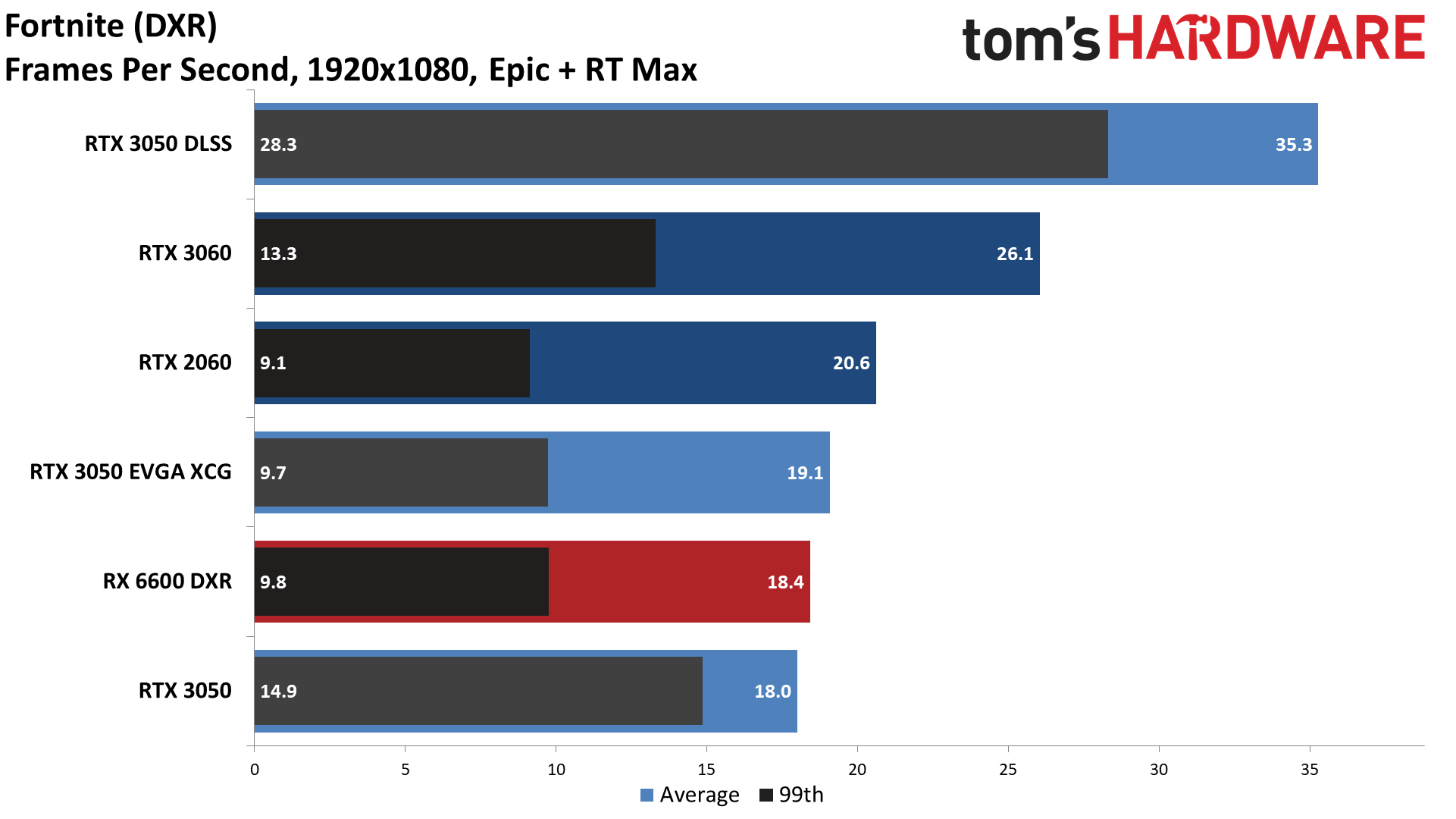

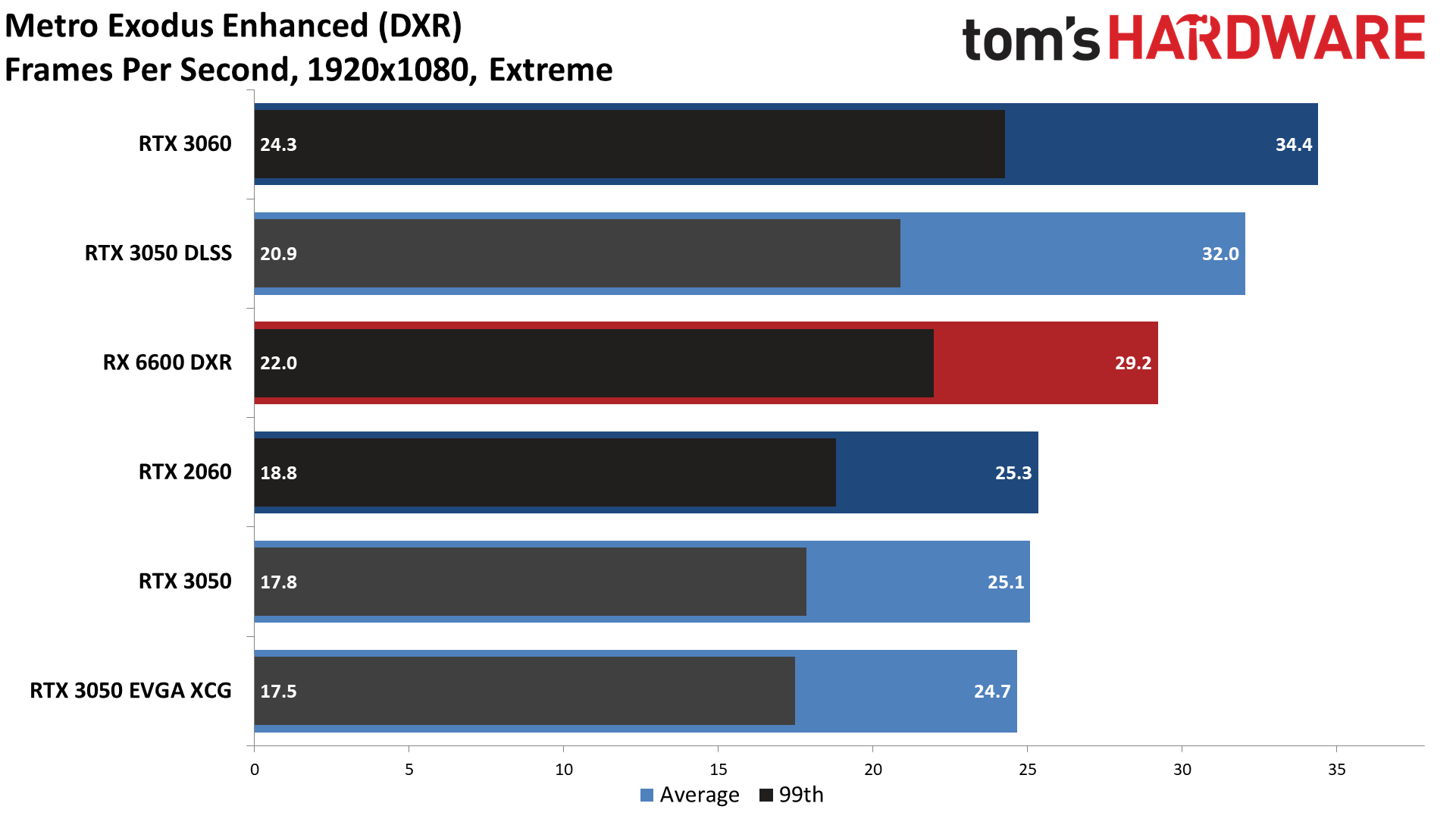

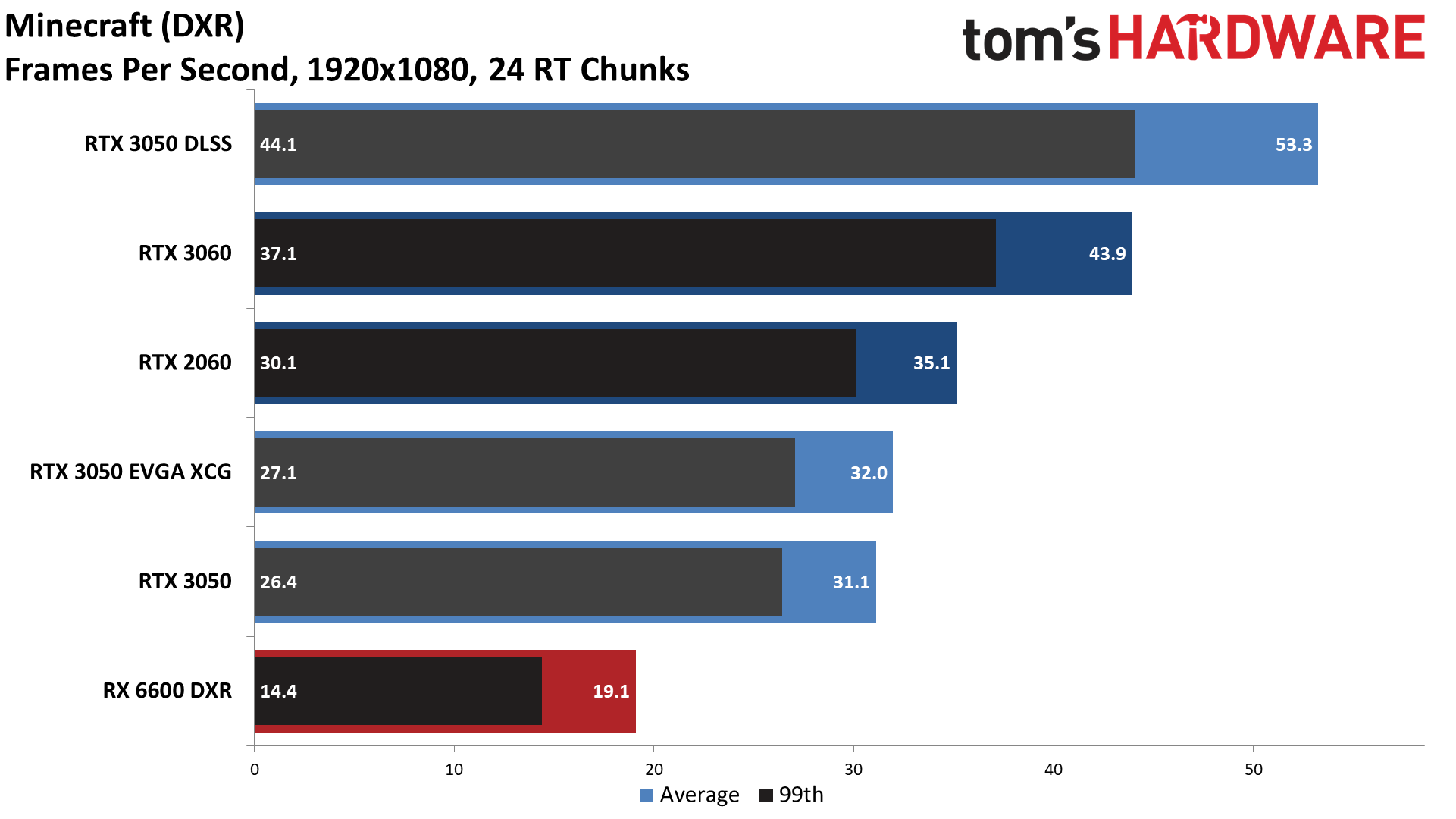

Along with our standard suite of games, we have a separate suite of games that support ray tracing. We'll be including performance testing with the same 1080p "medium" and 1080p/1440p/4K "ultra" settings, but with ray tracing enabled — again, where it makes sense for the particular GPU. Our DXR (DirectX Raytracing) test suite uses the following games: Bright Memory Infinite, Control Ultimate Edition, Cyberpunk 2077, Fortnite, Metro Exodus Enhanced Edition, and Minecraft. We want to provide apples-to-apples comparisons, so we're testing without DLSS (or FSR or NIS) enabled as our baseline, though on RTX cards like the 3050, we'll also show performance using DLSS quality mode.

GeForce RTX 3050 Gaming Performance

Please note: Our XC Black came with a boost clock of 1845MHz, not the reference boost clock of 1777MHz. We've now included both the original (overclocked) results as "EVGA XCG" along with reference clocked results.

The GeForce RTX 3050 focuses primarily on 1080p gaming, though unlike the RX 6500 XT, it can generally stretch to ultra settings and even manage 1440p at playable (>30 fps) framerates. Naturally, any less demanding games or settings should run even better than what we'll show in our charts, but we prefer to focus more on a "worst-case scenario" for gaming performance over using games that just about any GPU can run. We'll start with our 1080p medium testing.

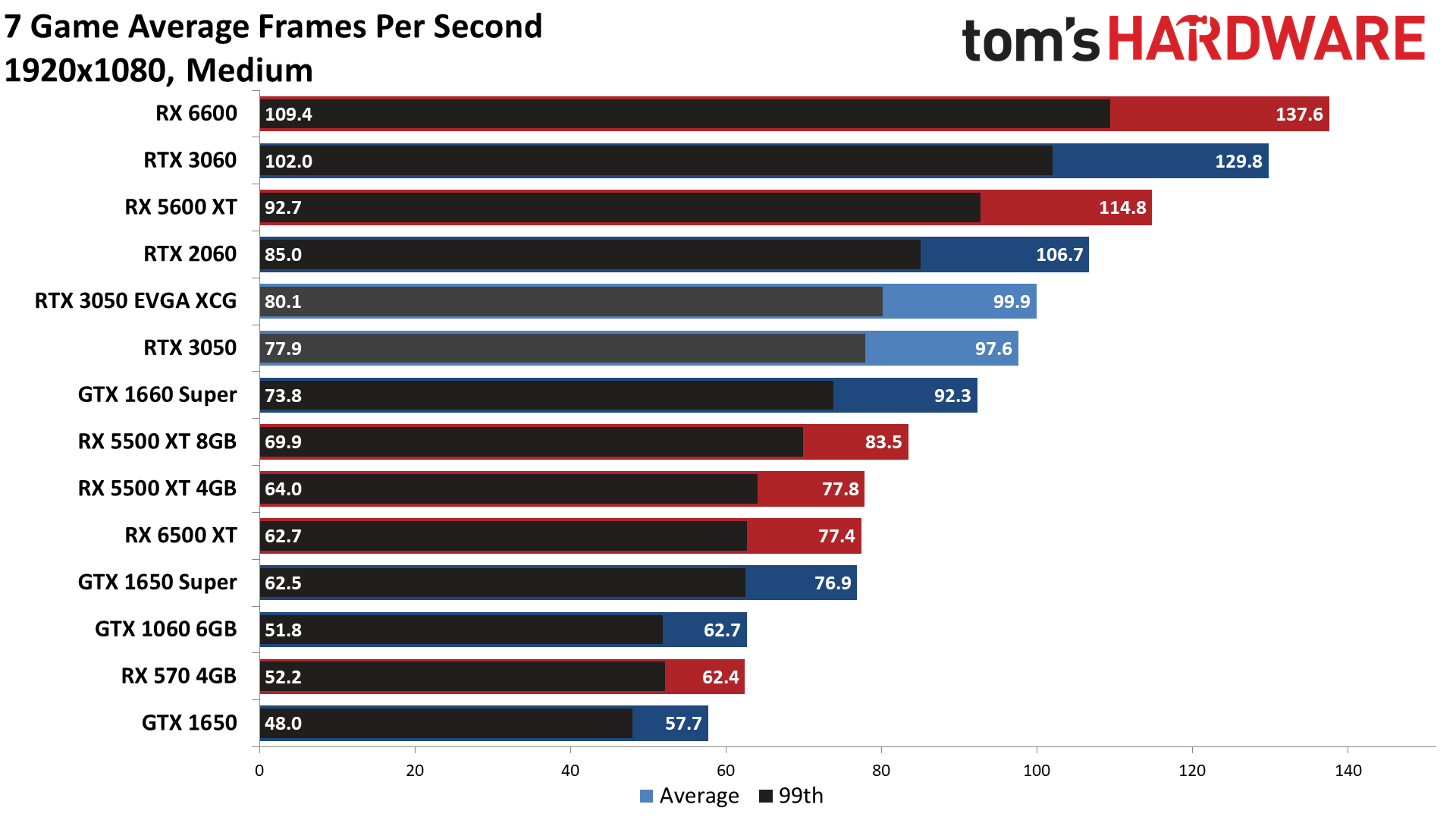

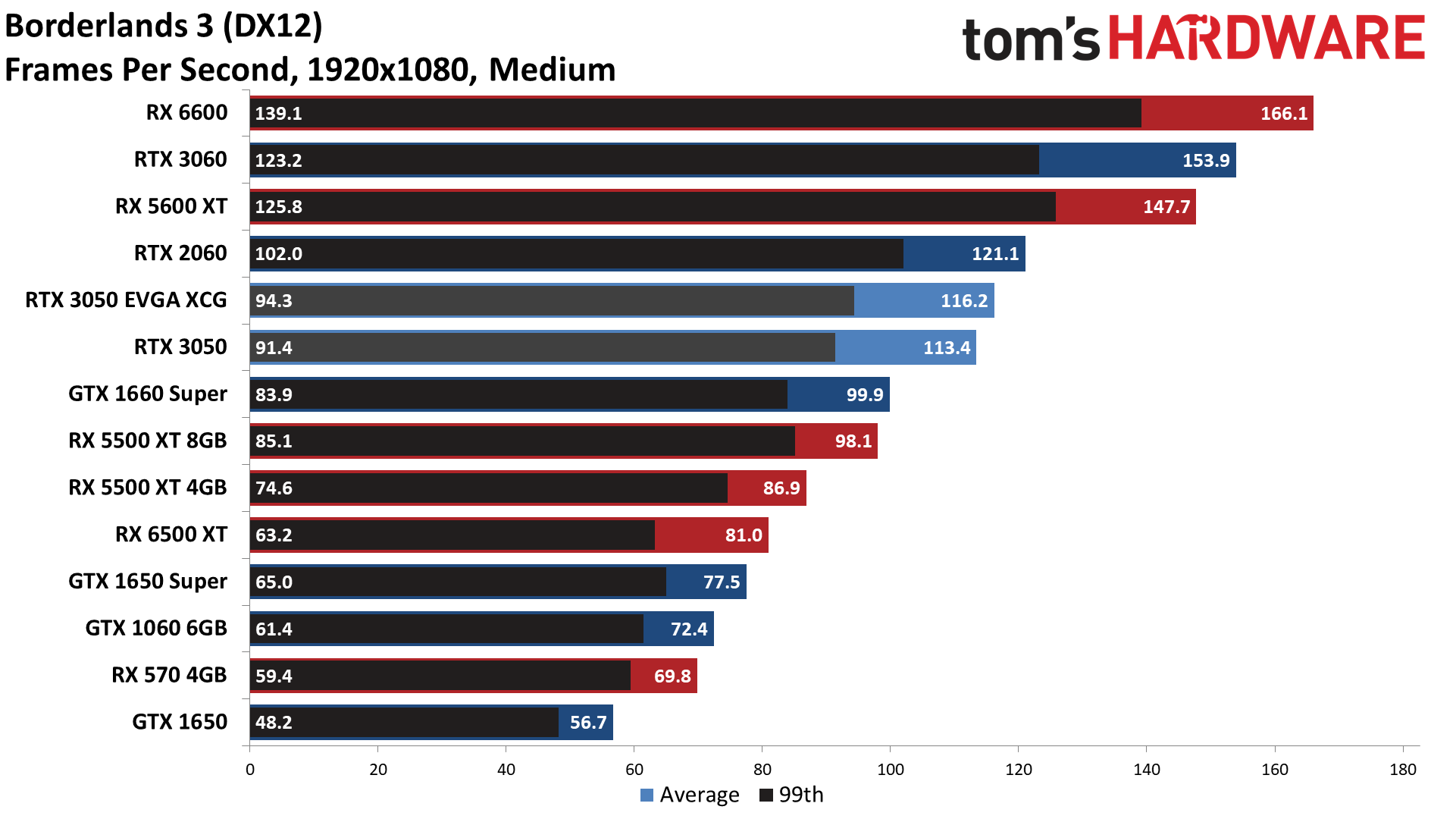

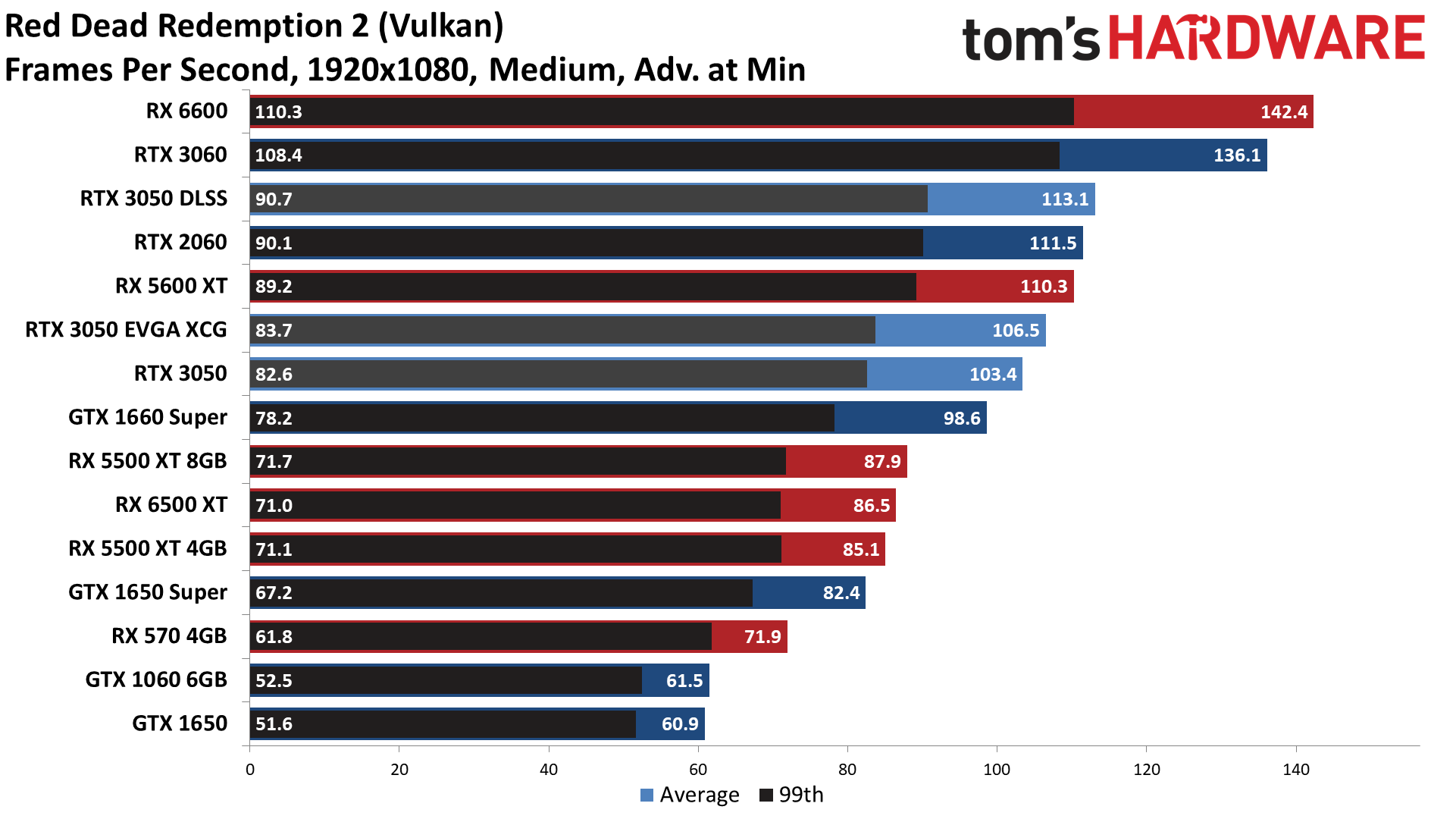

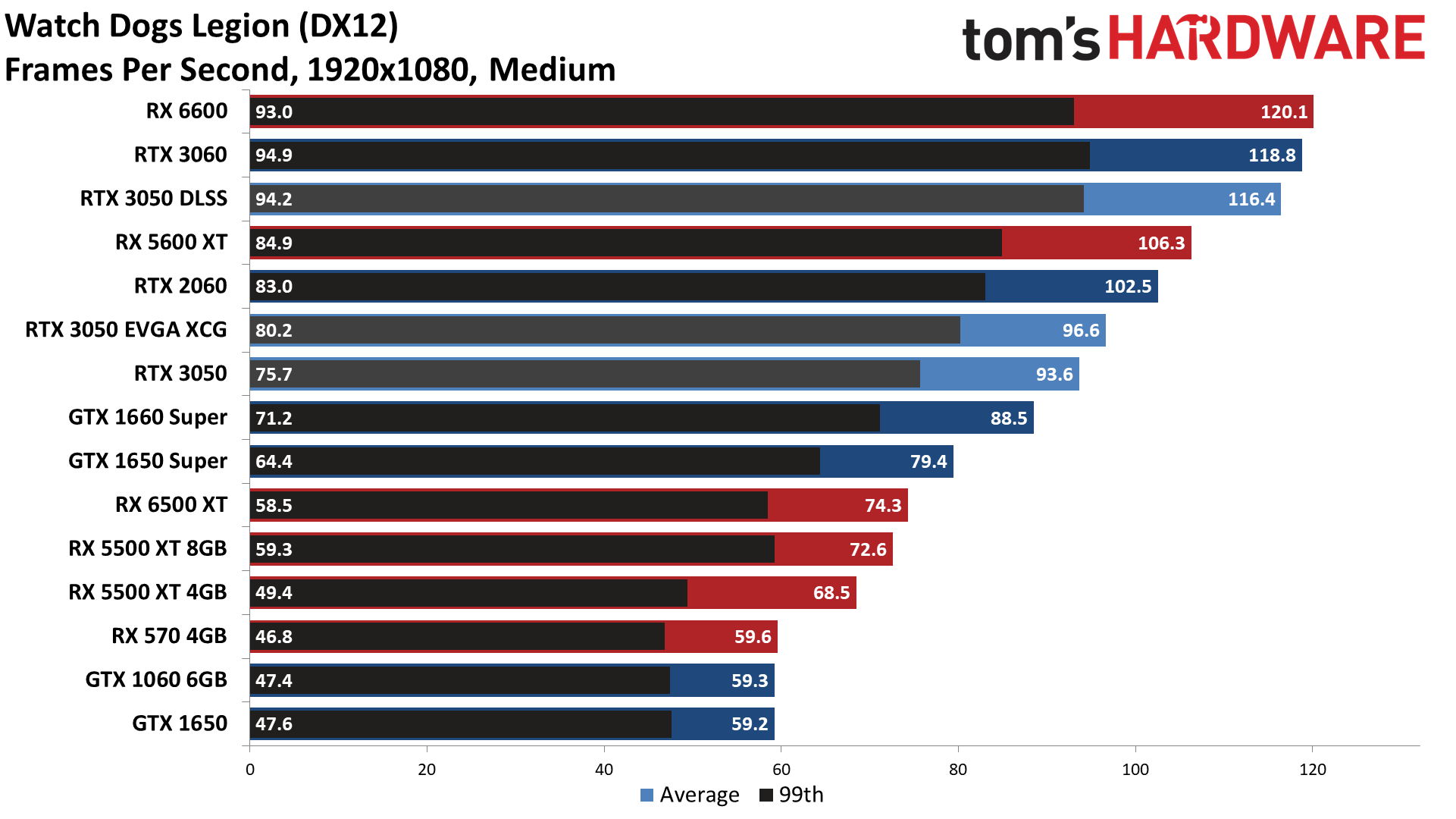

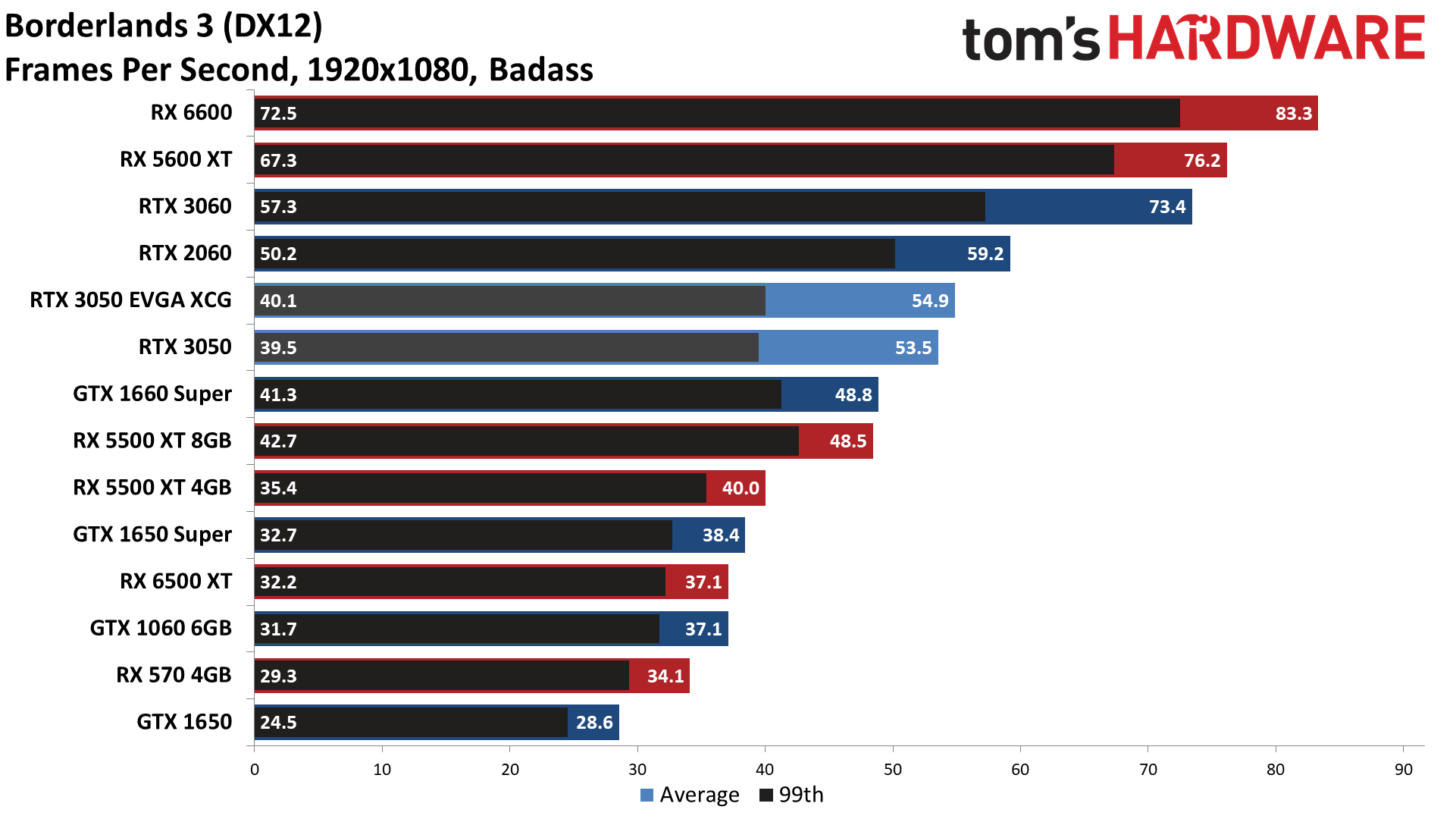

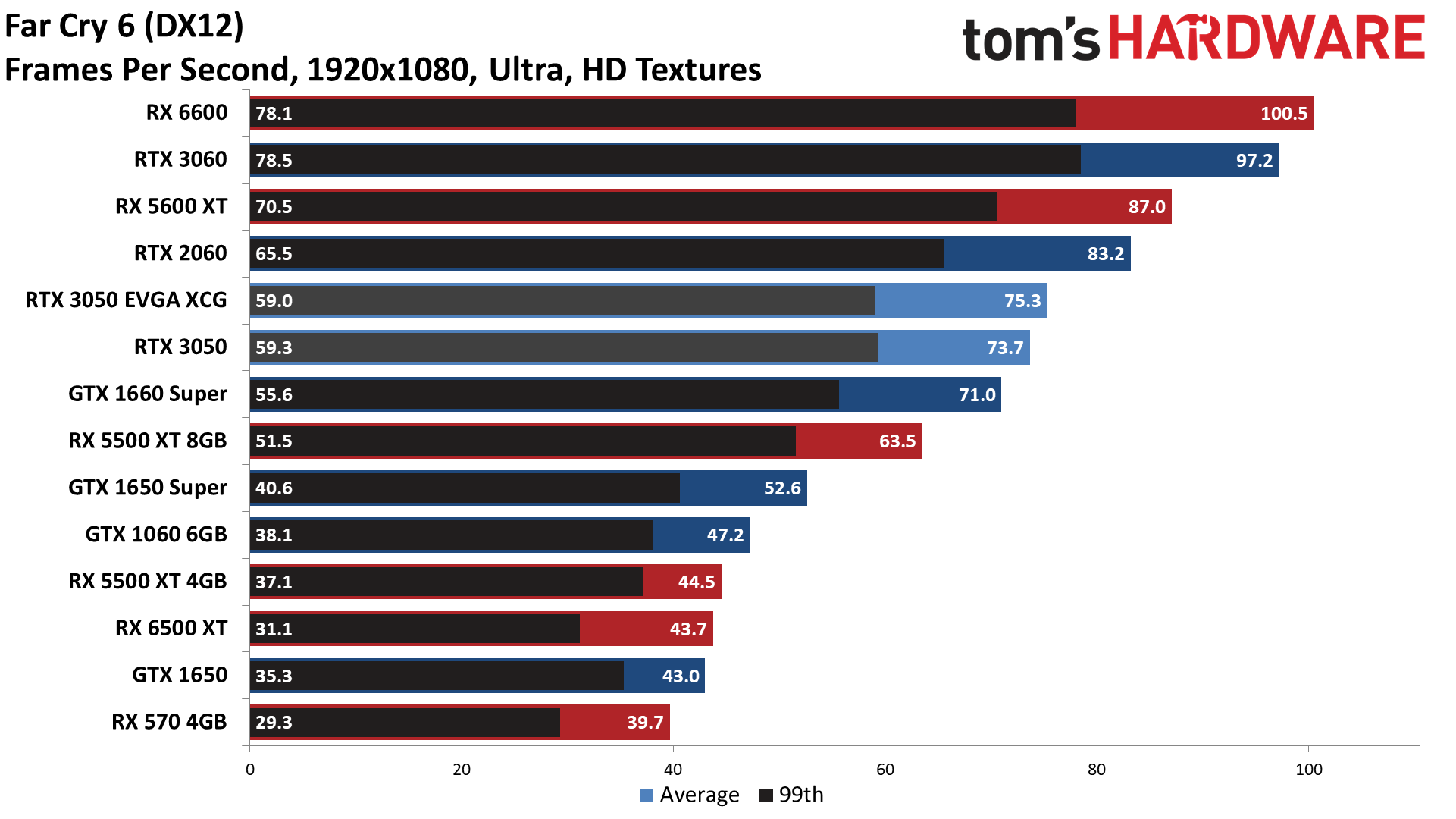

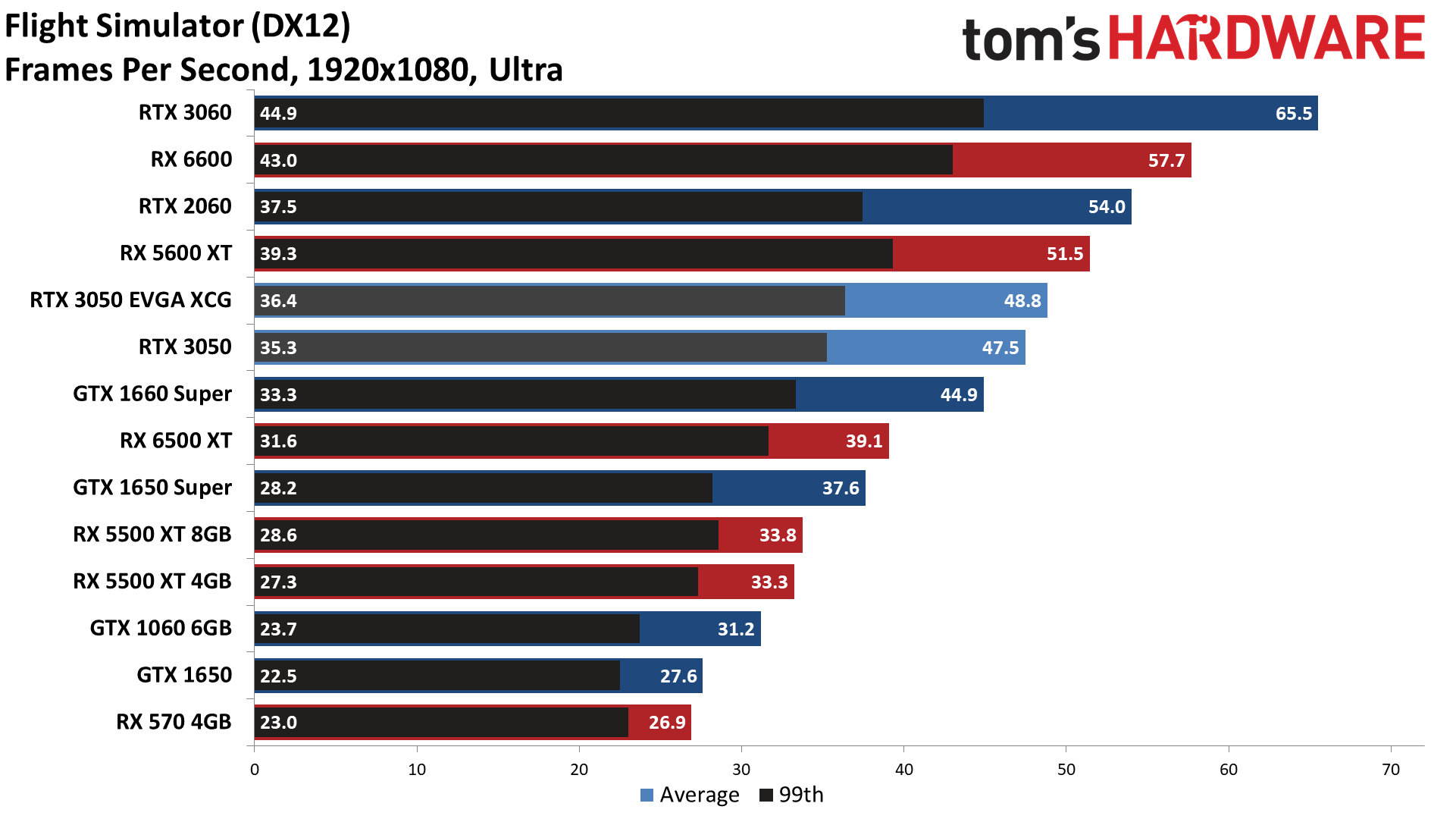

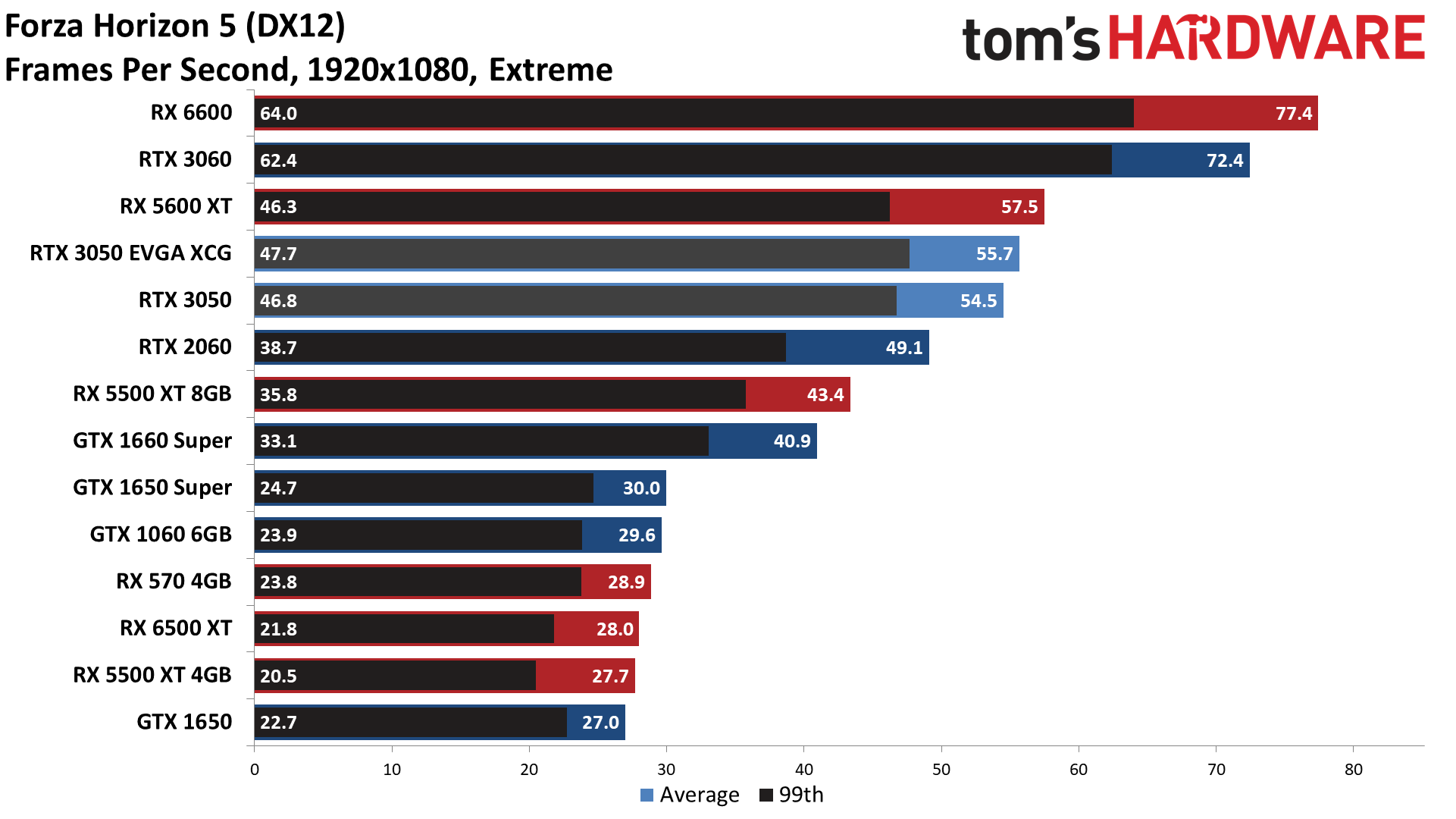

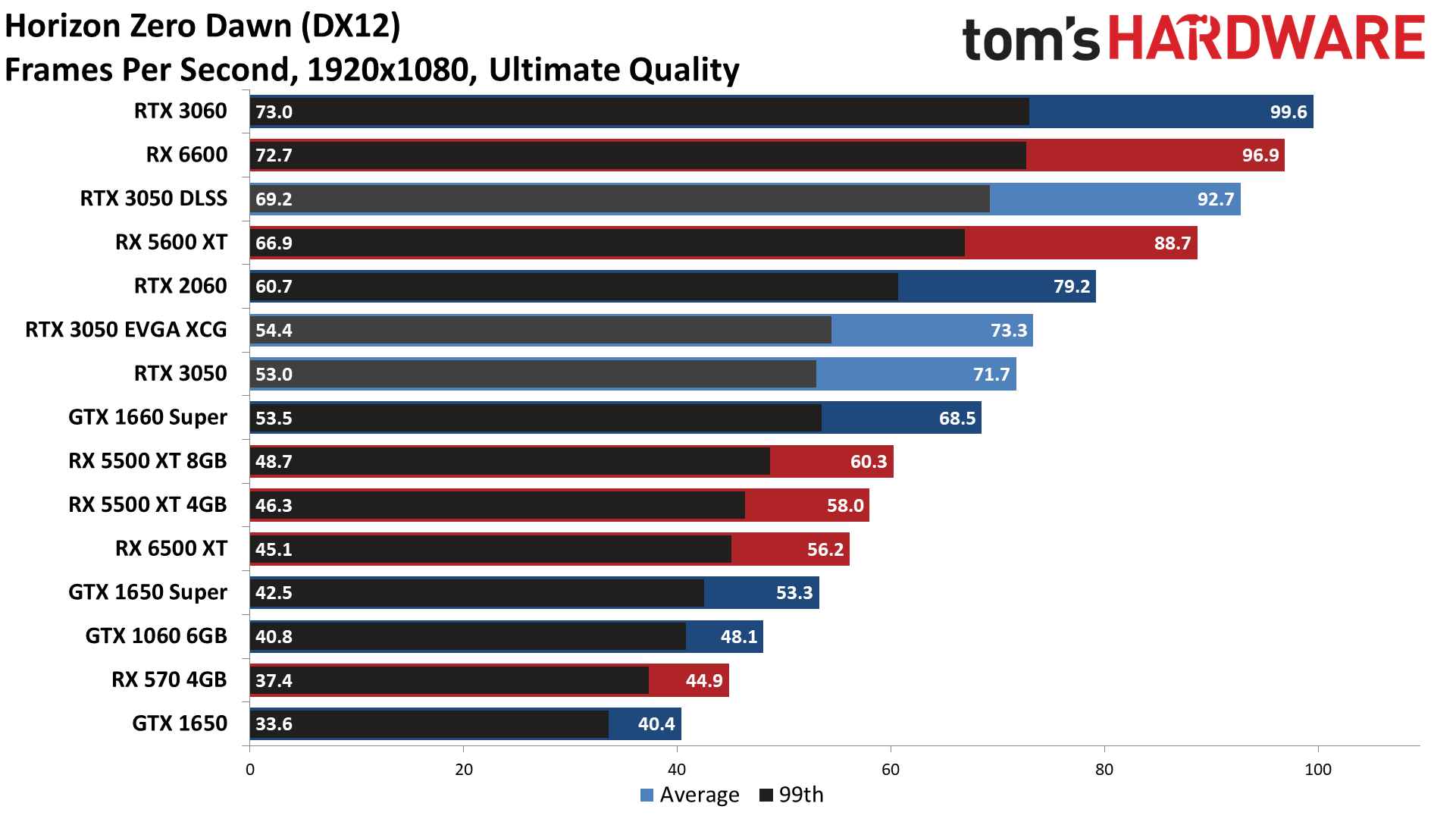

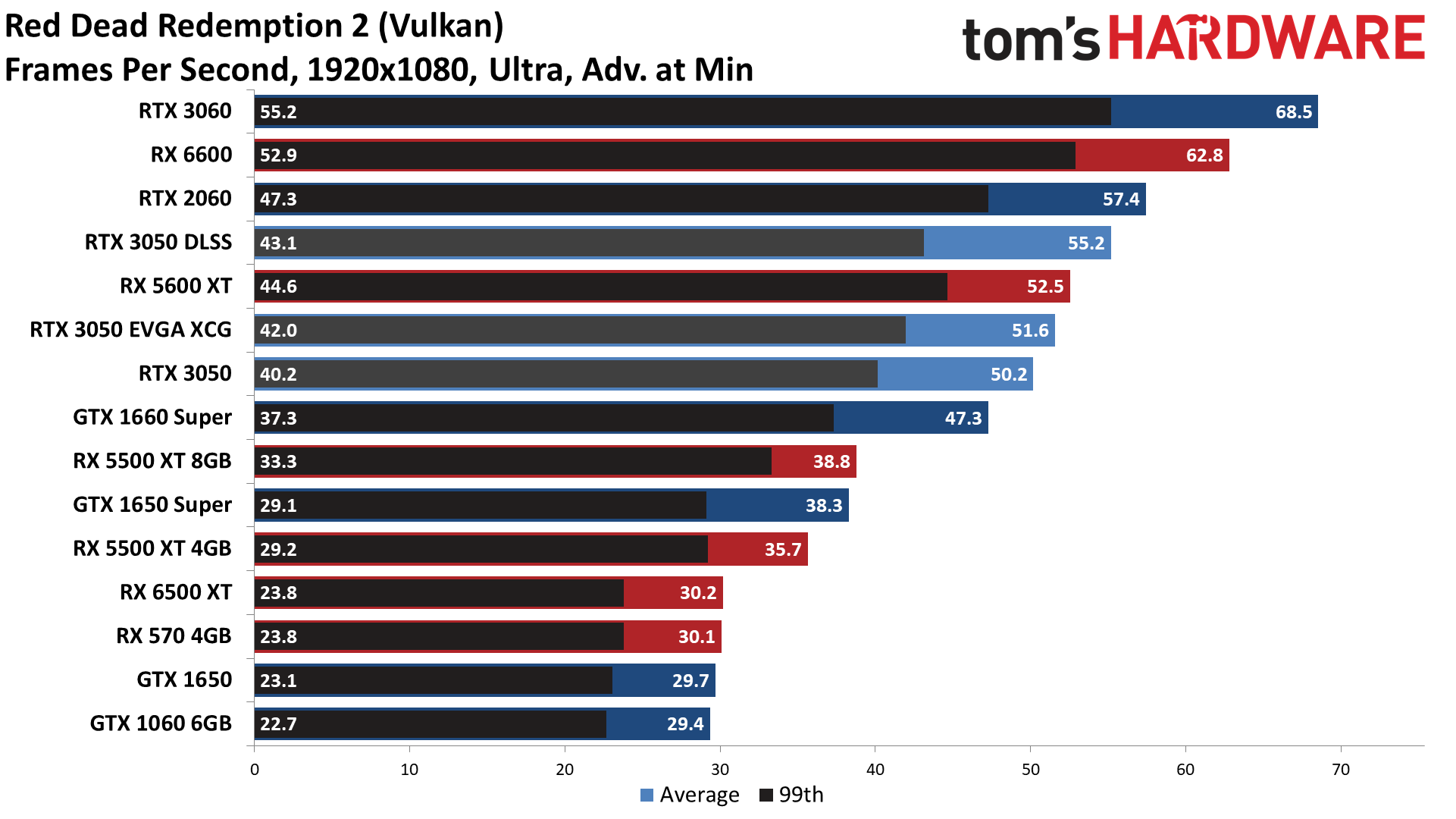

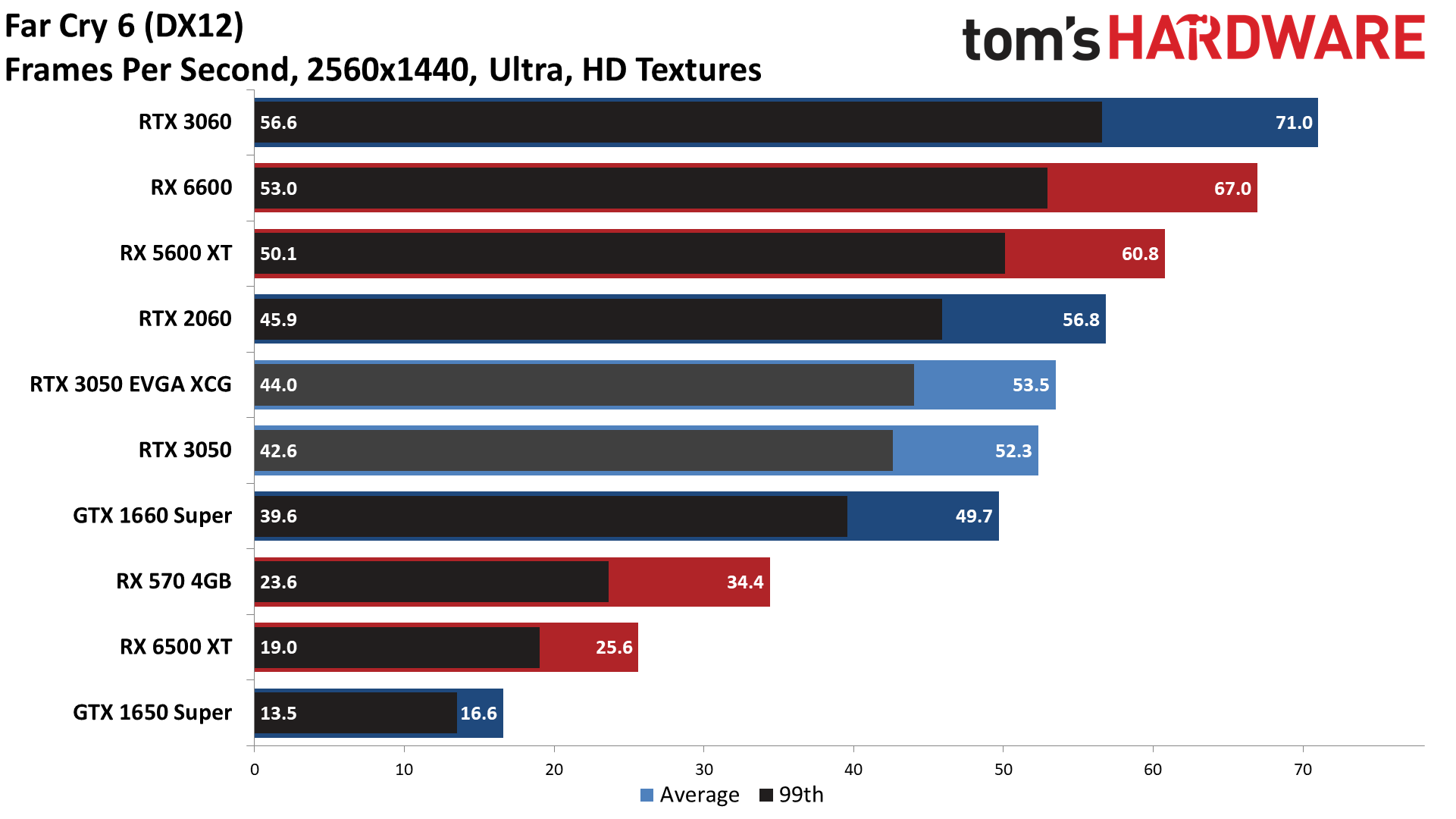

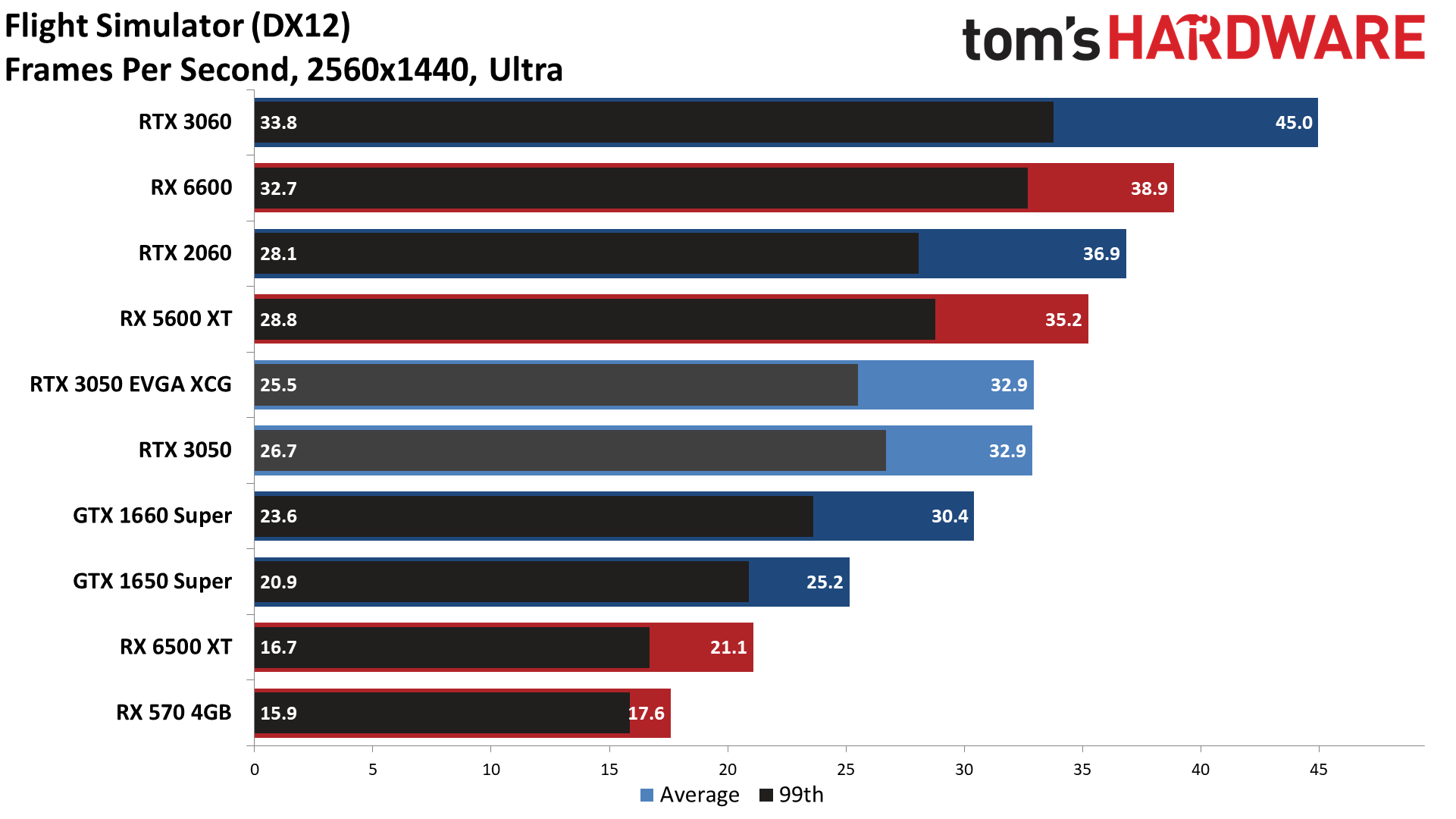

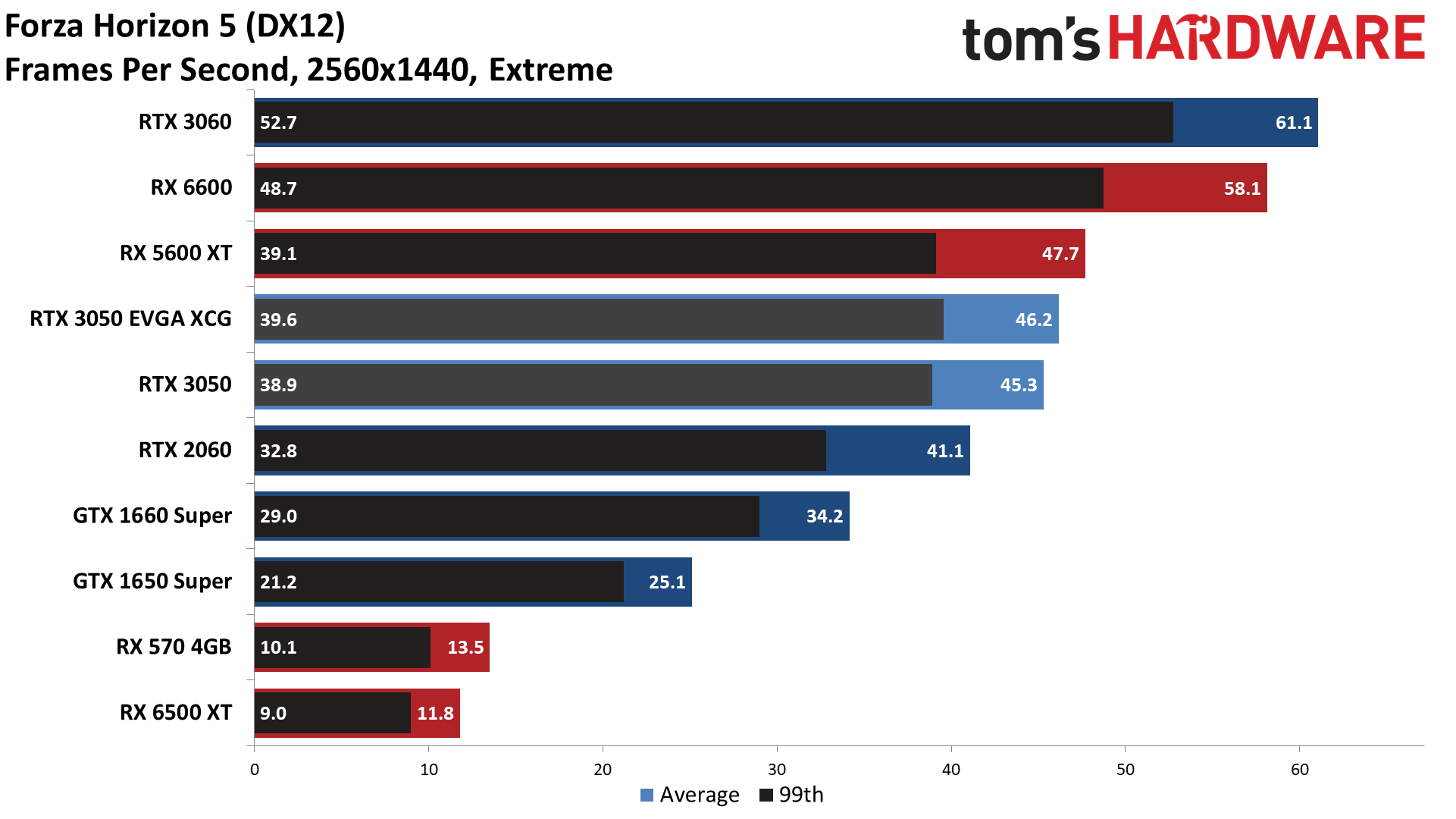

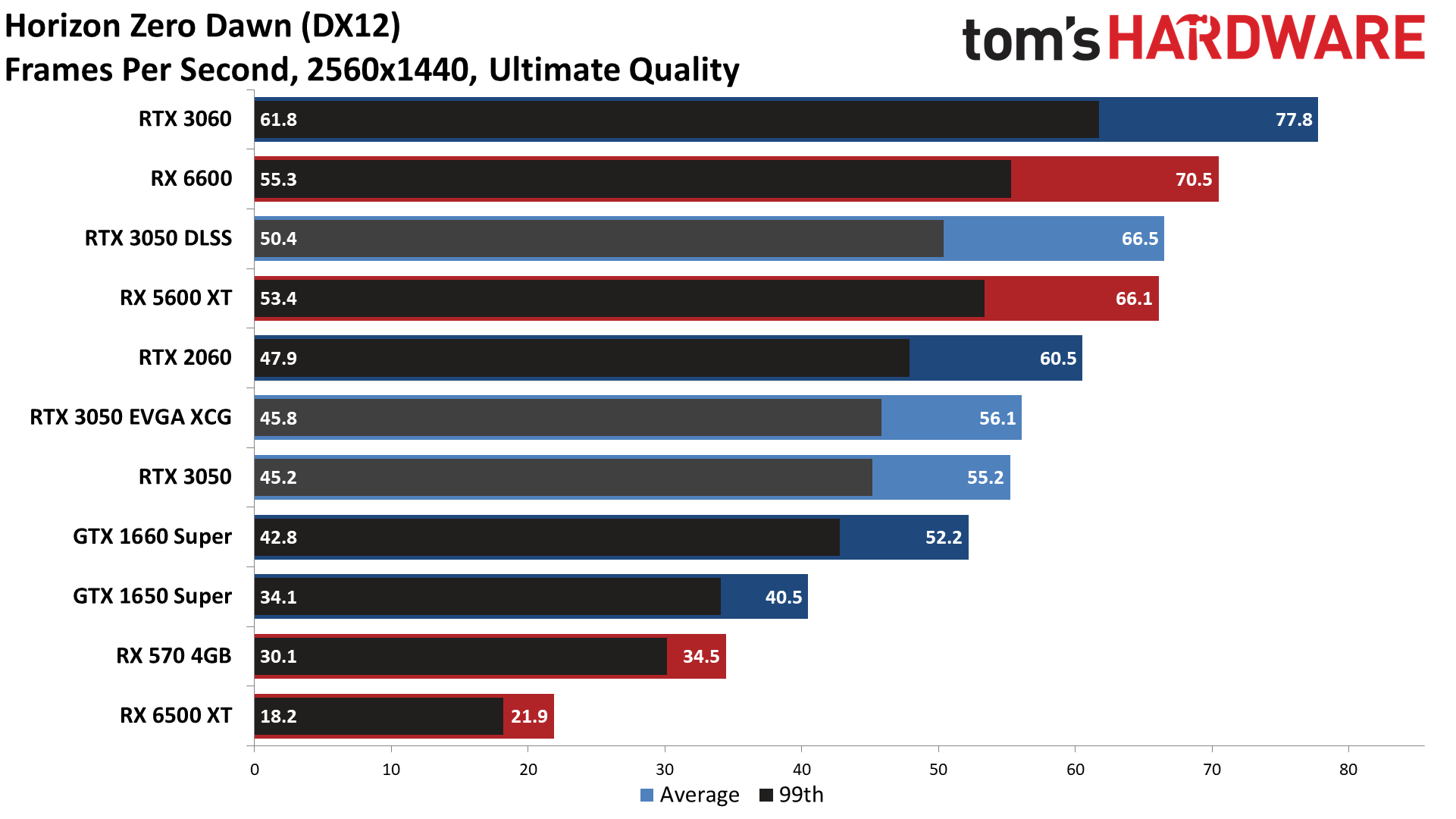

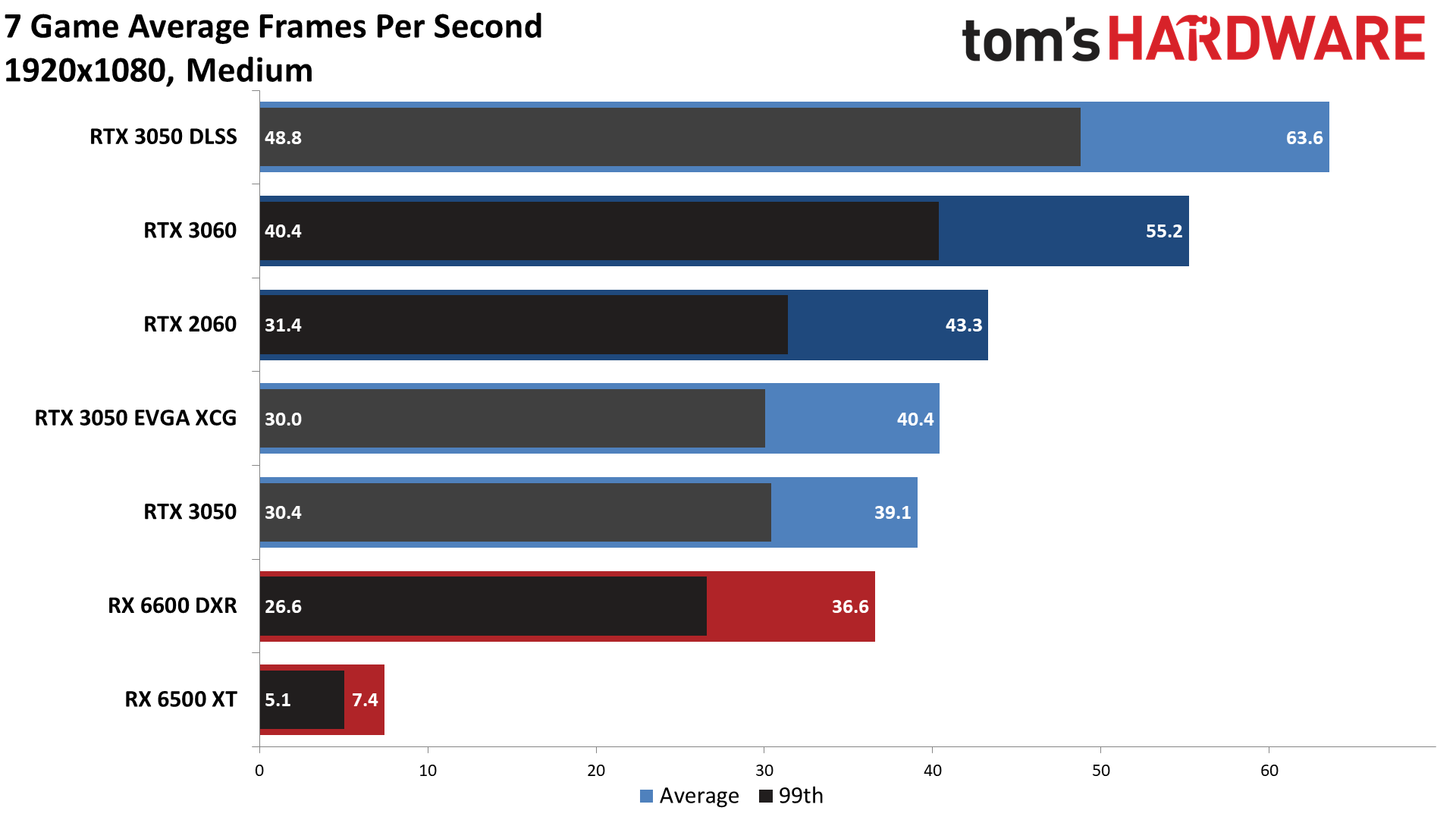

Straight out of the gate, it's obvious the RTX 3050 performs significantly better than the RX 6500 XT, even at modest settings. It's a decent step up in performance relative to the GTX 1660 Super as well, which also easily outperformed AMD's newcomer. (Note that the GTX 1660 Ti and GTX 1660 Super perform nearly identically in all of our previous testing.) The only serious competition will come in the form of higher tier GPUs like the RTX 3060 and RX 6600, though the RTX 2060 and RX 5600 XT also delivered slightly better performance, thanks in no small part to having 192-bit memory interfaces.

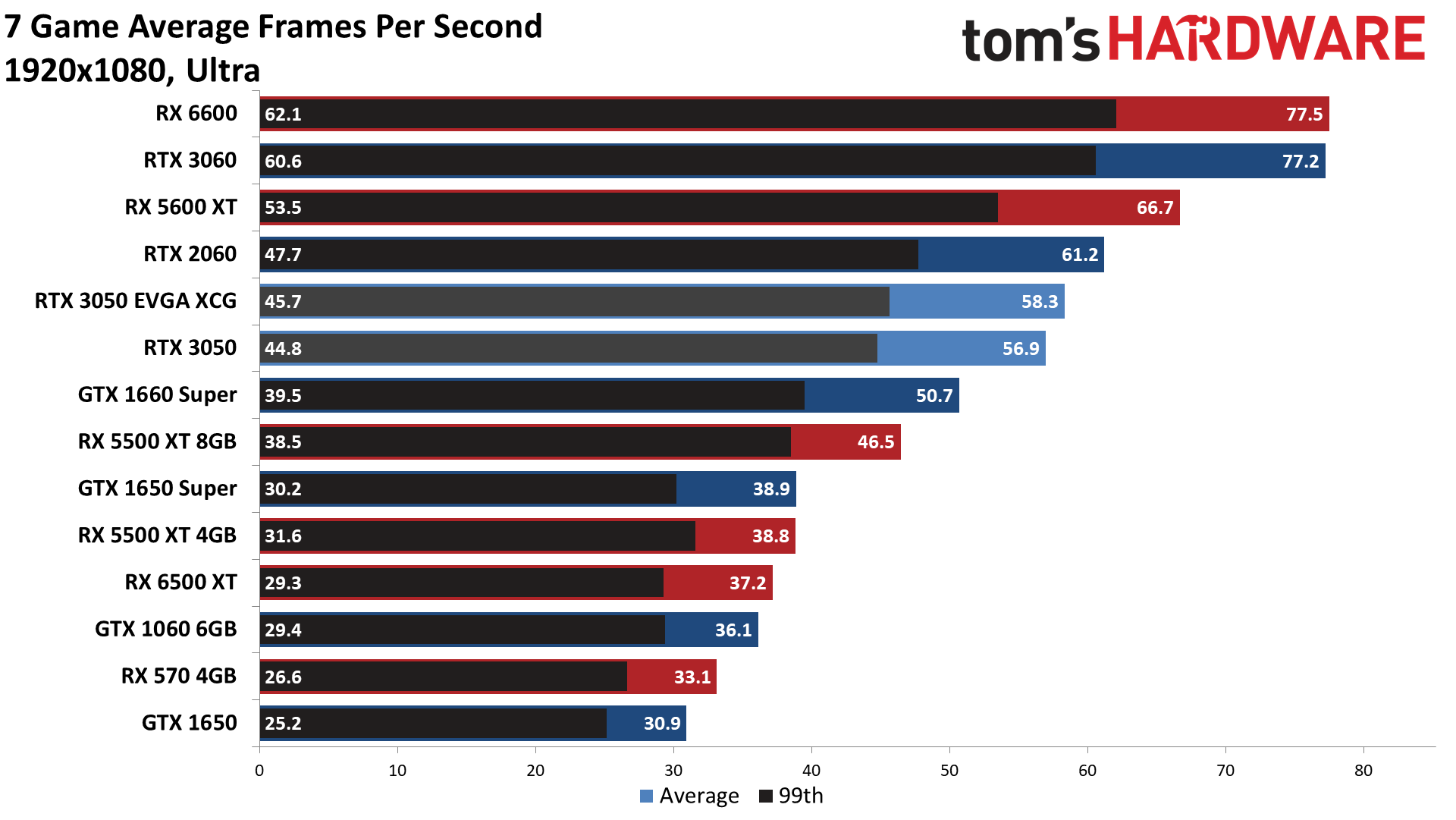

Overall, the RTX 3050 averaged 98 fps across our new test suite, at settings that won't strain the 8GB of VRAM. It was 26% faster than AMD's RX 6500 XT, 69% faster than the previous generation GTX 1650, and 56% faster than the two-generations-old GTX 1060 6GB, but it only beat the GTX 1660 Super by 6%. At the same time, the RTX 2060 was 9% faster than the RTX 3050, the RTX 3060 beat it by 33%, the RX 5600 XT averaged out to 18% faster as well, and the RX 6600 was 41% faster. (And as a quick side note, the "overclocked" original VBIOS was indeed 2.4% faster overall.)
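Because the charts are images, it can help to turn the percentages above back into approximate frame rates. The following sketch only rearranges the figures quoted in this paragraph; the outputs are estimates derived from rounded percentages, not measured results, and the dictionary and function names are hypothetical.

```python
RTX_3050_FPS = 98.0  # 1080p medium suite average quoted above

# Cards the RTX 3050 beat: percent by which the 3050 was faster than each card.
SLOWER_CARDS = {"RX 6500 XT": 26, "GTX 1650": 69, "GTX 1060 6GB": 56, "GTX 1660 Super": 6}
# Cards that beat the RTX 3050: percent by which each card was faster than the 3050.
FASTER_CARDS = {"RTX 2060": 9, "RX 5600 XT": 18, "RTX 3060": 33, "RX 6600": 41}


def implied_fps() -> dict[str, float]:
    """Estimate each card's suite average from its stated gap to the RTX 3050."""
    estimates = {"RTX 3050": RTX_3050_FPS}
    for card, pct in SLOWER_CARDS.items():
        # "3050 is pct% faster" means fps_3050 = fps_card * (1 + pct / 100).
        estimates[card] = RTX_3050_FPS / (1 + pct / 100)
    for card, pct in FASTER_CARDS.items():
        # "card is pct% faster" means fps_card = fps_3050 * (1 + pct / 100).
        estimates[card] = RTX_3050_FPS * (1 + pct / 100)
    return estimates


for card, fps in sorted(implied_fps().items(), key=lambda item: item[1], reverse=True):
    print(f"{card:15s} ~{fps:5.1f} fps")
```

Note the asymmetry: a card that the 3050 beats by 26% is found by dividing by 1.26, while a card that is 9% faster than the 3050 is found by multiplying by 1.09.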

In other words, the RTX 3050 doesn't radically change the status quo. If the supply of GPUs were relatively "normal," the 3050 would slot in just below the three-year-old RTX 2060 6GB. On the other hand, if the supply chain problems and other factors weren't in play, this might have been a $199 or $229 card instead of starting at a hypothetical $249.

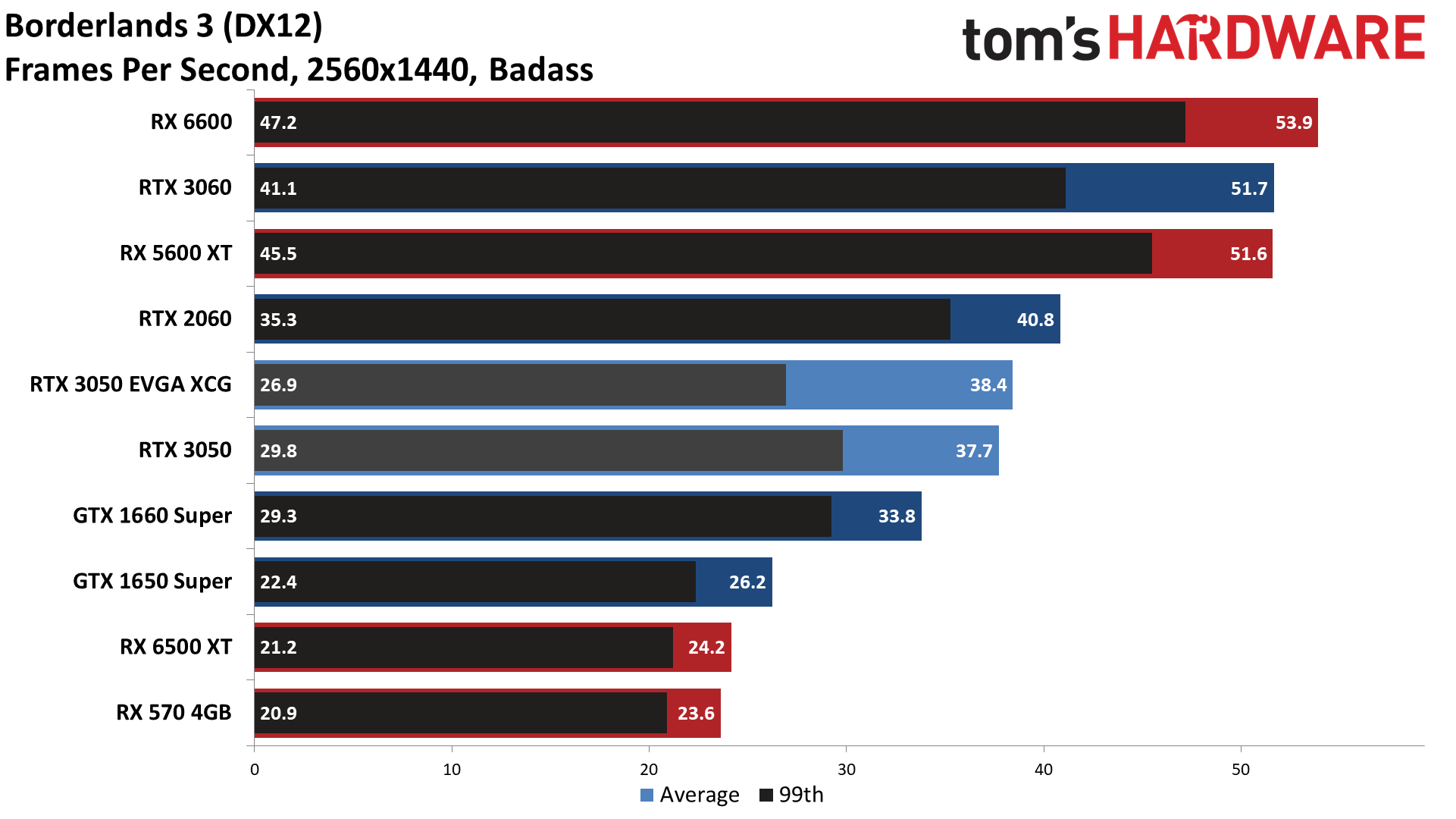

Naturally, there's a wider spread in performance when we look at the individual games. Compared to the RX 6500 XT, the closest AMD got was in Flight Simulator, where the 3050 was only 16% faster. Far Cry 6, Forza Horizon 5, and Red Dead Redemption 2 were all 22–23% faster on the 3050, while the biggest lead was in Borderlands 3, with the 3050 beating the 6500 XT by 44%. That's largely because Borderlands 3 likes having more memory and memory bandwidth, even when using the 1080p medium preset.

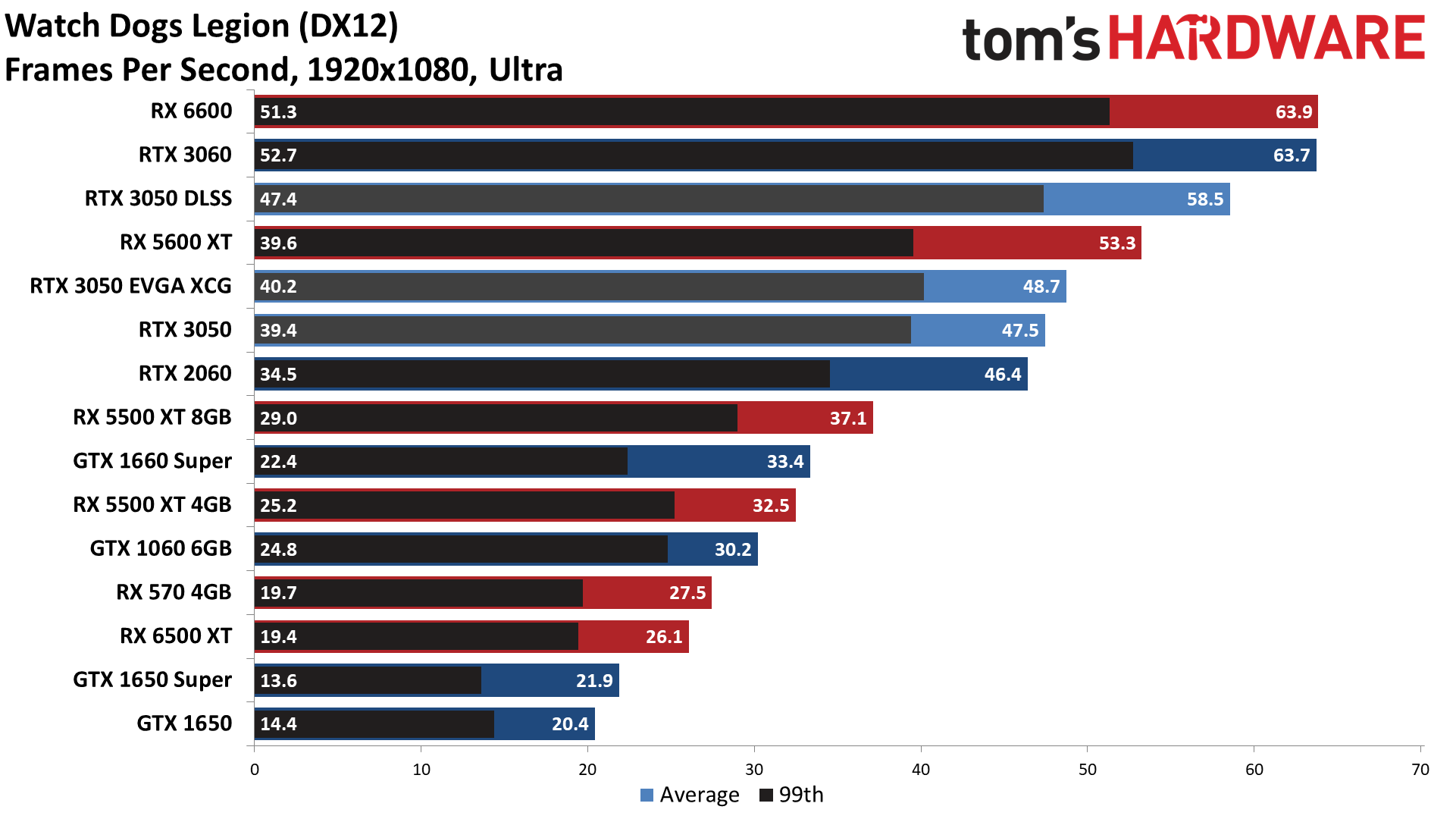

You can also see how much DLSS quality mode improves performance, even at these relatively low settings. HZD was 20% faster, RDR2 improved by 9%, and WDL ran 24% faster. That last result puts the RTX 3050 just behind the RTX 3060 and RX 6600.

Now let's turn up the heat a bit and see what happens.

The RX 6500 XT and other cards with only 4GB VRAM can really struggle at ultra settings in many modern games, but the RTX 3050 continues to perform quite well. Average performance across our test suite was still 58 fps, though only Far Cry 6 and Horizon Zero Dawn managed to exceed 60 fps. Perhaps more importantly, the RTX 3050 beat AMD's RX 6500 XT by 53% and increased its lead over other GPUs with 4GB VRAM. Sticking with the current generation "budget" GPU focus, the RTX 3050 was anywhere from 28% faster than the 6500 XT (in HZD) to as much as 95% faster (Forza Horizon 5).

Of course, it's not all sunshine and roses. Yes, the RTX 3050 can run circles around the RX 6500 XT, but it came in slightly behind the old RTX 2060, and if you're willing to forgo ray tracing hardware, the RX 5600 XT was 14% faster. It also trailed the RTX 3060 by 26%, and the RX 6600 was 36% faster. While it's not perfect scaling going from the 3050 to the 3060, the 3060 has 50% more memory, 61% more memory bandwidth, and 40% more compute. That works out to 36% higher performance at 1080p ultra.
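The paragraph above says the RTX 3050 trailed the RTX 3060 by 26% and also that the 3060 had 36% higher performance. Those are the same gap measured from opposite ends, which a short sketch can confirm. The helper names here are illustrative only.

```python
def faster_to_slower(pct_faster: float) -> float:
    """If A is pct_faster% faster than B, return the percent by which B trails A."""
    return (1 - 1 / (1 + pct_faster / 100)) * 100


def slower_to_faster(pct_slower: float) -> float:
    """If B trails A by pct_slower%, return the percent by which A is faster than B."""
    return (1 / (1 - pct_slower / 100) - 1) * 100


# The RTX 3060 is 36% faster, so the RTX 3050 trails it by roughly 26%.
print(f"3050 trails 3060 by {faster_to_slower(36):.1f}%")
# Going the other way from the rounded 26% figure gives roughly 35%.
print(f"3060 leads 3050 by {slower_to_faster(26):.1f}%")
```

The small mismatch on the return trip comes from rounding the published percentages, not from any inconsistency in the results.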

This isn't to say that you should run 1080p ultra settings on many of the GPUs in our charts, but at least with the RTX 3050 and faster cards, you can legitimately do so without killing performance. While running at less than 60 fps isn't ideal, it's still definitely playable, and none of the tested games had minimums that consistently fell below 30 fps. DLSS was also able to boost performance by 29% in HZD, 10% in RDR2, and WDL ran 23% faster.

But there's still a bit of gas in the RTX 3050's tank…


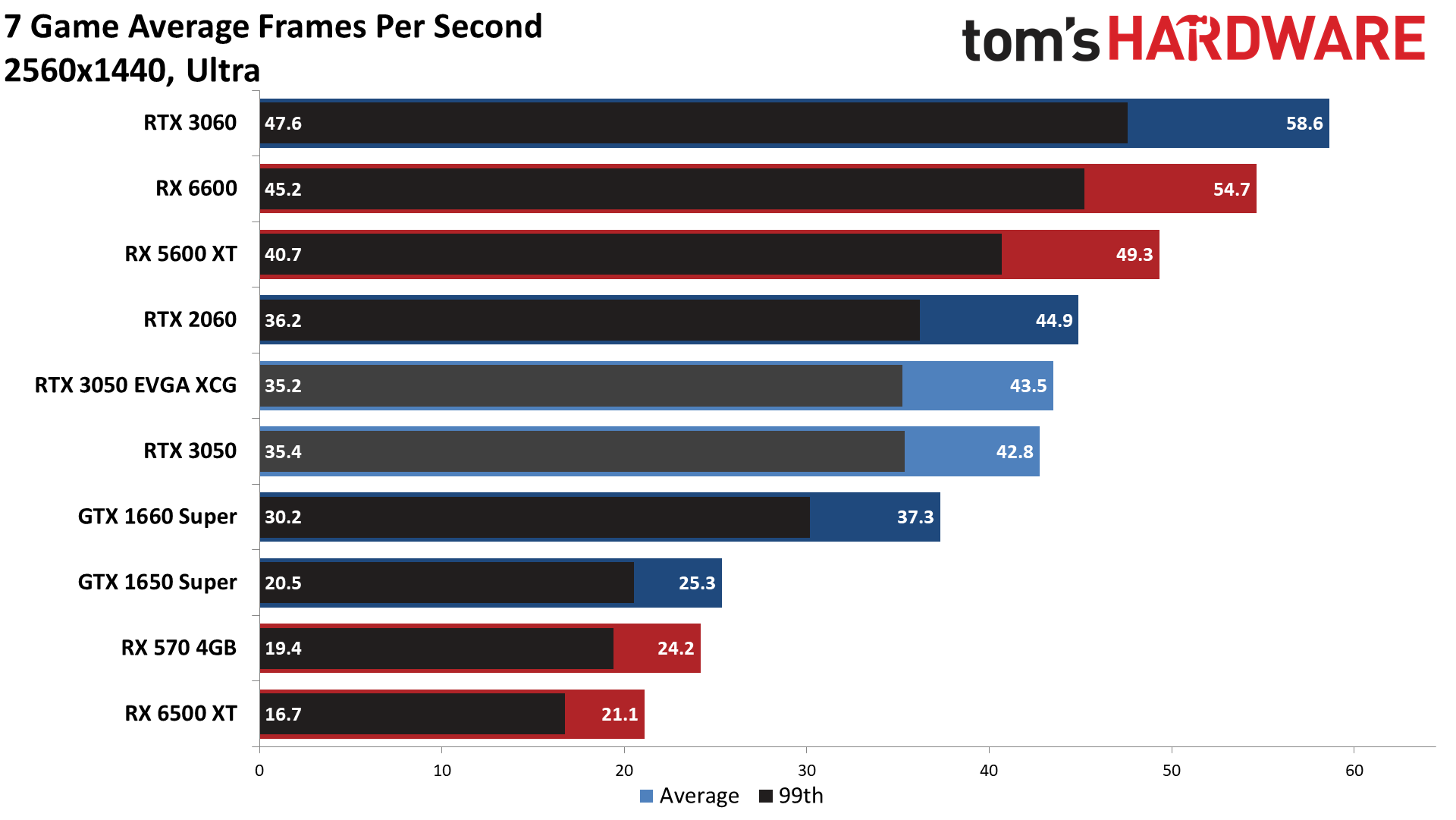

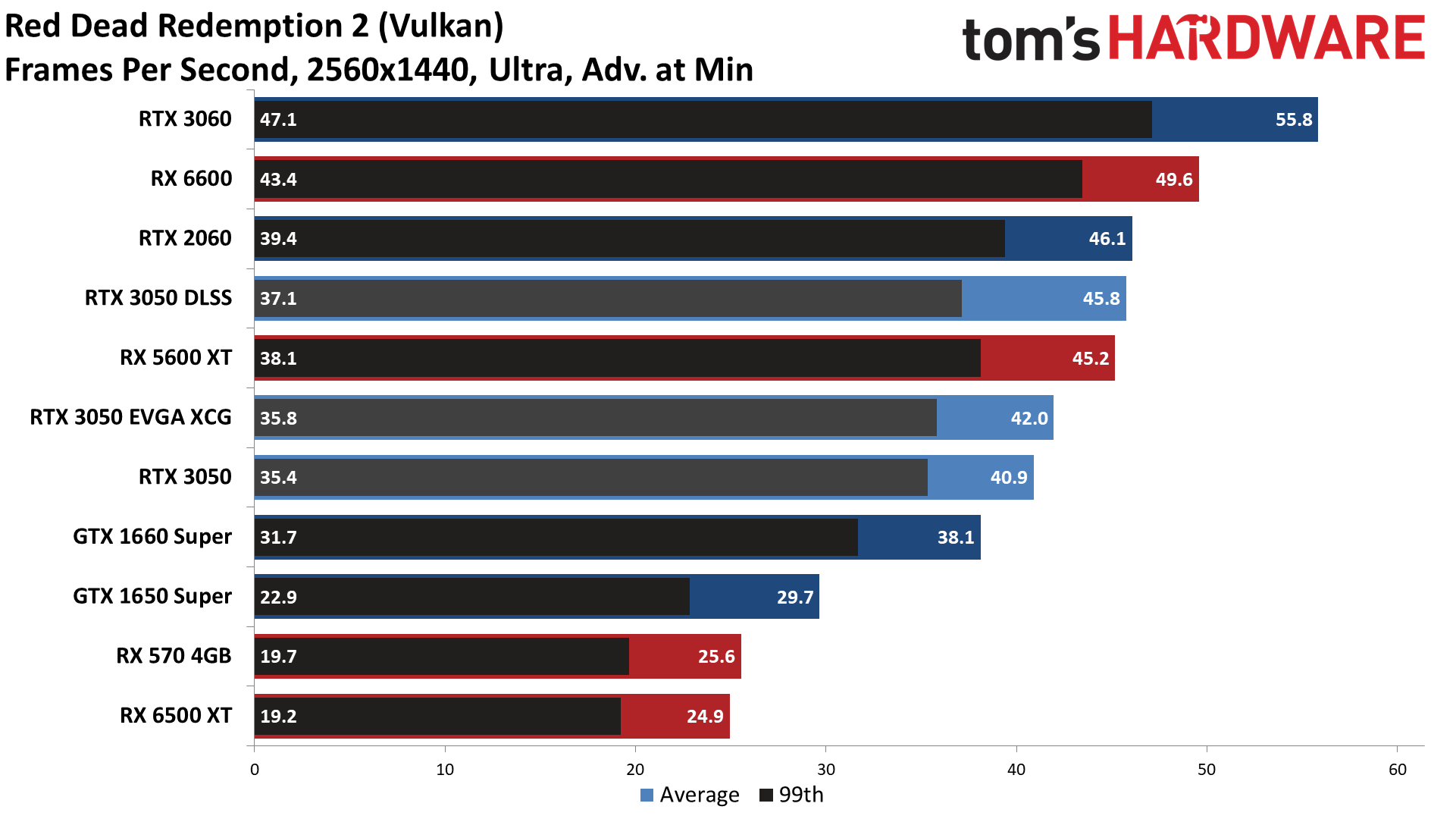

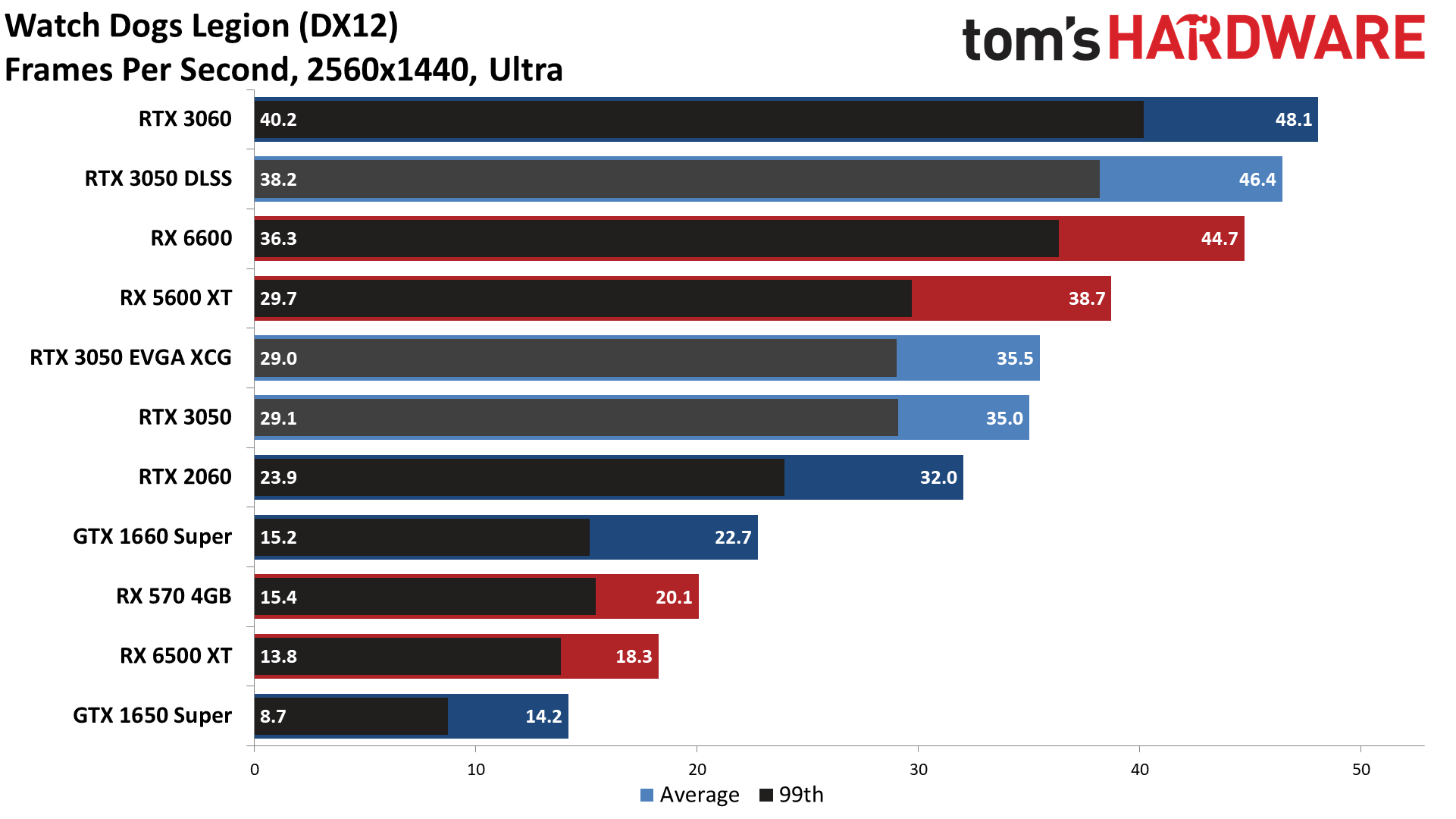

Okay, 1440p ultra definitely isn't the sweet spot for the RTX 3050, but while most of the other cards dropped well below 30 fps (1660 Super and above being required to remain "playable"), the RTX 3050 averaged 44 fps. In fact, it averaged over 30 fps in all seven of the games in our test suite. If you feel like beating a dead horse, it's also more than twice as fast as the RX 6500 XT this time.

DLSS starts to make even more sense at 1440p ultra, though the gains aren't uniformly better. HZD performance improved by 21% this time, RDR2 showed its largest gains but still only 12%, and WDL wins out with the biggest improvement so far in our DLSS testing, with 33% higher performance. That was enough to overtake both the RX 5600 XT and RX 6600, and to land just a few percent behind the RTX 3060 (which of course could run with DLSS as well).

Not counting DLSS, the RTX 3050 is also the slowest of the mainstream graphics cards that we tested. Even at 1440p ultra, the RTX 2060 still eked out a 5% lead, and in the individual game charts, the 3050 only beat the 2060 in Forza Horizon 5 and Watch Dogs Legion, both games that are known to like having 8GB of VRAM or more. The RX 5600 XT was 15% faster as well, the RX 6600 came out 28% ahead, and the RTX 3060 ended up 37% faster than the 3050.

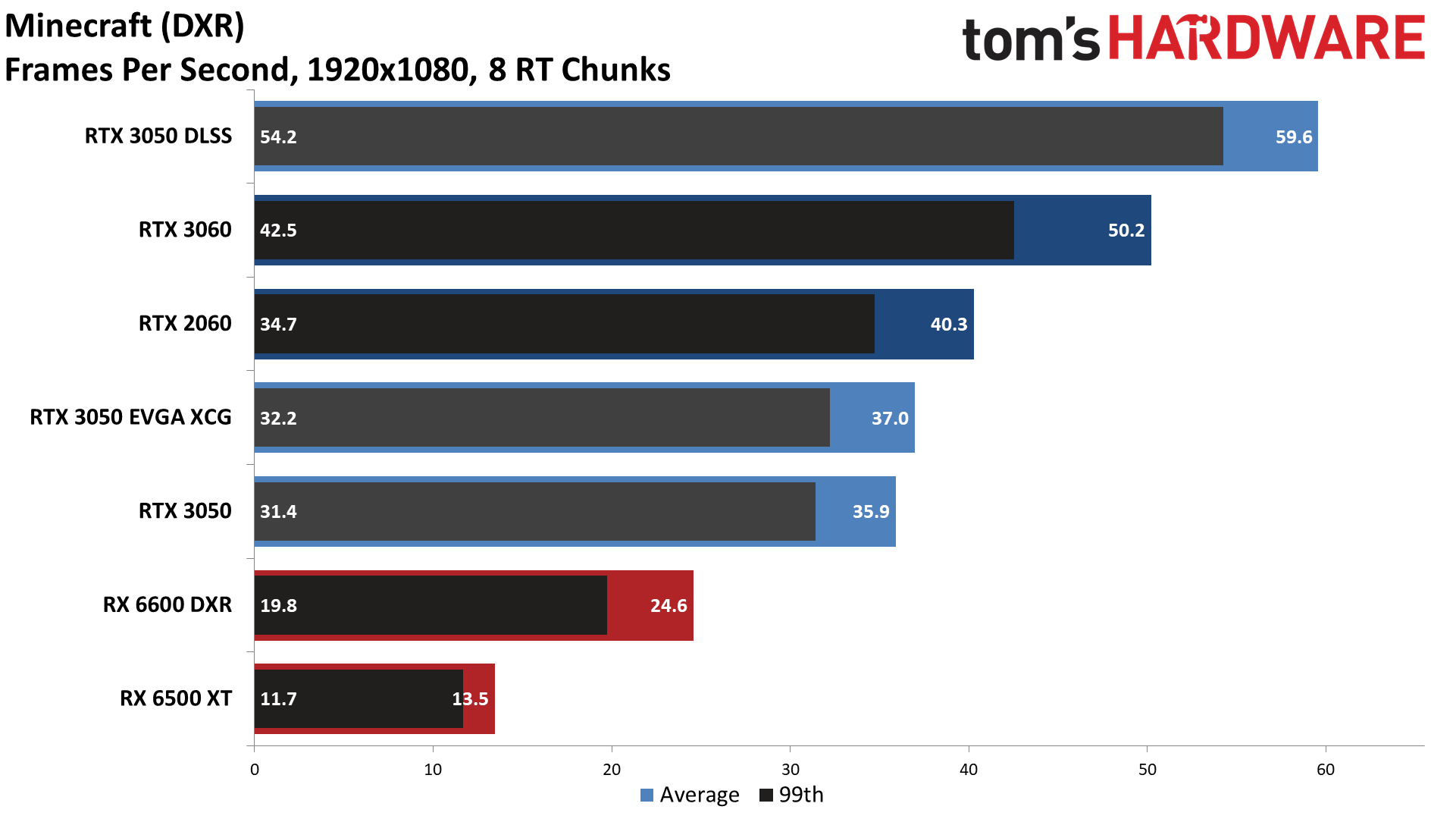

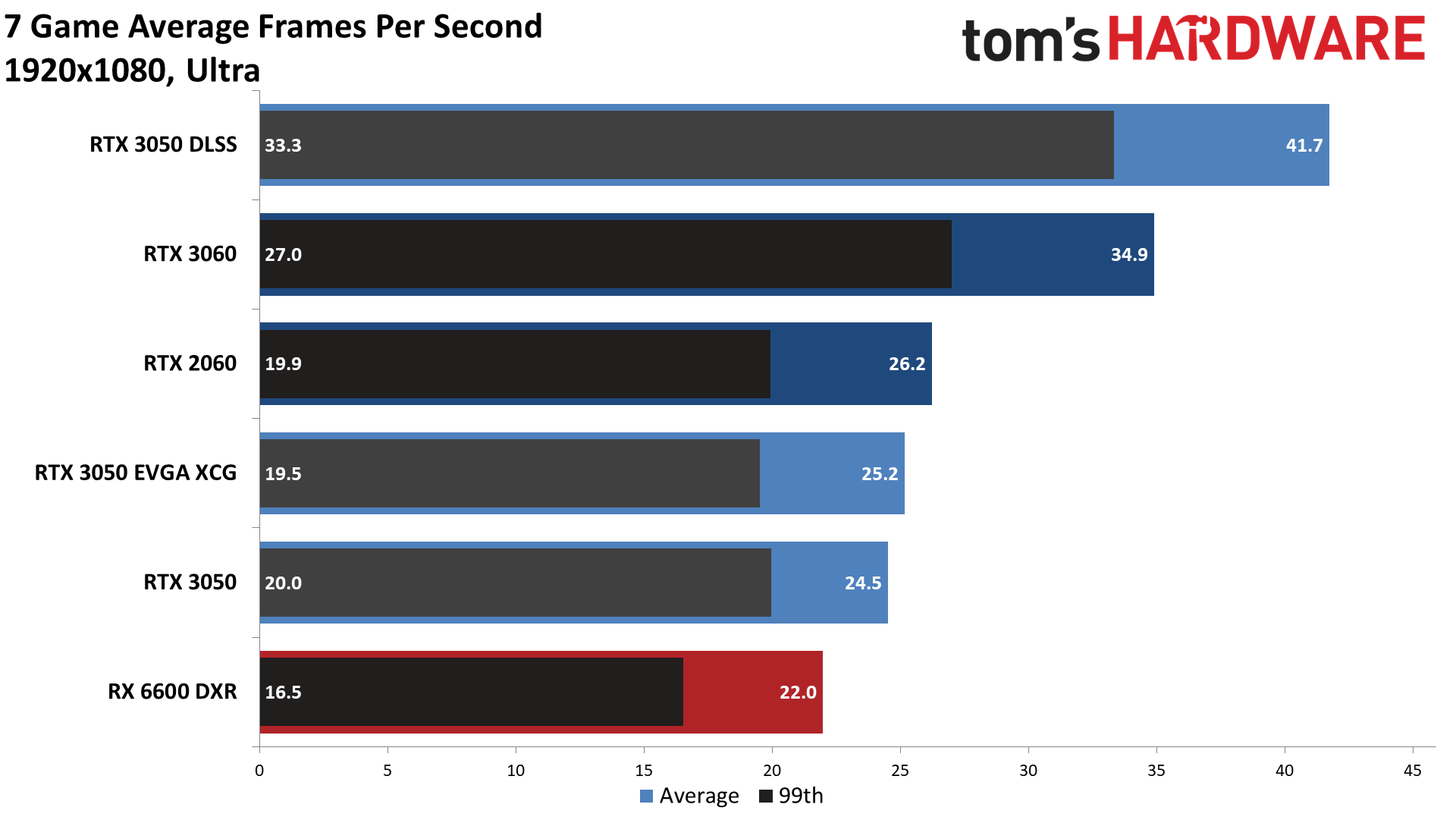

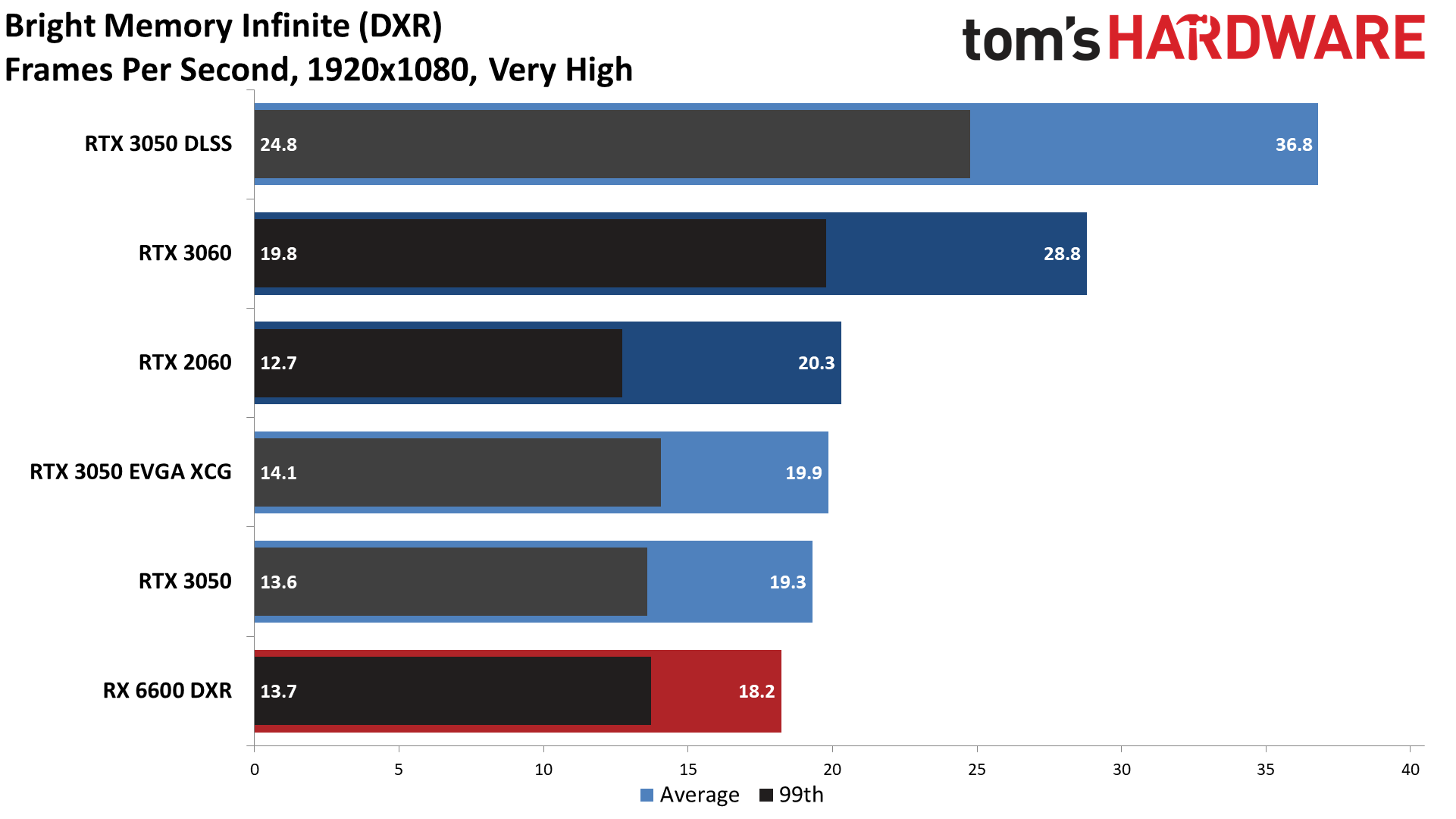

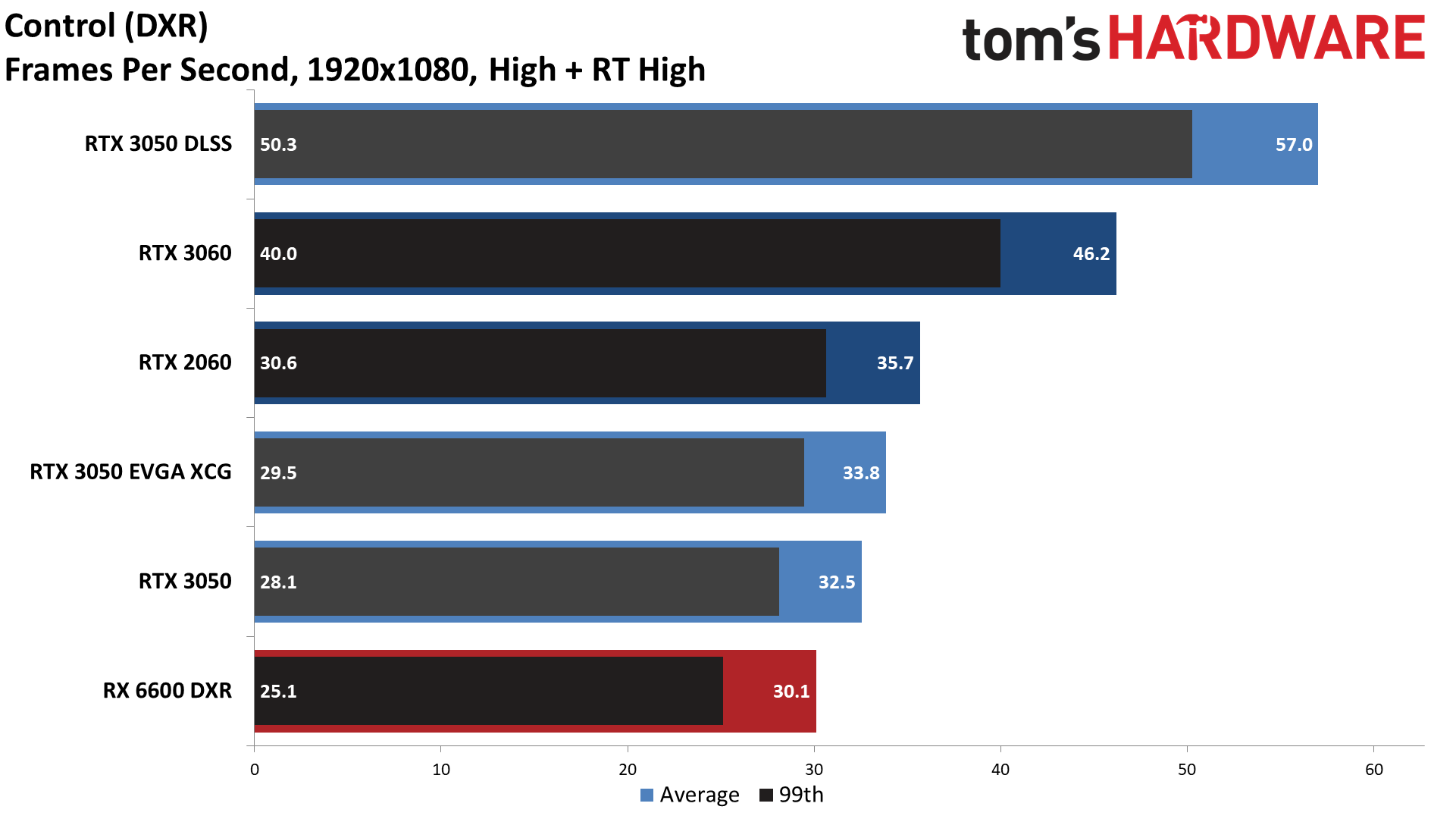

GeForce RTX 3050 Ray Tracing Performance

As an RTX card, ray tracing performance is something to consider, and we tested DLSS on the RTX 3050 as well (in quality mode where applicable, though Minecraft selects its own setting). RT hardware is basically required to run the expanded test suite (GTX 10/16-series cards with 6GB or more VRAM can try to run RT in most of the games in our DXR suite), but with a bit more time, we've now managed to collect performance results from some competing GPUs and have charts. (Yay for charts! Who doesn't love these things?)

As we've stated previously, while games with simpler forms of RT that only use one effect (typically shadows) exist, we don't find the often minuscule visual gains to be worth the performance penalty. In some cases, like Dirt 5, Shadow of the Tomb Raider, and World of Warcraft, it's hard to tell the difference, even if you're specifically staring at the shadows. As a result, our RT test suite uses games that all include multiple RT effects.

Incidentally, the RX 6500 XT wouldn't even let us try to run at our selected RT settings in Control or Fortnite, likely due to only having 4GB VRAM, resulting in a score of "0" for now. The RX 6600 successfully launched Fortnite with our "DXR medium" settings, but it also crashed repeatedly during attempted benchmarking (note that the RX 6600 previously ran Fortnite just fine). We assume there are some driver issues to work out, but we did manage to collect some data for the game.

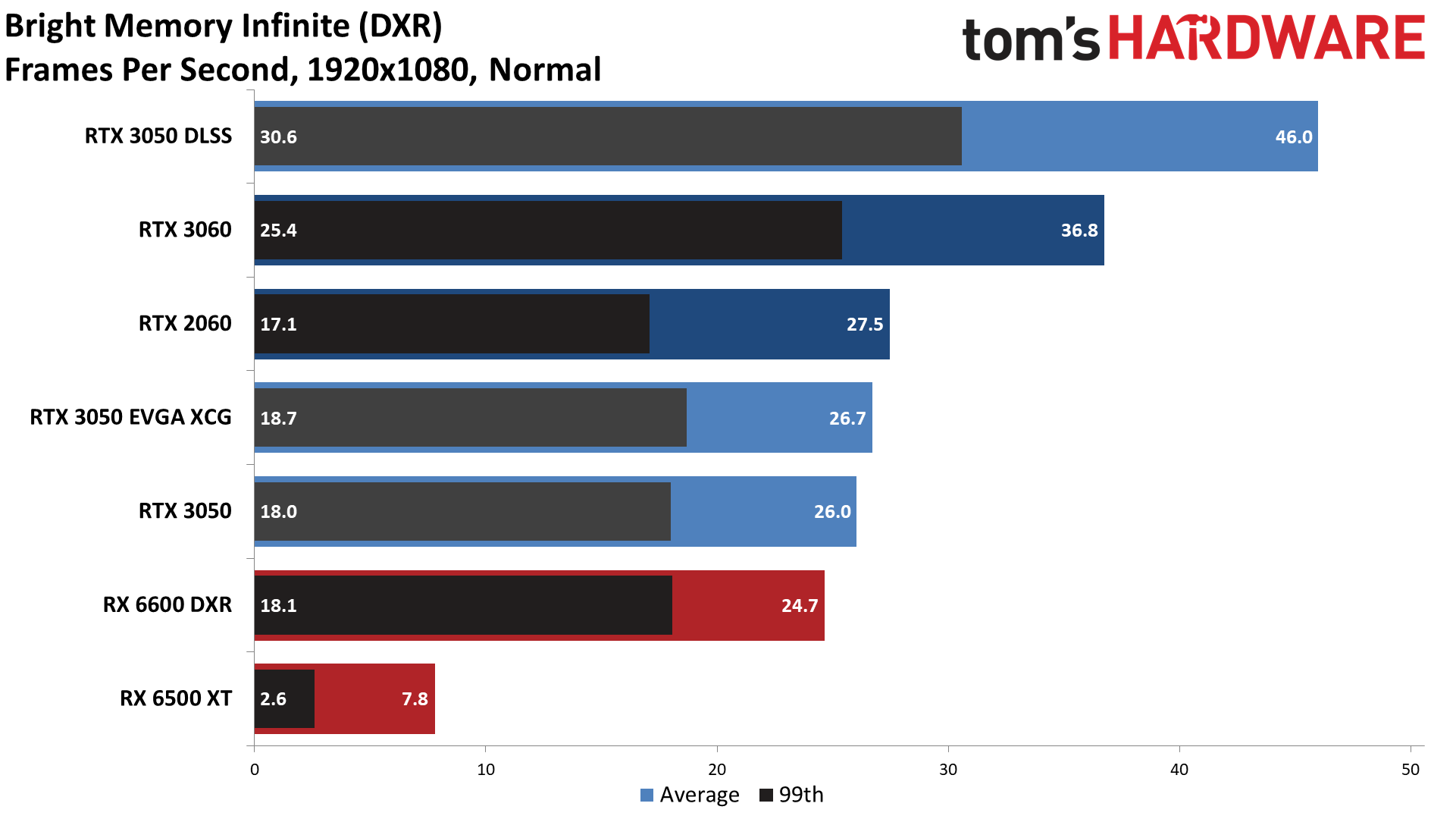

While we tested DXR with both medium and ultra settings, the latter mostly proves too much for the RTX 3050. Sure, it breaks 30 fps with DLSS in all of the games, but generally speaking, the RTX 3050 hardware is intended for medium to high settings with ray tracing enabled. Therefore, we'll focus the rest of the discussion on the DXR medium results.

Once again, the RTX 3050 ends up being slightly slower than the RTX 2060, even in ray tracing games. At 1080p medium, the best it can do is a tie in Cyberpunk 2077. That officially makes this the slowest desktop RTX card, by about 7%. But while that might seem bad, you only need to look at the RX 6500 XT, or even the RX 6600, to see just how bad things can get.

The RTX 3050 averaged 40 fps across our demanding DXR test suite at native 1080p. Using the same settings, even if we discount the failed attempts at two of the games, the RX 6500 XT was still 60–80% slower. In other words, the RTX 3050 was typically more than three times as fast in games with more than a token amount of RT effects.

The RTX 3050 also beat the RX 6600 in DXR performance by 10%, and turning on DLSS only makes things look worse for AMD. DLSS quality mode improved the RTX 3050's performance by 60% on average (and 70% at our ultra settings), making the RTX 3050 potentially 75–95% faster than the RX 6600. Interestingly, without DLSS, AMD's RX 6600 did notch one victory in Metro Exodus Enhanced Edition, where it was 10–15% faster.
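The 75–95% range follows from stacking the native lead and the DLSS uplift multiplicatively rather than adding them. Here is a minimal sketch of that arithmetic using only the figures quoted above; the `compound` helper is a made-up name, and the ultra-settings lead is back-calculated rather than a number from the review.

```python
def compound(*gains_pct: float) -> float:
    """Combine successive percentage gains multiplicatively; returns the total gain in percent."""
    total = 1.0
    for gain in gains_pct:
        total *= 1 + gain / 100
    return (total - 1) * 100


# DXR medium: 10% native lead over the RX 6600, then a 60% DLSS uplift.
print(f"Medium with DLSS: about {compound(10, 60):.0f}% faster")

# Inferred, not stated in the review: the native lead at ultra that a 70% DLSS
# uplift would need in order to reach the quoted 95% upper bound.
implied_ultra_lead = (1.95 / 1.70 - 1) * 100
print(f"Implied native lead at ultra: about {implied_ultra_lead:.0f}%")
```

Simply adding 10% and 60% would give 70%, which understates the combined gap.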

If you're at all interested in giving ray tracing games a shot but you want to keep costs as low as possible, the RTX 3050 is a good effort. The RTX 2060 still delivered better performance overall, but it's certainly possible to get playable framerates in all of the major RT-enabled games, particularly if the game supports DLSS.

GeForce RTX 3050 Power, Temps, Noise, Etc.

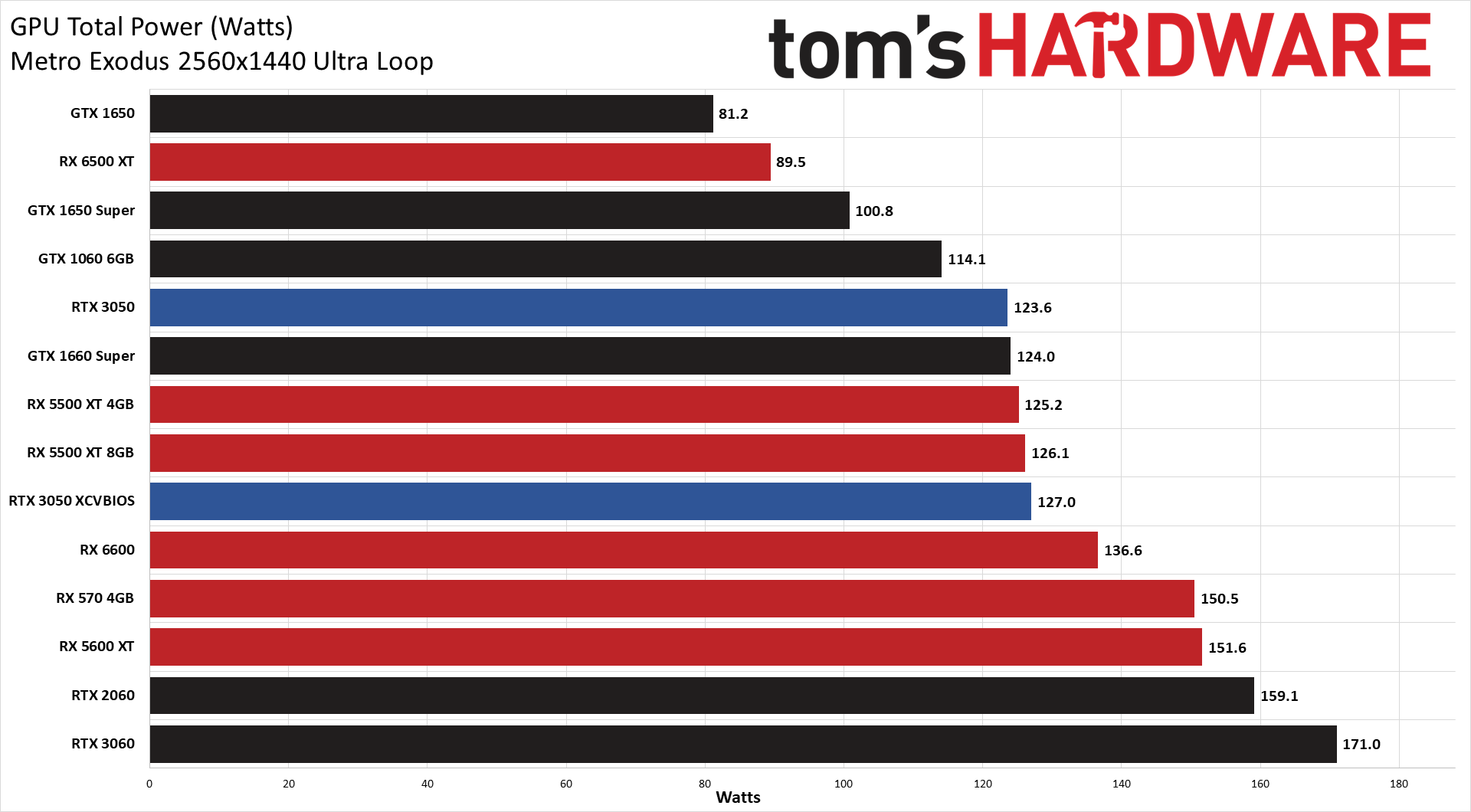

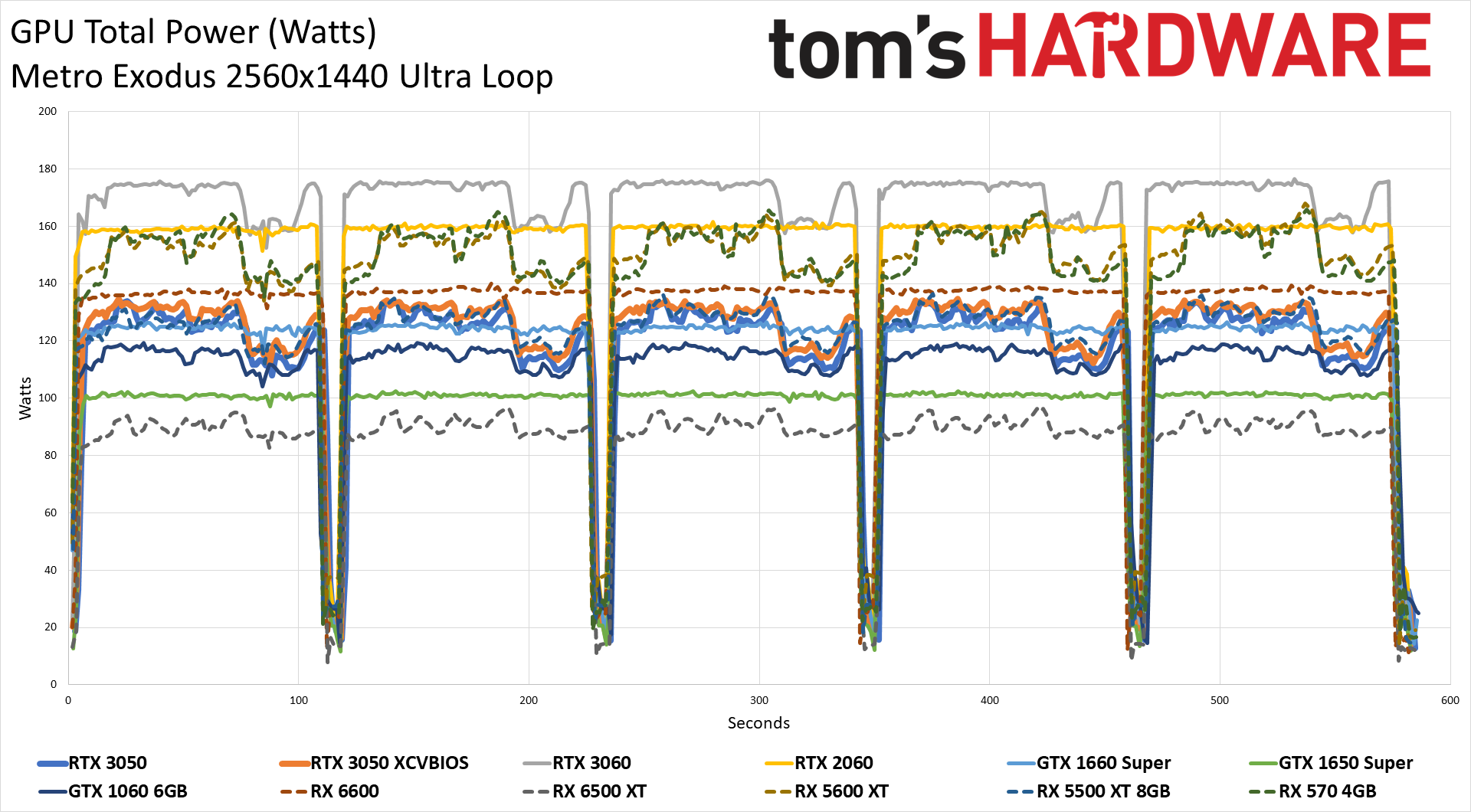

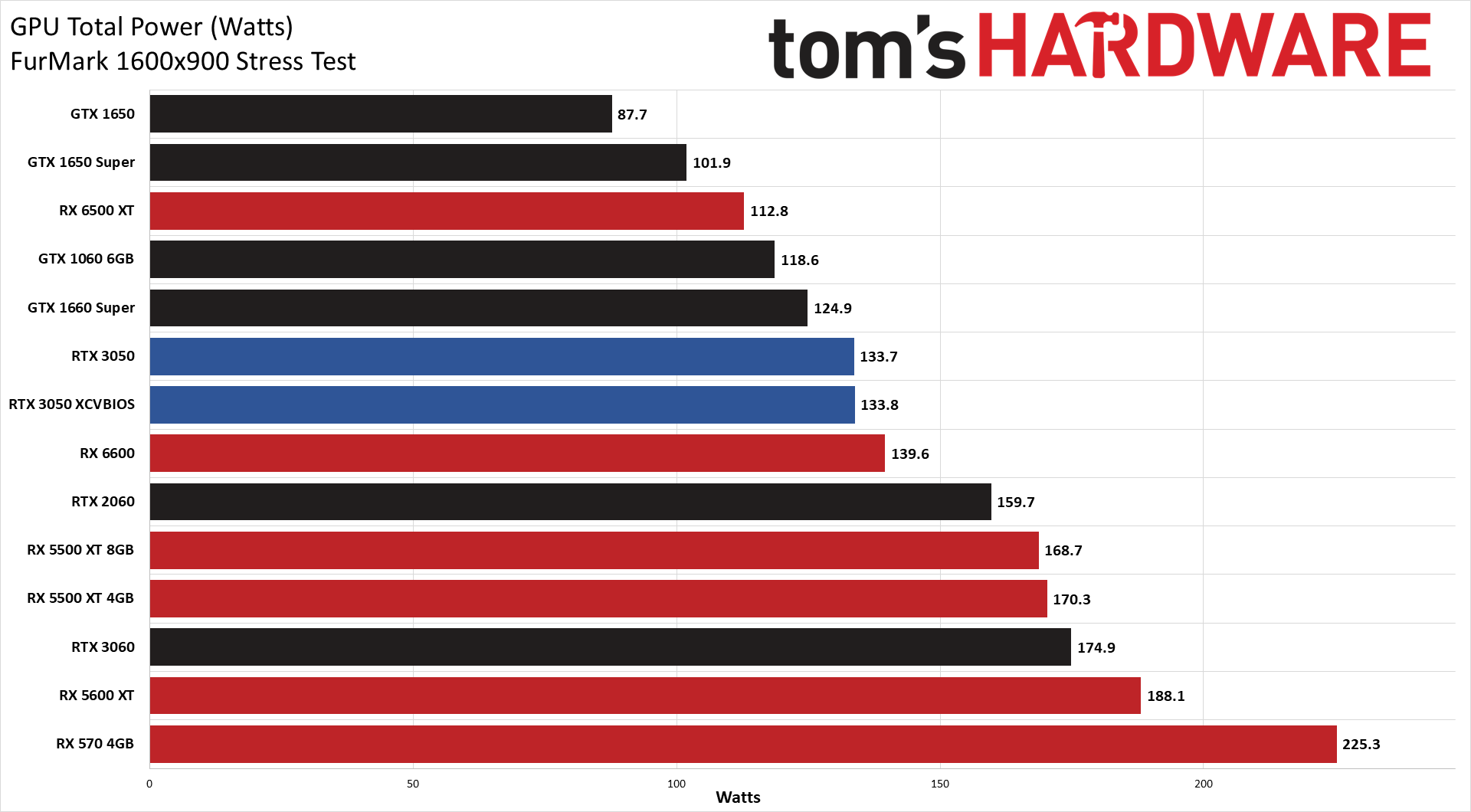

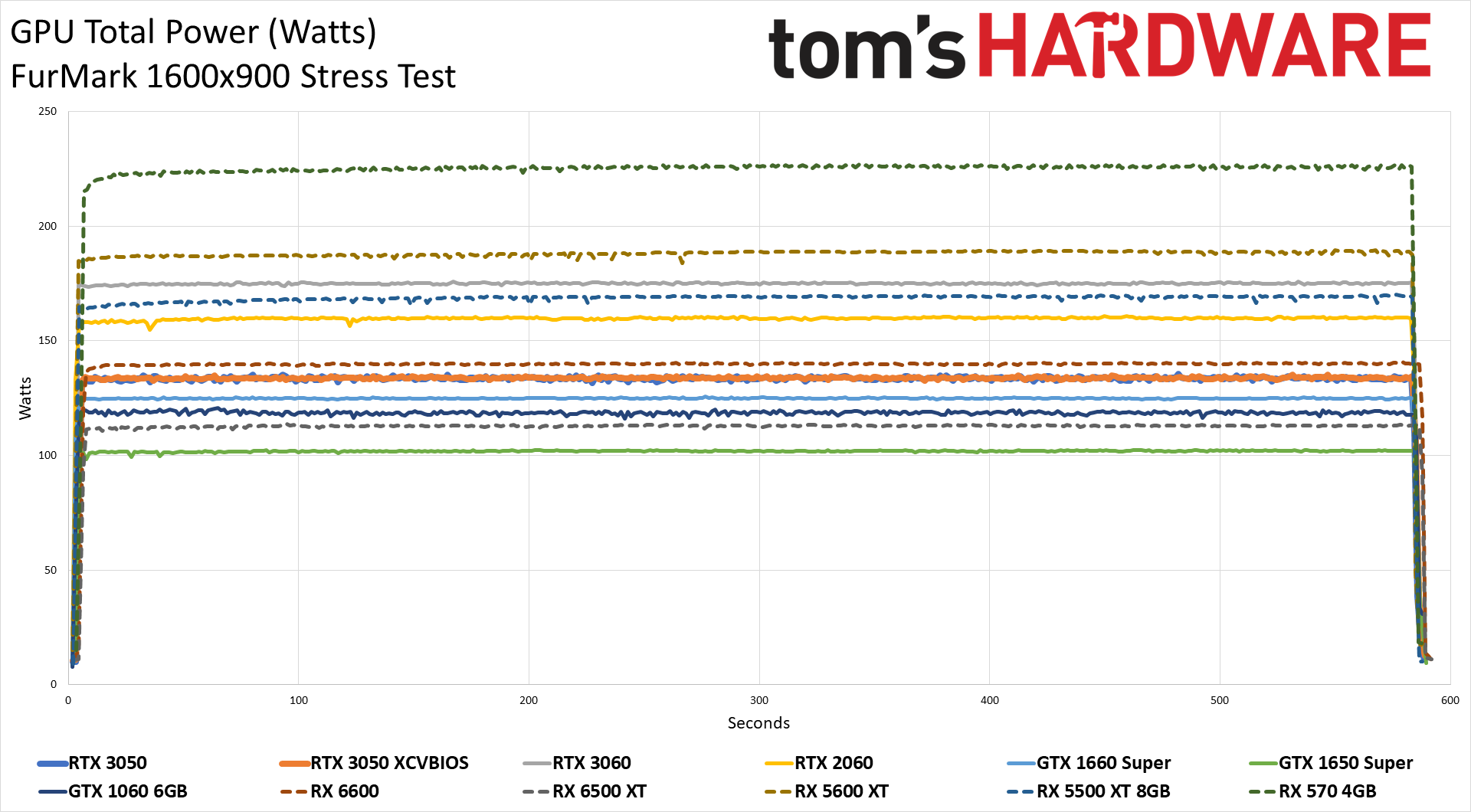

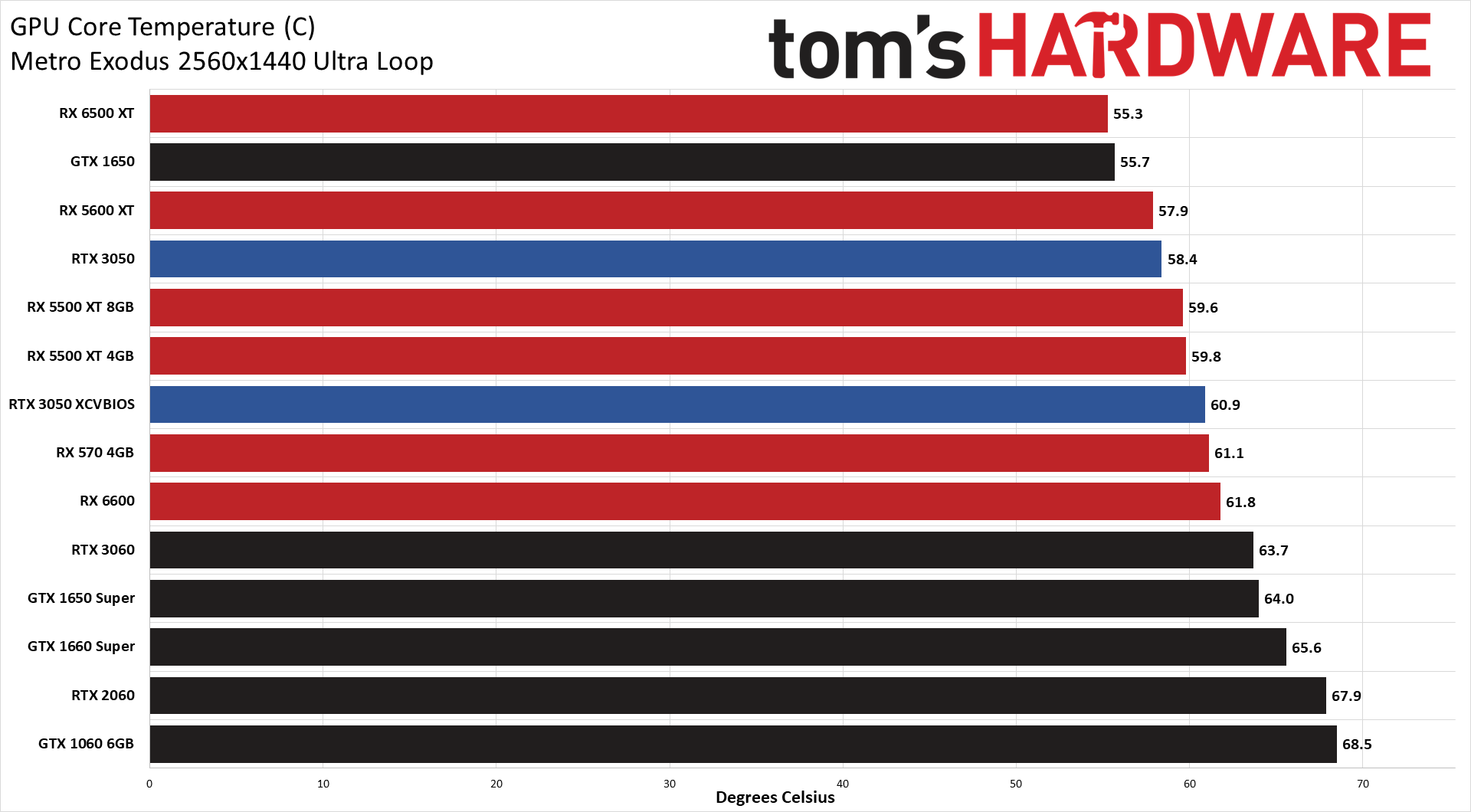

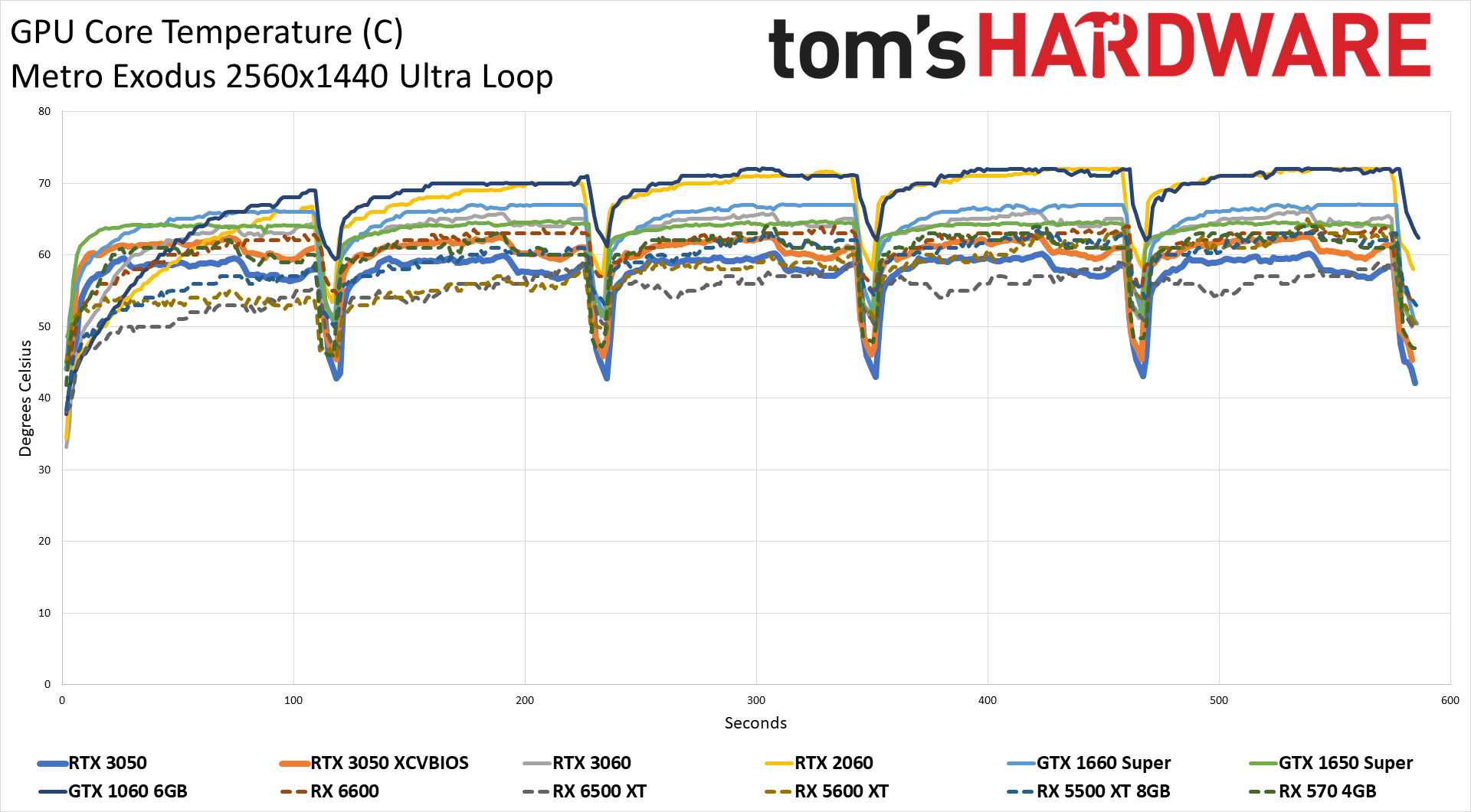

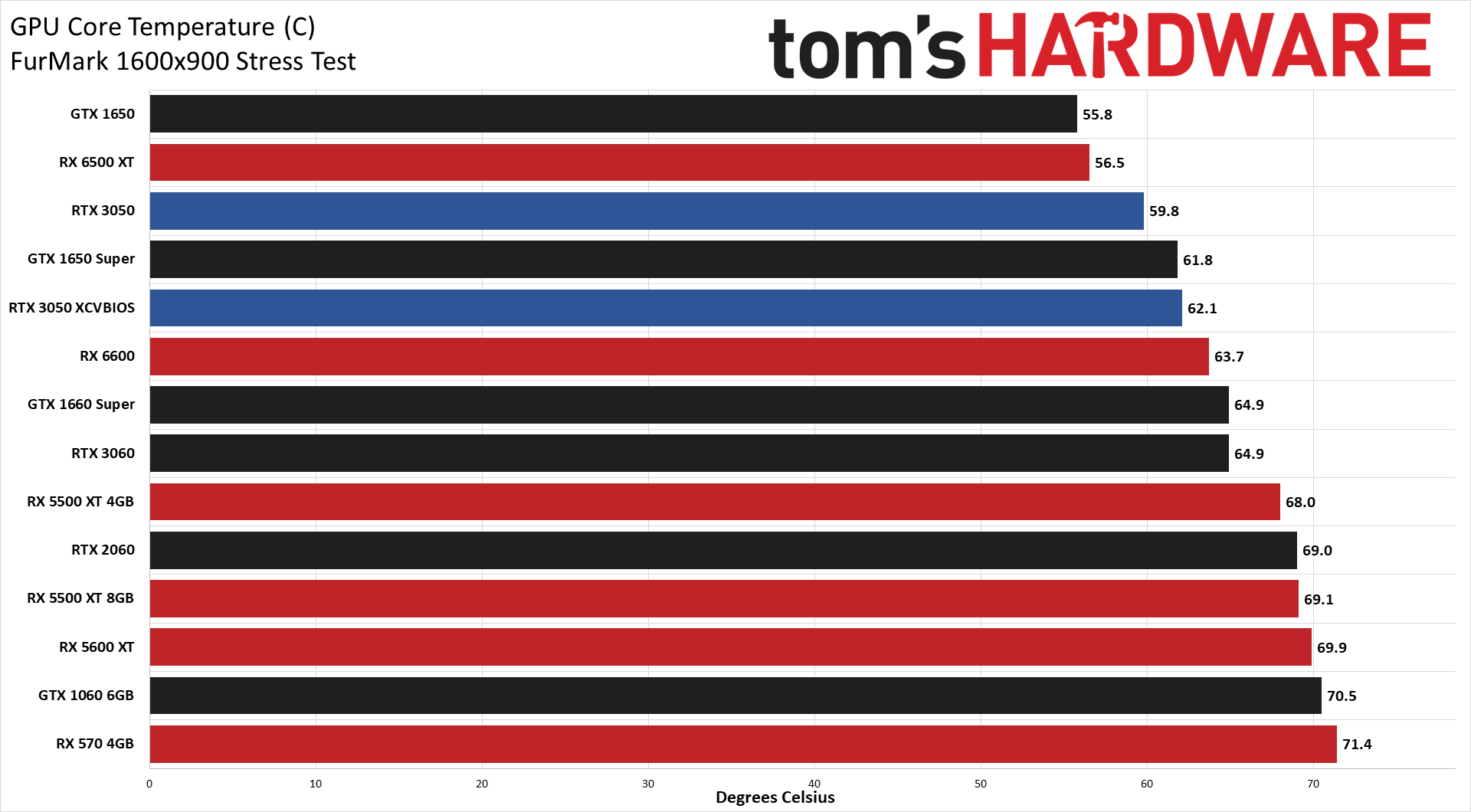

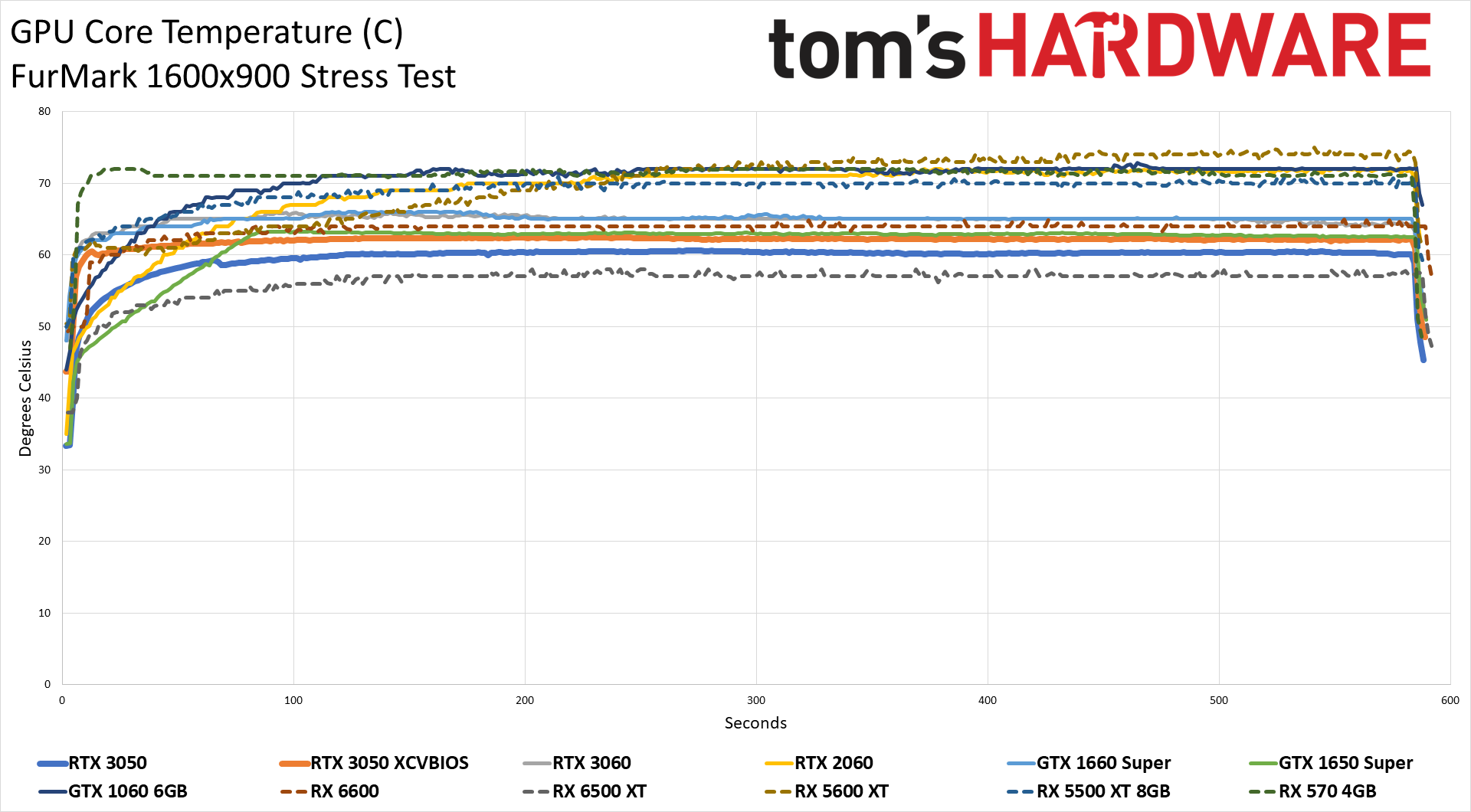

The GeForce RTX 3050 has an official TDP (board power) rating of 130W. We tested with both the original VBIOS and the corrected "reference clocks" VBIOS, and thought it would be interesting to include both results here. We're using our Powenetics testing equipment and procedures to measure in-line GPU power consumption and other aspects of the cards. We collect data while running Metro Exodus at 1080p/1440p ultra (whichever uses more power) and run the FurMark stress test at 1600x900. Our power test PC remains the same old Core i9-9900K as we've used previously, as it's not a power bottleneck at all.

Power usage is one of the RTX 3050's few disadvantages relative to the RX 6500 XT. The EVGA RTX 3050 averaged 124W of power during the Metro Exodus benchmark loop, compared to just 90W for the 6500 XT. Of course, it also provided substantially better performance, but potentially some PCs with weaker power supplies might benefit — though if that's the case, we'd recommend upgrading your PSU as well as your GPU. Meanwhile, FurMark's power use was slightly higher at 134W on average. Interestingly, the XC VBIOS only made a 3.4W difference in gaming and almost no difference at all in FurMark.

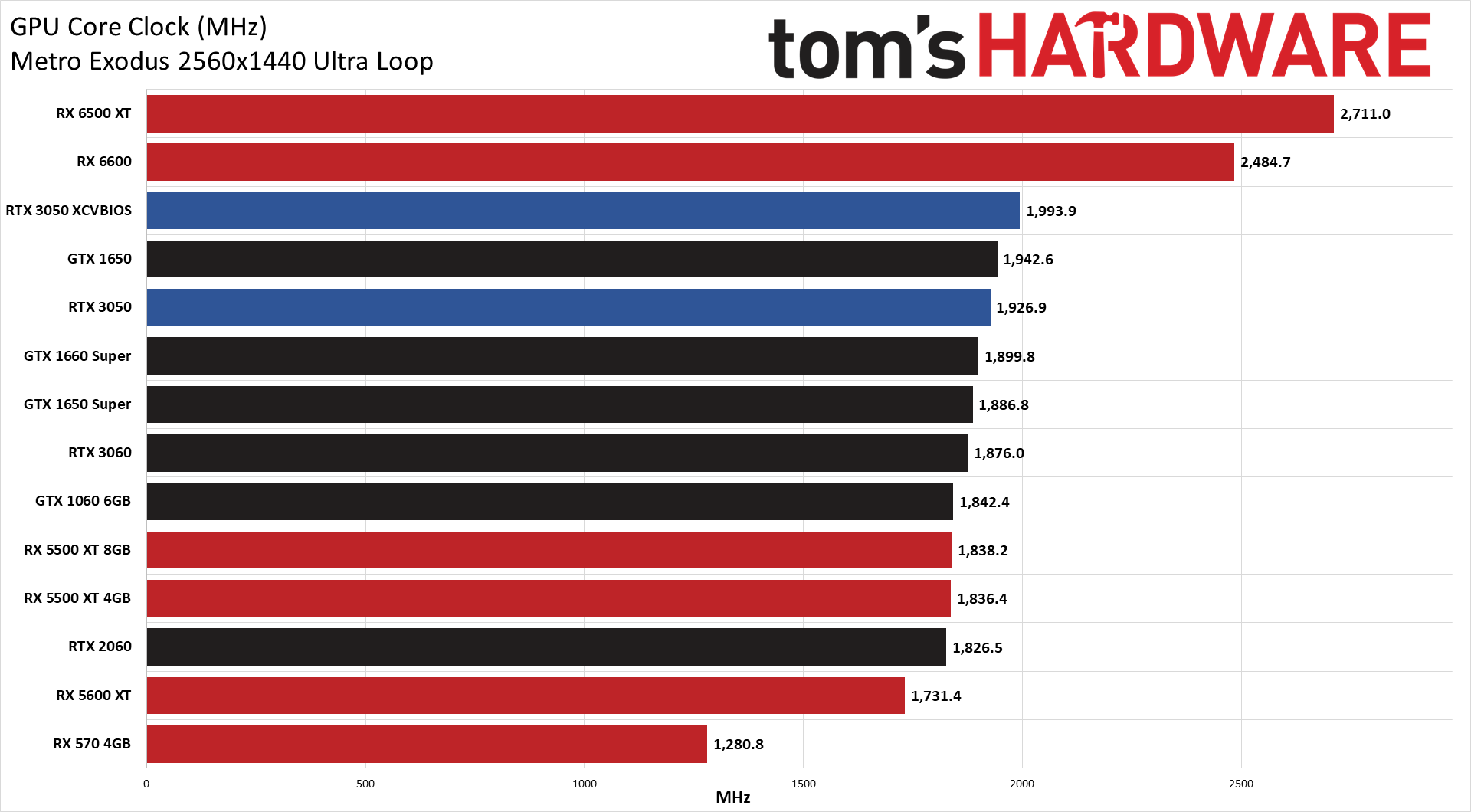

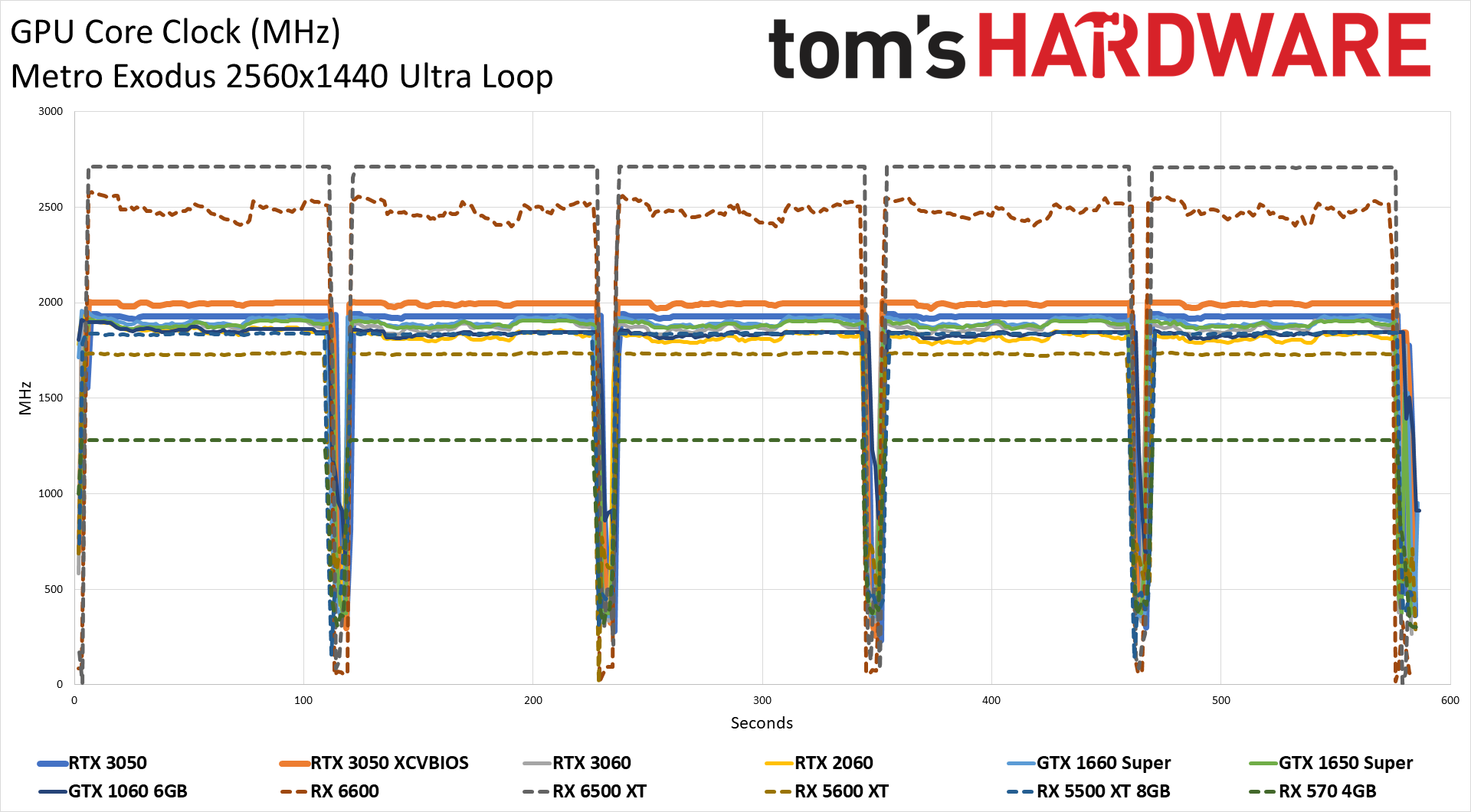

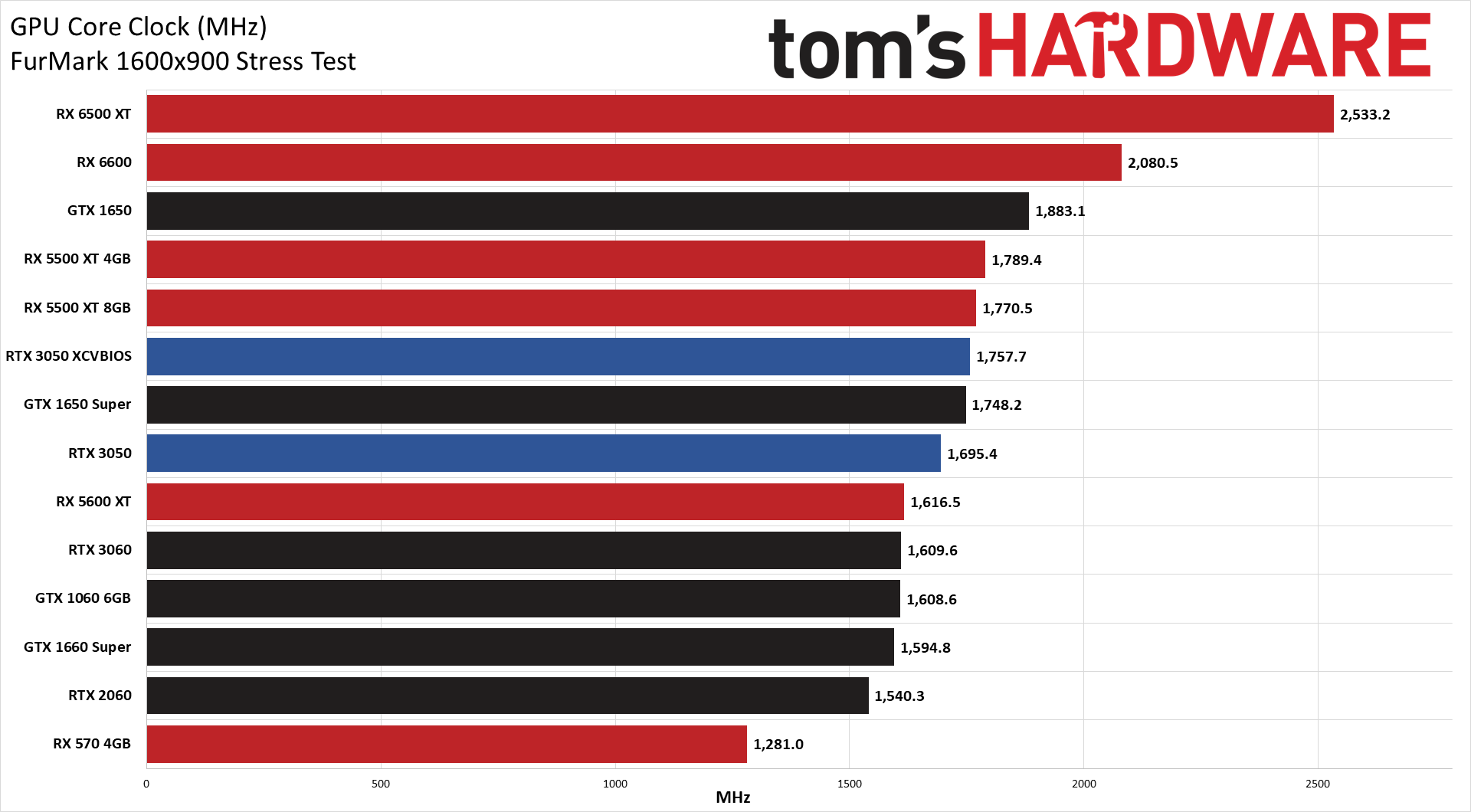

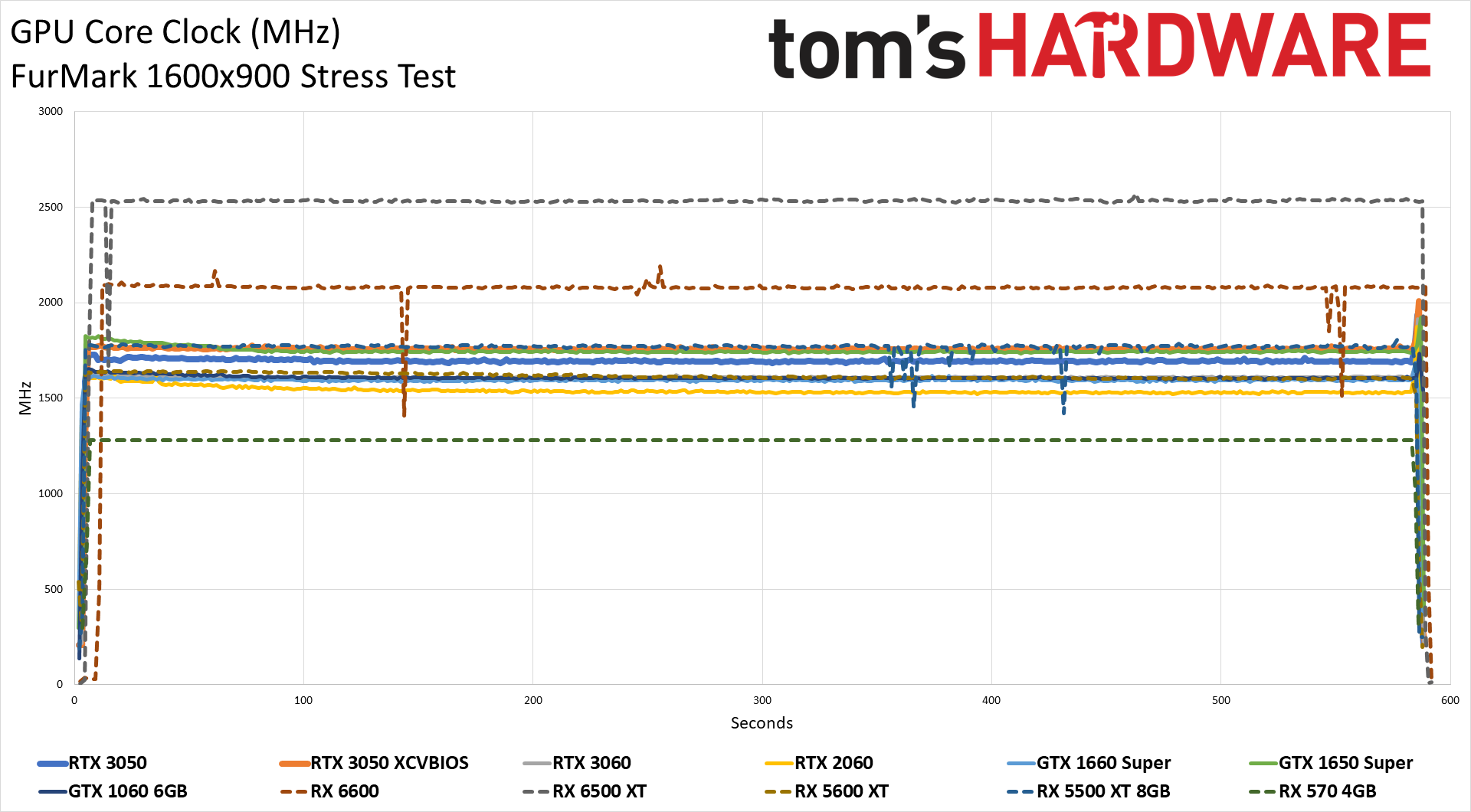

Clock speeds for both the reference-clocked VBIOS and the XC VBIOS end up quite a bit higher than the official boost clock, which is typical of Nvidia GPUs. The reference VBIOS still averages 1927MHz throughout the Metro Exodus test, and the XC VBIOS clocked in at 1994MHz, 67MHz higher and almost exactly in line with the difference in official boost clocks (1MHz off). Clock speeds were over 200MHz lower in FurMark, but that's also expected behavior. The difference in clock speeds between the two VBIOS versions was again almost exactly equal to the difference in boost clock rating — 63MHz this time, compared to the 68MHz difference in specs.

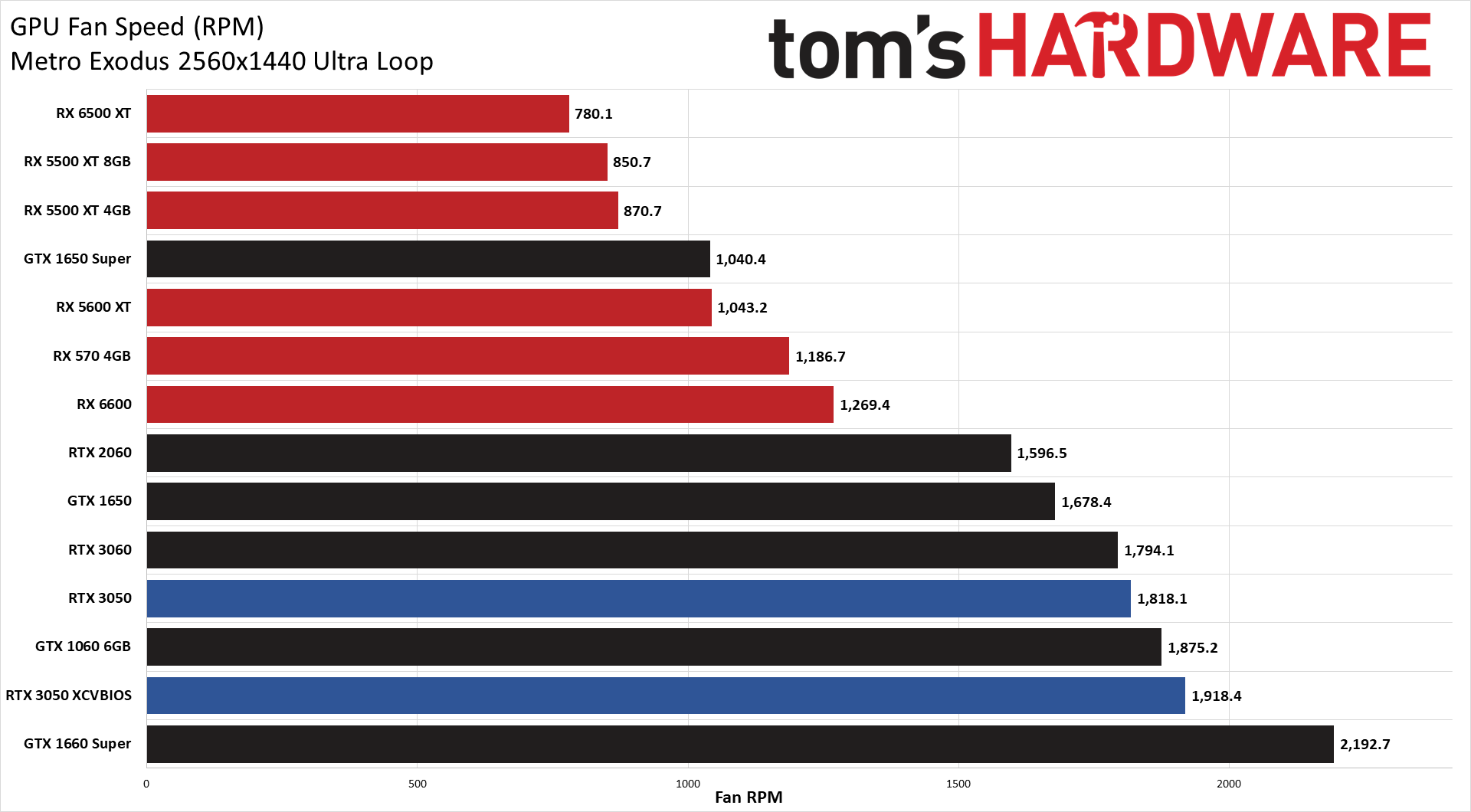

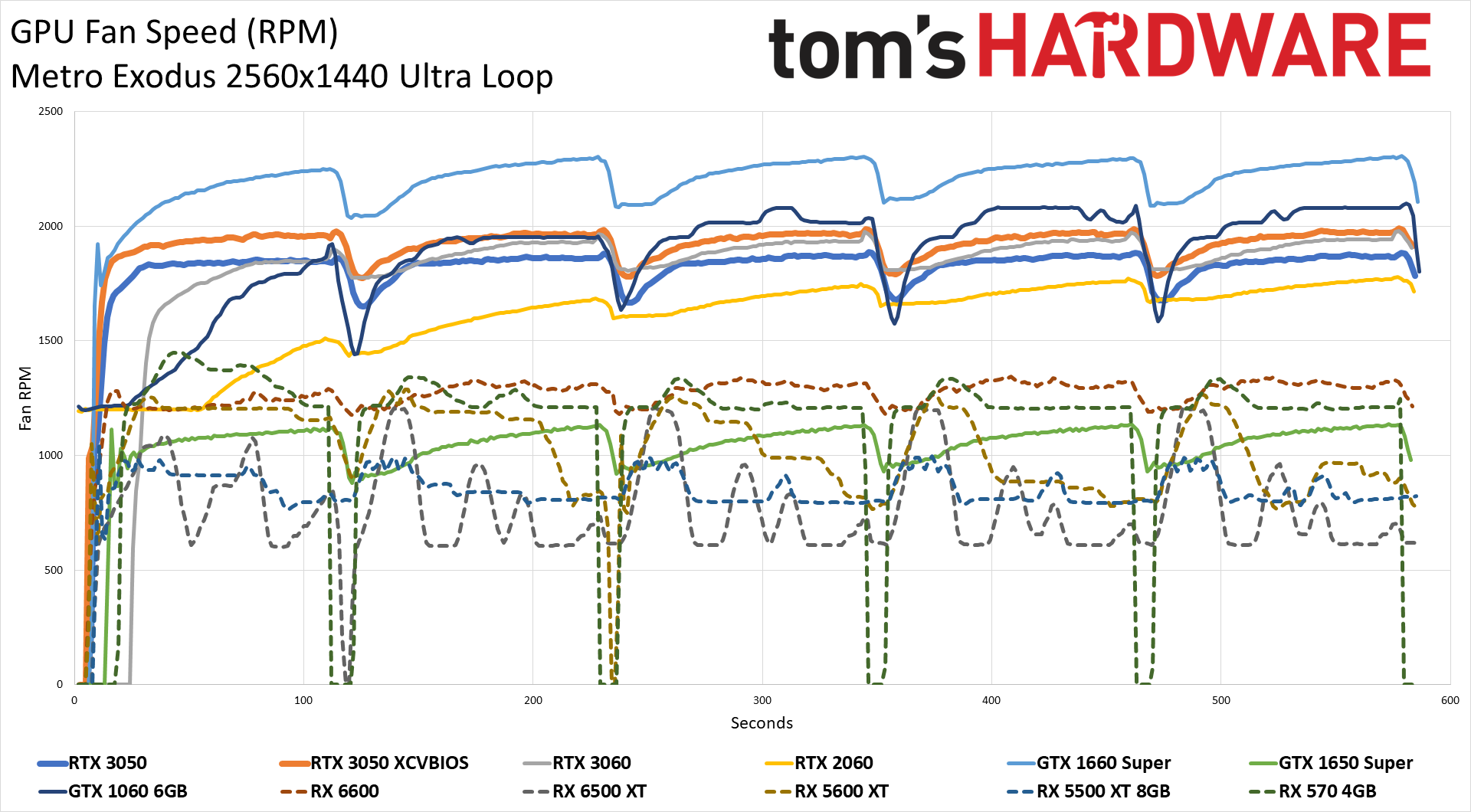

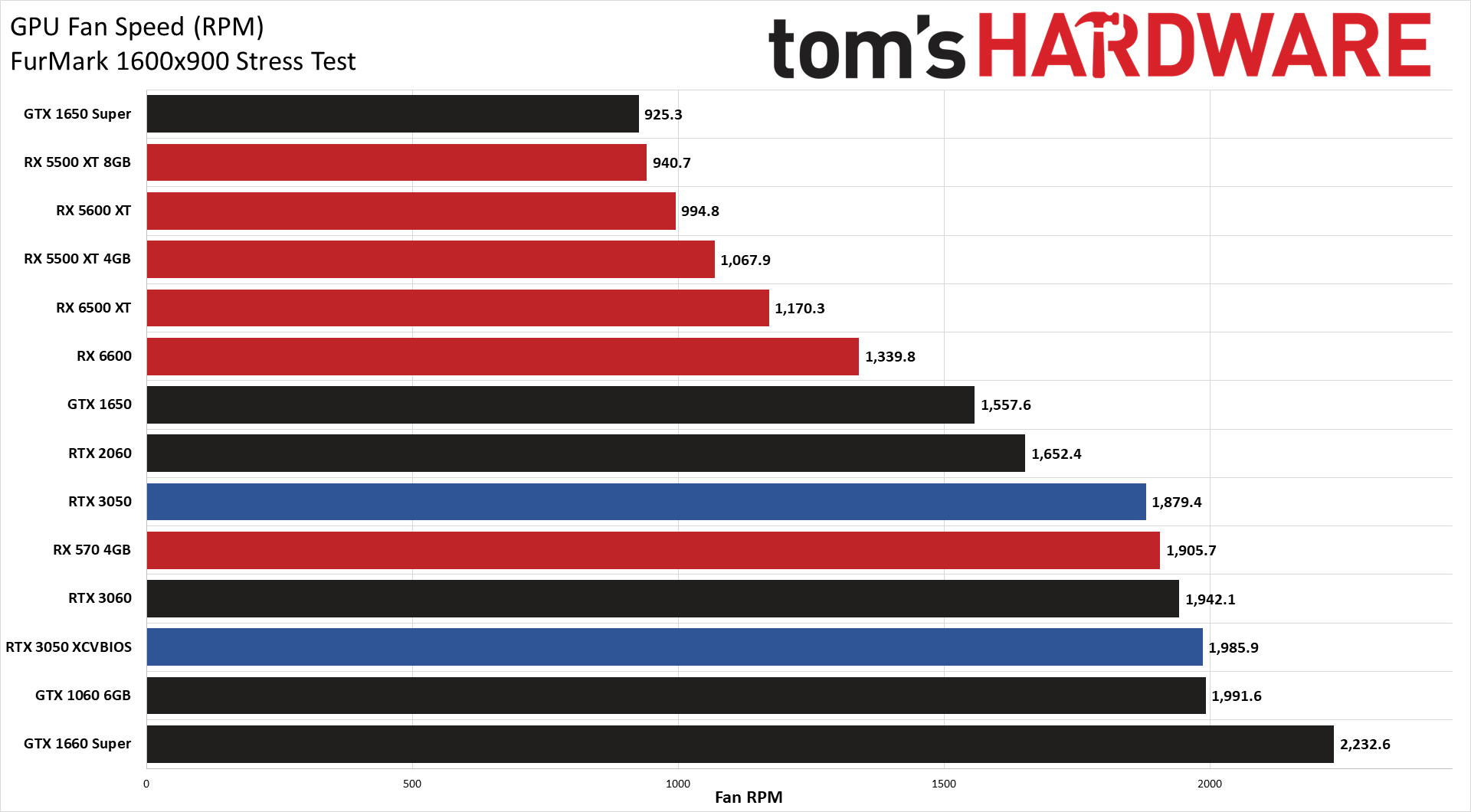

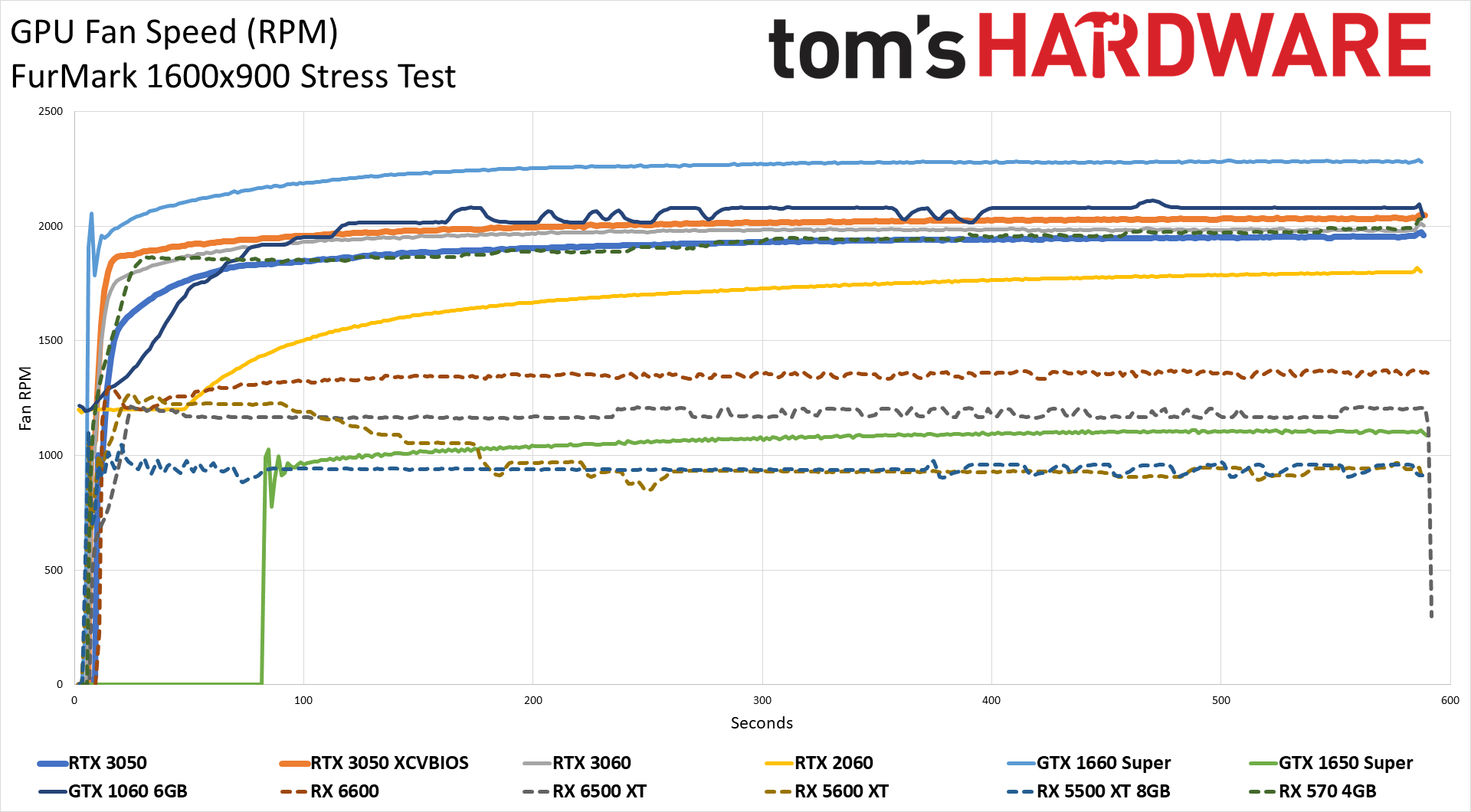

The RTX 3050 doesn't require a ton of power, which means it also doesn't generate a lot of heat or require high fan speeds. In FurMark, temperatures maxed out at around 60C for the reference-clocked card and 62C for the XC VBIOS, and they were about 1C lower in Metro Exodus. Fan speeds showed a slightly larger gap, with the reference card sitting at 1818 RPM on average compared to 1918 RPM for the XC VBIOS in Metro. FurMark bumped the fan speed up another 60-70 RPM.

Along with the Powenetics data, we also measure noise levels at 10cm using an SPL (sound pressure level) meter. It's aimed right at the GPU fans to minimize the impact of other fans like those on the CPU cooler. The noise floor of our test environment and equipment measures 33 dB(A), and the EVGA RTX 3050 reached a peak noise level of 45.3 dB during testing, with a fan speed of 71%. That's typical of EVGA's budget-oriented cards, opting for higher fan speeds with weaker heatsinks and compromising a bit on noise levels. It's not that the card is loud, but it's certainly louder than some of the other mainstream cards we've tested. We also tested with a static fan speed of 75%, at which point the card generated 48.4 dB of noise, and in warmer environments, it's entirely possible for the card to exceed that value.

GeForce RTX 3050: Good in Theory…

There are two ways to look at the GeForce RTX 3050: How it's ostensibly supposed to stack up to the competition, and what it looks like in the real world. Let's start with the former.

The RTX 3050 brings all of Nvidia's RTX features to a new, lower price point. Technically, we saw RTX 2060 cards bottom out at around $270 back in the day (from mid-2019 to early 2020), but the lowest MSRP was still $299. However, the 3050's lower price does come with slightly lower performance, except in a few instances where the extra 2GB VRAM comes into play, at which point the RTX 3050 can basically tie the RTX 2060.

The real saving grace for all of the RTX cards continues to be DLSS. With or without ray tracing, DLSS can provide a nice boost to performance with virtually no loss in image fidelity. We tested DLSS in both our standard and DXR test suites, and it's supported in quite a few games that lack ray tracing, like Red Dead Redemption 2, God of War, and many others. AMD's FSR isn't really a direct competitor, either. It competes more with Nvidia's Image Scaling, or at least, Radeon Super Resolution does (it can all get a bit confusing). In short, DLSS quality mode wins out on image quality and does things that FSR, NIS, and RSR can't. Maybe Intel's XeSS will be able to directly compete with DLSS at some point, but DLSS has a significant head start on game developer adoption.

In theory, then, the RTX 3050 looks like a good option. It's basically the same price as the previous-gen GTX 1660 Super, offers ray tracing and DLSS support (along with Nvidia Broadcast and any other Nvidia technologies that can use the RT or Tensor cores), gives you 2GB more VRAM, and performance is typically 10–15% faster. So if you could buy the RTX 3050 for $249, it's certainly a reasonable option. Which brings us to the practical aspects of this review.

| Brand | Product Name | Price |
|---|---|---|
| Asus | ROG-STRIX-RTX3050-O8G-GAMING | $489 |
| Asus | DUAL-RTX3050-O8G | $439 |
| Asus | PH-RTX3050-8G | $249 |
| EVGA | XC GAMING | $329 |
| EVGA | XC BLACK GAMING | $249 |
| Gigabyte | GV-N3050GAMING OC-8GD | $379 |
| Gigabyte | GV-N3050EAGLE OC-8GD | $349 |
| Gigabyte | GV-N3050EAGLE-8GD | $249 |
| MSI | RTX 3050 Gaming X 8G | $379 |
| MSI | RTX 3050 Ventus 2X 8G OC | $349 |
| MSI | RTX 3050 Aero ITX 8G | $249 |
| Zotac | Twin Edge OC | $399 |
| Zotac | Twin Edge | $249 |

The GeForce RTX 3050 officially goes on sale tomorrow, January 27. We've already seen advance listings pop up with prices that are nowhere near the "recommended" $249, and in some cases, prices are $400 or more. We'll see how things shake out over the coming weeks and months, but when the GTX 1660 Super has a current average selling price of $475 on eBay during the past week — and that's after the recent drop in GPU prices that we've noticed — there's little reason to expect the RTX 3050 to sell at substantially lower prices. If the miners don't nab them, the bots and scalpers probably will.

You can see the above table of "official" launch prices from Nvidia's various add-in card partners. Every one of them has a card with a $249 price point, but the jump from there to the overclocked cards ranges from as little as $80 for the EVGA XC Gaming to a whopping $240 gap for the Asus Strix card. Considering EVGA inadvertently proved there's little difference between the XC Gaming and XC Black other than the VBIOS, you probably don't want to spend a ton of extra money on the typically modest factory overclocks.
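To make the size of those jumps concrete, the per-brand premiums can be computed straight from the launch price table above. This is a small sketch using only the listed prices; the variable and function names are hypothetical.

```python
# Partner launch prices in USD, copied from the table above.
LAUNCH_PRICES = {
    "Asus": [489, 439, 249],
    "EVGA": [329, 249],
    "Gigabyte": [379, 349, 249],
    "MSI": [379, 349, 249],
    "Zotac": [399, 249],
}
BASE_MSRP = 249


def premium_range(prices: list[int], base: int = BASE_MSRP) -> tuple[int, int]:
    """Return the (smallest, largest) markup over the base price among pricier models."""
    markups = [price - base for price in prices if price > base]
    return min(markups), max(markups)


per_brand = {brand: premium_range(prices) for brand, prices in LAUNCH_PRICES.items()}
for brand, (low, high) in per_brand.items():
    print(f"{brand}: +${low} to +${high} over the ${BASE_MSRP} base model")

smallest = min(low for low, _ in per_brand.values())
largest = max(high for _, high in per_brand.values())
print(f"Overall: +${smallest} to +${largest}")
```

The overall range matches the $80 (EVGA XC Gaming) and $240 (Asus Strix) figures cited above, and it also shows that every brand other than EVGA charges at least $100 more for its next model up.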

For Nvidia's partners, it's clear that if they can successfully overclock a chip and sell it for more money, they would much rather sell a $349 or $489 card than a $249 model in a market where every card gets sold. We've seen this in the past, like the EVGA RTX 2080 Ti Black that was one of the few 2080 Ti cards with an official price of $999. They were almost never in stock, and most 2080 Ti models sold at $1,199 or more (the Founders Edition's price).

Fundamentally, it all goes back to supply and demand. Even if the RTX 3050 isn't great for mining — and in the current market, it most certainly isn't, averaging just 22MH/s in Ethereum, which would net a mere $0.60 per day at current prices — there are far too many other people looking to upgrade their PCs. The supply of the RTX 3050 at launch might be okay (it will still sell out in minutes), but it still uses the same GA106 chip as the RTX 3060, and we don't expect long-term supply to be any better than that card. As with the AIC partners, why would Nvidia want to sell GA106 chips in a nominally $249 card if it can put those same chips into a $329 card?

Given that the performance generally ends up being worse than the RTX 2060 and RX 6600, those cards should represent a practical ceiling on RTX 3050 prices. That doesn't bode well since both of those currently average around $510 on eBay. How much should you actually pay for an RTX 3050, if you're interested in buying one? That depends in part on how badly you need it, but we'd try to keep things under $350 as an upper limit. If you can't find the card for less than that, you should probably just wait.

Whatever you choose to do, let's also not forget that Ada Lovelace (we've heard it referred to by both the first and last name), a.k.a. the RTX 40-series, will likely land this fall. Nvidia will probably start with the top-tier parts like the RTX 4090 and 4080 rather than looking at the budget and mainstream parts, but AMD is also working on RDNA3. With both slated to use TSMC's N5 process, supply may or may not be any better than the current GPUs, but we expect a decent performance jump. Depending on where prices go between now and the fall, sitting out the current generation of GPUs isn't a bad idea at this stage.


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


logainofhades: So roughly 1660ti performance, with 2GB more vram, RTX, and DLSS, and $30 less MSRP. Could be worse, like the 6500xt was, I guess.

-Fran-: If found at MSRP and the card is not a joke manufacturer, then this card is not terrible!

I can't believe this is actually a compelling card at that price point, yikes!

Alas, as mentioned, good luck finding it at or even near MSRP... Sigh...

Regards.

RodroX: At MSRP (today) it seems a very decent card (it does have encoder, 8GB of VRAM and 8x pcie lines, not like the joke of the RX 6500XT).

But if we think about 2019 MSRP then it makes you cry.

It's unlikely to get this at MSRP anyways.

VforV: The sad reality of today's new GPUs:

1. RX 6500 XT, a pretty bad GPU even at $200 MSRP and worse at $270 which is where it currently sits.

2. RTX 3050 a regression at $250 MSRP based on pre-2019 prices and offerings, but an OK-ish one in current market... BUT most likely will cost above $400 and actually $500 at which point, the better GPU to buy is the RX 6600. No debate here at all.

3. Save us intel with Arc!? (can't believe it has come to this, :ROFLMAO:)

I would be surprised if intel Arc stays close to its MSRP in these times, despite them being "new" on the discrete GPU market. I fear the dire situation + intel badge will make them as expensive as Radeon, at least... maybe not nvidia level "expensive", but still bad enough price wise. I hope I'm wrong about this pessimistic prediction, though...

InvalidError:

VforV said: "3. Save us intel with Arc!? (can't believe it has come to this, :ROFLMAO:)"

Still manufactured at TSMC, still needs VRM bits, still needs GDDR6 chips, still needs parts to get shipped around for assembly and other expensive bits that are responsible for raising the baseline cost of new GPUs by ~$70. Unless Intel is willing to eat losses to seed the market, DG2 will likely start from $200 for the lowest-end model. Leaked benchmarks indicate that DG2 has yummy double and quad precision performance for big-number crunchers.

JarredWaltonGPU:

InvalidError said: "Still manufactured at TSMC, still needs VRM bits, still needs GDDR6 chips, still needs parts to get shipped around for assembly and other expensive bits that are responsible for raising the baseline cost of new GPUs by ~$70. Unless Intel is willing to eat losses to seed the market, DG2 will likely start from $200 for the lowest-end model. Leaked benchmarks indicate that DG2 has yummy double and quad precision performance for big-number crunchers."

Yup, and I strongly suspect Intel's base level Arc cards will end up competing more with the RX 6500 XT rather than the RTX 3050. In fact, I will be very surprised if the 128 EU/Vector Engine cards (assuming they have 4GB VRAM) can actually beat the RX 6500 XT. We'll know in the next couple of months, but the design sort of feels like beefed up Intel GPU with really weak RT capabilities plus some tensor cores for XeSS. Probably designed more for datacenter but then funneled over into the consumer sector. Raw 64-bit and 128-bit compute might be better than anything AMD and Nvidia offer for the price, but in games, FP64 and FP128 are totally irrelevant.

InvalidError:

JarredWaltonGPU said: "Probably designed more for datacenter but then funneled over into the consumer sector. Raw 64-bit and 128-bit compute might be better than anything AMD and Nvidia offer for the price, but in games, FP64 and FP128 are totally irrelevant."

They are all designing their chips with datacenter-first in mind since that is where the biggest bucks per wafer are. Intel being the newest kid on the block has a vested interest in letting potential customers for its pile-of-tiles megaliths sample what small-scale Xe can do on GPUs by not sacrificing its double/quad performance on the altar of market segmentation. Yet.

-Fran-: This is a good analysis on the situation:

https://www.youtube.com/watch?v=g6R2_GEJVzw

Shenanigans from nVidia? Oh yes, it may be the case. As with everything, time will tell.

Regards.

Pirx73: Goes for 2xMSRP in my country - 500+ €. I hate to be right :(

Same with 6500XT - 400+ €

@MSRP 3050 would be a decent replacement for my aging GTX970 but 2xMSRP - no way.