Immersion Cooling for data centers: An exotic inevitability?

And the industry is not ready.

Modern data centers (DCs) use a variety of cooling system types. Most DCs today still use air cooling as the baseline, with chilled air circulated through racks and hot air exhausted out, but this method struggles with modern high-power CPUs and GPUs. Starting with Nvidia's Hopper and expanding with Blackwell, operators are moving toward liquid cooling, specifically cold plate and direct-to-chip solutions, which can be integrated with existing air-cooling infrastructure.

More advanced systems such as immersion cooling exist, but they see limited adoption despite claims of significant benefits in performance density, overall cost, and efficiency. As next generations of AI accelerators are set to increase power consumption, however, immersion cooling may become inevitable three or four years down the road. But is the industry ready?

Data centers are getting hotter

AI data centers dissipate heat using a combination of airflow, liquid circulation, and heat exchange systems that move the thermal load outside the facility. The basic principle is to move heat away from hot chips (CPUs, GPUs, switches) into a medium, which can be air, water (often a water-glycol mix), or a dielectric fluid, and then carry that heat to cooling towers, chillers, or evaporative units where it is released into the atmosphere.

In air-cooled DCs, servers push hot exhaust air into the return plenums of the HVAC system, where it is cooled by chillers or evaporative cooling towers before being recirculated. This approach is cheap and easy to implement, but it is insufficient for AI data centers that use power-hungry hardware such as Nvidia's Blackwell GPUs, which are among the most power-hungry processors in the industry.

In liquid-cooled systems, heat is absorbed by circulating coolant that flows to a heat exchanger. The heat is then either rejected into facility water loops and cooling towers or partially dissipated through evaporative cooling and vented outside the premises. This allows liquid cooling to remove the bulk of the heat load (roughly 80% to 85%), while residual heat is still handled by traditional air cooling.
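To make the split concrete, here is a minimal Python sketch of how a rack's heat load divides between the liquid loop and residual air cooling. The 80% to 85% range comes from the paragraph above; the 120 kW rack and the function name `split_heat_load` are illustrative assumptions, not figures for any specific deployment.

```python
def split_heat_load(rack_kw: float, liquid_fraction: float) -> tuple[float, float]:
    """Split a rack's heat load into (liquid_kw, air_kw)."""
    if rack_kw < 0 or not 0.0 <= liquid_fraction <= 1.0:
        raise ValueError("rack_kw must be >= 0 and liquid_fraction within [0, 1]")
    liquid_kw = rack_kw * liquid_fraction
    return liquid_kw, rack_kw - liquid_kw


# Hypothetical rack load used only for illustration.
ASSUMED_RACK_KW = 120.0

# Liquid-cooling share range quoted in the text (roughly 80% to 85%).
for fraction in (0.80, 0.85):
    liquid_kw, air_kw = split_heat_load(ASSUMED_RACK_KW, fraction)
    print(f"liquid share {fraction:.0%}: {liquid_kw:.1f} kW to liquid, "
          f"{air_kw:.1f} kW left for air")
```

Even at the upper end of that range, a rack of this size would still leave a double-digit kilowatt load for the air side, which is comparable to an entire conventional air-cooled rack.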

For now, Nvidia recommends direct-to-chip (D2C) cooling for Blackwell data center GPUs. In this approach, a cold plate with liquid running through it is mated with the processor, and the setup can typically be deployed in combination with existing air cooling and liquid cooling infrastructure. Nvidia and its partners believe that D2C cold plates deliver sufficient cooling capacity for Blackwell GPUs, whose thermal design power spans from 1.2 kW to 1.4 kW. Furthermore, cold plates can be engineered directly into SXM module reference designs and server chassis, which simplifies standardized deployment across OEM partners (Dell, HPE, Lenovo, Supermicro, etc.).

Nvidia's Blackwell GPUs consume up to 1.4 kW per unit, so a GB300 NVL72 rack consumes at least 120 kW, which is well beyond what traditional air-cooled and even liquid-cooled data centers were designed for. As a result, data center operators had to upgrade their power delivery infrastructure, which includes new busbars, power distribution units (PDUs), higher-capacity cabling, backup UPSes, and electrical rooms. In addition, they had to upgrade their cooling loops. Critically, however, they did not have to completely rebuild their data centers because of Blackwell's power consumption.
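The rack figure can be sanity-checked with simple arithmetic. The sketch below assumes all 72 GPUs of an NVL72 rack run at the 1.4 kW upper figure and treats the quoted 120 kW as the rack total; the breakdown of the remainder (CPUs, switches, and other components) is not given in the text, so it is reported only as a lump sum.

```python
GPUS_PER_RACK = 72   # the "72" in NVL72
GPU_KW = 1.4         # upper per-GPU figure quoted above
RACK_KW = 120.0      # lower bound for the rack quoted above


def gpu_load_kw(gpu_count: int, gpu_kw: float) -> float:
    """Total power drawn by the GPUs alone."""
    return gpu_count * gpu_kw


def non_gpu_load_kw(rack_kw: float, gpus_kw: float) -> float:
    """Power left for everything that is not a GPU."""
    return rack_kw - gpus_kw


gpus_kw = gpu_load_kw(GPUS_PER_RACK, GPU_KW)
other_kw = non_gpu_load_kw(RACK_KW, gpus_kw)
print(f"GPUs: {gpus_kw:.1f} kW, everything else: {other_kw:.1f} kW "
      f"({gpus_kw / RACK_KW:.0%} of the rack is GPU load)")
```

Under these assumptions, the GPUs alone account for the large majority of the rack's power budget.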

And will get even hotter

However, next-generation AI data centers, or AI factories as Nvidia calls them, will likely require more upgrades or will have to be built from scratch, as power consumption of future AI accelerators is expected to increase dramatically in the coming years.

Nvidia's next-generation Rubin and Rubin Ultra data center GPUs are expected (by KAIST and some industry sources) to increase power consumption to 1,800W and 3,600W, respectively, which will again increase the power draw of data center facilities. However, Nvidia and its partners are projected to continue using direct liquid cooling with these processors, even with NVL576 systems (with 144 GPU packages) based on the Kyber rack architecture. Meanwhile, hyperscalers planning to use Kyber racks will still have to upgrade their power delivery infrastructure and computer halls substantially to accommodate such systems.

Immersion cooling rises

However, starting with Feynman GPUs, which are due in 2028 and expected to consume 4,400W per package, KAIST and some sources familiar with Nvidia's plans believe that the company is indeed looking towards immersion cooling systems. Immersion cooling requires placing the server boards and equipment directly in a vat of cooling liquid that isn't electrically conductive.
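The per-package figures quoted in this article can be put side by side to show how steep the projected curve is. This sketch uses the upper Blackwell figure (1.4 kW) as the baseline; the Rubin, Rubin Ultra, and Feynman values are the projections cited above, not confirmed specifications.

```python
# Per-package power in watts, as quoted in the article (projections for
# everything after Blackwell).
GPU_POWER_W = {
    "Blackwell": 1400,
    "Rubin": 1800,
    "Rubin Ultra": 3600,
    "Feynman": 4400,
}


def relative_power(power_w: dict[str, int], baseline: str = "Blackwell") -> dict[str, float]:
    """Express each generation's power as a multiple of the baseline."""
    base = power_w[baseline]
    return {name: watts / base for name, watts in power_w.items()}


for name, ratio in relative_power(GPU_POWER_W).items():
    print(f"{name:12s} {GPU_POWER_W[name]:5d} W  ({ratio:.2f}x Blackwell)")
```

On these numbers, a Feynman package would dissipate more than three times the heat of a Blackwell GPU, which is the gap that pushes the discussion from cold plates toward immersion.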

Immersion cooling is nothing particularly new. Placing electrical equipment — such as transformers — into dielectric fluids for cooling purposes was already practiced before 1887, according to Wikipedia. Cray and IBM experimented with immersion cooling in the 1960s and the 1980s, but no large-scale supercomputer with immersion cooling was built back then.

However, immersion cooling returned to the spotlight in the mid-2010s, when its total cost of ownership (TCO) benefits made it attractive to cryptominers, which pushed the technology towards maturity. In 2017, numerous start-ups built immersion cooling systems for crypto and for growing data center cooling needs.

In 2018, the Open Compute Project added immersion under its Advanced Cooling Solutions track, followed in 2019 by the first industry standards unveiled at the OCP Summit in San Jose. Intel has worked with various companies on immersion cooling technologies and, in 2022 – 2023, even announced some practical results.

Immersion cooling offers superior efficiency and can manage extremely dense racks with heat dissipation of well over 100 kW, yet it requires specialized infrastructure and lacks vendor certification. As a result, Nvidia does not exactly endorse immersion cooling for Blackwell GPUs, for several reasons:

- Firstly, the long-term reliability of components in dielectric fluids is uncertain, and without established data on component lifespan, the company can hardly provide any warranties.

- Secondly, modern compute halls are not ready for immersion coolers. Immersion requires purpose-built tanks, pumps, and fluid management systems that are (at least for now) not compatible with existing data center plumbing.

- Thirdly, while OCP seems to have standards for immersion cooling setups, the technology is still not completely standardized, which makes its implementation expensive for partners.

Also, not all immersion cooling systems work the same way.

Different types of immersion cooling

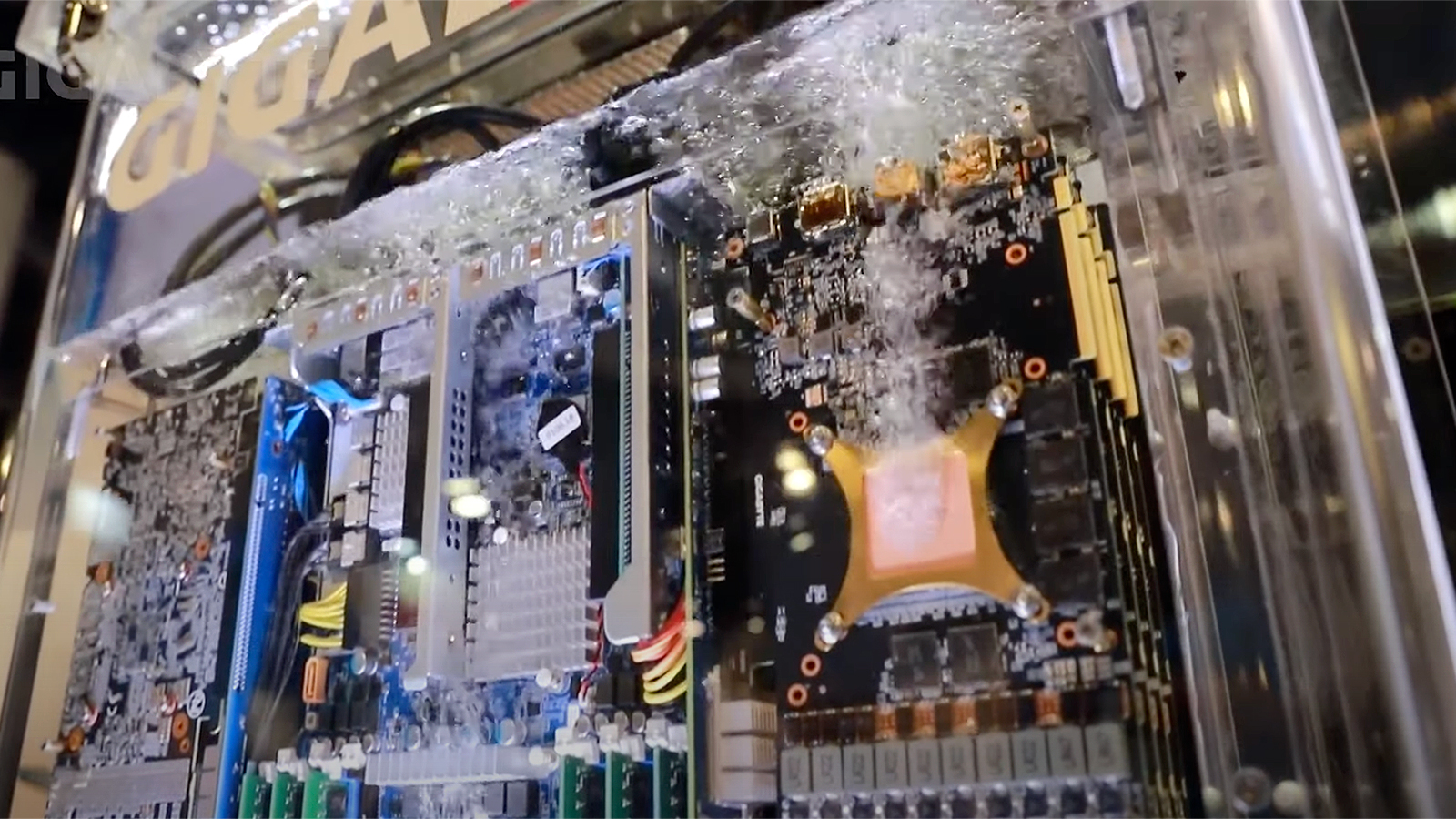

Immersion cooling systems typically used by cryptocurrency mining farms and demonstrated at tradeshows like Computex represent Single-Phase Immersion Cooling. Servers are submerged in a non-conductive dielectric oil (or a special engineered fluid) that absorbs heat directly from components. Pumps circulate the warmed liquid to a heat exchanger, where it releases the heat, gets cooler, and then gets recirculated. Such immersion cooling systems are relatively cheap (you can get a turn-key 12 kW-capable one with coolant for $2,108) and useful for cryptocurrency and small-scale HPC, but are hardly scalable to what Nvidia calls 'AI factories'.
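The pump-driven loop described above follows the standard sensible-heat relation Q = ṁ · c_p · ΔT, so the required coolant flow can be estimated for a given heat load. In this sketch, the 12 kW load matches the turn-key system mentioned above, while the specific heat, density, and temperature rise are illustrative assumptions for a generic dielectric oil, not data for any particular product.

```python
def coolant_mass_flow_kg_s(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Mass flow needed to carry heat_w with a coolant temperature rise of delta_t_k."""
    if cp_j_per_kg_k <= 0 or delta_t_k <= 0:
        raise ValueError("specific heat and temperature rise must be positive")
    return heat_w / (cp_j_per_kg_k * delta_t_k)


def volume_flow_l_min(mass_flow_kg_s: float, density_kg_m3: float) -> float:
    """Convert a mass flow in kg/s to liters per minute."""
    if density_kg_m3 <= 0:
        raise ValueError("density must be positive")
    return mass_flow_kg_s / density_kg_m3 * 1000.0 * 60.0


HEAT_W = 12_000.0            # 12 kW tank, as in the text
ASSUMED_CP = 2000.0          # J/(kg*K), assumed value for a generic dielectric oil
ASSUMED_DENSITY = 800.0      # kg/m^3, assumed
ASSUMED_DELTA_T = 10.0       # K between tank inlet and outlet, assumed

m_dot = coolant_mass_flow_kg_s(HEAT_W, ASSUMED_CP, ASSUMED_DELTA_T)
print(f"mass flow: {m_dot:.2f} kg/s, "
      f"volume flow: {volume_flow_l_min(m_dot, ASSUMED_DENSITY):.0f} L/min")
```

Because the required flow grows linearly with heat load, a tank ten times more powerful needs ten times the flow (or a larger temperature rise), which illustrates one reason single-phase systems become harder to scale.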

In Two-Phase Immersion Cooling systems, servers are immersed in a dielectric fluid with a low boiling point. Heat from chips causes the fluid to boil into vapor, which rises, condenses on a cooled coil or plate at the top of the tank, and drips back down, thus creating a self-contained cooling cycle. This cycle removes heat more efficiently and can support extremely high rack densities, well exceeding 100 kW.
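In the boiling cycle described above, heat is carried away as latent heat of vaporization, so the vapor generation rate is roughly Q / h_fg. The sketch below uses a 100 kW load, matching the rack density mentioned in the text, and an assumed latent heat of 100 kJ/kg, which is a round illustrative number in the range typical of engineered fluorinated fluids rather than a datasheet value.

```python
def vapor_mass_flow_kg_s(heat_w: float, latent_heat_j_per_kg: float) -> float:
    """Rate at which fluid must boil off (and re-condense) to carry heat_w."""
    if latent_heat_j_per_kg <= 0:
        raise ValueError("latent heat must be positive")
    return heat_w / latent_heat_j_per_kg


HEAT_W = 100_000.0               # 100 kW tank, per the density quoted in the text
ASSUMED_LATENT_HEAT = 100_000.0  # J/kg, assumed illustrative value

rate = vapor_mass_flow_kg_s(HEAT_W, ASSUMED_LATENT_HEAT)
print(f"about {rate:.1f} kg of fluid boils and condenses every second")
```

The condenser coil at the top of the tank has to recover all of that vapor continuously, which is why the tank must be well sealed.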

Furthermore, since two-phase immersion cooling systems do not have any pumps or other means of forced convection, they have one or two fewer potential points of failure. However, these systems rely on specialized and expensive fluids like 3M Novec (which is no longer produced, as it contains hazardous per- and polyfluoroalkyl substances, or PFAS, that 3M committed to stop manufacturing), they must be hermetic to prevent evaporation, and they require carefully engineered infrastructure that will replace that of traditional data centers.

For now, a 40U rack equivalent tank costs $20,000, according to DataCenterDynamics. Specialized dielectric fluid with a low boiling point can cost another $15,000 to $20,000 (3M Novec replacements can cost $1,876 per pail). Also, 3M's decision to cease production of low-boiling-point dielectric fluids in 2024 greatly complicates the development and standardization of two-phase immersion cooling.
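A rough cost comparison can be assembled from the prices quoted in this article. The sketch below adds up tank and fluid costs for a two-phase setup and compares the cost per kilowatt with the $2,108, 12 kW single-phase system mentioned earlier. The 100 kW capacity assigned to the two-phase tank is an assumption for illustration only, since the article does not state a capacity for the $20,000 tank.

```python
def cost_per_kw(total_cost_usd: float, capacity_kw: float) -> float:
    """Up-front cost per kilowatt of cooling capacity."""
    if capacity_kw <= 0:
        raise ValueError("capacity must be positive")
    return total_cost_usd / capacity_kw


# Single-phase turn-key system, prices as quoted in the article.
SINGLE_PHASE_COST = 2_108.0
SINGLE_PHASE_KW = 12.0

# Two-phase tank and fluid, prices as quoted in the article.
TWO_PHASE_TANK = 20_000.0
TWO_PHASE_FLUID_RANGE = (15_000.0, 20_000.0)
ASSUMED_TWO_PHASE_KW = 100.0  # assumption: capacity is not given in the article

print(f"single-phase: ${cost_per_kw(SINGLE_PHASE_COST, SINGLE_PHASE_KW):.2f} per kW")
for fluid in TWO_PHASE_FLUID_RANGE:
    total = TWO_PHASE_TANK + fluid
    print(f"two-phase: ${total:,.0f} total, "
          f"${cost_per_kw(total, ASSUMED_TWO_PHASE_KW):.2f} per kW (assumed 100 kW)")
```

The comparison covers only tanks and fluid; pumps, heat exchangers, facility plumbing, and fluid top-ups would shift the totals for both approaches.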

In fact, the fluids are among the biggest concerns: they are not standardized, and nobody knows how they affect hardware over the long term, which may lead to abnormal failure rates. In addition, vapor loss necessitates frequent fluid top-ups, which increases servicing costs. Meanwhile, servicing submerged hardware requires throttling or shutting down tanks to limit boiling, which potentially increases downtime.

Without set standards or even a clear path to the evolution of liquid immersion cooling, different companies try different approaches. For example, Vertiv proposes building hybrid systems that combine two-phase immersion cooling with other cooling strategies, including the use of water loops or rear-door heat exchangers to remove residual heat.

Few deployments so far

Big companies have largely stayed away from immersion cooling so far. Neither AWS, Google, Meta, nor Oracle has confirmed deployments of immersion cooling at any scale.

Microsoft was the first major cloud provider to place two-phase immersion cooling into real production, using tanks filled with a low-boiling dielectric liquid to cool CPUs and GPUs at its data center in Quincy, Washington, in 2021. The setup, co-designed by Microsoft and Wiwynn, submerges 300W CPUs and 700W GPUs in 3M's engineered fluid, which boils at about 50°C.

Microsoft's tests showed that this method can cut energy use per server by 5% to 15% compared with conventional cooling, while also enabling overclocking to absorb workload spikes such as those seen during peak Teams meeting times. Beyond efficiency, immersion also allows denser server layouts, which push computing capacity higher without the limits of airflow.

Microsoft also mentions that immersion cooling may lower hardware failure rates by reducing exposure to oxygen and humidity, similar to findings from Project Natick's underwater data center trial. If proven viable, immersion cooling could be used in environments where components are not immediately replaced when they fail. However, the company has not announced any updates to its immersion cooling project, so we do not know whether the findings of the experiment can be used to cool next-generation AI data centers several years from now.

Light at the end of the tunnel?

There might be light at the end of the tunnel. Intel and Shell introduced the first fully certified single-phase immersion cooling solution for data centers earlier this year. The collaboration also involved Supermicro and Submer, which gives it some weight in the industry.

The solution relies on Shell's single-phase dielectric fluids developed from the company's gas-to-liquids (GTL) chemistry and ester formulations for maximum thermal performance. These fluids are electrically non-conductive, PFAS-free, and biodegradable to varying degrees, which makes them safer and more sustainable than 3M's discontinued fluorocarbon-based options.

Shell claims that, compared with air cooling, its fluids can reduce power consumption by as much as 48% and cut capital and operating expenses by up to 33%, while also shrinking computer hall floorspace. Intel hasn't disclosed the exact benefits for its CPUs, though it is clear that single-phase immersion cooling can bring server temperatures significantly lower.

The solution is validated to operate in ambient conditions at up to 45°C and is certified for usage with platforms running the 4th and 5th Gen Intel Xeon processors, with Intel providing an Immersion Warranty Rider to cover their use. On the one hand, this reaffirms that Intel can guarantee that immersion cooling provides durability equal to air-cooled systems. But on the other hand, the warranty is only valid for previous-generation Intel Xeon CPUs, which are not exactly used for the most powerful machines around.

But the key thing about the announcement is that three major high-tech companies (Intel, Shell, and Supermicro), joined by Submer, are actively working on solutions for single-phase immersion cooling systems. We do not know whether this collaboration can be expanded to two-phase immersion cooling solutions, though.

Summary: Industry should act now

Traditional data centers rely mainly on air cooling, but this approach is increasingly insufficient for AI data centers that use high-power CPUs and GPUs such as Nvidia's Blackwell series. To cope with rising power densities, DC operators are adopting liquid cooling, which is expected to work for today's hardware as well as for next-generation accelerators like Rubin and Rubin Ultra, which are expected to reach 1.8 kW and 3.6 kW, respectively. However, industry sources and institutes like KAIST expect Nvidia and its partners to require immersion liquid cooling for GPUs codenamed Feynman, which are projected to consume around 4.4 kW.

But while immersion cooling is a likely necessity within the next few years, it is hardly ready for prime time. Single-phase immersion is relatively cheap but hard to scale for AI data centers that house thousands of GPUs, whereas two-phase systems, which use boiling dielectric fluids, are far more efficient but expensive and complex to build.

So far, none of the big cloud service providers (except Microsoft, but we do not know the scale of its experiment) have attempted to deploy immersion liquid cooling at any significant scale, despite the fact that OCP outlined specifications for immersion liquid cooling in 2019. For now, not all large CSPs appear to be interested in immersion cooling.

Momentum may build again as Intel, Shell, Supermicro, and Submer recently introduced the first fully certified single-phase immersion solution for Intel's Xeon processors that does not void warranty. The collaboration signals growing interest in standardized, certified immersion solutions; however, we are talking about only four companies, which is not enough to prep the immersion cooling ecosystem for launch in 2028 – 2029.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.