AMD's customers begin receiving the first Instinct MI300X AI GPUs — the company's toughest competitor to Nvidia's AI dominance is now shipping

An important milestone for AMD.

According to Sharon Zhou, chief executive of LaminiAI, AMD has begun to ship its Instinct MI300X GPUs for artificial intelligence (AI) and high-performance computing (HPC) applications. As the 'LaminiAI' name implies, the company is set to use AMD's Instinct MI300X accelerators to run large language models (LLMs) for enterprises.

While AMD has been shipping its Instinct MI300-series products to its supercomputer customers for a while now and expects the series to become the fastest product in its history to reach $1 billion in sales, it looks like AMD has also initiated shipments of its Instinct MI300X GPUs. LaminiAI has partnered with AMD for a while, so it certainly has priority access to the company's hardware. Nonetheless, this is an important milestone for AMD, as this is the first time we have learned about volume shipments of MI300X. Indeed, the post indicates that LaminiAI has received multiple Instinct MI300X-based machines with eight accelerators apiece (8-way).

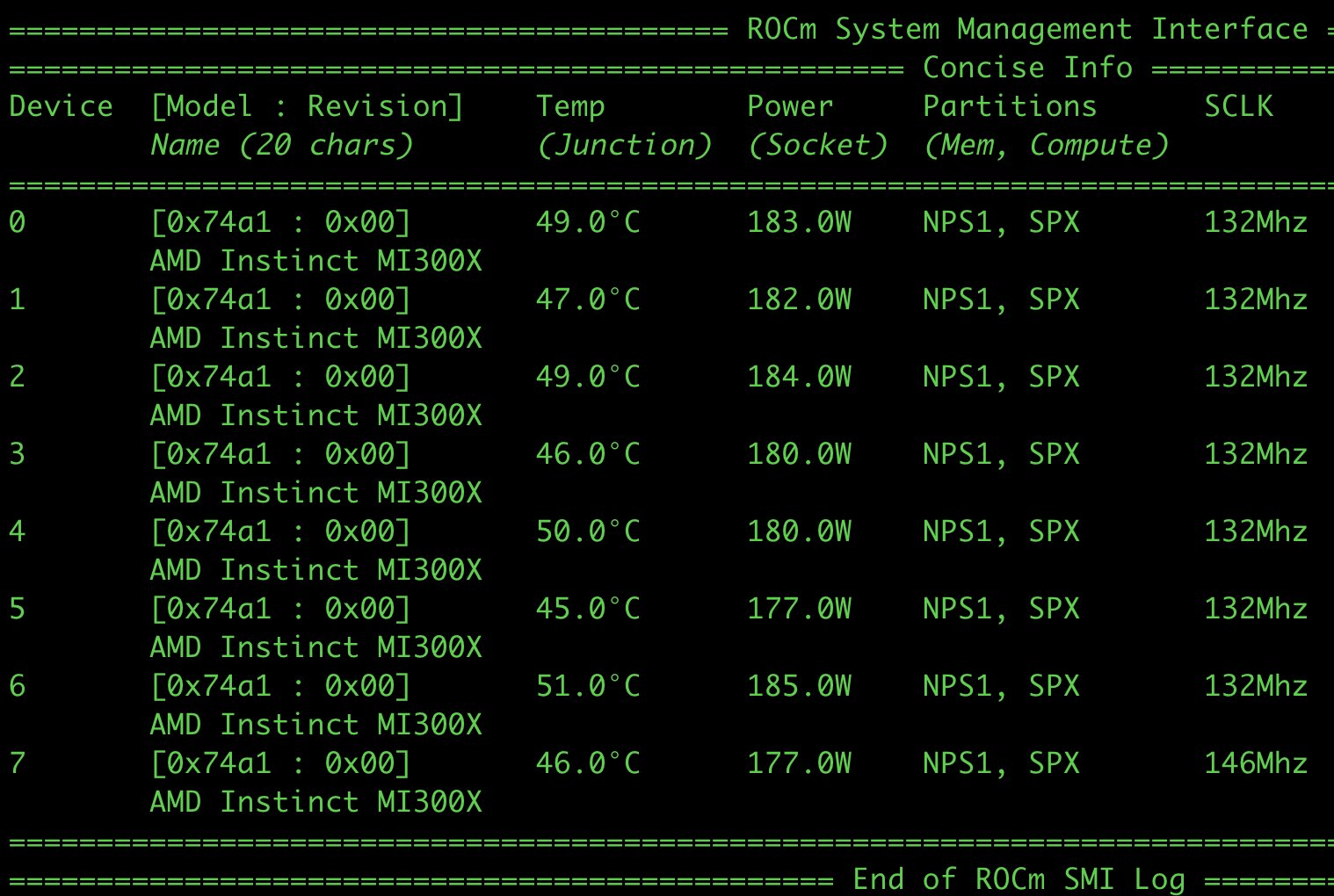

"The first AMD MI300X live in production," Zhou wrote. "Like freshly baked bread, 8x MI300X is online. If you are building on open LLMs and you are blocked on compute, let me know. Everyone should have access to this wizard technology called LLMs. That is to say, the next batch of LaminiAI LLM pods are here."

A screenshot published by Zhou shows that an 8-way AMD Instinct MI300X is in operation. Meanwhile, the power consumption listed in the system screenshot indicates the GPUs are probably idling for the pic — they surely aren't running demanding compute workloads.

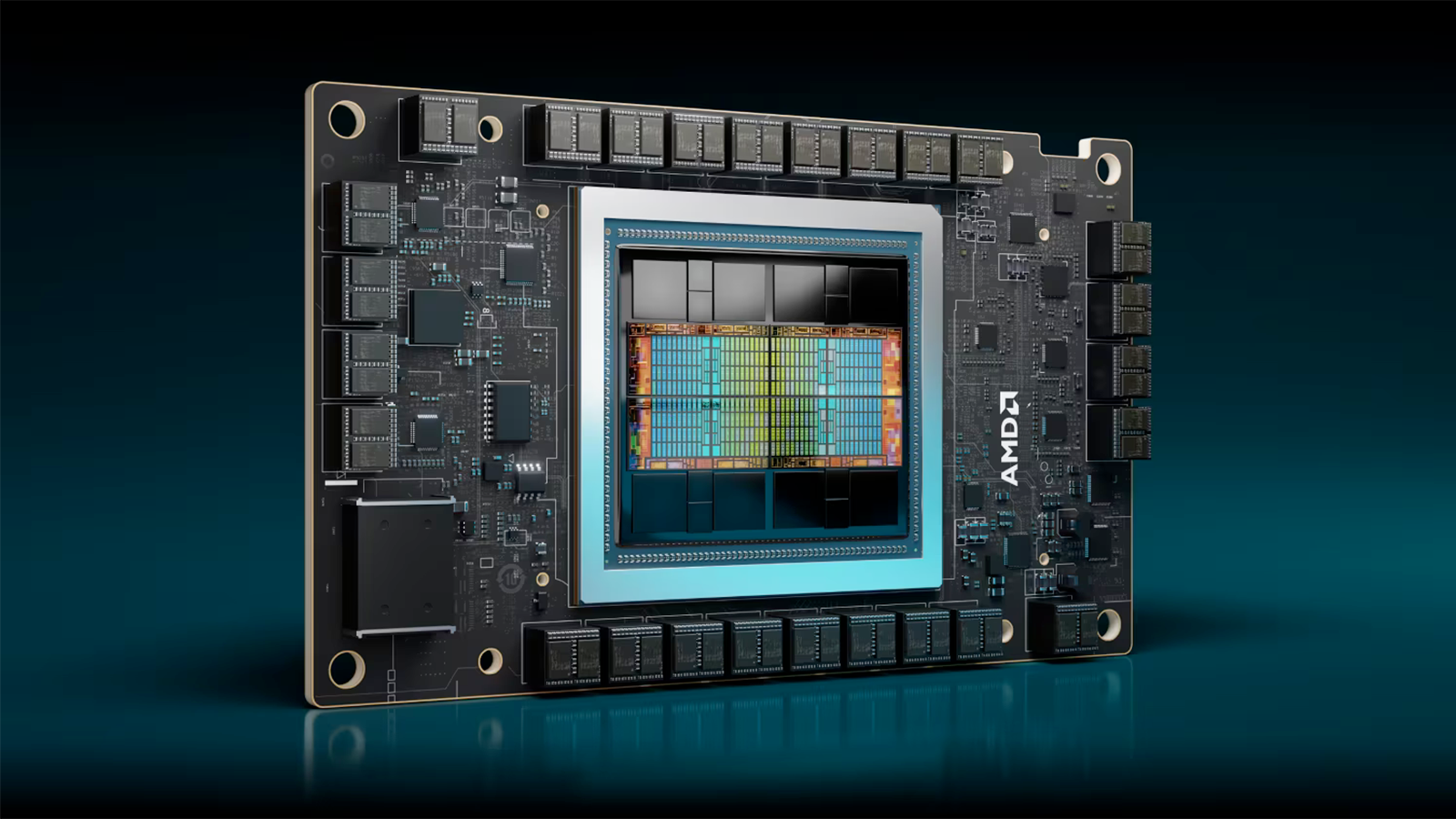

AMD's Instinct MI300X is a sibling of the company's Instinct MI300A, the industry's first data center-grade accelerated processing unit featuring both general-purpose x86 CPU cores and CDNA 3-based highly parallel compute processors for AI and HPC workloads.

Unlike the Instinct MI300A, the Instinct MI300X lacks x86 CPU cores but has more CDNA 3 chiplets (for 304 compute units in total, which is significantly higher than 228 CUs in the MI300A) and therefore offers higher compute performance. Meanwhile, an Instinct MI300X carries 192 GB of HBM3 memory (at a peak bandwidth of 5.3 TB/s).
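To put those figures in context, here is a rough back-of-the-envelope sketch in Python. It uses only the numbers quoted above (304 vs. 228 CUs, 192 GB of HBM3, 5.3 TB/s peak bandwidth, eight GPUs per machine). The function names are made up for illustration, the node totals are simple sums of per-GPU peaks rather than measured values, and the parameter estimate assumes decimal gigabytes and 2 bytes per weight (FP16/BF16) with no room reserved for activations or KV cache.

```python
# Figures quoted in the article.
MI300X_CUS = 304            # compute units in the MI300X
MI300A_CUS = 228            # compute units in the MI300A
MI300X_HBM3_GB = 192        # HBM3 capacity per MI300X
MI300X_PEAK_BW_TBS = 5.3    # peak memory bandwidth per MI300X
GPUS_PER_NODE = 8           # the "8-way" machine described above


def cu_ratio() -> float:
    """How many times more CUs the MI300X has than the MI300A."""
    return MI300X_CUS / MI300A_CUS


def node_memory_gb(gpus: int = GPUS_PER_NODE) -> int:
    """Total HBM3 across all GPUs in one machine (simple sum)."""
    return gpus * MI300X_HBM3_GB


def node_bandwidth_tbs(gpus: int = GPUS_PER_NODE) -> float:
    """Sum of per-GPU peak bandwidths; not a measured system figure."""
    return gpus * MI300X_PEAK_BW_TBS


def max_params_billions(memory_gb: float, bytes_per_param: int = 2) -> float:
    """Upper bound on model size (billions of parameters), weights only.

    Assumption: 1 GB = 10**9 bytes, so GB / bytes-per-param gives
    billions of parameters directly.
    """
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    print(f"MI300X vs. MI300A compute units: {cu_ratio():.2f}x")
    print(f"HBM3 per 8-way machine: {node_memory_gb()} GB")
    print(f"Summed peak bandwidth per machine: {node_bandwidth_tbs():.1f} TB/s")
    print(f"FP16 weights that fit on one GPU: ~{max_params_billions(MI300X_HBM3_GB):.0f}B parameters")
```

These are ceilings, not benchmarks: real deployments keep memory free for activations and caches, and the software stack determines how much of the peak bandwidth is actually reached.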

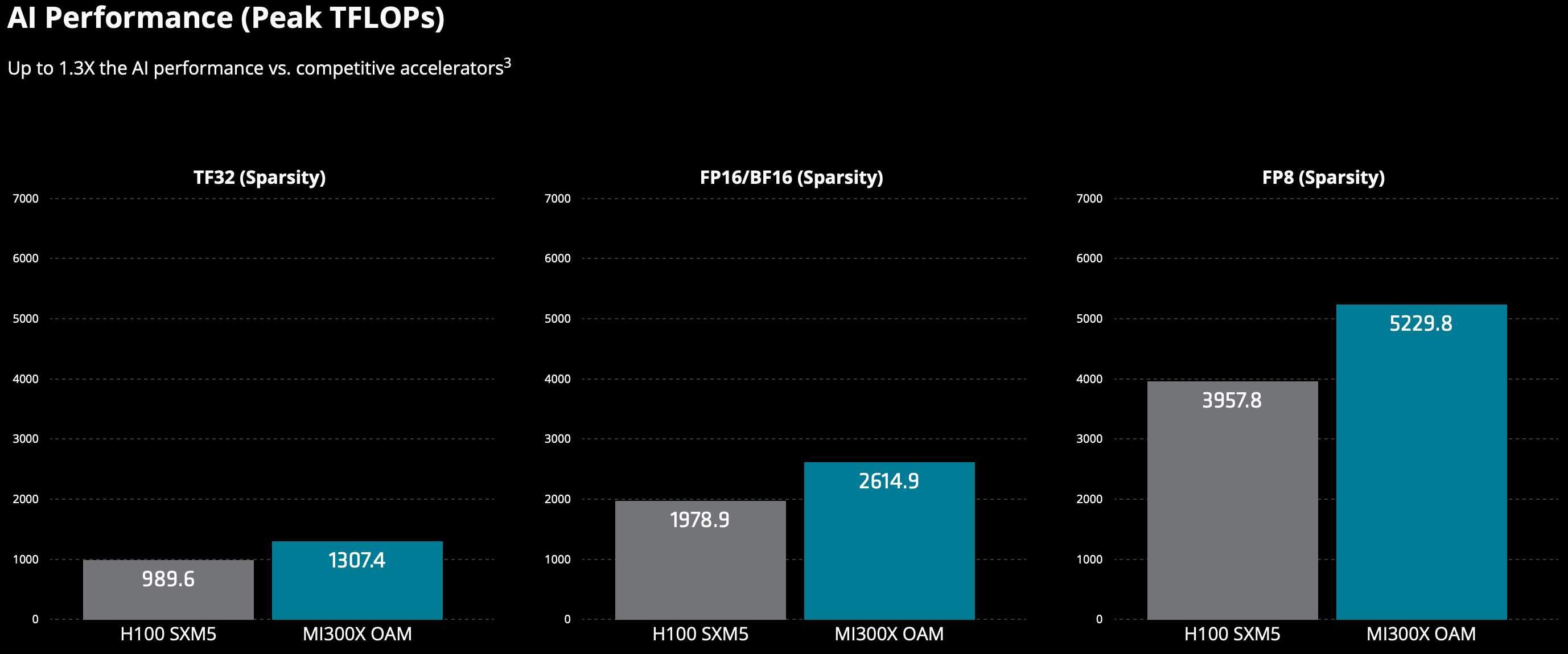

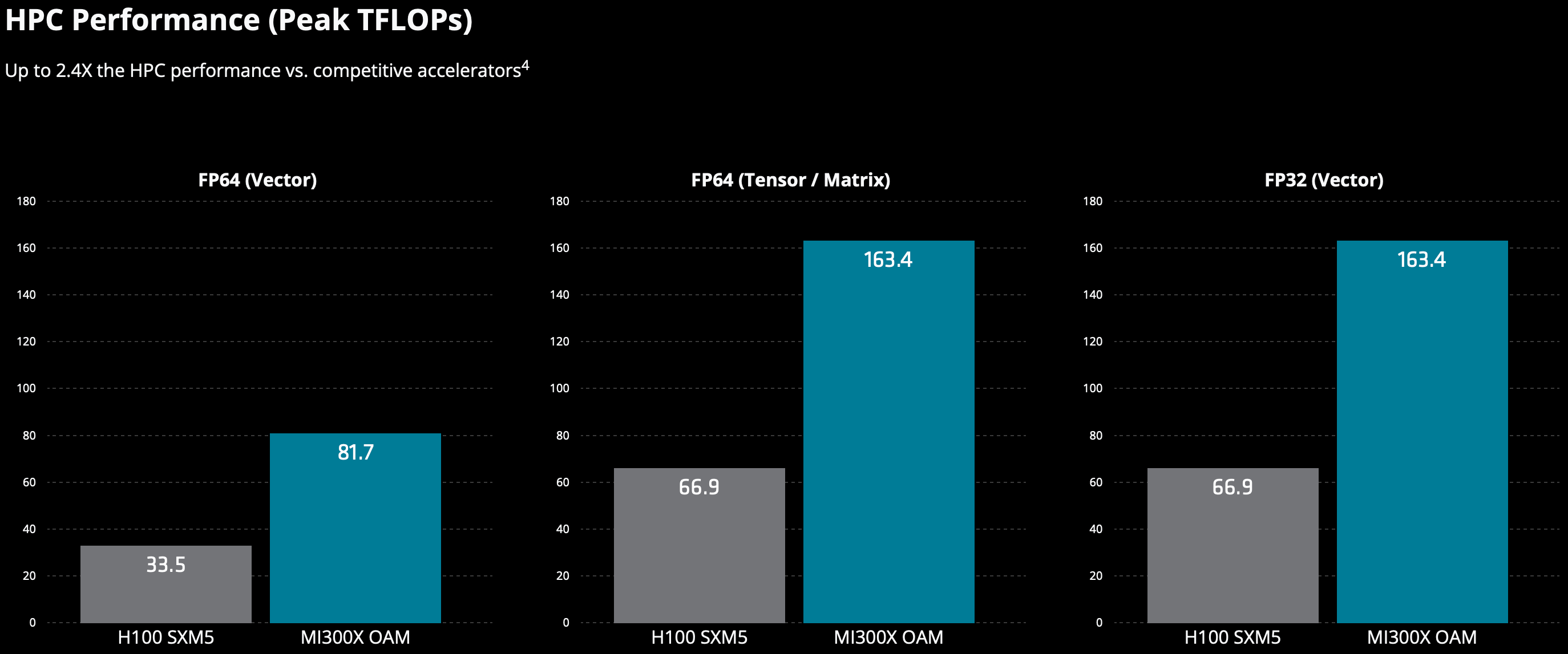

Based on performance numbers demonstrated by AMD, the Instinct MI300X outperforms Nvidia's H100 80GB, which is available already and is massively deployed by hyperscalers like Google, Meta (Facebook), and Microsoft. The Instinct MI300X is probably also a formidable competitor to Nvidia's H200 141GB GPU, which is yet to hit the market.

According to previous reports, Meta and Microsoft are procuring AMD's Instinct MI300-series products in large volumes. Even so, LaminiAI is the first company to confirm using Instinct MI300X accelerators in production.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


bit_user Congrats to AMD. I think this is the first time they've delivered something with better on-paper AI performance than Nvidia's current gen.

Theoretical specs are great, but Nvidia has spent years optimizing the snot out of its libraries.

To use the analogy of gaming GPUs, there was a time when AMD GPUs typically had better theoretical performance than Nvidia, but achieved lower FPS in games. There can be a variety of reasons for that, but my point is that merely delivering hardware with the right specs doesn't equate to winning.

Just being realistic about the challenges that still lie ahead, for AMD.

The Hardcard bit_user said: Congrats to AMD. I think this is the first time they've delivered something with better on-paper AI performance than Nvidia's current gen.

Theoretical specs are great, but Nvidia has spent years optimizing the snot out of its libraries.

To use the analogy of gaming GPUs, there was a time when AMD GPUs typically had better theoretical performance than Nvidia, but achieved lower FPS in games. There can be a variety of reasons for that, but my point is that merely delivering hardware with the right specs doesn't equate to winning.

Just being realistic about the challenges that still lie ahead, for AMD.

AMD does still have a lot of work to do, but it will get done faster than ever, because there is a large demand for AI compute that was needed yesterday and wait times for Nvidia hardware run into 2025.

Way too late for many, so they will spend the extra money and take the time to work with AMD on optimizations and stability. There will be effective code and documentation for AMD hardware before Nvidia can satisfy demand.