Intel's Ponte Vecchio, Tiger Lake, Shortages and Data Streaming Accelerator

Intel is moving on multiple fronts as it plows forward to 7nm.

It has been a busy period for Intel-related news: The company recently unveiled its 2021 flagship 7nm product, the Ponte Vecchio general-purpose GPU for the Aurora exaflop supercomputer, and launched its flagship oneAPI software project. Tiger Lake also appeared on Geekbench, and Intel gave a sneak peek of Golden Cove and provided a supply update. Intel also announced a ‘data streaming accelerator’ coming in future CPUs, and more.

Aurora, Ponte Vecchio, Xe HPC, oneAPI: Putting it all Together

At its annual HPC Developer Conference tied to Supercomputing 2019 – which saw no changes in the top 10 supercomputers compared to June – Intel made a trifecta of announcements: Aurora, Ponte Vecchio and oneAPI.

Starting from a high level, Intel disclosed the architecture of the late 2021 Aurora supercomputer, the first exascale supercomputer in the United States. A (compute) node will consist of two Sapphire Rapids CPUs and six Ponte Vecchio GPUs with HBM. They are connected via a new all-to-all Xe Link that is based on CXL. It will be programmed with oneAPI and also feature a new file system called DAOS.

On the CPU side, Sapphire Rapids is likely to feature the 10nm++ Golden Cove architecture and is part of the Eagle Stream platform. On the memory and storage side, it will feature third generation Optane Persistent Memory and Optane SSDs and the successor of next year’s 144-layer 3D NAND. (The upcoming second generation Optane PM will double in density by having four layers of 3D XPoint.)

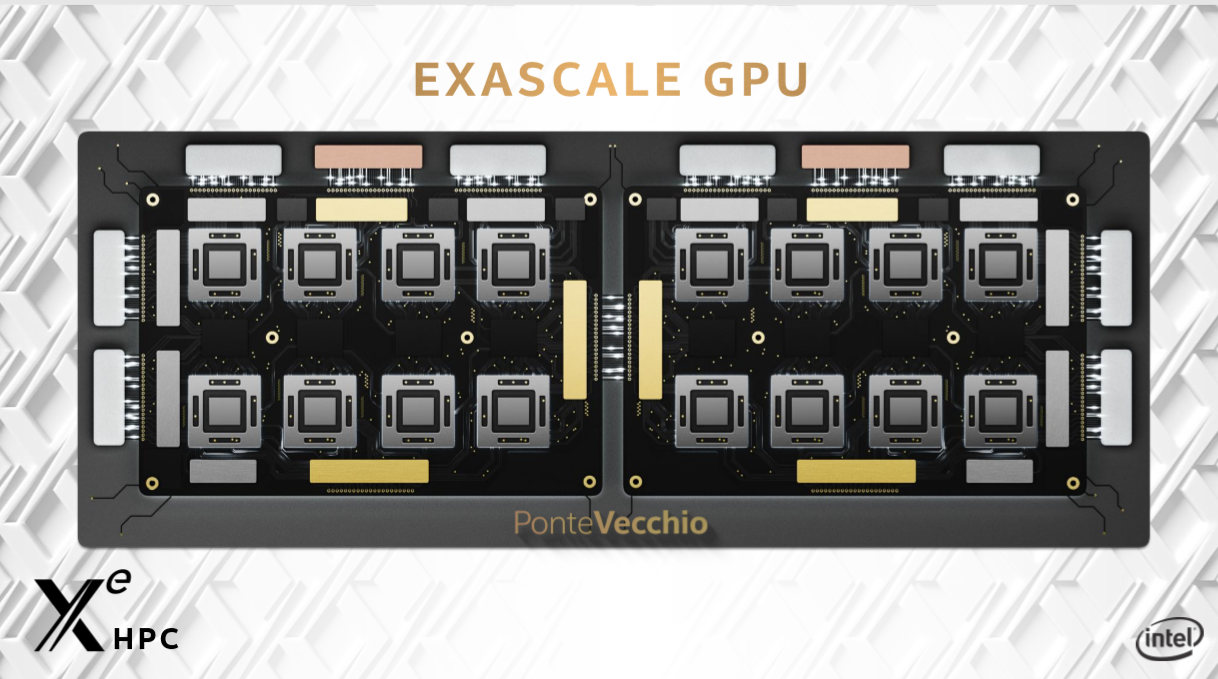

Intel mainly focused on the 7nm Ponte Vecchio GPU. For its most recent process nodes, Intel started with low-power lead products such as Ice Lake-U and Broadwell-Y. Compared to that, the Ponte Vecchio 7nm lead product is set to be truly on another level.

One Ponte Vecchio consists of two distinct cards that seem to be connected via EMIB. (EMIB is also used for connecting multiple stacks of HBM to the package.) Each card has eight 7nm GPU chiplets that are packaged with Intel’s 3D Foveros technology. Each card also features an Xe Memory Fabric (XEMF) interconnect for the chiplets (scalable to thousands of EUs). The XEMF also has a Rambo cache that serves as an ultra-high-bandwidth unified memory for the GPUs, CPUs and HBM. There seems to be one XEMF die per two Xe compute chiplets.

Not all details are clear yet, though. Are all chiplets part of one Foveros active interposer, or is there one Foveros for each individual GPU ‘island’? Does Intel use EMIB and Foveros separately, or are they ‘combined’ in the more elaborate Co-EMIB technology? Specifications such as the die size, amount of EUs or FLOPS per chiplet, transistor count and TDP were also not divulged.

Diving deeper into the compute chiplets, Intel disclosed that they are built on an Xe HPC flavor of the Xe architecture, with Xe LP and Xe HP being the other two. Raja Koduri clarified in an interview that Xe is process independent and that it incorporates many features from Gen, the existing graphics architecture that will be abandoned going forward.

Xe HPC’s headline feature is a new ‘data parallel matrix vector engine’ for AI that seems to be Intel’s answer to Nvidia’s Tensor cores. It supports BF16, FP16 and INT8 and delivers up to a 32x higher vector rate. Intel also isn’t neglecting traditional HPC, as Intel claims a 40x improvement in double precision (64-bit) floating-point performance per EU. For comparison, a Gen11 EU has a 4x lower FP64 throughput than FP32.

Xe HPC will also feature both SIMT (from GPUs) and SIMD (from CPUs) units. The latter come in both medium and large sizes. The goal is to cover a wide range of vector sizes, which can provide a nice performance bump for some applications.

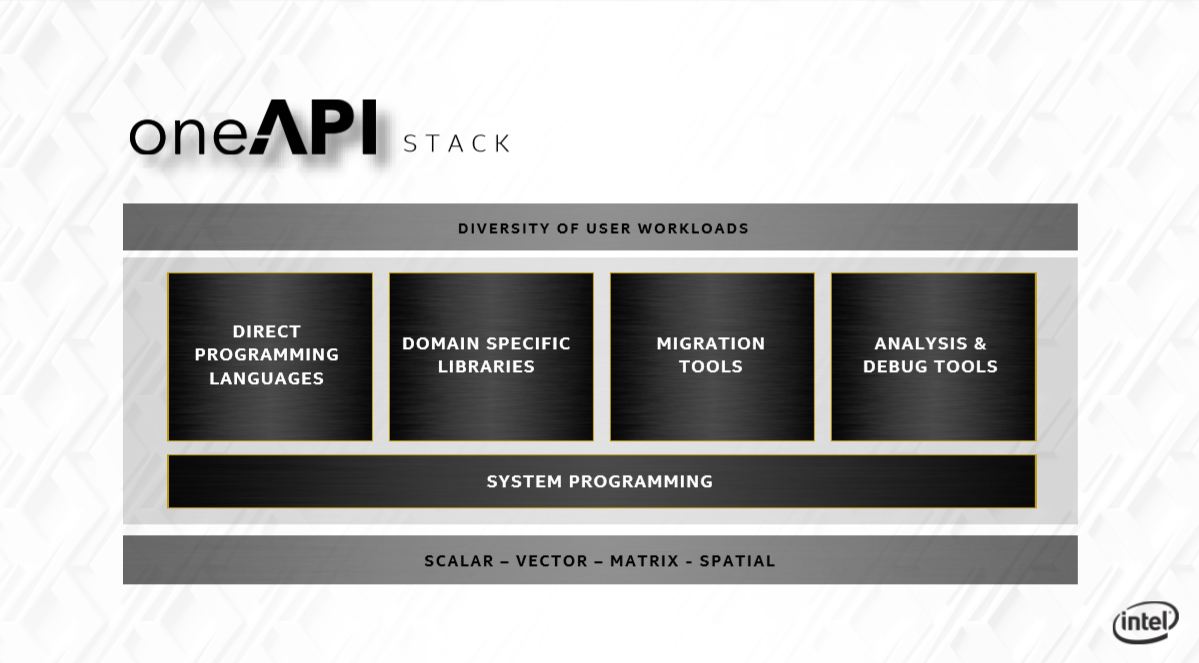

Finally, Intel officially launched its oneAPI initiative with a public beta on its DevCloud. oneAPI aims to remove the need for each kind of compute architecture to have its own compilers, libraries and tools. In other words, it is a programming model for heterogeneous compute, for all developers and workloads. It consists of a full software stack for system programming or direct programming (DPC++, Fortran, C++ and Python are supported). It also contains domain-specific libraries, migration tools (from CUDA), and analysis and debug tools.

Thoughts

Taking a step back, while the company stopped its Omni-Path HPC fabric development (perhaps to be replaced by its Barefoot Networks switches and silicon photonics eventually), it will be delivering CPUs, GPUs, memory, software and perhaps 3D NAND storage to Aurora, touching virtually all of its six pillars. Moreover, when Ponte Vecchio launches, Intel will have completed its AI portfolio from IoT and edge to client to data center to HPC.

With 16 Xe chiplets per Ponte Vecchio, we could be looking at several thousand square millimeters of logic silicon per GPU, depending on one chiplet’s die size. (While process lead products generally tend to be small, its target market is the less price sensitive data center and HPC, and a GPU has inherent redundancy which helps yield.) All in all, Ponte Vecchio’s performance scope and combination of technologies with cutting edge process and packaging make it a highly anticipated product.
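To put “several thousand square millimeters” into numbers, the sketch below multiplies the 16 chiplets by a few chiplet die sizes. The die sizes are purely hypothetical placeholders, since Intel has not disclosed them, and the function name is illustrative. The roughly 858 mm² single-exposure reticle limit (26 mm by 33 mm) is included only for scale.

```python
CHIPLETS_PER_GPU = 16          # from the article: two cards of eight chiplets
RETICLE_LIMIT_MM2 = 26 * 33    # ~858 mm^2, the usual single-exposure field


def total_logic_area(chiplet_mm2, chiplets=CHIPLETS_PER_GPU):
    """Total compute-chiplet area in mm^2 for one Ponte Vecchio."""
    return chiplet_mm2 * chiplets


# Hypothetical chiplet sizes; Intel has not disclosed the real figure.
for size in (100, 150, 200):
    area = total_logic_area(size)
    print(f"{size} mm^2 per chiplet -> {area} mm^2 total, "
          f"{area / RETICLE_LIMIT_MM2:.1f}x the reticle limit")
```

Under these assumptions, any chiplet larger than about 125 mm² already pushes the total past 2,000 mm².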

Foveros and EMIB help to reduce the time to market of such a breakthrough product by several years. It is as if Nvidia had released a reticle-sized (or larger) 7nm GPU in 2018 as its 7nm lead product. Intel is making a bold statement by launching its 7nm process (somewhere between TSMC's 5nm and 3nm) with Ponte Vecchio.

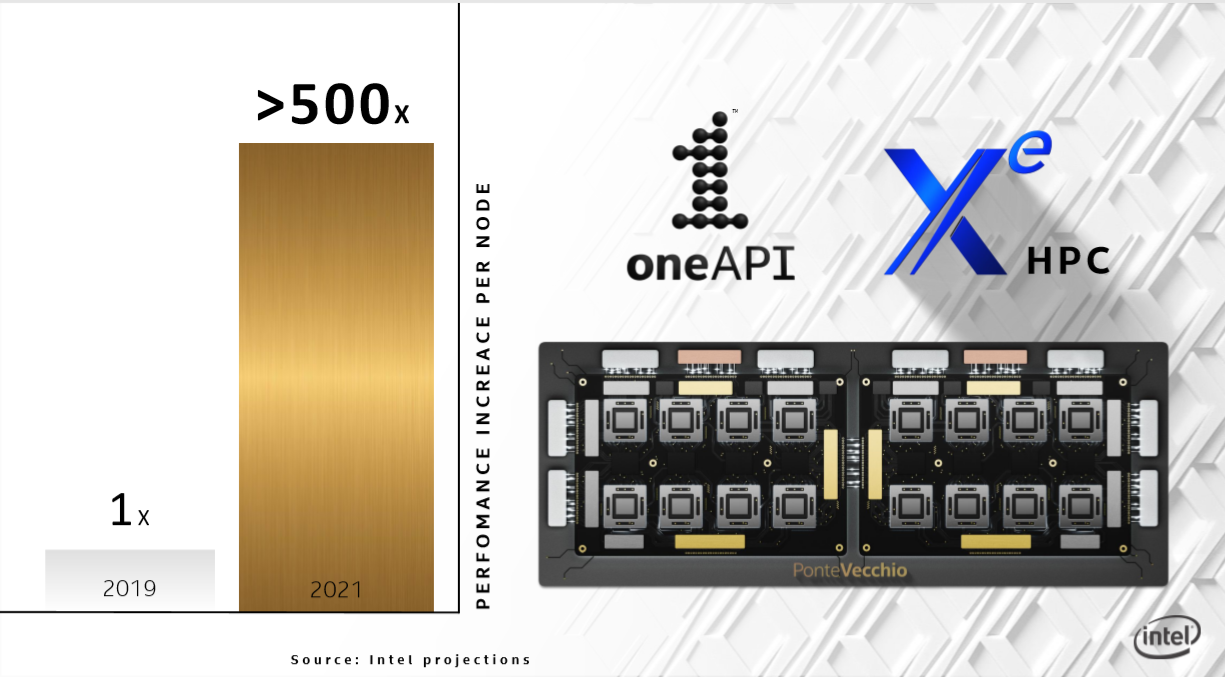

Intel claims it will deliver a greater than 500x increase in performance per node compared to 2019, although it didn’t clarify if the 2019 node includes Nvidia GPUs. Ponte Vecchio will launch in the fourth quarter of 2021.

Data Center VP Leaves

Coincident with Supercomputing 2019, news came out that Rajeeb Hazra is retiring from Intel after being with the company for more than 24 years. In his last position, he was a corporate vice president of the Data Center Group serving as the general manager of the Enterprise and Government Group, which is one of the three big data center segments alongside the cloud and networking infrastructure groups.

He told HPC Wire that he left of his own accord and will remain active in the industry. Intel has not yet found a successor.

Tiger Lake-Y: Willow Cove IPC and Tech One CEO Comment

After being stuck for several years on Skylake, Intel’s Haifa architecture team is catching up with a yearly cadence for the Core architecture, now named after coves. Ice Lake featured the Sunny Cove architecture, with Willow Cove following next year with Tiger Lake.

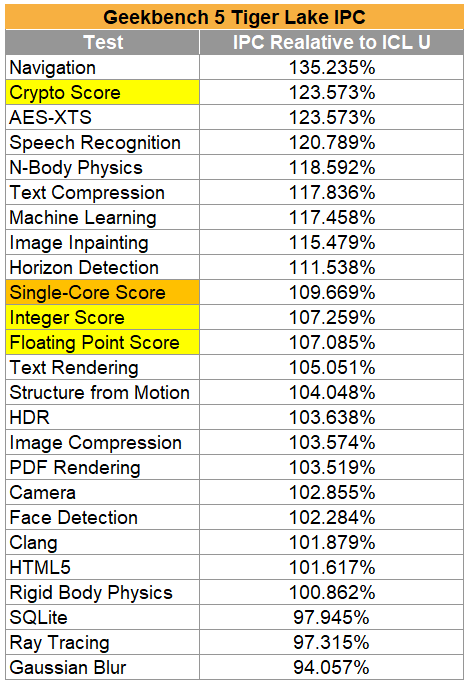

On Wednesday, Tiger Lake-Y showed up in Geekbench 5. Redditor dylan522p compiled a table of the scores, showing that Willow Cove will deliver a high single-digit (9%) IPC (instructions per clock) gain on average. Several tests, such as crypto, had even larger gains. It also showed a change in cache structure with a bigger L2 cache.

While Geekbench is not an ideal benchmark, it would put next year’s Willow Cove at almost 30% higher performance than Skylake, clock for clock. There are no confirmations that Intel has a Tiger Lake-S planned for the desktop, making it seem like Intel is unconcerned about Ryzen’s success there.
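The “almost 30%” figure follows from compounding the generation-over-generation gains. A minimal sketch, assuming Intel’s stated average IPC uplift of roughly 18% for Sunny Cove over Skylake (a figure not given in this article) together with the 9% Geekbench-derived estimate; the function name is illustrative:

```python
def compound_gain(*gains):
    """Compound successive fractional gains, e.g. 0.18 followed by 0.09."""
    total = 1.0
    for gain in gains:
        total *= 1.0 + gain
    return total - 1.0


# Assumption: Sunny Cove ~18% over Skylake (Intel's claim, not from this article).
sunny_cove_over_skylake = 0.18
# From the Geekbench 5 comparison: Willow Cove ~9% over Sunny Cove.
willow_cove_over_sunny_cove = 0.09

total = compound_gain(sunny_cove_over_skylake, willow_cove_over_sunny_cove)
print(f"Willow Cove vs. Skylake, clock for clock: +{total:.1%}")
```

With those two inputs the combined gain comes out at about 28.6%, since the gains multiply rather than add.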

Further, the Japanese Tech One’s CEO commented on Tiger Lake-Y. He said it featured the ‘second-generation 10nm process’ (likely 10nm++). He also claimed that Ice Lake-Y is only available to Apple and Microsoft, which is why the company chose Tiger Lake-Y for an upcoming product.

Golden Cove Architecture Detail

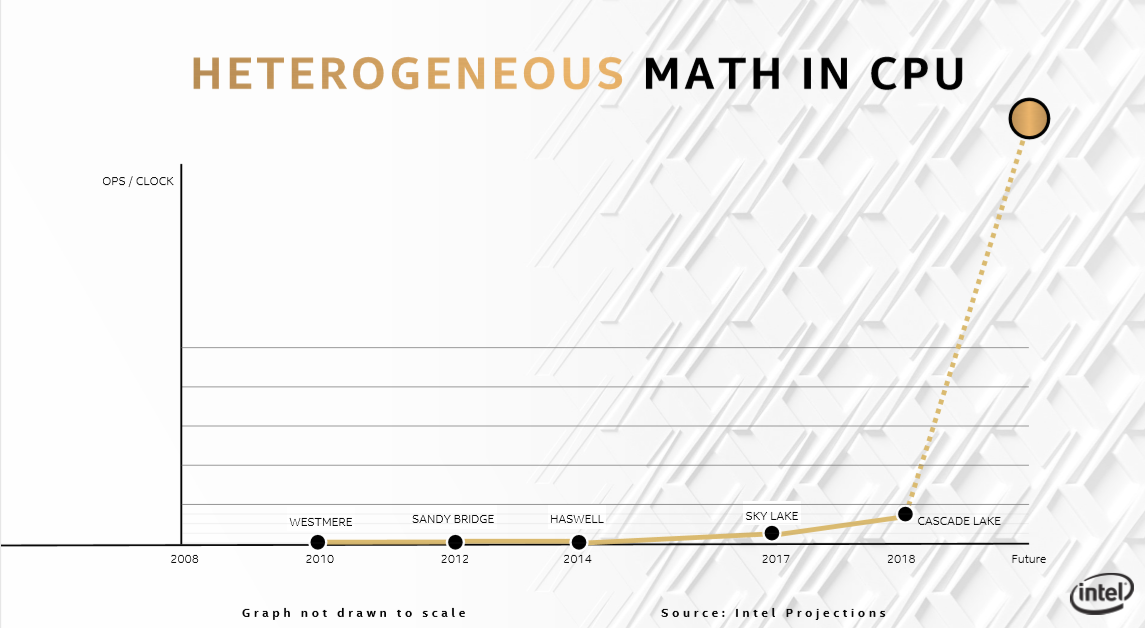

Golden Cove follows in 2021, presumably in Alder Lake and Sapphire Rapids. Intel has previously said it focused on security and single-threaded, AI and 5G performance. One slide from Raja Koduri’s presentation at Supercomputing 2019 caught our attention, related to the AI aspect of the architecture.

The heterogeneous (i.e. SIMD vector) performance per clock of Cascade Lake is 150x higher than Westmere in 2010. It is also 3x higher than Skylake due to the DL Boost VNNI instructions for INT8 support that Intel added. Intel is not done and wants to deliver another order of magnitude in performance in 2021, according to AnandTech.

There are several ways Intel could achieve this. Assuming that the graph also includes performance gains from higher core count, naturally a factor of two or more will be gained from Sapphire Rapids’ increase in cores compared to Cascade Lake’s 28. Besides that, there are a few other scaling vectors. As Intel has done with Agilex and Nvidia with Turing, it could include support for INT4 or even INT2 operations.

A more involved possibility would be to increase the vector width, as it did with the transition from AVX2 to AVX-512. Alternatively, Intel could beef up its architecture by including more AVX-512 units per core, perhaps doubling from the two per core at present. This could be more beneficial than a hypothetical AVX-1024, since such transitions take up a lot of software resources. There is also no evidence at present of such new architecture extensions.
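Because these scaling vectors are independent, their effects multiply. The sketch below combines three of them; every factor is an illustrative assumption based on the speculation above (2x cores, 2x from halving the operand width to INT4, 2x from doubling the AVX-512 units), not an Intel disclosure.

```python
from math import prod

# All factors are illustrative assumptions, not Intel disclosures.
scaling_factors = {
    "core count (2x over Cascade Lake's 28 cores)": 2.0,
    "INT4 instead of INT8 (twice the elements per vector)": 2.0,
    "AVX-512 units per core (two to four)": 2.0,
}

combined = prod(scaling_factors.values())
target = 10.0  # "another order of magnitude"

print(f"combined speedup: {combined:.0f}x")
print(f"remaining gap to {target:.0f}x: {target / combined:.2f}x")
```

Under these assumptions the three factors give 8x, leaving a 1.25x gap that clock speed, memory bandwidth or further core count increases would have to cover.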

On the topic of heterogeneous performance, Golden Cove will likely also introduce BF16 from Cooper Lake.

Supply Update

Intel issued a press release this week apologizing for recent “PC CPU shipment delays”. These are still the aftermath of the shortages that started over a year ago, when demand for PC and data center CPUs increased more than expected in early 2018 (and again more recently in 2019), and Intel has not yet had any breathing room to increase its inventory.

This steep demand increase, in particular from the cloud providers, caught Intel off-guard. It simultaneously had to delay the 10nm ramp, for which it had put capacity in place, to 2019, and it saw a fresh influx of 14nm wafer demand on top of the unexpected demand increase: its chipsets were transitioning from 22nm and its 4G modems from TSMC 28nm (while also gaining share from Qualcomm). Another contributor to the 14nm pressure could be the steady increase in core counts in laptop and desktop over the lifespan of 14nm in response to Ryzen, which has likely increased the average die size over the years.

In response, Intel increased its 14nm investments (adding 25% wafer capacity in 2019 and another 25% in 2020) and unveiled plans to expand its manufacturing network with expansions to its fabs in Oregon, Arizona, Israel and Ireland. The company also announced a new $10 billion fab in Israel.
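The two 25% additions can be read in two ways, and the wording as reported here does not say which applies. A quick sketch of both readings, with capacity normalized to 1.0 at the end of 2018 (a normalization chosen purely for illustration):

```python
base = 1.0  # 14nm wafer capacity at the end of 2018, normalized (assumption)

# Reading 1: each 25% is relative to the previous year's capacity.
compounded = base * 1.25 * 1.25

# Reading 2: both 25% steps are relative to the 2018 baseline.
additive = base * (1 + 0.25 + 0.25)

print(f"compounded: {compounded:.4f}x, additive: {additive:.2f}x")
```

Either way, 14nm capacity would end 2020 at roughly one and a half times its 2018 level.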

In this week’s update, Intel blamed “production variability”, but did not further elaborate.

Putting the shortages in context, however, Intel maintains that they were caused by much higher revenue growth (demand) than expected, as 14nm yield is very mature: the company grew by 28% or $15.4 billion in revenue in three years from 2015 to 2018. Intel has stated that it has primarily conceded some market share at the low end of the market as it has prioritized Core and Xeon production, and is on track to deliver its fourth record revenue year in a row in 2019 by a slim margin.
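The two growth figures can be cross-checked against each other. The sketch below derives the implied 2015 and 2018 revenue and the average annual growth rate from the 28% and $15.4 billion quoted above; the results are approximate because the percentage is rounded.

```python
growth_fraction = 0.28     # 28% growth from 2015 to 2018 (from the article)
growth_dollars_bn = 15.4   # $15.4 billion (from the article)
years = 3

# Implied figures; approximate, because the 28% is rounded.
revenue_2015_bn = growth_dollars_bn / growth_fraction
revenue_2018_bn = revenue_2015_bn + growth_dollars_bn
annual_rate = (1 + growth_fraction) ** (1 / years) - 1

print(f"2015: ~${revenue_2015_bn:.1f}B, 2018: ~${revenue_2018_bn:.1f}B")
print(f"average annual growth: ~{annual_rate:.1%}")
```

This works out to roughly $55 billion growing to about $70 billion, or between 8% and 9% per year.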

Gadi Singer Interview: Discussing NNP-I

CRN published an interview on Friday about the NNP-I with Gadi Singer, who leads the inference division of Intel’s overarching AI Products Group. As he explained, the group was reorganized half a year ago to focus on the products coming to market, which resulted in the Inference Products Group that he leads. To give some sense of its scope, he said that the group has at least as many people working on software as on hardware.

Singer said the NNP-I was focused on energy efficiency and compute density (as evidenced by its ruler form factor) while also being able to scale and accept a wide range of usages. The latter is done by including heterogeneous capabilities that are needed for real-life workloads: tensor operations, vector processing, two Sunny Cove cores and a large amount of cache. “Moving data is more expensive than calculating it,” he quipped.

Aside from the M.2, ruler and dual-chip PCIe form factors, he said the company is working to bring it to other form factors as well.

On NNP-I adoption, he says the company is primarily working with the cloud service providers, but the company also aims to make it available “for larger scale” through its channel partners.

DSA: Data Streaming Accelerator

Intel announced the Data Streaming Accelerator (DSA) on Wednesday via its open source blog, and said it will be integrated in future Intel processors. The company describes it as a “high-performance data copy and transformation accelerator targeted for optimizing streaming data movement and transformation operations common with applications for high-performance storage, networking, persistent memory, and various data processing applications”. It will replace the current QuickData Technology.

In more digestible terms, Intel says that it will improve overall system performance for data operations and free up CPU cycles for higher-level functions. In particular, it applies to high-performance storage, networking, persistent memory, and various data processing applications.

The Intel DSA will consist of new CPU and platform features and Intel will introduce support in Linux in several phases. Intel has published the full specification.

Various: Neuromorphic Computing, Mobileye win, 5G, Apple-Intel Antitrust

Intel announced the first corporate members to join its neuromorphic research community: Airbus, Hitachi, GE and Accenture. It is Intel’s next step toward commercializing neuromorphic computing.

In a bid to increase diversity in the legal profession, Intel’s legal department said that, starting in 2021, it will not work with law firms that have below-average representation of minorities and women.

Intel announced that Michigan will add Mobileye’s latest EyeQ4-powered aftermarket systems to its state and city fleets as part of a trial, with the longer-term goal of preparing the state for AVs and robotaxis.

Intel and Apple filed an antitrust case against a firm owned by SoftBank that reportedly sought billions of dollars from Apple over patents.

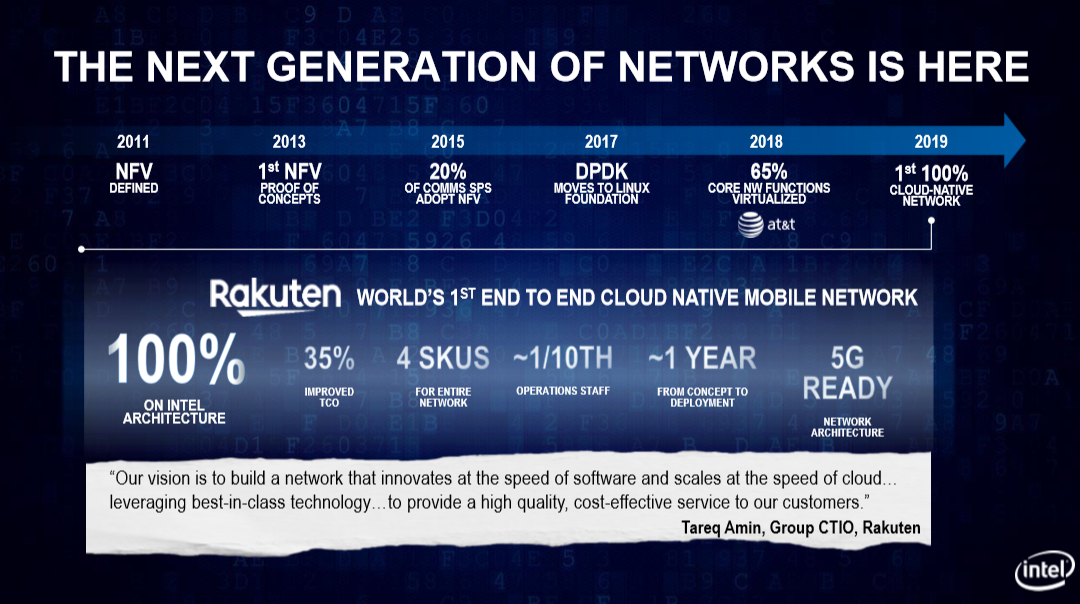

Intel this week held a presentation about its 5G involvement, where it is the leader on the infrastructure side as networks are being virtualized on standard Xeons instead of ASICs. Intel’s networking revenue (an over $20 billion market) has grown at a steep pace from 8% market share and $1 billion in 2014 to 22% market share and $4 billion in 2018 (two thirds of the growth coming from increased volume and one third from increased average selling prices). Intel has also said that it is on track for $5 billion in networking revenue in 2019.
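The revenue and share figures above also imply a market size for each year and a split of the growth between volume and pricing. A rough sketch using only the numbers quoted in this section; because the shares are rounded, the implied market sizes are approximate and need not match the “over $20 billion” figure exactly.

```python
# Figures from the article (revenue in $ billions, share as a fraction).
rev_2014, share_2014 = 1.0, 0.08
rev_2018, share_2018 = 4.0, 0.22

# Implied size of the addressable market in each year.
market_2014 = rev_2014 / share_2014
market_2018 = rev_2018 / share_2018

# Split of the revenue growth: two thirds volume, one third selling price.
growth = rev_2018 - rev_2014
from_volume = growth * 2 / 3
from_asp = growth * 1 / 3

# Compound annual growth rate over the four years.
cagr = (rev_2018 / rev_2014) ** (1 / 4) - 1

print(f"implied market: ${market_2014:.1f}B (2014), ${market_2018:.1f}B (2018)")
print(f"growth: ${from_volume:.1f}B from volume, ${from_asp:.1f}B from ASP")
print(f"revenue CAGR: {cagr:.1%}")
```

Quadrupling revenue in four years corresponds to a compound growth rate of a little over 41% per year.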

Intel also reiterated that its Snow Ridge SoC, which is based on the 10nm Atom Tremont core and specifically targeted at 5G base stations, will come in the first half of 2020. Intel did not provide its current market share, but expects to reach over 40% market share in base stations in 2022.