
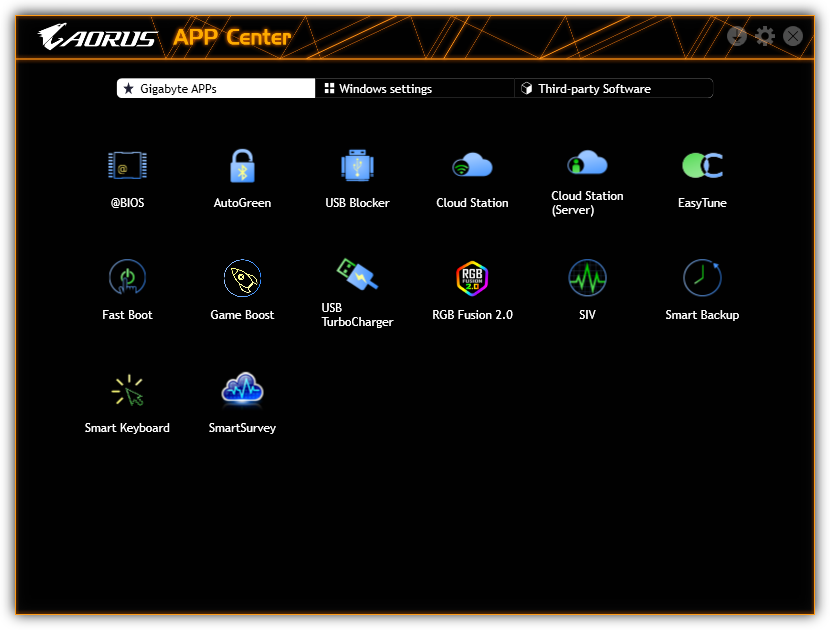

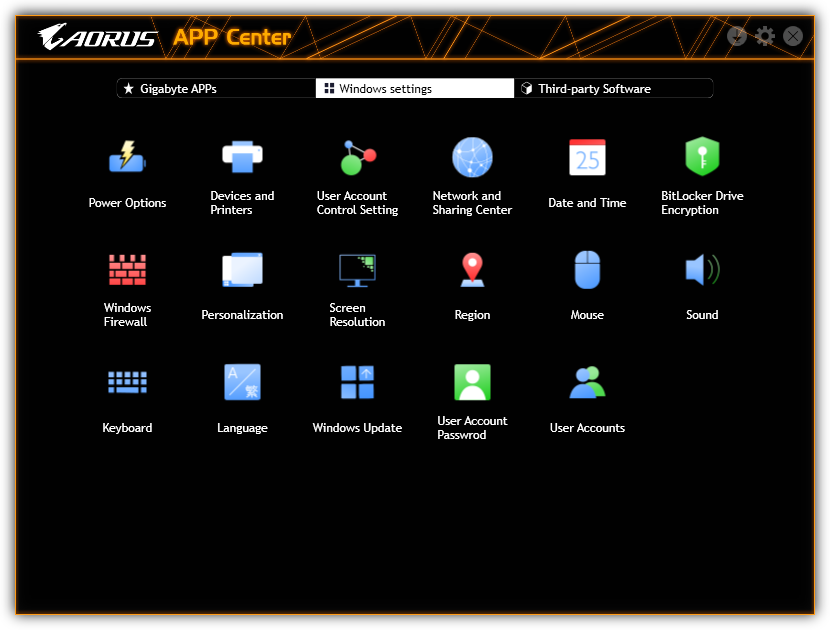

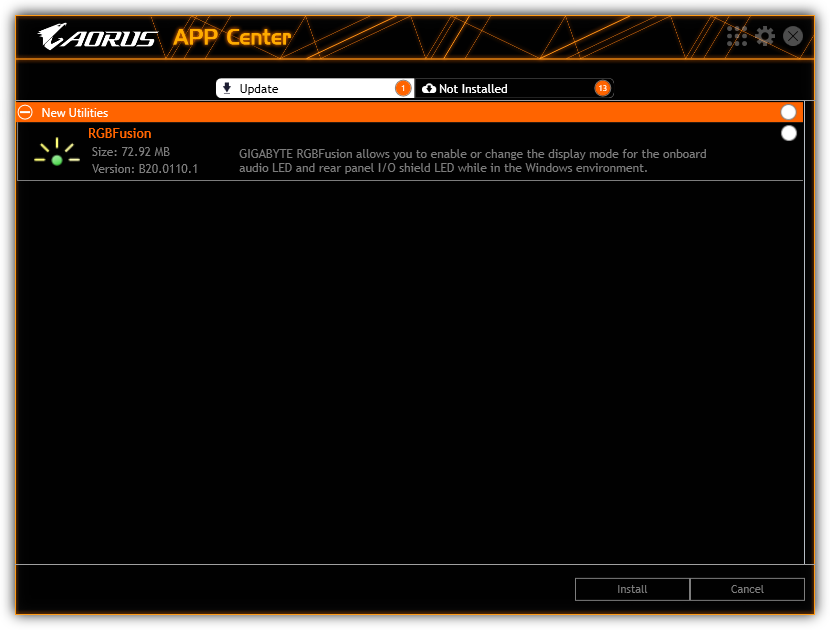

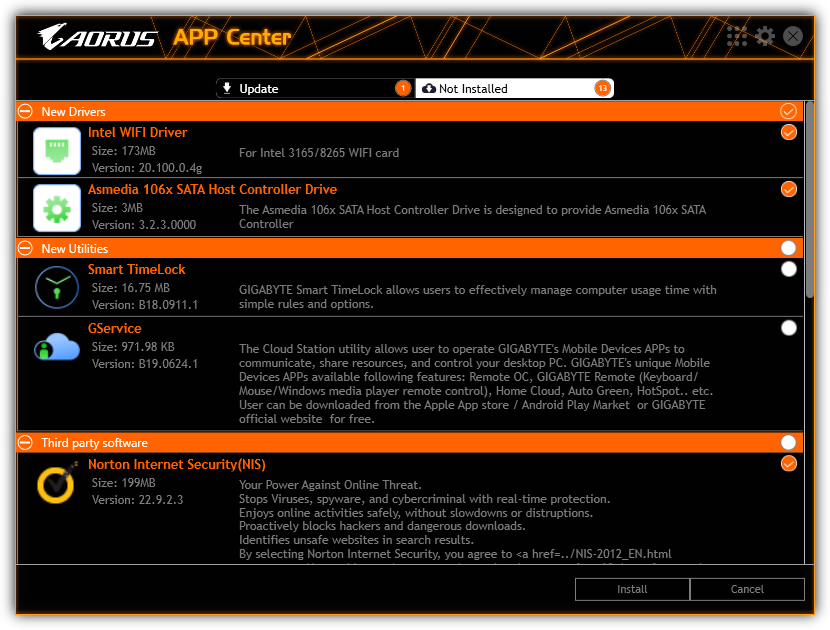

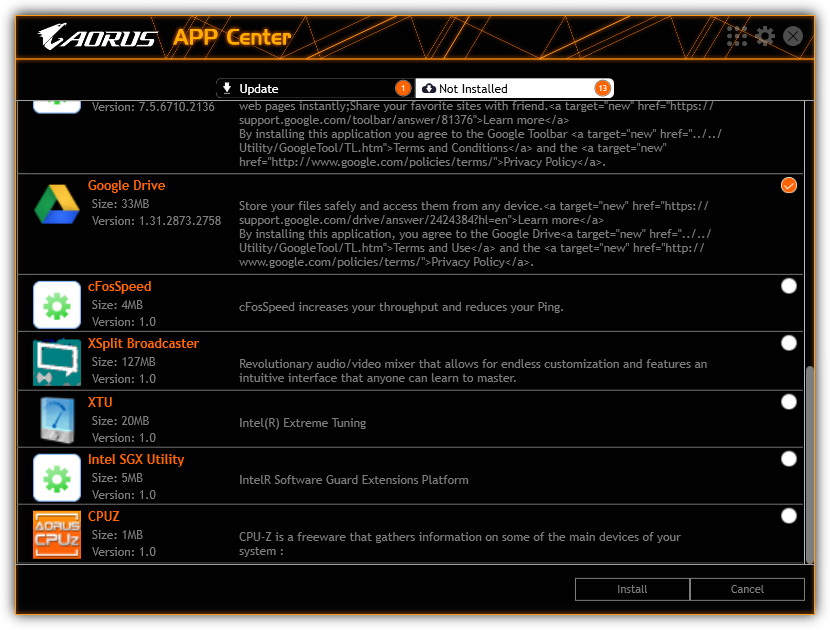

Gigabyte’s App Center is a central launch point for most of the firm’s included software, with added functionality including a few shortcuts to Windows settings and a software downloader with updater. When using the updater, be careful to ensure that any unwanted freeware is manually deselected.

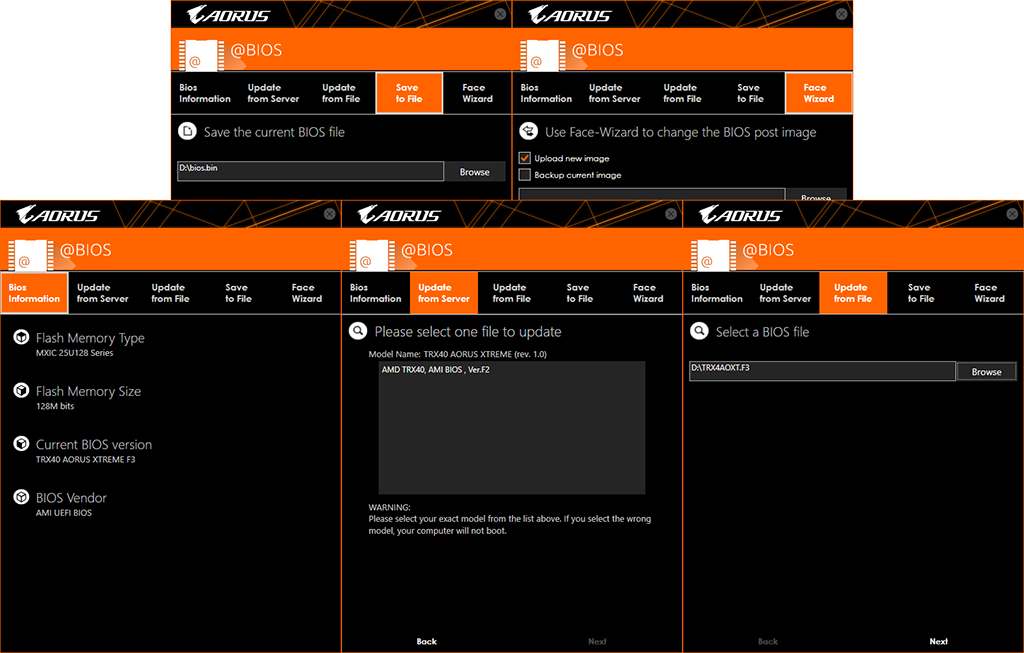

Gigabyte @BIOS allows users to update or save firmware from within Windows, and even includes a utility to change the board’s boot-up splash screen.

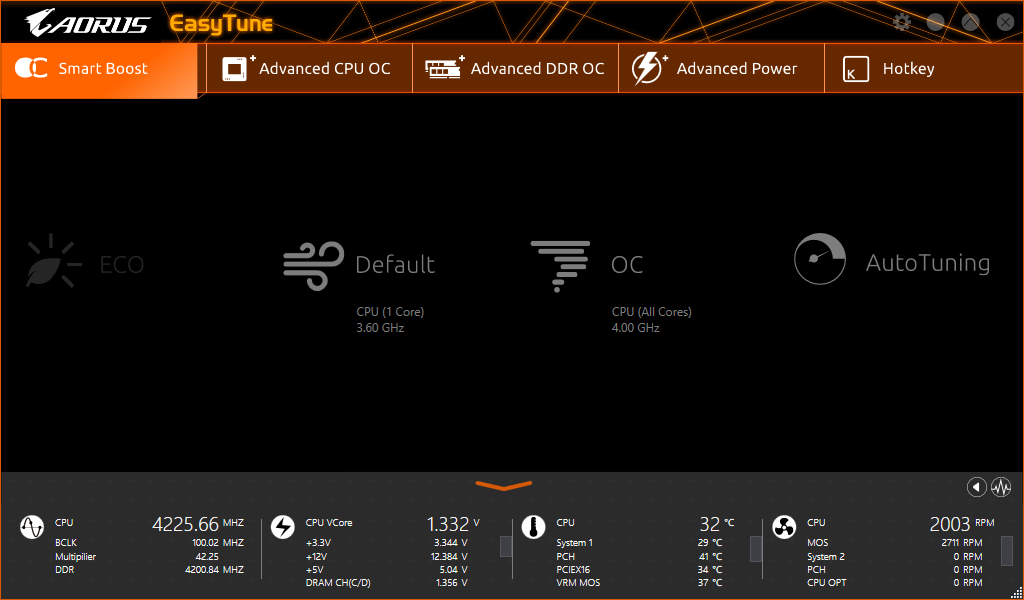

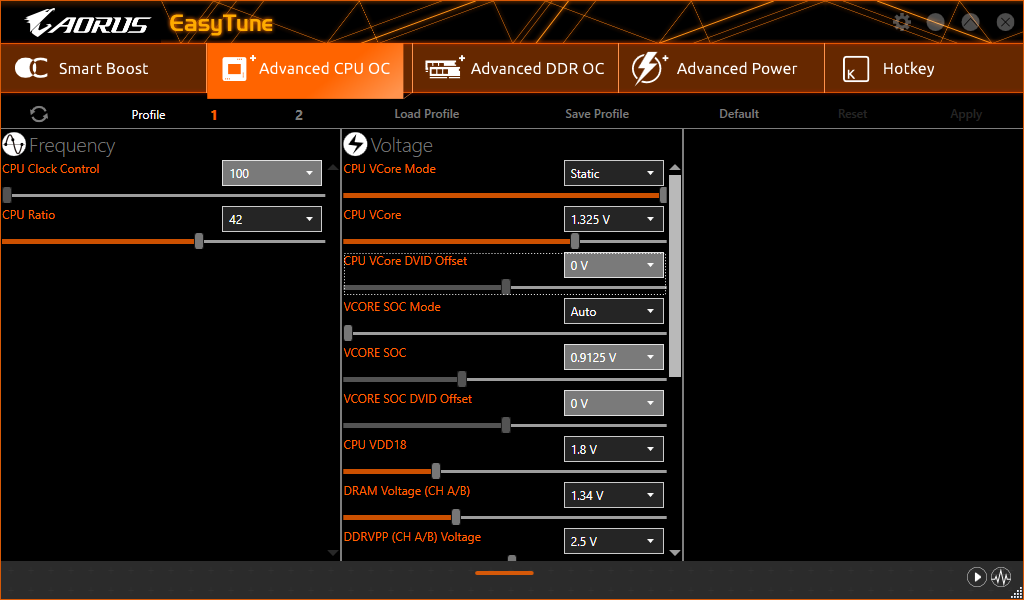

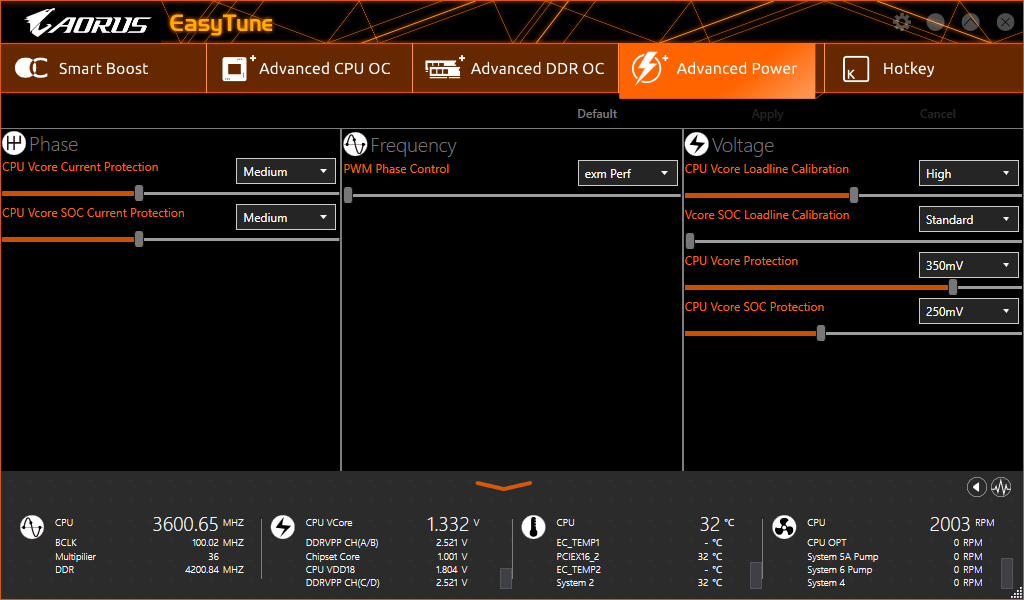

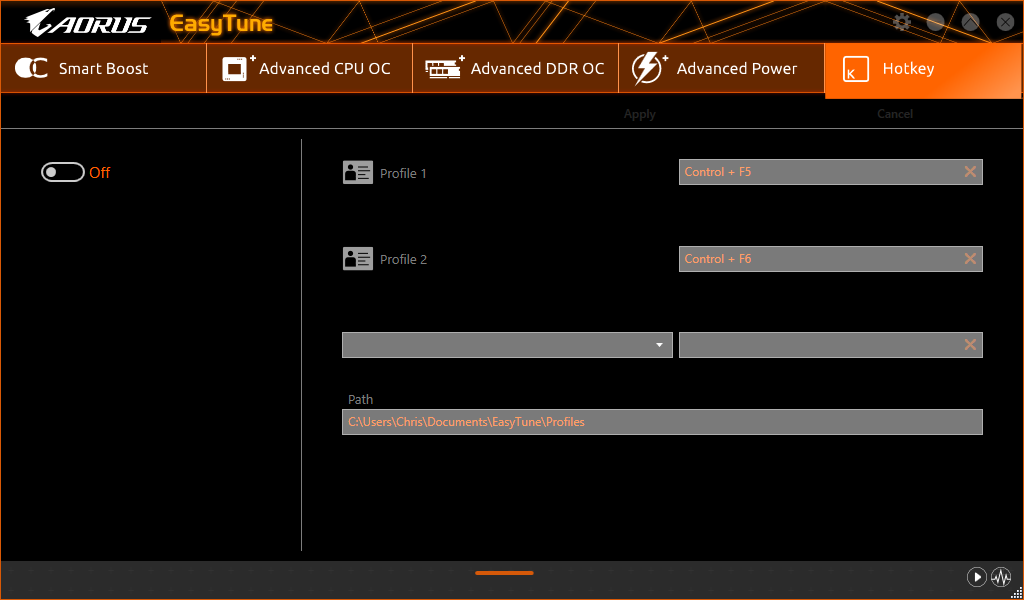

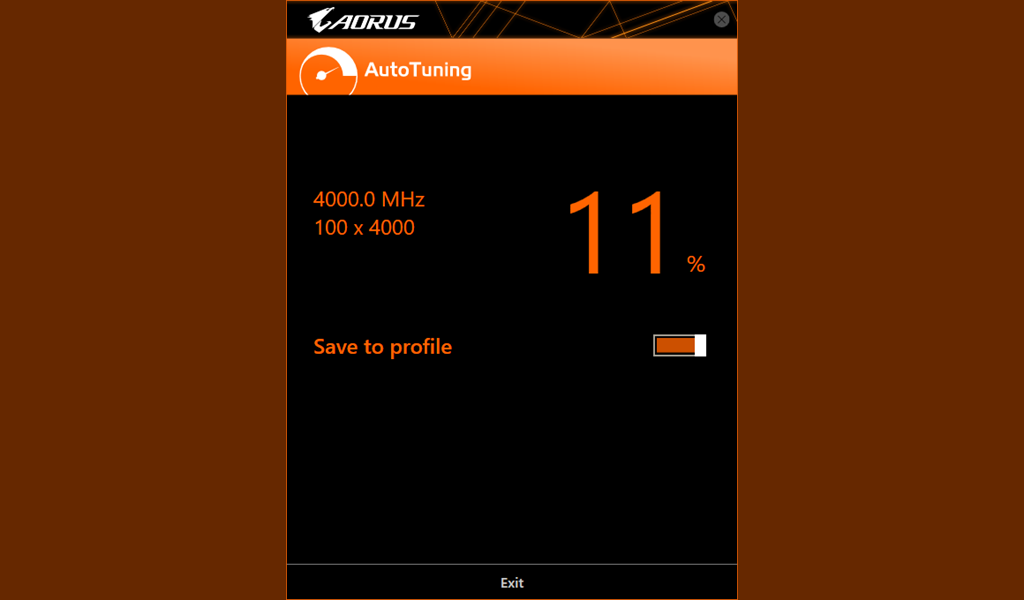

Gigabyte EasyTune worked well for changing clocks and voltage levels, but its automatic overclocking program only pushed our 3970X to 4.0GHz at 1.38V. We can do better manually.

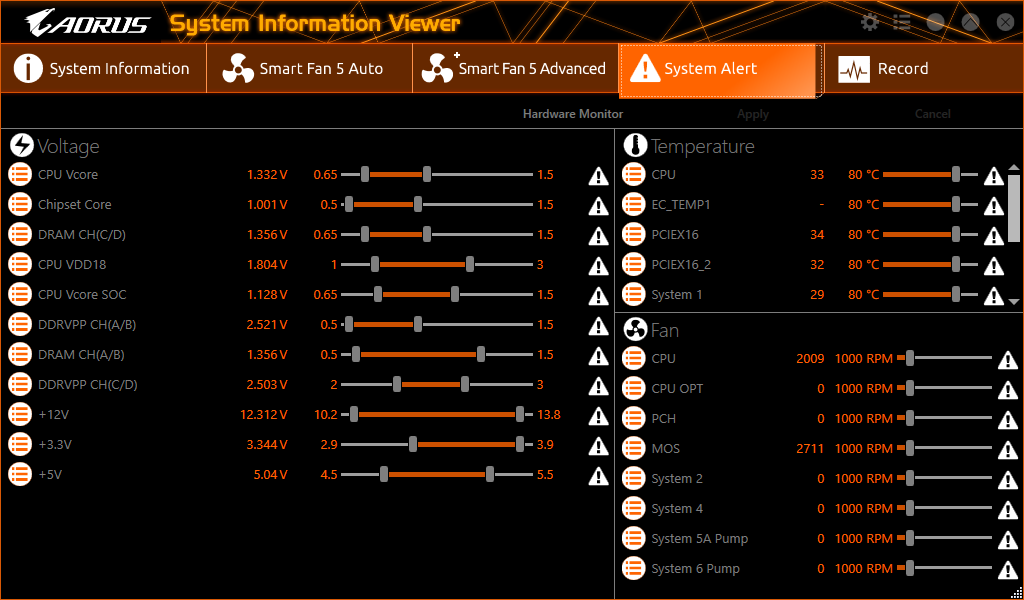

Clicking the little heart monitor icon in the lower-right corner of EasyTune brings up a Hardware Monitor menu on the right edge of the screen. We split that and put the halves side-by-side so it would fit into this image box.

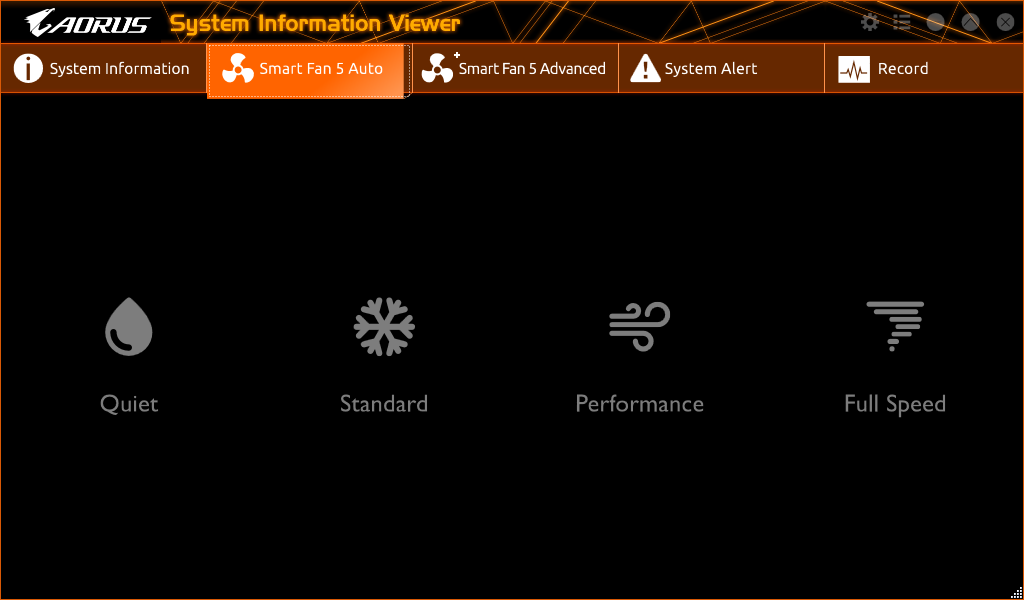

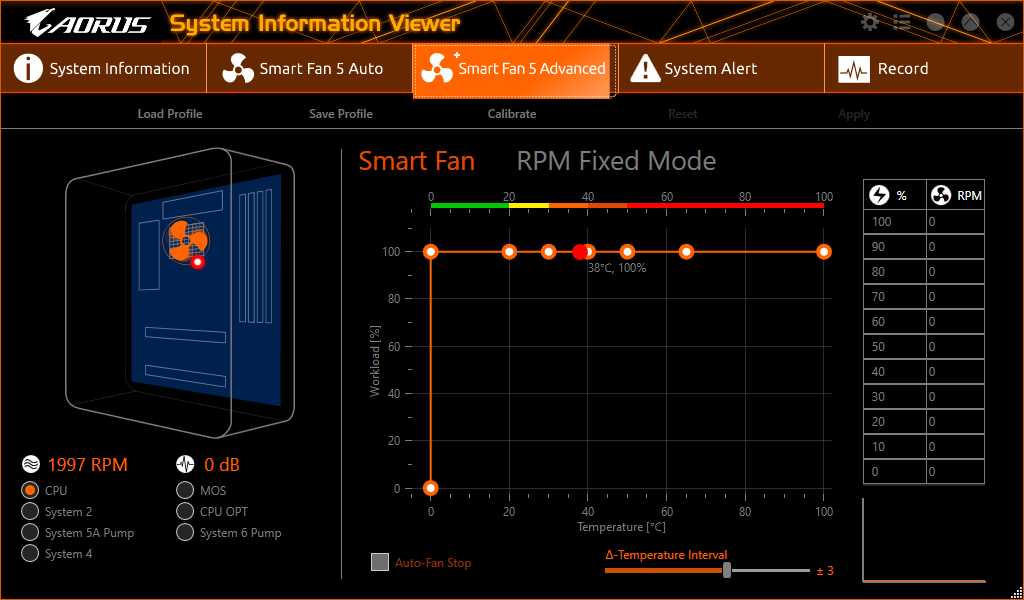

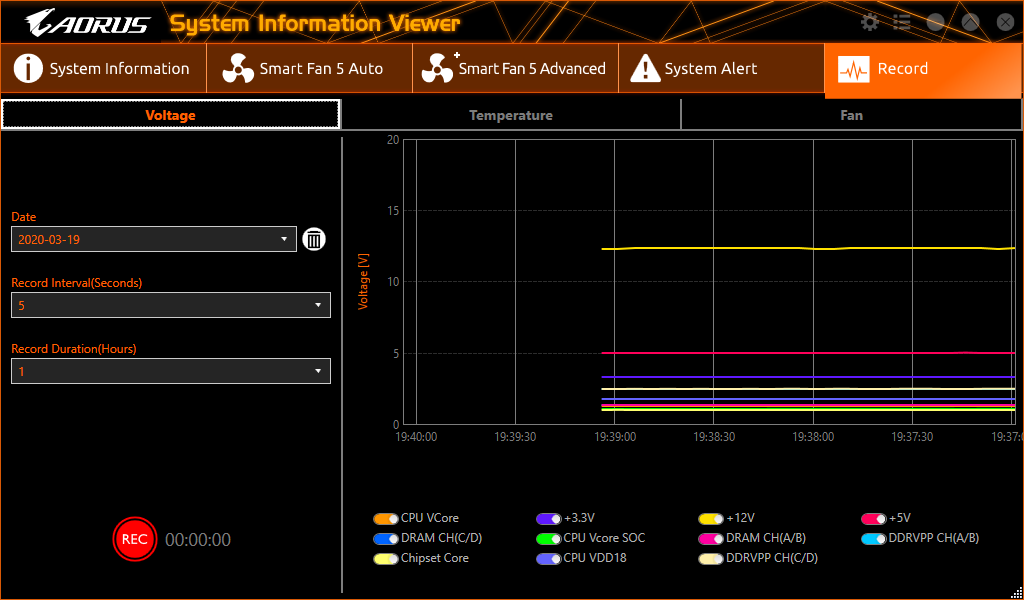

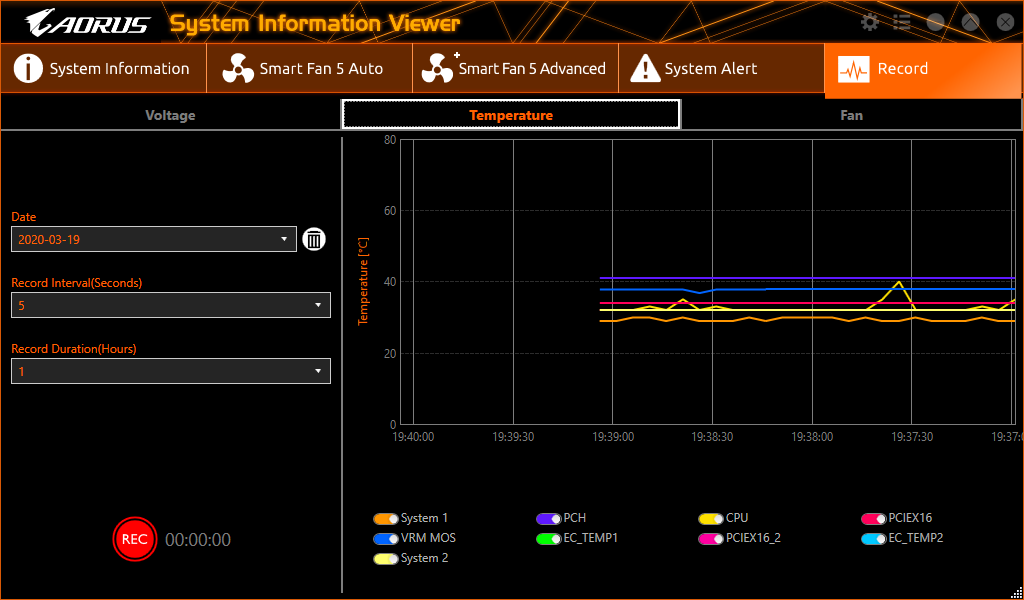

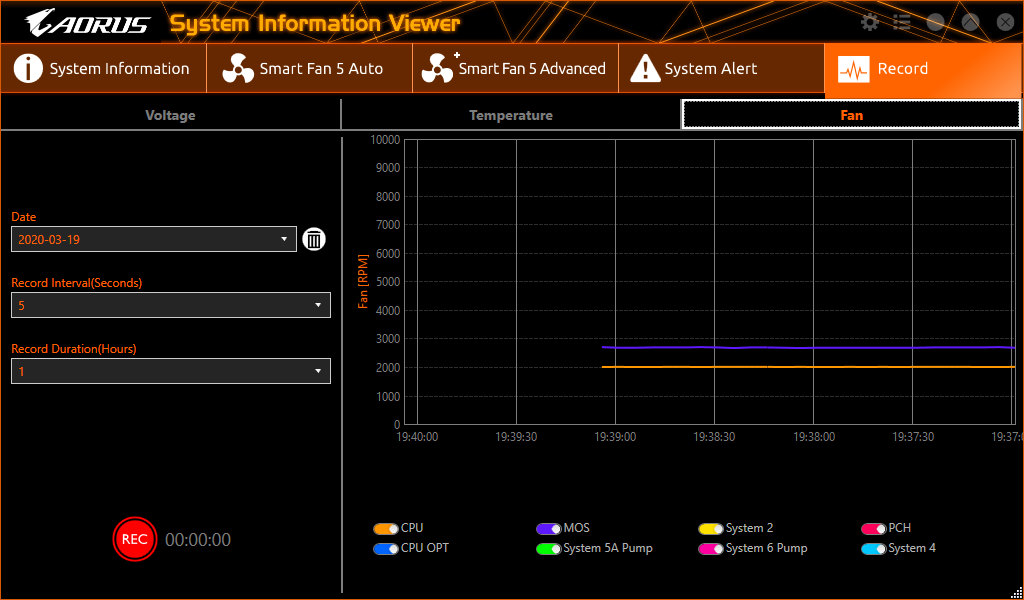

Hardware Monitor is part of Gigabyte’s System Information Viewer, so clicking its return icon brings us here rather than back to EasyTune. After running a fan optimization test upon first use, users can choose a fan profile, configure their own, set system alarm levels, and log many of the stats displayed in Hardware Monitor.

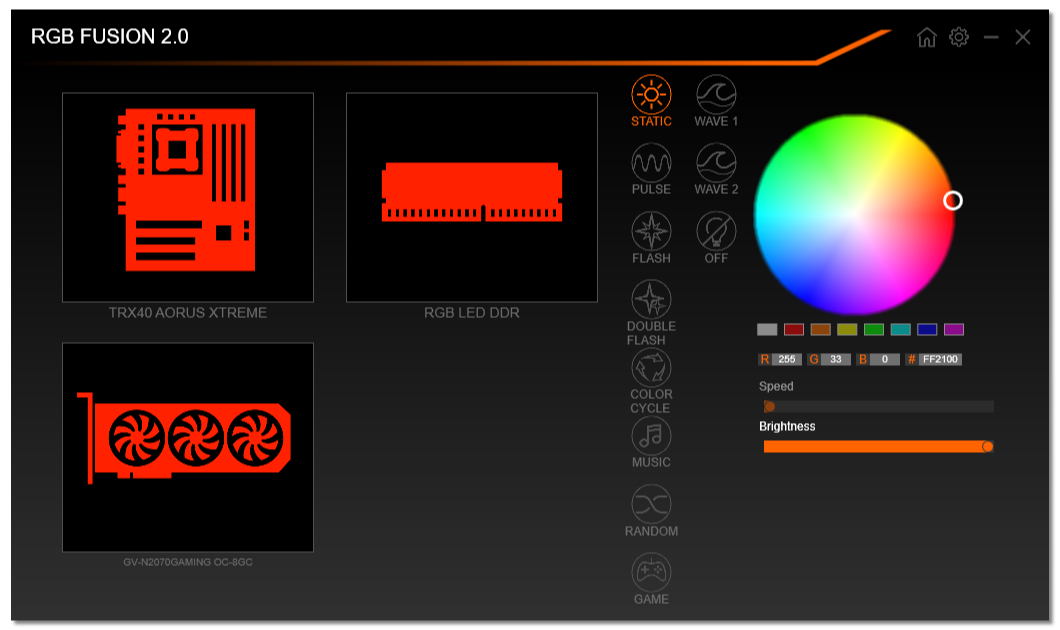

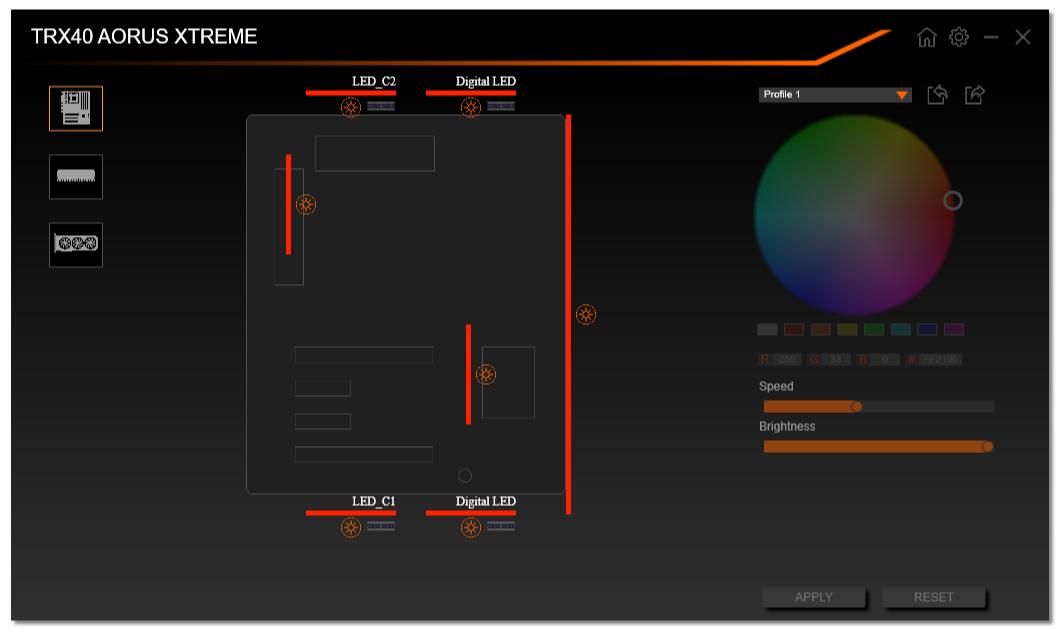

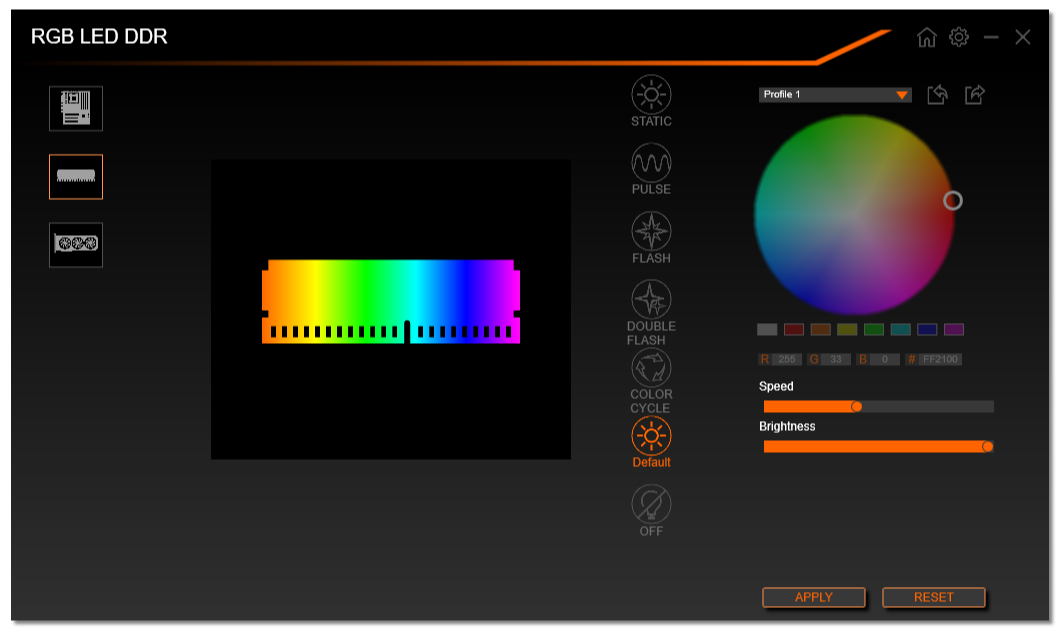

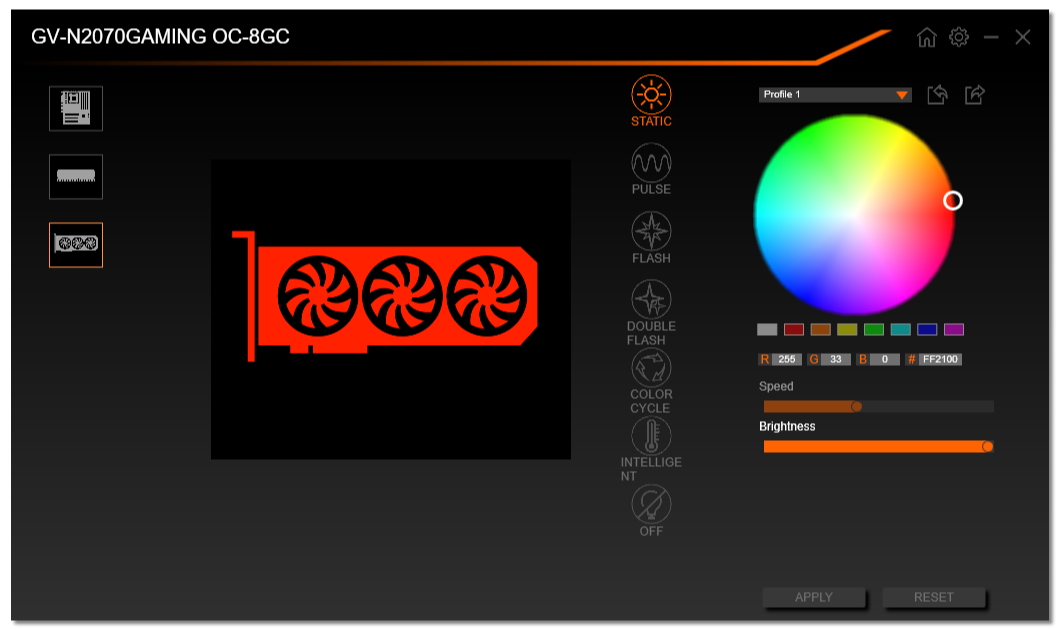

Gigabyte RGB Fusion lighting control software worked with the board, our memory, and our graphics card, for the most part. While many of the settings operated synchronously across all components, the program could not address wave (rainbow wave) mode on our DRAM unless we set these items asynchronously. It then reverted to the former synchronous setting (or turned the memory LEDs off) when we switched from the memory menu to another menu with memory set to “default” (rainbow wave). That still leaves a bunch of non-wave lighting patterns to choose from.

Firmware

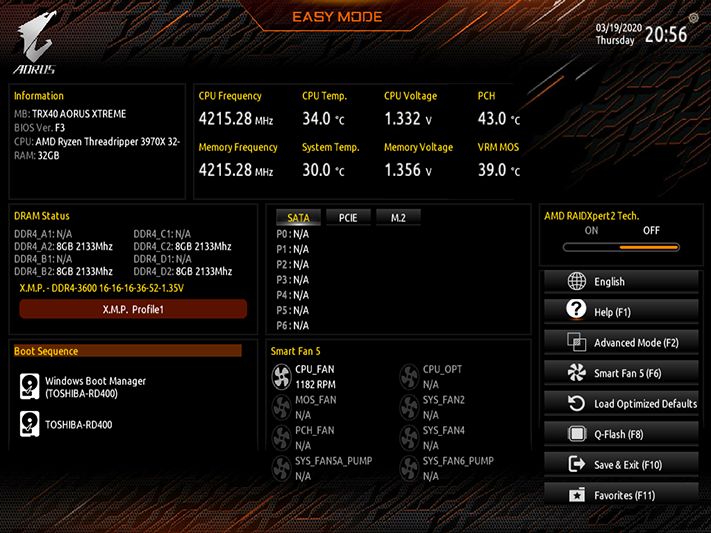

TRX40 Aorus Xtreme firmware defaults to its Easy Mode interface, but remembers the chosen UI from which the user last saved so that if you leave from Advanced Mode, you’ll return to Advanced Mode.


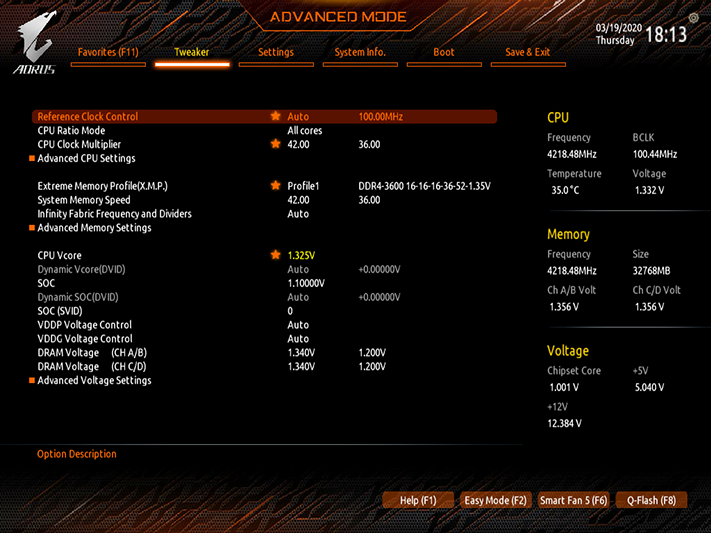

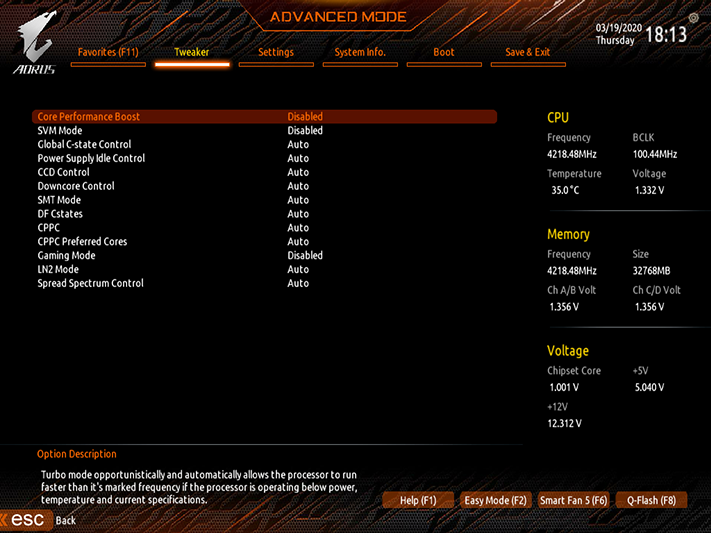

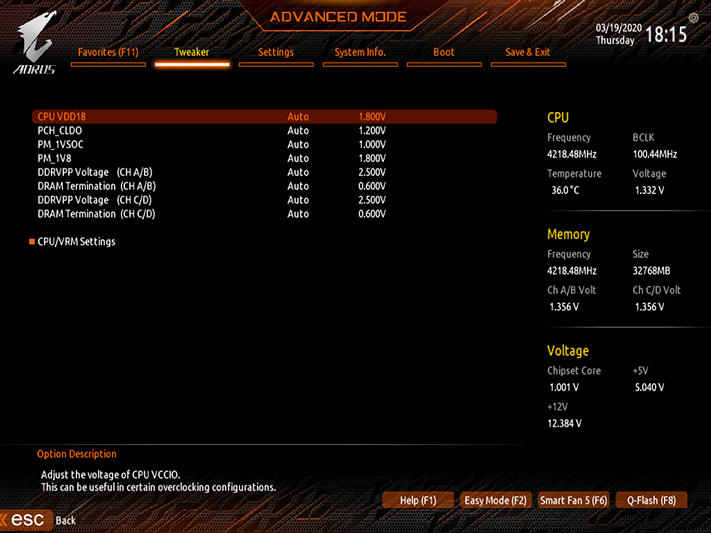

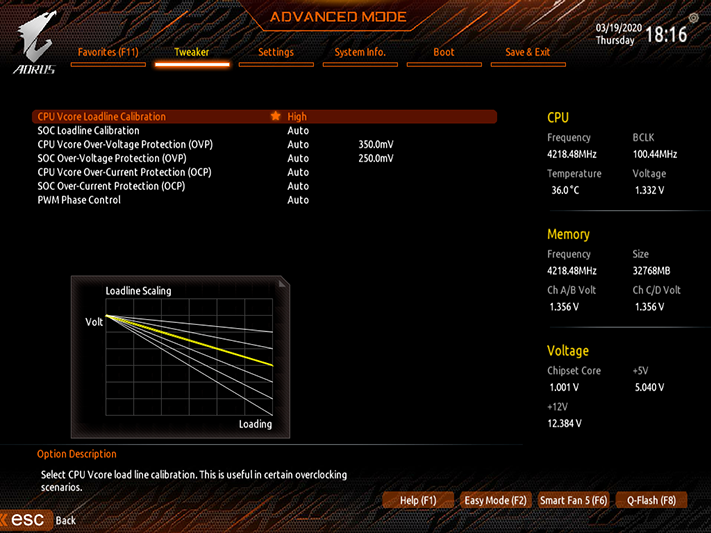

The Tweaker menu in Advanced Mode let us set a stable 4.20 GHz CPU clock at 1.35V under load, but the way we got there was a little convoluted: After first setting “High” VCore Loadline Calibration within the CPU/VRM Settings of Advanced Voltage Settings, we gradually dropped the CPU VCore setting from 1.35V to 1.325V until it no longer overshot our desired voltage.

The reason we didn’t try a lower VCore Loadline Calibration setting is that every time we adjusted the Loadline or CPU multiplier, the board would reset CPU voltage to stock. And, it wouldn’t show that change in settings, so we were left guessing, reconfiguring it, and rechecking it at the next boot.

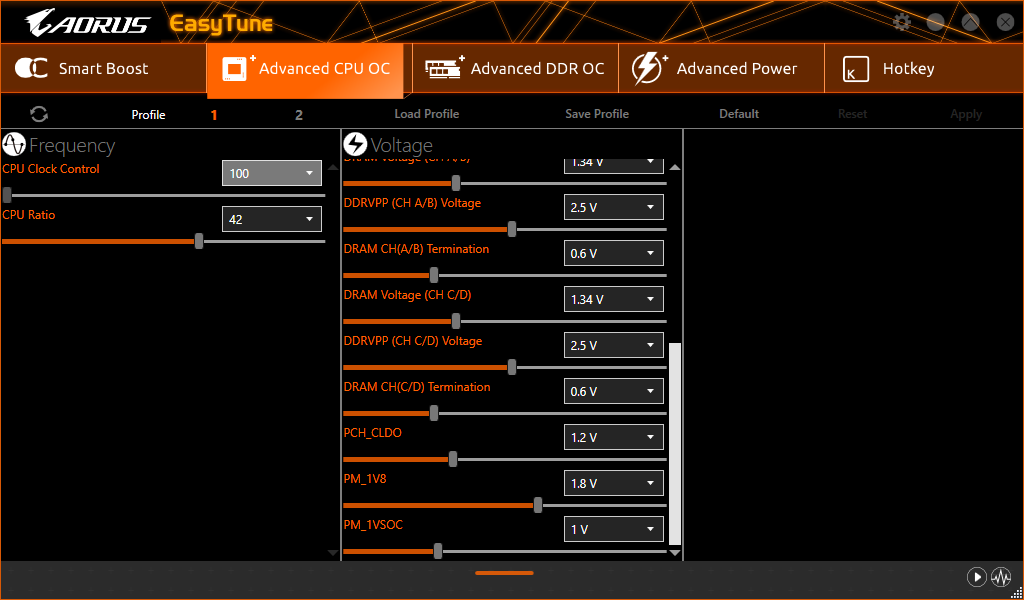

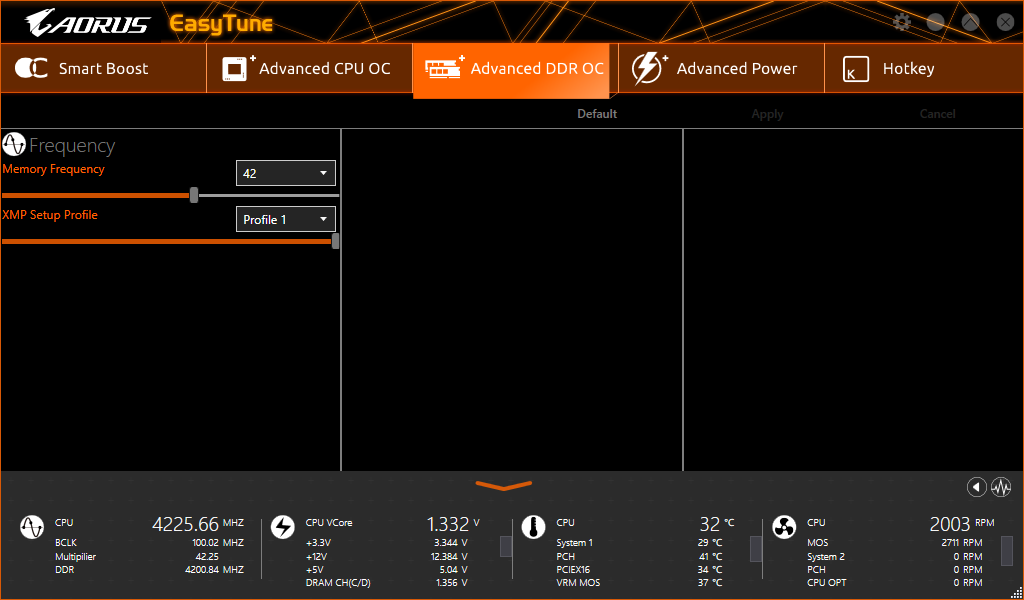

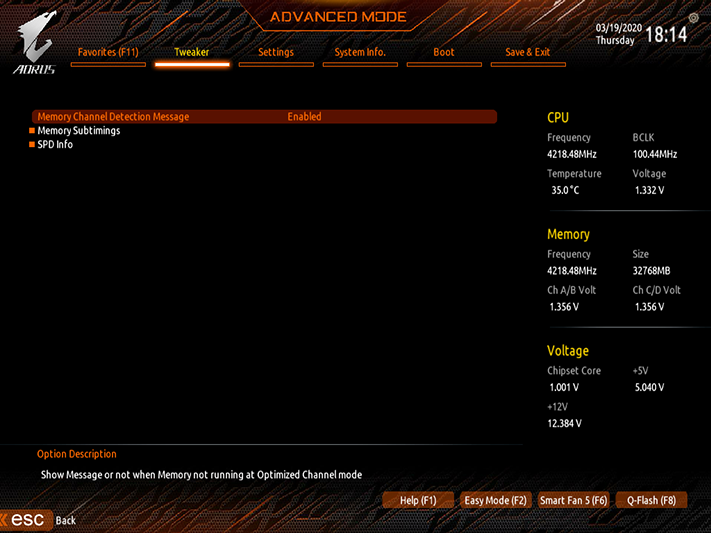

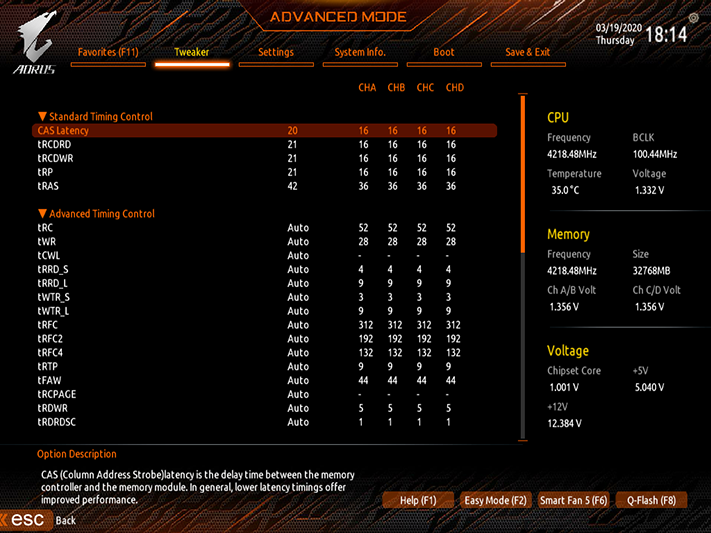

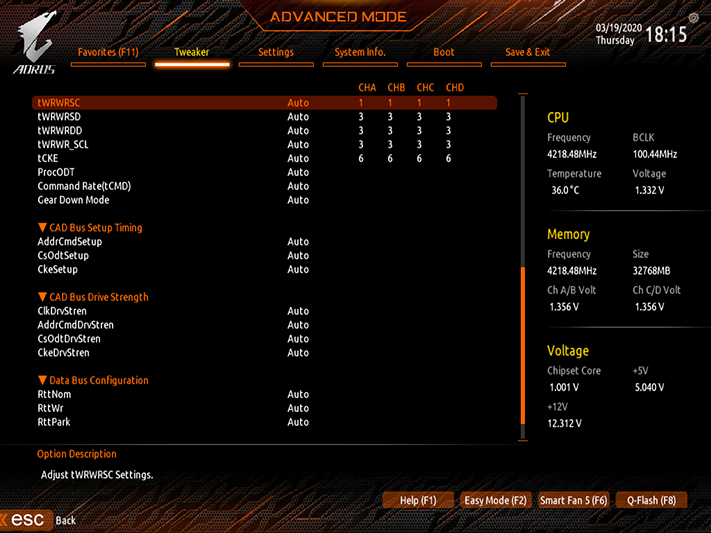

The TRX40 Aorus Xtreme has a complete set of primary and secondary memory timings to play with, along with advanced controls and even a menu that displays SPD and XMP configurations. We reached DDR4-4200 at 1.352-1.354V on our voltmeter, though getting there required us to set 1.34V within the Tweaker menu and it was displayed as 1.356V by firmware.
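The gap between those three DRAM voltage readings is easier to see in millivolts. The following is a minimal Python sketch using only the figures quoted above. The variable names are illustrative, and taking the midpoint of the 1.352-1.354V voltmeter range as the "actual" value is an assumption made for the sake of the arithmetic.

```python
# Figures quoted in the review, in volts.
set_v = 1.340                    # value entered in the Tweaker menu
firmware_v = 1.356               # value displayed by firmware
meter_range_v = (1.352, 1.354)   # range seen on the voltmeter

# Assumption: treat the midpoint of the voltmeter range as the actual voltage.
meter_v = sum(meter_range_v) / 2


def delta_mv(a, b):
    """Return the difference a - b in millivolts, rounded to one decimal place."""
    return round((a - b) * 1000, 1)


overshoot_mv = delta_mv(meter_v, set_v)             # measured above the setting
firmware_error_mv = delta_mv(firmware_v, meter_v)   # firmware above measured

print(f"Measured vs. set:      +{overshoot_mv} mV")
print(f"Firmware vs. measured: +{firmware_error_mv} mV")
```

Under that midpoint assumption, the board delivers roughly 13 mV more than the setting requests, and firmware reads about 3 mV higher than the voltmeter.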

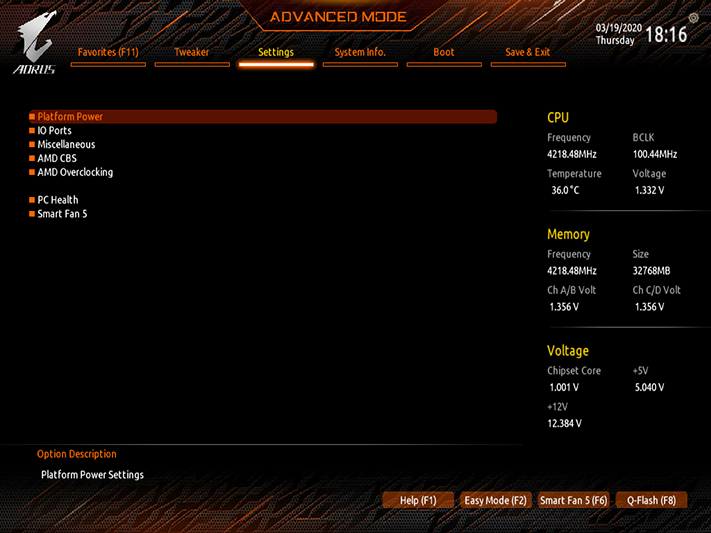

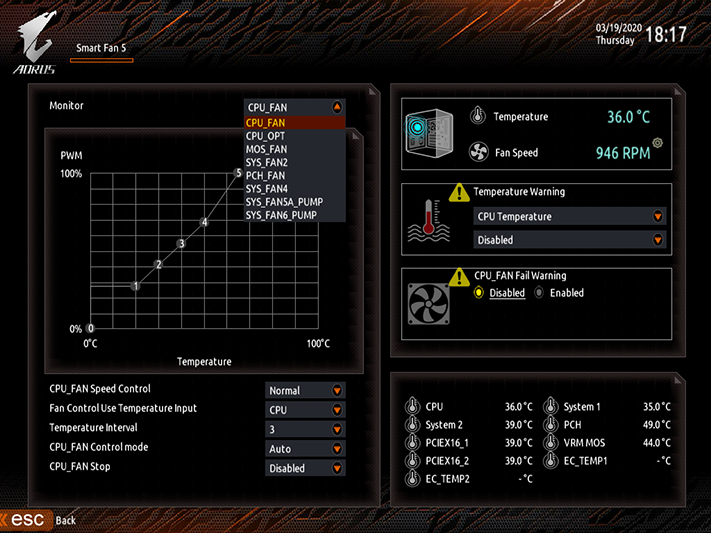

The generically named Settings menu includes a very limited PC Health page plus Smart Fan 5 settings. That said, the Smart Fan 5 popup can be accessed from any menu simply by pressing the F6 key. Six of the board’s fan headers can be controlled independently here, using the tuner’s choice of PWM or voltage-based RPM control.

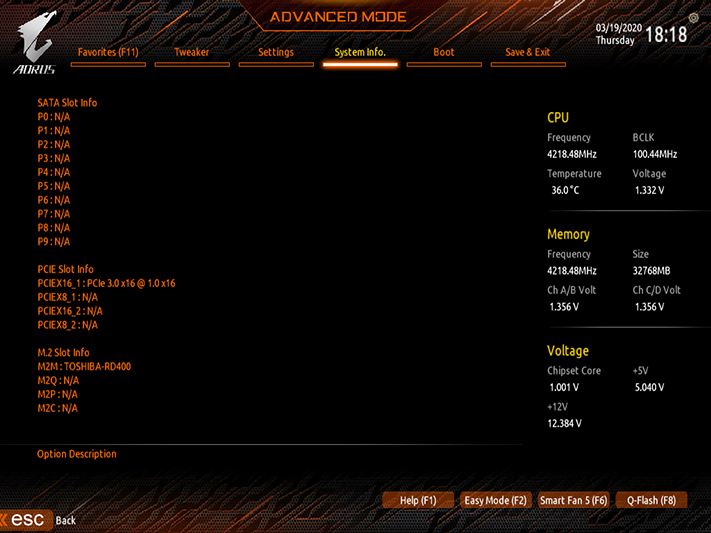

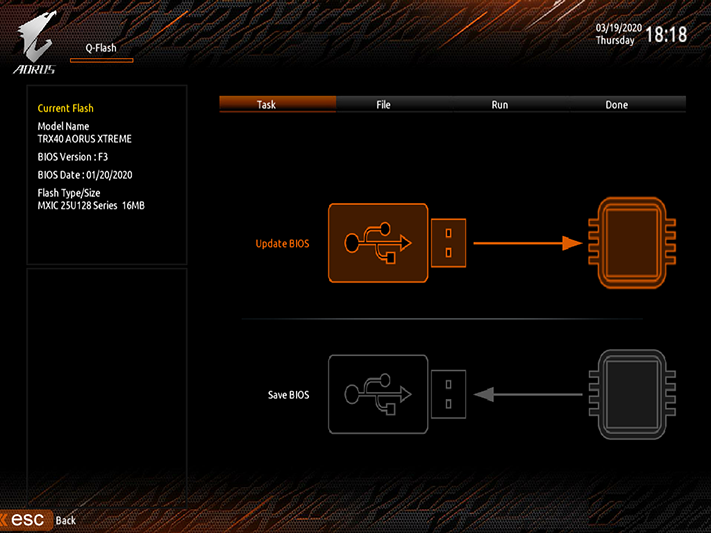

The System Info tab includes a Plug in Devices menu that shows the location of each detected device, and a Q-Flash menu for updating firmware. Checking the status of devices here can help determine whether a device that dropped out of Windows did so due to a Windows fault or a hardware fault.

Overclocking

| Frequency and Voltage settings | Gigabyte TRX40 Aorus Xtreme | Asus ROG Zenith II Extreme | MSI Creator TRX40 |
|---|---|---|---|
| BIOS | F3 (01/20/2020) | 0602 (11/18/2019) | 1.10 (11/18/2019) |
| Reference Clock | 80-200 MHz (10 kHz) | 40-300 MHz (0.2 MHz) | 80-200 MHz (50 kHz) |
| CPU Multiplier | 8-64x (0.25x) | 22-64x (0.25x) | 8-64x (0.25x) |
| DRAM Data Rates | 1333-2666/5000/6000 (266/66/100 MHz) | 1333-2666/5000/6000 (267/66/100 MHz) | 1600-2666/5000/6000 (267/66/100 MHz) |
| CPU Voltage | 0.75-1.80V (6.25 mV) | 0.75-1.70V (6.25 mV) | 0.90-2.10V (12.5 mV) |
| CPU SOC | 0.75-1.80V (6.25 mV) | 0.70-1.50V (6.25 mV) | 0.90-1.55V (12.5 mV) |
| VDDP | 0.70-9.998V (10 mV) | 0.70-V (1 mV) | - |
| DRAM Voltage | 1.00-2.00V (10 mV) | 0.50-2.15V (10 mV) | 0.80-2.10V (10 mV) |
| DDR VTT | 0.375-0.833V (4 mV) | Offset -600 to +600 mV (10 mV) | Offset -600 to +600 mV (10 mV) |
| Chipset 1.05V | 0.80-1.50V (20 mV) | 0.70-1.40V (6.25 mV) | 0.85-1.50V (10 mV) |
| CAS Latency | 8-33 Cycles | 5-33 Cycles | 8-33 Cycles |
| tRCDRD/tRCDWR | 8-27 Cycles | 8-27 Cycles | 8-27 Cycles |
| tRP | 8-27 Cycles | 8-27 Cycles | 8-27 Cycles |
| tRAS | 21-58 Cycles | 8-58 Cycles | 21-58 Cycles |
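For readers who want to see how the reference clock and multiplier ranges in the table relate to a target CPU frequency, here is a rough Python sketch. It assumes the core clock is simply reference clock times multiplier, and it assumes a 100 MHz reference clock, which the review does not state. The function name `cpu_multiplier` and its parameters are hypothetical, with defaults taken from the Gigabyte column (8-64x in 0.25x steps).

```python
def cpu_multiplier(target_mhz, ref_clock_mhz=100.0, lo=8.0, hi=64.0, step=0.25):
    """Return the multiplier nearest to the target on the board's step grid.

    Returns None when the snapped multiplier falls outside the lo-hi range.
    Assumption: core clock = reference clock x multiplier.
    """
    raw = target_mhz / ref_clock_mhz
    snapped = round(raw / step) * step   # snap to the nearest 0.25x step
    if not lo <= snapped <= hi:
        return None
    return snapped


# The 4.20 GHz overclock from this review, at an assumed 100 MHz reference clock.
mult = cpu_multiplier(4200)
print(f"{mult}x at 100 MHz = {mult * 100.0:.0f} MHz")
```

A target beyond the top multiplier, such as 7000 MHz at 100 MHz, returns `None` rather than a clamped value, so an out-of-range request is not silently rounded into range.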

Gigabyte’s TRX40 Aorus Xtreme takes on the top contenders from previous reviews, which include the $850 ROG Zenith II Extreme from Asus, MSI’s $700 Creator TRX40, and ASRock’s TRX40 Taichi. Gigabyte’s GeForce RTX 2070 Gaming OC 8G, Toshiba’s OCZ RD400 and G.Skill’s Trident-Z DDR4-3600 feed AMD’s Ryzen Threadripper 3970X. Alphacool’s Eisbecher D5 pump/reservoir and NexXxoS UT60 X-flow radiator cool the CPU through Swiftech’s SKF TR4 Heirloom.

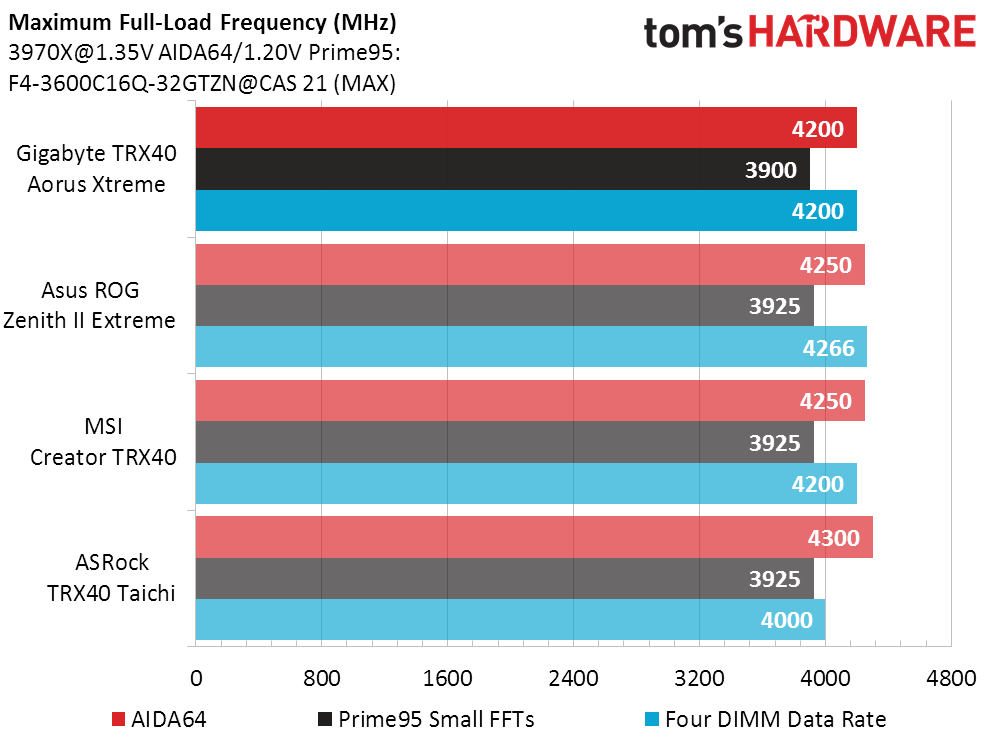

The TRX40 Aorus Xtreme achieved a solid overclock for both our CPU and DRAM, but still came up a little shy compared to the ROG Zenith II Extreme. Memory data rate showed the largest difference, but only by a mere 66 MHz.
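To put that 66 MHz gap in perspective, here is a small Python sketch of theoretical peak memory bandwidth. It assumes the platform's four 64-bit memory channels (8 bytes per transfer per channel) and takes the Zenith's data rate as DDR4-4200 plus 66, which is inferred from the text rather than quoted. The function name is hypothetical.

```python
def peak_bandwidth_gbs(data_rate_mts, channels=4, bytes_per_transfer=8):
    """Theoretical peak bandwidth in GB/s (decimal) for a given DDR data rate.

    Assumption: quad-channel memory with a 64-bit (8-byte) bus per channel.
    """
    return data_rate_mts * bytes_per_transfer * channels / 1000


aorus = peak_bandwidth_gbs(4200)        # DDR4-4200, as reached on this board
zenith = peak_bandwidth_gbs(4200 + 66)  # inferred: 66 higher than the Aorus

gain_pct = (zenith / aorus - 1) * 100
print(f"Aorus:  {aorus:.1f} GB/s")
print(f"Zenith: {zenith:.1f} GB/s")
print(f"Theoretical gain: {gain_pct:.2f}%")
```

These are upper bounds only. Measured bandwidth also depends on memory timings and other factors, so a gap of under 2% in theoretical peak can easily be outweighed in practice.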

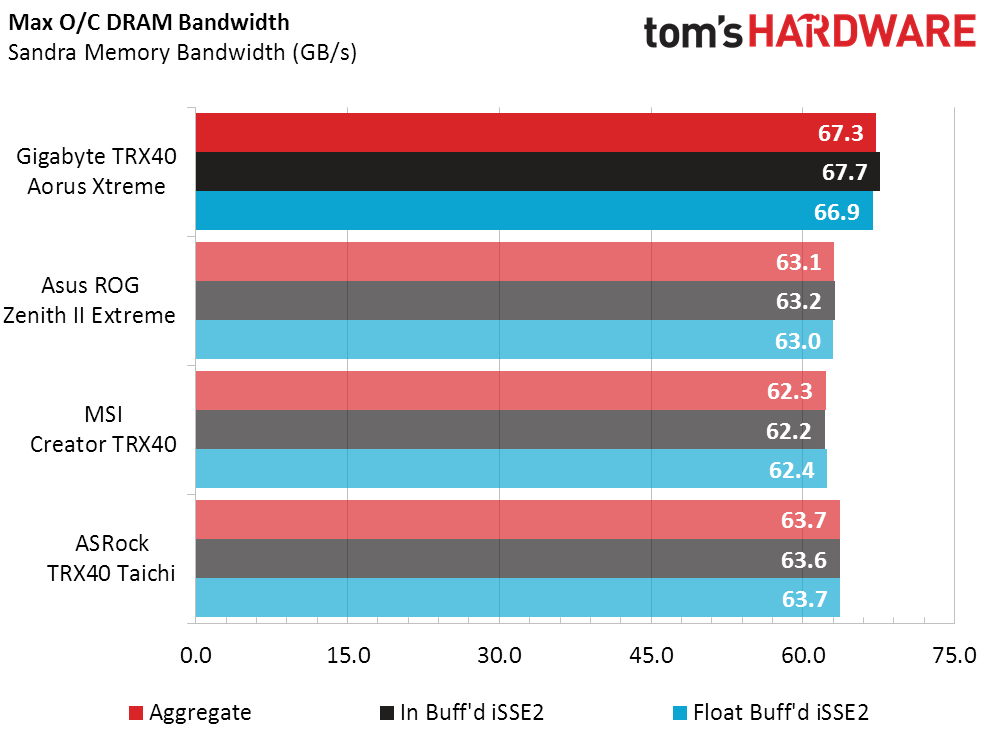

Gigabyte didn’t need that extra 66 MHz of data rate to beat the ROG Zenith II Extreme in memory bandwidth, and we might even be willing to recommend the board to overclockers had it not reset our CPU voltage every time we changed the CPU multiplier.


mrwitte

UPDATE: I eventually did get 4 GPUs to work on this motherboard, with a lot of research and help & suggestions from several different people. The simple lowdown:

1. Enable Above 4G Decoding in UEFI
2. Convert Windows OS drive from MBR to GPT (using mbr2gpt in Command Prompt)
3. Disable CSM Support (This makes the system unbootable unless you perform Step 2 above)

I'm not 100% certain that step 1 was necessary, but it does seem likely, judging from everything I've read about Above 4G Decoding. But I do know that everything is indeed working (and at x16/x8/x16/x8 as the specs state) after performing all three of these steps.

Very glad to be able to keep this motherboard after all.

I got one of these for a 4xGPU (RTX 2080 ti's) Octane/Redshift render workstation build, and that's apparently not what this motherboard is for. When I connect the 4th GPU it won't post and kicks out a d4 code. From the manual: "PCI resource allocation error. Out of Resources."

I've ruled out other variables by rotating GPUs/cables/slot population through all sorts of configurations, and it consistently spins like a top with 3GPUs wherever they're placed, and croaks with an error as soon as 4 GPUs are connected. The conclusion I've reached is that despite what the physical spacing of the PCIE slots suggests, this motherboard does not support four GPUs simultaneously.

Getting rid of this thing is really going to hurt because it's the most beautiful motherboard I have ever beheld! Unfortunately, it's of no use to me if I can't add that 4th 2080 ti to the pool. Instead, I'll be going for a known quantity: the ASRock TRX40 Creator. To my taste, an incredibly goofy-looking motherboard compared to the Aorus Xtreme, but I've been in personal contact with several people who've successfully built quad-GPU workstations on the platform. This is what matters, so I'll learn to love it!

Crashman

That certainly sounds like an undocumented shared resource. Have you contacted Gigabyte to clarify?

mrwitte

No, haven't heard back yet. But I'm all set anyway!

3L6research

Reviewing your old reviews for the Gigabyte TRX40 Aorus Xtreme (1 APR 2020), I noticed in one of your photos that it looked like you had paired this MOBO with a Cooler Master HAF XB or XB EVO case.

Because the MOBO is supposed to be either XL-ATX or E-ATX (depending on how you name these things) and the case specs state it only takes ATX boards, how did you make this work?

Like to try it myself.

Crashman

I wrote several case guides about this very issue: Most "EATX" PC motherboards are not full EATX spec. They're XL-ATX depth and ATX from north to south edges (call that height if you build towers or width if you do server racks). In other words, rather than 13" deep they're only 10.6" deep.

The HAF XB has a roughly 10.5" tray with plenty of room ahead of it, so that folding the front edge down a bit easily allows boards up to roughly 13" to fit. But why lead with the added details?

Because I was also responsible for many of the site's case reviews, and many of THOSE cases were built to the defunct XL-ATX standard. A 10.6" board fits an XL-ATX case, while a 13" board does not. And since XL-ATX was defunct, those cases were sold as ATX.

Because of this, I routinely went after both contributing motherboard editors to provide exact motherboard depth, as well as contributing case editors to manually measure the clearance before a motherboard contacted any part of the case. The last two Editors In Chief thought I was being obtuse about this stuff, but it's obvious that you don't need 13" of clearance to fit a 10.6" board.

After that, things got ridiculous with case labels: Companies started calling any case that had 13" of clearance EATX, even though many of those didn't have the front (fourth) column of standoffs needed to support a 13"-deep motherboard's forward edge. Some manufacturers started making notes like "EATX" with an asterisk pointing to a number such as "Up to 11" deep". But that's not the full EATX spec, and I'm always concerned with someone getting parts that don't fit based on faulty specifications. It's important for buyers to be as fully informed as possible.

I can give you some historical perspective about how the HAF XB ended up being the standard platform if you'd like.